Simon-Pierre Deschênes

Saturation-Aware Angular Velocity Estimation: Extending the Robustness of SLAM to Aggressive Motions

Oct 11, 2023

Abstract: We propose a novel angular velocity estimation method to increase the robustness of Simultaneous Localization And Mapping (SLAM) algorithms against gyroscope saturations induced by aggressive motions. Field robotics expose robots to various hazards, including steep terrains, landslides, and staircases, where substantial accelerations and angular velocities can occur if the robot loses stability and tumbles. These extreme motions can saturate sensor measurements, especially gyroscopes, which are the first sensors to become inoperative. While the structural integrity of the robot is at risk, the resilience of the SLAM framework is oftentimes given little consideration. Consequently, even if the robot is physically capable of continuing the mission, its operation will be compromised due to a corrupted representation of the world. Regarding this problem, we propose a way to estimate the angular velocity using accelerometers during extreme rotations caused by tumbling. We show that our method reduces the median localization error by 71.5 % in translation and 65.5 % in rotation and reduces the number of SLAM failures by 73.3 % on the collected data. We also propose the Tumbling-Induced Gyroscope Saturation (TIGS) dataset, which consists of outdoor experiments recording the motion of a lidar subject to angular velocities four times higher than other available datasets. The dataset is available online at https://github.com/norlab-ulaval/Norlab_wiki/wiki/TIGS-Dataset.
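To give a feel for how accelerometers can carry angular velocity information, here is a minimal sketch based on textbook rigid-body kinematics. It is not the method of the paper, whose details the abstract does not give. It assumes two ideal, noise-free accelerometers rigidly mounted on the same body, with readings expressed in the same body frame. The function names and numeric values are hypothetical. For two such sensors separated by a lever arm r, the difference of their readings is α × r + ω × (ω × r), and its component along r equals −|r|·|ω⊥|², where ω⊥ is the part of the angular velocity perpendicular to r. Gravity cancels in the difference.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def simulate_second_reading(f_a, omega, alpha, r_ab):
    """Ideal reading of sensor B given sensor A's reading (rigid body, same frame).

    f_b = f_a + alpha x r + omega x (omega x r)
    """
    tangential = cross(alpha, r_ab)
    centripetal = cross(omega, cross(omega, r_ab))
    return tuple(a + t + c for a, t, c in zip(f_a, tangential, centripetal))

def perpendicular_rate(f_a, f_b, r_ab):
    """Magnitude (rad/s) of the angular velocity component perpendicular to r_ab.

    Hypothetical helper: only the magnitude is recovered, not the sign or axis.
    """
    dist = math.sqrt(dot(r_ab, r_ab))
    r_hat = tuple(c / dist for c in r_ab)
    delta = tuple(b - a for a, b in zip(f_a, f_b))
    radial = dot(delta, r_hat)  # equals -dist * |omega_perp|^2 for a rigid body
    return math.sqrt(max(0.0, -radial) / dist)

# Hypothetical tumbling motion: 30 rad/s about the body z axis.
omega = (0.0, 0.0, 30.0)
alpha = (5.0, -3.0, 40.0)   # arbitrary angular acceleration, rad/s^2
r_ab = (0.2, 0.0, 0.0)      # sensor B sits 0.2 m from sensor A along x
f_a = (0.0, 0.0, 9.81)      # arbitrary reading of sensor A, m/s^2
f_b = simulate_second_reading(f_a, omega, alpha, r_ab)
rate = perpendicular_rate(f_a, f_b, r_ab)
```

A single sensor pair only observes the rotation rate perpendicular to its lever arm, so a practical estimator would need several non-collinear sensors and some handling of noise, neither of which this sketch attempts.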

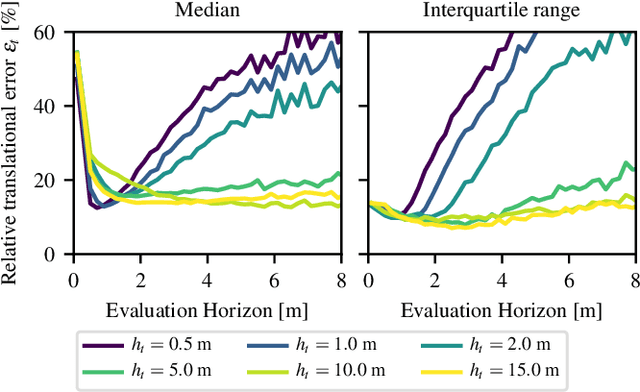

DRIVE: Data-driven Robot Input Vector Exploration

Sep 19, 2023

Abstract: An accurate motion model is a fundamental component of most autonomous navigation systems. While much work has been done on improving model formulation, no standard protocol exists for gathering the empirical data required to train models. In this work, we address this issue by proposing Data-driven Robot Input Vector Exploration (DRIVE), a protocol that enables characterizing the input limits of uncrewed ground vehicles (UGVs) and gathering empirical model training data. We also propose a novel learned slip approach outperforming similar acceleration learning approaches. Our contributions are validated through an extensive experimental evaluation, totaling over 7 km and 1.8 h of driving data across three distinct UGVs and four terrain types. We show that our protocol offers increased predictive performance over common human-driven data-gathering protocols. Furthermore, our protocol converges with 46 s of training data, almost four times less than the shortest human dataset gathering protocol. We show that the operational limit for our model is reached in extreme slip conditions encountered on surfaced ice. DRIVE is an efficient way of characterizing UGV motion in its operational conditions. Our code and dataset are both available online at this link: https://github.com/norlab-ulaval/DRIVE.

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

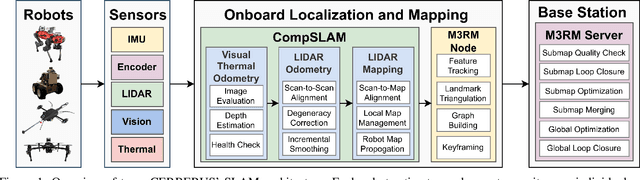

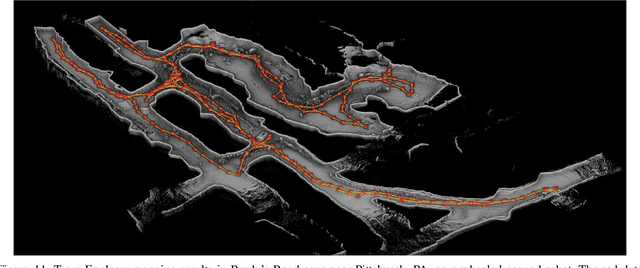

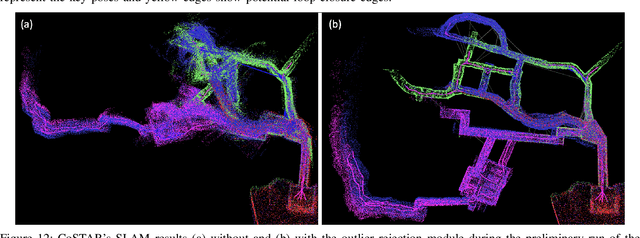

Abstract: This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, and that constitute a useful resource for researchers and practitioners.

Kilometer-scale autonomous navigation in subarctic forests: challenges and lessons learned

Nov 27, 2021

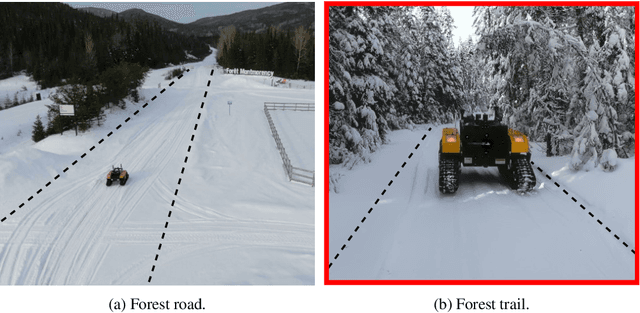

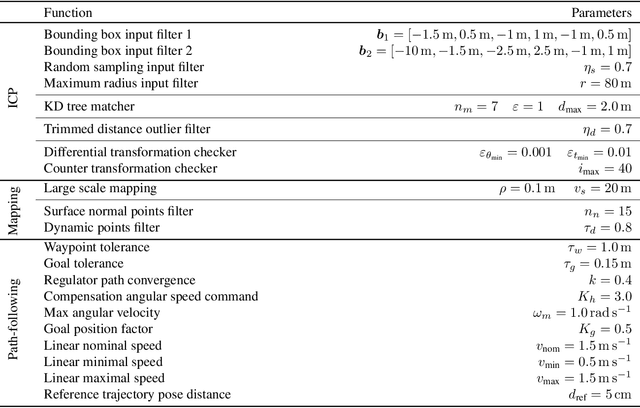

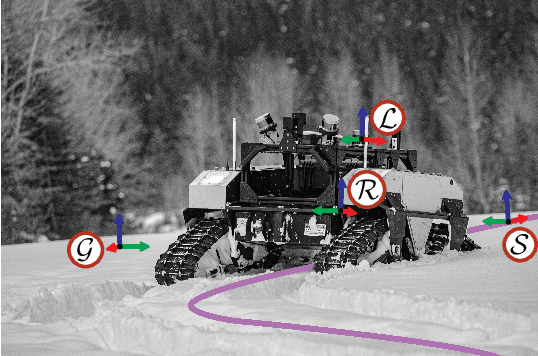

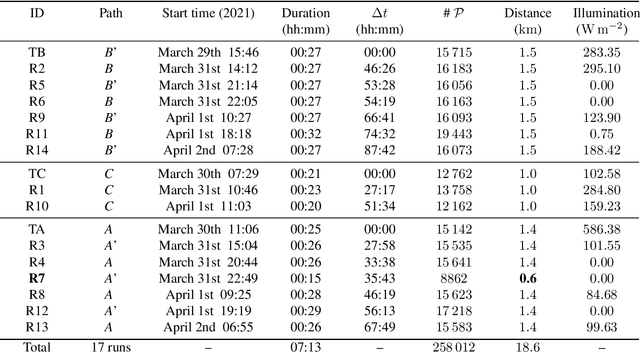

Abstract: Challenges inherent to autonomous wintertime navigation in forests include the lack of a reliable Global Navigation Satellite System (GNSS) signal, low feature contrast, high illumination variations, and a changing environment. This type of off-road environment is an extreme case of situations autonomous cars could encounter in northern regions. Thus, it is important to understand the impact of this harsh environment on autonomous navigation systems. To this end, we present a field report analyzing teach-and-repeat navigation in a subarctic region while subject to large variations of meteorological conditions. First, we describe the system, which relies on point cloud registration to localize a mobile robot through a boreal forest, while simultaneously building a map. We experimentally evaluate this system in over 18.6 km of autonomous navigation in the teach-and-repeat mode. We show that dense vegetation perturbs the GNSS signal, rendering it unsuitable for navigation in forest trails. Furthermore, we highlight the increased uncertainty related to localizing using point cloud registration in forest corridors. We demonstrate that it is not snow precipitation, but snow accumulation that affects our system's ability to localize within the environment. Finally, we expose some lessons learned and challenges from our field campaign to support better experimental work in winter conditions.

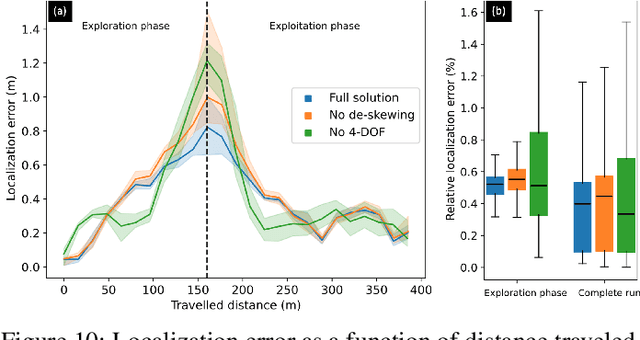

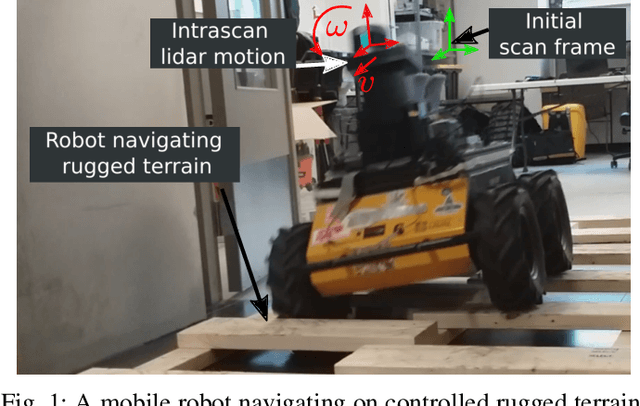

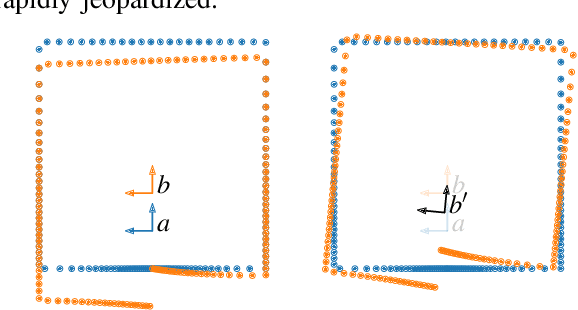

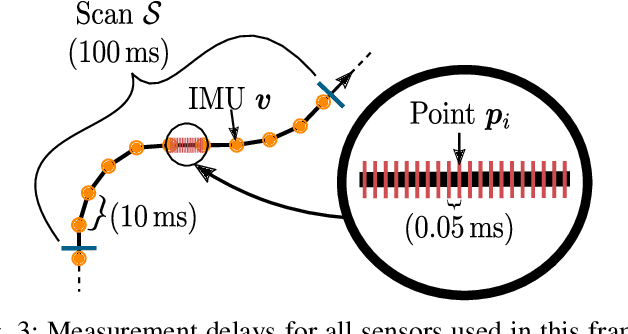

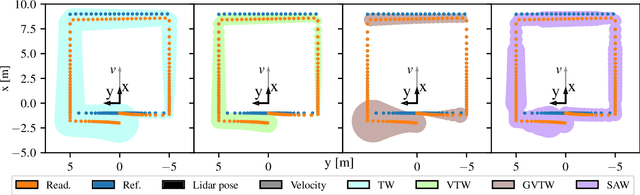

Lidar Scan Registration Robust to Extreme Motions

May 03, 2021

Abstract: Registration algorithms, such as Iterative Closest Point (ICP), have proven effective in mobile robot localization algorithms over the last decades. However, they are susceptible to failure when a robot sustains extreme velocities and accelerations. For example, this kind of motion can happen after a collision, causing a point cloud to be heavily skewed. While point cloud de-skewing methods have been explored in the past to increase localization and mapping accuracy, these methods still rely on highly accurate odometry systems or ideal navigation conditions. In this paper, we present a method taking into account the remaining motion uncertainties of the trajectory used to de-skew a point cloud along with the environment geometry to increase the robustness of current registration algorithms. We compare our method to three other solutions in a test bench producing 3D maps with peak accelerations of 200 m/s^2 and 800 rad/s^2. In these extreme scenarios, we demonstrate that our method decreases the error by 9.26 % in translation and by 21.84 % in rotation. The proposed method is generic enough to be integrated into many variants of weighted ICP without adaptation and supports localization robustness in harsher terrains.

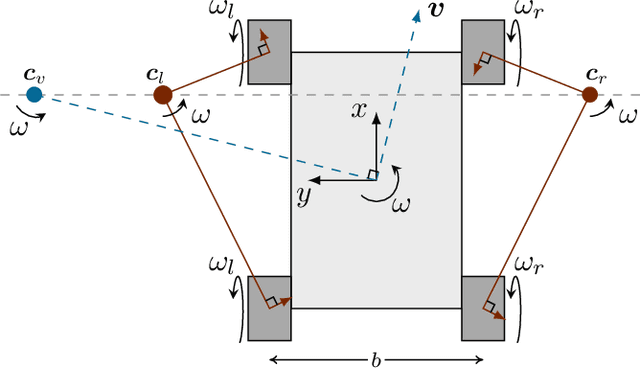

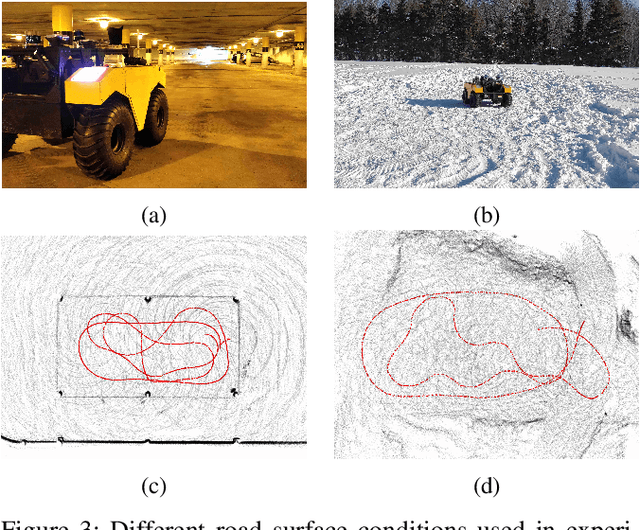

Evaluation of Skid-Steering Kinematic Models for Subarctic Environments

Apr 10, 2020

Abstract: In subarctic and arctic areas, large and heavy skid-steered robots are preferred for their robustness and ability to operate on difficult terrain. State estimation, motion control and path planning for these robots rely on accurate odometry models based on wheel velocities. However, the state-of-the-art odometry models for skid-steer mobile robots (SSMRs) have usually been tested on relatively lightweight platforms. In this paper, we focus on how these models perform when deployed on a large and heavy (590 kg) SSMR. We collected more than 2 km of data on both snow and concrete. We compare the ideal differential-drive, extended differential-drive, radius-of-curvature-based, and full linear kinematic models commonly deployed for SSMRs. Each model is fine-tuned by searching for its optimal parameters on both snow and concrete. We then discuss the relationship between the parameters, the model tuning, and the final accuracy of the models.
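As a point of reference for the simplest model named above, the following is a minimal sketch of the standard ideal differential-drive kinematics, which maps left and right wheel angular velocities to a body-frame forward speed and yaw rate. It is the textbook formulation, not code from the paper. The wheel radius and track width used in the example are hypothetical values, not the parameters of the 590 kg platform.

```python
from dataclasses import dataclass

@dataclass
class BodyVelocity:
    linear: float   # forward speed in m/s
    angular: float  # yaw rate in rad/s, positive counter-clockwise

def ideal_diff_drive(omega_left: float, omega_right: float,
                     wheel_radius: float, track_width: float) -> BodyVelocity:
    """Ideal differential-drive model: no slip, no skid.

    omega_left / omega_right are wheel angular velocities in rad/s,
    wheel_radius and track_width are in metres.
    """
    v_left = wheel_radius * omega_left
    v_right = wheel_radius * omega_right
    return BodyVelocity(
        linear=(v_right + v_left) / 2.0,
        angular=(v_right - v_left) / track_width,
    )

# Hypothetical geometry: 0.3 m wheel radius, 1.2 m track width.
straight = ideal_diff_drive(10.0, 10.0, wheel_radius=0.3, track_width=1.2)
spin = ideal_diff_drive(-5.0, 5.0, wheel_radius=0.3, track_width=1.2)
```

Because the ideal model assumes no slip, it has only geometric parameters. A skid-steered vehicle must skid to turn, which is one reason the richer models listed in the abstract introduce additional tunable parameters.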

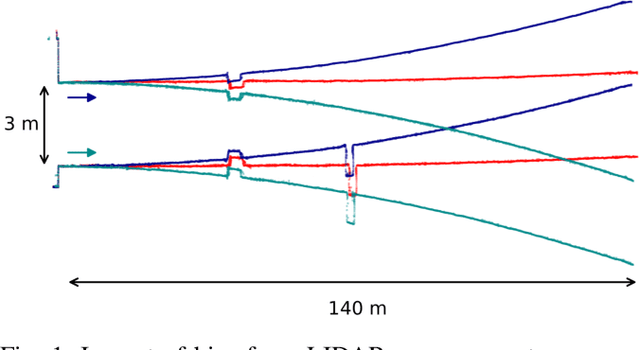

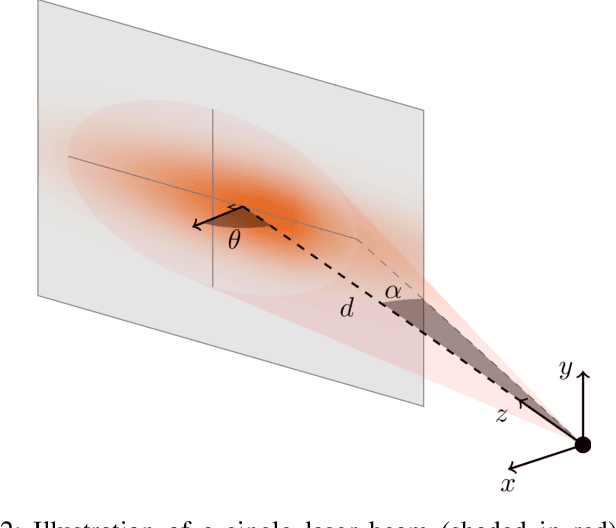

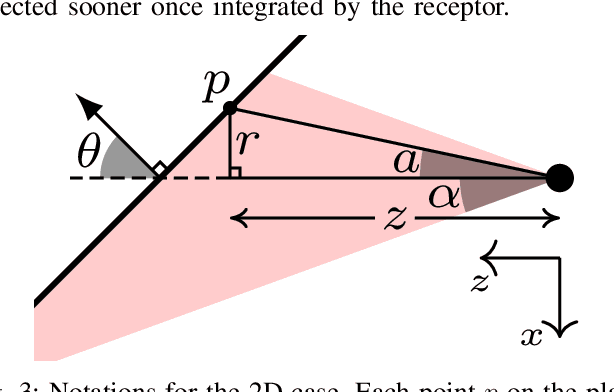

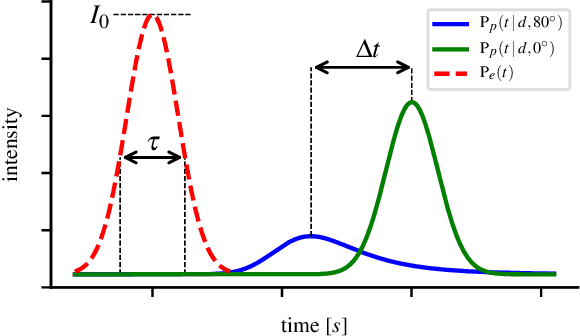

Lidar Measurement Bias Estimation via Return Waveform Modelling in a Context of 3D Mapping

Oct 03, 2018

Abstract: In the context of 3D mapping, it is very important to get accurate measurements from sensors. In particular, Light Detection And Ranging (LIDAR) measurements are typically treated as following a zero-mean Gaussian distribution. We show that this assumption leads to predictable localisation drifts, especially when a bias related to measuring obstacles with high incidence angles is not taken into consideration. Moreover, we present a way to physically understand and model this bias, which generalises to multiple sensors. Using an experimental setup, we measured the bias of the Sick LMS151, Velodyne HDL-32E, and Robosense RS-LiDAR-16 as a function of depth and incidence angle, and showed that the bias can go up to 20 cm for high incidence angles. We then used our models to remove the bias from the measurements, leading to more accurate maps and a reduced localisation drift.