Vladimír Kubelka

Introspective Loop Closure for SLAM with 4D Imaging Radar

Mar 04, 2025

Abstract:Simultaneous Localization and Mapping (SLAM) allows mobile robots to navigate without external positioning systems or pre-existing maps. Radar is emerging as a valuable sensing tool, especially in vision-obstructed environments, as it is less affected by particles than lidars or cameras. Modern 4D imaging radars provide three-dimensional geometric information and relative velocity measurements, but they bring challenges, such as a small field of view and sparse, noisy point clouds. Detecting loop closures in SLAM is critical for reducing trajectory drift and maintaining map accuracy. However, the directional nature of 4D radar data makes identifying loop closures, especially from reverse viewpoints, difficult due to limited scan overlap. This article explores using 4D radar for loop closure in SLAM, focusing on similar and opposing viewpoints. We generate submaps for a denser environment representation and use introspective measures to reject false detections in feature-degenerate environments. Our experiments show accurate loop closure detection in geometrically diverse settings for both similar and opposing viewpoints, improving trajectory estimation with up to 82 % improvement in ATE and rejecting false positives in self-similar environments.

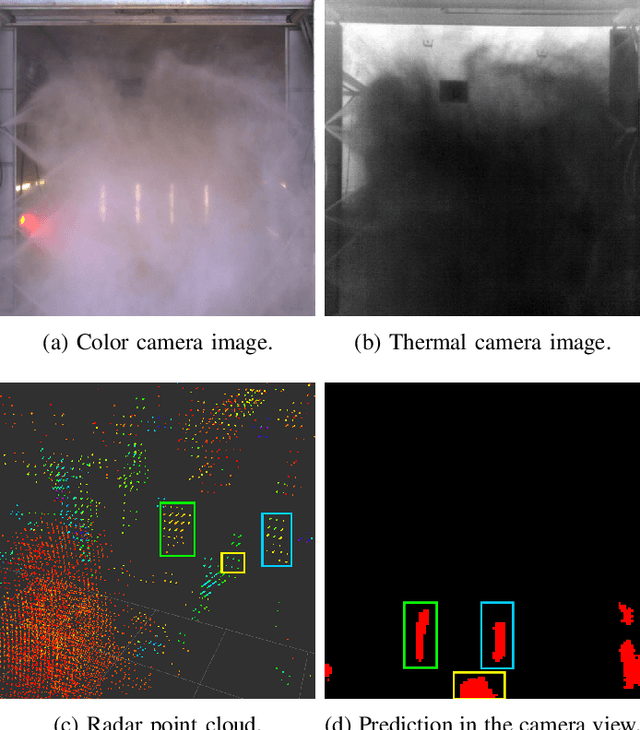

Human Detection from 4D Radar Data in Low-Visibility Field Conditions

Apr 08, 2024

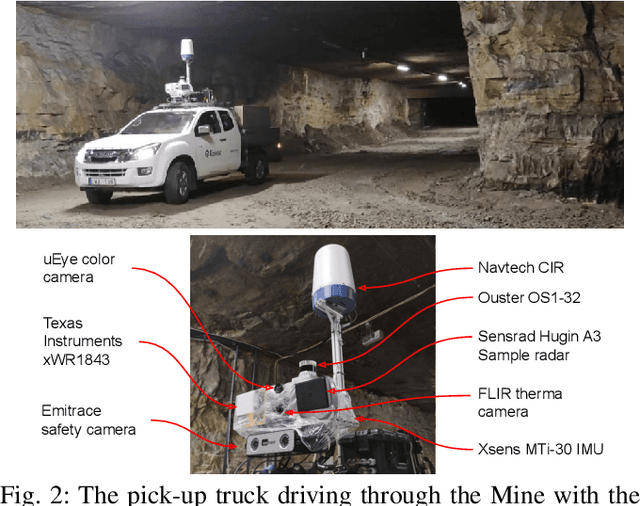

Abstract:Autonomous driving technology is increasingly being used on public roads and in industrial settings such as mines. While it is essential to detect pedestrians, vehicles, or other obstacles, adverse field conditions negatively affect the performance of classical sensors such as cameras or lidars. Radar, on the other hand, is a promising modality that is less affected by, e.g., dust, smoke, water mist or fog. In particular, modern 4D imaging radars provide target responses across the range, vertical angle, horizontal angle and Doppler velocity dimensions. We propose TMVA4D, a CNN architecture that leverages this 4D radar modality for semantic segmentation. The CNN is trained to distinguish between the background and person classes based on a series of 2D projections of the 4D radar data that include the elevation, azimuth, range, and Doppler velocity dimensions. We also outline the process of compiling a novel dataset consisting of data collected in industrial settings with a car-mounted 4D radar and describe how the ground-truth labels were generated from reference thermal images. Using TMVA4D on this dataset, we achieve an mIoU score of 78.2% and an mDice score of 86.1%, evaluated on the two classes background and person.
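For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how mean IoU and mean Dice can be computed for a two-class segmentation. It is not the paper's evaluation code: the function names, the flat label lists, and the encoding (0 for background, 1 for person) are assumptions made for illustration.

```python
def iou_and_dice(pred, truth, cls):
    """Return (IoU, Dice) of one class from two equally long label sequences."""
    tp = sum(1 for p, t in zip(pred, truth) if p == cls and t == cls)
    fp = sum(1 for p, t in zip(pred, truth) if p == cls and t != cls)
    fn = sum(1 for p, t in zip(pred, truth) if p != cls and t == cls)
    if tp + fp + fn == 0:
        # The class appears in neither prediction nor ground truth.
        return float("nan"), float("nan")
    iou = tp / (tp + fp + fn)
    dice = 2 * tp / (2 * tp + fp + fn)
    return iou, dice


def mean_iou_and_dice(pred, truth, classes=(0, 1)):
    """Average the per-class IoU and Dice over the given classes."""
    scores = [iou_and_dice(pred, truth, c) for c in classes]
    miou = sum(s[0] for s in scores) / len(scores)
    mdice = sum(s[1] for s in scores) / len(scores)
    return miou, mdice


# Hypothetical flattened masks: 0 = background, 1 = person (assumed encoding).
pred = [0, 0, 1, 1, 1, 0]
truth = [0, 1, 1, 1, 0, 0]
miou, mdice = mean_iou_and_dice(pred, truth)
```

Because Dice counts true positives twice, it is never lower than IoU for the same class, which is consistent with the reported mDice exceeding the reported mIoU.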

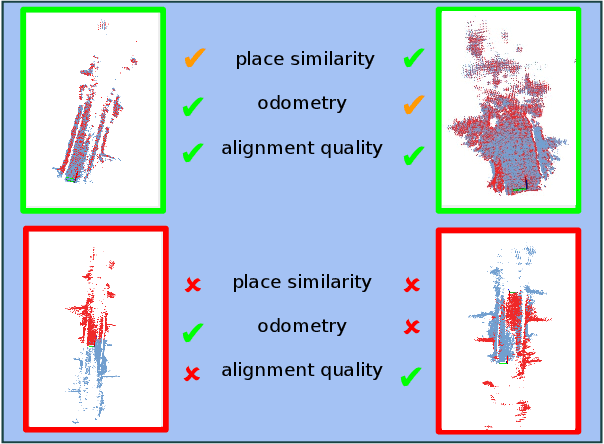

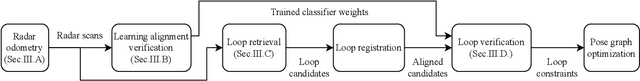

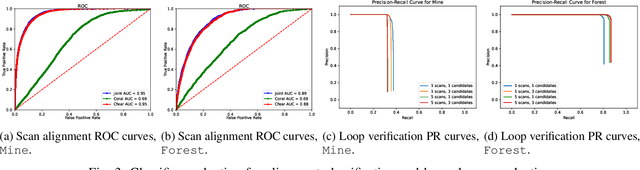

Towards introspective loop closure in 4D radar SLAM

Apr 05, 2024

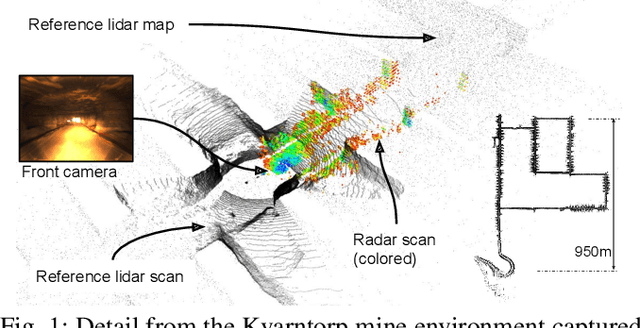

Abstract:Imaging radar is an emerging sensor modality in the context of Simultaneous Localization and Mapping (SLAM), especially suitable for vision-obstructed environments. This article investigates the use of 4D imaging radars for SLAM and analyzes the challenges in robust loop closure. Previous work indicates that 4D radars, together with inertial measurements, offer ample information for accurate odometry estimation. However, the low field of view, limited resolution, and sparse and noisy measurements render loop closure a significantly more challenging problem. Our work builds on previous work, TBV SLAM, which was proposed for robust loop closure with 360$^\circ$ spinning radars. This article highlights and addresses challenges inherited from a directional 4D radar, such as sparsity, noise, and reduced field of view, and discusses why the common definition of a loop closure is unsuitable. By combining multiple quality measures for accurate loop closure detection adapted to 4D radar data, significant results in trajectory estimation are achieved; the absolute trajectory error is as low as 0.46 m over a distance of 1.8 km, with consistent operation over multiple environments.
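The absolute trajectory error quoted above is commonly reported as the root-mean-square of the position differences between the estimated and reference trajectories. The sketch below illustrates that definition only. It assumes the two trajectories are already time-associated and expressed in the same frame (the usual alignment step is omitted), and the function name and sample positions are hypothetical.

```python
import math


def ate_rmse(estimated, reference):
    """RMSE of position differences between two aligned, equally long trajectories."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - r) ** 2 for e, r in zip(p_est, p_ref))
        for p_est, p_ref in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical positions in metres, already aligned (assumption).
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.4)]
error = ate_rmse(estimated, reference)
```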

Do we need scan-matching in radar odometry?

Oct 27, 2023

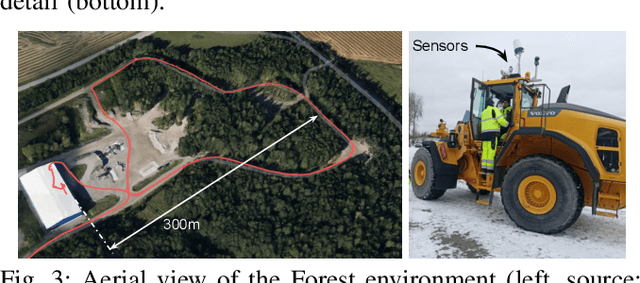

Abstract:There is a current increase in the development of "4D" Doppler-capable radar and lidar range sensors that produce 3D point clouds where all points also have information about the radial velocity relative to the sensor. 4D radars in particular are interesting for object perception and navigation in low-visibility conditions (dust, smoke) where lidars and cameras typically fail. With the advent of high-resolution Doppler-capable radars comes the possibility of estimating odometry from single point clouds, foregoing the need for scan registration which is error-prone in feature-sparse field environments. We compare several odometry estimation methods, from direct integration of Doppler/IMU data and Kalman filter sensor fusion to 3D scan-to-scan and scan-to-map registration, on three datasets with data from two recent 4D radars and two IMUs. Surprisingly, our results show that the odometry from Doppler and IMU data alone gives similar or better results than 3D point cloud registration. In our experiments, the average position error can be as low as 0.3% over 1.8 and 4.5km trajectories. That allows accurate estimation of 6DOF ego-motion over long distances, even in feature-sparse mine environments. These results are useful not least for applications of navigation with resource-constrained robot platforms in feature-sparse and low-visibility conditions such as mining, construction, and search & rescue operations.

Doppler-only Single-scan 3D Vehicle Odometry

Oct 06, 2023

Abstract:We present a novel 3D odometry method that recovers the full motion of a vehicle only from a Doppler-capable range sensor. It leverages the radial velocities measured from the scene, estimating the sensor's velocity from a single scan. The vehicle's 3D motion, defined by its linear and angular velocities, is calculated taking into consideration its kinematic model which provides a constraint between the velocity measured at the sensor frame and the vehicle frame. Experiments carried out prove the viability of our single-sensor method compared to mounting an additional IMU. Our method provides the translation of the sensor, which cannot be reliably determined from an IMU, as well as its rotation. Its short-term accuracy and fast operation (~5ms) make it a proper candidate to supply the initialization to more complex localization algorithms or mapping pipelines. Not only does it reduce the error of the mapper, but it does so at a comparable level of accuracy as an IMU would. All without the need to mount and calibrate an extra sensor on the vehicle.
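The idea of estimating the sensor's velocity from the radial velocities of a single scan can be illustrated with a plain least-squares fit. This is a sketch under stated assumptions, not the paper's method: it assumes all points are static, uses the sign convention that a static point in unit direction d returns the radial velocity -d·v for sensor velocity v, ignores outliers and the vehicle kinematic model, and uses hypothetical function names.

```python
import math


def solve_3x3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate geometry: directions do not span 3D")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        rest = sum(m[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (m[i][3] - rest) / m[i][i]
    return x


def estimate_sensor_velocity(points, radial_velocities):
    """Least-squares sensor velocity from one scan of static points.

    Assumed model: radial_velocity_i = -d_i . v, where d_i is the unit
    direction from the sensor to point i. Points must have nonzero range.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for p, vr in zip(points, radial_velocities):
        norm = math.sqrt(sum(c * c for c in p))
        d = [c / norm for c in p]
        for i in range(3):
            atb[i] -= d[i] * vr  # accumulates the right-hand side -sum(d * vr)
            for j in range(3):
                ata[i][j] += d[i] * d[j]  # accumulates sum(d d^T)
    return solve_3x3(ata, atb)


# Synthetic scan: radial velocities generated from a known sensor velocity.
true_velocity = [2.0, -0.5, 0.1]
points = [(10.0, 0.0, 0.0), (0.0, 5.0, 0.0), (0.0, 0.0, 3.0),
          (4.0, 4.0, 1.0), (-3.0, 6.0, 2.0)]
radial = []
for p in points:
    n = math.sqrt(sum(c * c for c in p))
    radial.append(-sum((c / n) * v for c, v in zip(p, true_velocity)))
estimated_velocity = estimate_sensor_velocity(points, radial)
```

With a narrow field of view the point directions are nearly parallel, so the normal-equation matrix becomes poorly conditioned for the lateral and vertical components; a practical pipeline would also need a robust step to reject moving objects.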

TBV Radar SLAM -- trust but verify loop candidates

Jan 12, 2023

Abstract:Robust SLAM in large-scale environments requires fault resilience and awareness at multiple stages, from sensing and odometry estimation to loop closure. In this work, we present TBV (Trust But Verify) Radar SLAM, a method for radar SLAM that introspectively verifies loop closure candidates. TBV Radar SLAM achieves a high correct-loop-retrieval rate by combining multiple place-recognition techniques: tightly coupled place similarity and odometry uncertainty search, creating loop descriptors from origin-shifted scans, and delaying loop selection until after verification. Robustness to false constraints is achieved by carefully verifying and selecting the most likely ones from multiple loop constraints. Importantly, the verification and selection are carried out after registration when additional sources of loop evidence can easily be computed. We integrate our loop retrieval and verification method with a fault-resilient odometry pipeline within a pose graph framework. By evaluating on public benchmarks we found that TBV Radar SLAM achieves 65% lower error than the previous state of the art. We also show that it generalizes across environments without needing to change any parameters.

Extrinsic calibration for highly accurate trajectories reconstruction

Oct 03, 2022

Abstract:In the context of robotics, accurate ground-truth positioning is the cornerstone for the development of mapping and localization algorithms. In outdoor environments and over long distances, total stations provide accurate and precise measurements that are unaffected by the usual factors that deteriorate the accuracy of Global Navigation Satellite System (GNSS). While a single robotic total station can track the position of a target in three Degrees Of Freedom (DOF), three robotic total stations and three targets are necessary to yield the full six DOF pose reference. Since it is crucial to express the position of targets in a common coordinate frame, we present a novel extrinsic calibration method of multiple robotic total stations with field deployment in mind. The proposed method does not require the manual collection of ground control points during the system setup, nor does it require tedious synchronous measurement on each robotic total station. Based on extensive experimental work, we compare our approach to the classical extrinsic calibration methods used in geomatics for surveying and demonstrate that our approach brings substantial time savings during the deployment. Tested on more than 30 km of trajectories, our new method increases the precision of the extrinsic calibration by 25 % compared to the best state-of-the-art method, which relies on manually collected static ground control points.

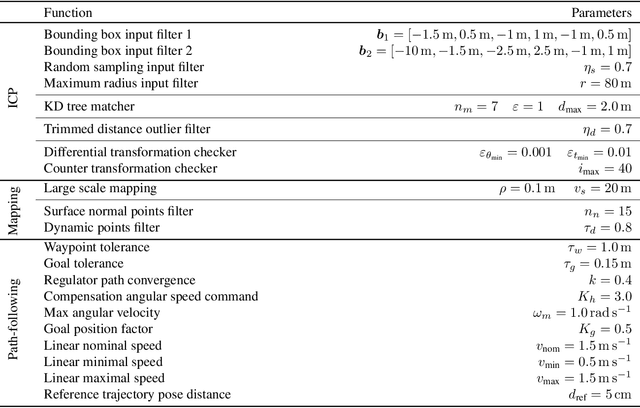

Gravity-constrained point cloud registration

Mar 25, 2022

Abstract:Visual and lidar Simultaneous Localization and Mapping (SLAM) algorithms benefit from the Inertial Measurement Unit (IMU) modality. The high-rate inertial data complement the other lower-rate modalities. Moreover, in the absence of constant acceleration, the gravity vector makes two attitude angles out of three observable in the global coordinate frame. In visual odometry, this is already being used to reduce the 6-Degrees Of Freedom (DOF) pose estimation problem to 4-DOF. In lidar SLAM, the gravity measurements are often used as a penalty in the back-end global map optimization to prevent map deformations. In this work, we propose an Iterative Closest Point (ICP)-based front-end which exploits the observable DOF and provides pose estimates aligned with the gravity vector. We believe that this front-end has the potential to support the loop closure identification, thus speeding up convergence of global map optimizations. The presented approach has been extensively tested in large-scale outdoor environments as well as in the Subterranean Challenge organized by the Defense Advanced Research Projects Agency (DARPA). We show that it can reduce the localization drift by 30% when compared to the standard 6-DOF ICP. Moreover, the code is readily available to the community as a part of the libpointmatcher library.

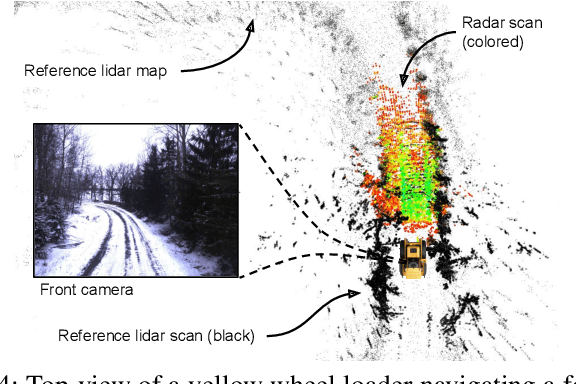

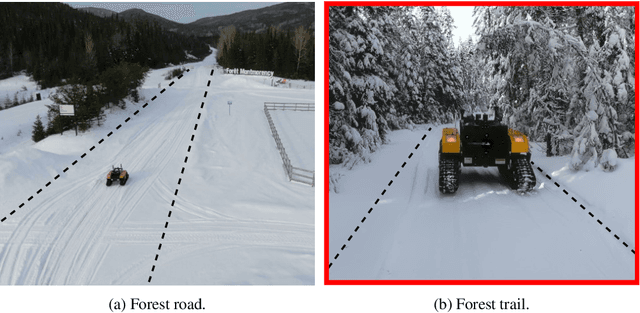

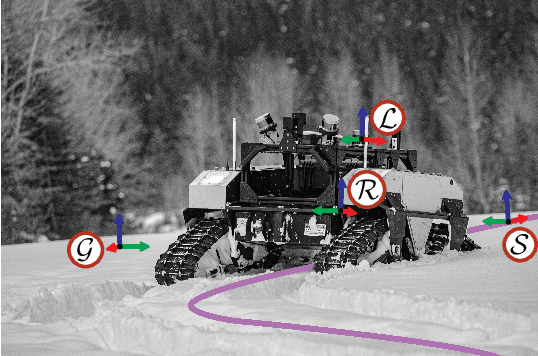

Kilometer-scale autonomous navigation in subarctic forests: challenges and lessons learned

Nov 27, 2021

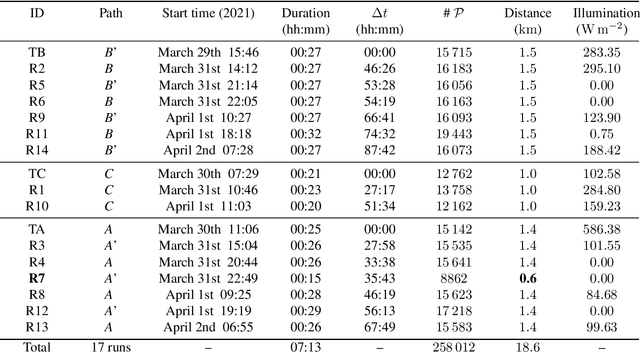

Abstract:Challenges inherent to autonomous wintertime navigation in forests include lack of a reliable Global Navigation Satellite System (GNSS) signal, low feature contrast, high illumination variations and changing environment. This type of off-road environment is an extreme case of situations autonomous cars could encounter in northern regions. Thus, it is important to understand the impact of this harsh environment on autonomous navigation systems. To this end, we present a field report analyzing teach-and-repeat navigation in a subarctic region while subject to large variations of meteorological conditions. First, we describe the system, which relies on point cloud registration to localize a mobile robot through a boreal forest, while simultaneously building a map. We experimentally evaluate this system in over 18.6 km of autonomous navigation in the teach-and-repeat mode. We show that dense vegetation perturbs the GNSS signal, rendering it unsuitable for navigation in forest trails. Furthermore, we highlight the increased uncertainty related to localizing using point cloud registration in forest corridors. We demonstrate that it is not snow precipitation, but snow accumulation that affects our system's ability to localize within the environment. Finally, we expose some lessons learned and challenges from our field campaign to support better experimental work in winter conditions.

System for multi-robotic exploration of underground environments CTU-CRAS-NORLAB in the DARPA Subterranean Challenge

Oct 12, 2021

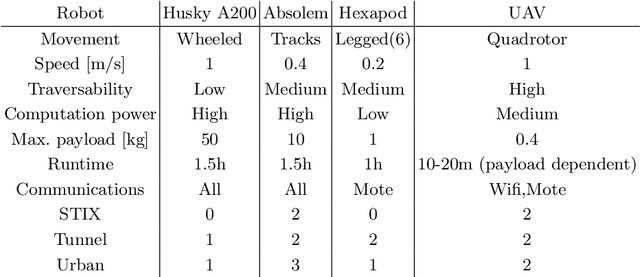

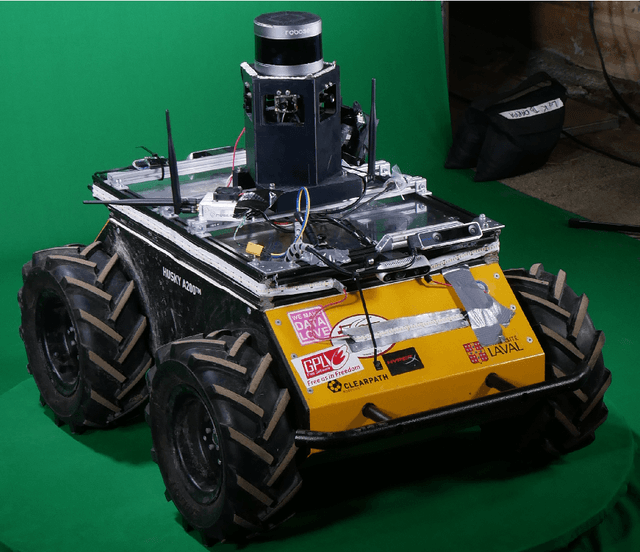

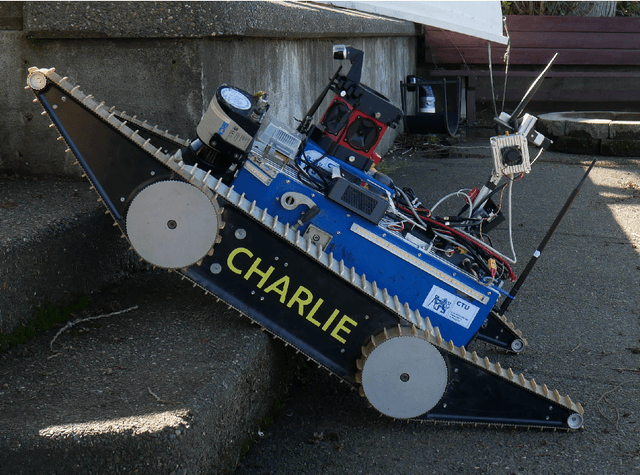

Abstract:We present a field report of the CTU-CRAS-NORLAB team from the Subterranean Challenge (SubT) organised by the Defense Advanced Research Projects Agency (DARPA). The contest seeks to advance technologies that would improve the safety and efficiency of search-and-rescue operations in GPS-denied environments. During the contest rounds, teams of mobile robots have to find specific objects while operating in environments with limited radio communication, e.g. mining tunnels, underground stations or natural caverns. We present a heterogeneous exploration robotic system of the CTU-CRAS-NORLAB team, which achieved the third rank at the SubT Tunnel and Urban Circuit rounds and surpassed the performance of all other non-DARPA-funded teams. The field report describes the team's hardware, sensors, algorithms and strategies, and discusses the lessons learned by participating in the DARPA SubT contest.
