Maximilian Hilger

Collecting Human Motion Data in Large and Occlusion-Prone Environments using Ultra-Wideband Localization

May 09, 2025

Abstract: With robots increasingly integrating into human environments, understanding and predicting human motion is essential for safe and efficient interactions. Modern human motion and activity prediction approaches require large amounts of high-quality data for training and evaluation, usually collected from motion capture systems or from onboard or stationary sensors. Setting up these systems is challenging due to the intricate arrangement of hardware components, extensive calibration procedures, occlusions, and substantial costs. These constraints make deploying such systems in new and large environments difficult and limit their usability for in-the-wild measurements. In this paper, we investigate the possibility of applying the novel Ultra-Wideband (UWB) localization technology as a scalable alternative for human motion capture in crowded and occlusion-prone environments. We include additional sensing modalities such as eye-tracking, onboard robot LiDAR and radar sensors, and record motion capture data as ground truth for evaluation and comparison. The environment imitates a museum setup, with up to four active participants navigating toward random goals in a natural way, and offers more than 130 minutes of multi-modal data. Our investigation provides a step toward scalable and accurate motion data collection beyond vision-based systems, laying a foundation for evaluating sensing modalities like UWB in larger and more complex environments such as warehouses, airports, or convention centers.

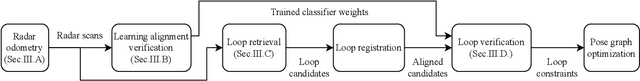

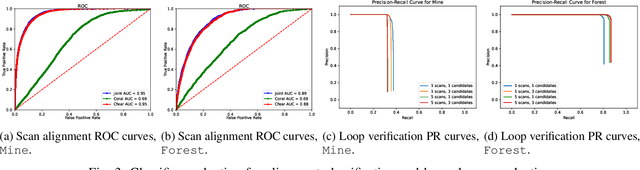

Introspective Loop Closure for SLAM with 4D Imaging Radar

Mar 04, 2025

Abstract: Simultaneous Localization and Mapping (SLAM) allows mobile robots to navigate without external positioning systems or pre-existing maps. Radar is emerging as a valuable sensing tool, especially in vision-obstructed environments, as it is less affected by particles than lidars or cameras. Modern 4D imaging radars provide three-dimensional geometric information and relative velocity measurements, but they bring challenges, such as a small field of view and sparse, noisy point clouds. Detecting loop closures in SLAM is critical for reducing trajectory drift and maintaining map accuracy. However, the directional nature of 4D radar data makes identifying loop closures, especially from reverse viewpoints, difficult due to limited scan overlap. This article explores using 4D radar for loop closure in SLAM, focusing on similar and opposing viewpoints. We generate submaps for a denser environment representation and use introspective measures to reject false detections in feature-degenerate environments. Our experiments show accurate loop closure detection in geometrically diverse settings for both similar and opposing viewpoints, improving trajectory estimation with up to 82% improvement in absolute trajectory error (ATE) and rejecting false positives in self-similar environments.

RaNDT SLAM: Radar SLAM Based on Intensity-Augmented Normal Distributions Transform

Aug 21, 2024

Abstract: Rescue robotics places high demands on perception algorithms due to the unstructured and potentially vision-denied environments. Pivoting Frequency-Modulated Continuous Wave radars are an emerging sensing modality for SLAM in this kind of environment. However, the complex noise characteristics of radar SLAM make applications, particularly indoor ones, computationally demanding and slow. In this work, we introduce a novel radar SLAM framework, RaNDT SLAM, that operates fast and generates accurate robot trajectories. The method is based on the Normal Distributions Transform augmented by radar intensity measures. Motion estimation is based on the fusion of a motion model, IMU data, and registration of the intensity-augmented Normal Distributions Transform. We evaluate RaNDT SLAM on a new benchmark dataset and the Oxford Radar RobotCar dataset. The new dataset contains indoor and outdoor environments as well as multiple sensing modalities (LiDAR, radar, and IMU).

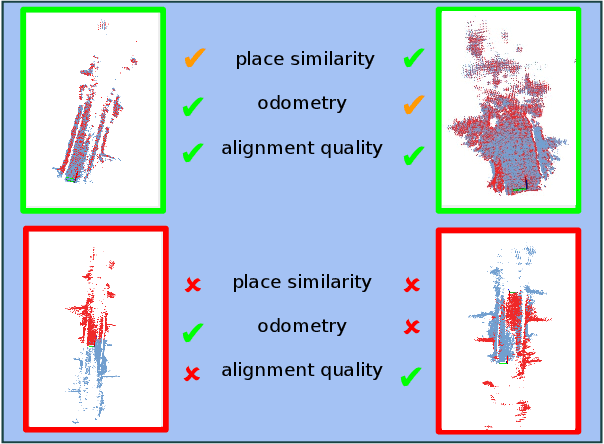

Towards introspective loop closure in 4D radar SLAM

Apr 05, 2024

Abstract: Imaging radar is an emerging sensor modality in the context of Simultaneous Localization and Mapping (SLAM), especially suitable for vision-obstructed environments. This article investigates the use of 4D imaging radars for SLAM and analyzes the challenges in robust loop closure. Previous work indicates that 4D radars, together with inertial measurements, offer ample information for accurate odometry estimation. However, the narrow field of view, limited resolution, and sparse and noisy measurements render loop closure a significantly more challenging problem. Our work builds on previous work, TBV SLAM, which was proposed for robust loop closure with 360$^\circ$ spinning radars. This article highlights and addresses challenges inherited from a directional 4D radar, such as sparsity, noise, and reduced field of view, and discusses why the common definition of a loop closure is unsuitable. By combining multiple quality measures for accurate loop closure detection adapted to 4D radar data, significant results in trajectory estimation are achieved; the absolute trajectory error is as low as 0.46 m over a distance of 1.8 km, with consistent operation over multiple environments.

An evaluation of CFEAR Radar Odometry

Apr 03, 2024

Abstract: This article describes the method CFEAR Radar Odometry, submitted to a competition at the Radar in Robotics workshop, ICRA 2024. CFEAR is an efficient and accurate method for spinning 2D radar odometry that generalizes well across environments. This article presents an overview of the odometry pipeline with new experiments on the public Boreas dataset. We show that a real-time capable configuration of CFEAR, with its original parameter set, yields surprisingly low drift on the Boreas dataset. Additionally, we discuss an improved implementation and solving strategy that enables the most accurate configuration to run in real time with improved robustness, reaching as low as 0.61% translation drift at a frame rate of 68 Hz. A recent release of the source code is available to the community at https://github.com/dan11003/CFEAR_Radarodometry_code_public, and we publish the evaluation from this article at https://github.com/dan11003/cfear_2024_workshop
