Dominic Baril

Comparing Motion Distortion Between Vehicle Field Deployments

Apr 30, 2024

Abstract:Recent advances in autonomous driving for uncrewed ground vehicles (UGVs) have spurred significant development, particularly in challenging terrains. This paper introduces a classification system assessing various UGV deployments reported in the literature. Our approach considers motion distortion features that include internal UGV features, such as mass and speed, and external features, such as terrain complexity, which all influence the efficiency of models and navigation systems. We present results that map UGV deployments relative to vehicle kinetic energy and terrain complexity, providing insights into the level of complexity and risk associated with different operational environments. Additionally, we propose a motion distortion metric to assess UGV navigation performance that does not require an explicit quantification of motion distortion features. Using this metric, we conduct a case study to illustrate the impact of motion distortion features on modeling accuracy. This research advocates for creating a comprehensive database containing many different motion distortion features, which would contribute to advancing the understanding of autonomous driving capabilities in rough conditions and provide a validation framework for future developments in UGV navigation systems.
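As a rough illustration of one axis of that mapping, the sketch below computes translational kinetic energy from mass and speed with the textbook relation E = 0.5 * m * v^2 and ranks deployments by it. The function name, the deployment names, and the numeric values are hypothetical placeholders, not data from the paper, and the paper's terrain-complexity axis and motion distortion metric are not reproduced here.

```python
def kinetic_energy_joules(mass_kg: float, speed_m_s: float) -> float:
    """Translational kinetic energy E = 0.5 * m * v^2, in joules."""
    if mass_kg < 0:
        raise ValueError("mass must be non-negative")
    return 0.5 * mass_kg * speed_m_s ** 2


# Hypothetical deployments: (name, mass in kg, speed in m/s).
# These values are illustrative only and do not come from the paper.
deployments = [
    ("heavy_ugv", 500.0, 2.0),
    ("small_rover", 50.0, 1.0),
]

# Rank deployments from lowest to highest kinetic energy.
ranked = sorted(deployments, key=lambda d: kinetic_energy_joules(d[1], d[2]))

for name, mass_kg, speed_m_s in ranked:
    print(name, kinetic_energy_joules(mass_kg, speed_m_s), "J")
```

Because energy grows with the square of speed, doubling a vehicle's speed moves it further along this axis than doubling its mass.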

Saturation-Aware Angular Velocity Estimation: Extending the Robustness of SLAM to Aggressive Motions

Oct 11, 2023

Abstract:We propose a novel angular velocity estimation method to increase the robustness of Simultaneous Localization And Mapping (SLAM) algorithms against gyroscope saturations induced by aggressive motions. Field robotics expose robots to various hazards, including steep terrains, landslides, and staircases, where substantial accelerations and angular velocities can occur if the robot loses stability and tumbles. These extreme motions can saturate sensor measurements, especially gyroscopes, which are the first sensors to become inoperative. While the structural integrity of the robot is at risk, the resilience of the SLAM framework is oftentimes given little consideration. Consequently, even if the robot is physically capable of continuing the mission, its operation will be compromised due to a corrupted representation of the world. Regarding this problem, we propose a way to estimate the angular velocity using accelerometers during extreme rotations caused by tumbling. We show that our method reduces the median localization error by 71.5 % in translation and 65.5 % in rotation and reduces the number of SLAM failures by 73.3 % on the collected data. We also propose the Tumbling-Induced Gyroscope Saturation (TIGS) dataset, which consists of outdoor experiments recording the motion of a lidar subject to angular velocities four times higher than other available datasets. The dataset is available online at https://github.com/norlab-ulaval/Norlab_wiki/wiki/TIGS-Dataset.
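To give a feel for why accelerometers carry angular-velocity information, here is a minimal sketch based on standard rigid-body kinematics. It is not the estimation method proposed in the paper. It assumes two accelerometers rigidly mounted at a known offset `r12` and expressed in the same body frame; the function name and argument layout are hypothetical. For a rigid body, the difference between the two readings projected onto the baseline equals minus the squared angular-rate component perpendicular to the baseline, times the baseline length. Gravity cancels in the difference, and the angular-acceleration term is perpendicular to the baseline, so it drops out of the projection.

```python
import math


def angular_rate_from_accel_pair(f1, f2, r12):
    """Magnitude (rad/s) of the angular-velocity component perpendicular to r12.

    f1, f2: specific-force readings (m/s^2) of two accelerometers, same body frame.
    r12: position of accelerometer 2 relative to accelerometer 1 (m), same frame.
    """
    d = math.sqrt(sum(c * c for c in r12))
    if d == 0.0:
        raise ValueError("accelerometers must be at distinct positions")
    # Project the reading difference onto the baseline direction.
    along = sum((b - a) * c for a, b, c in zip(f1, f2, r12)) / d
    # Rigid body: along = -|w_perp|^2 * d. Clamp positive values caused by noise.
    return math.sqrt(max(0.0, -along / d))


# Example: spin at 30 rad/s about z, sensor 1 on the axis, sensor 2 at 0.1 m along x.
# Both sensors read the same gravity reaction; sensor 2 adds the centripetal term.
rate = angular_rate_from_accel_pair(
    f1=(0.0, 0.0, 9.81),
    f2=(-90.0, 0.0, 9.81),
    r12=(0.1, 0.0, 0.0),
)
print(rate)
```

A single pair only recovers the magnitude of the perpendicular component, with no sign; recovering a full angular-velocity vector would need additional sensors or baselines, which this sketch does not attempt.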

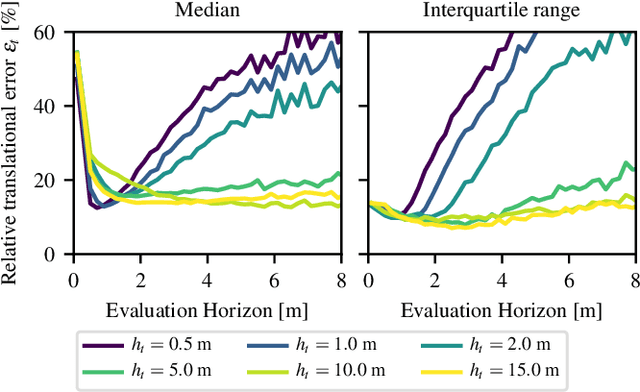

DRIVE: Data-driven Robot Input Vector Exploration

Sep 19, 2023

Abstract:An accurate motion model is a fundamental component of most autonomous navigation systems. While much work has been done on improving model formulation, no standard protocol exists for gathering empirical data required to train models. In this work, we address this issue by proposing Data-driven Robot Input Vector Exploration (DRIVE), a protocol that enables characterizing uncrewed ground vehicle (UGV) input limits and gathering empirical model training data. We also propose a novel learned slip approach outperforming similar acceleration learning approaches. Our contributions are validated through an extensive experimental evaluation, totaling over 7 km and 1.8 h of driving data over three distinct UGVs and four terrain types. We show that our protocol offers increased predictive performance over common human-driven data-gathering protocols. Furthermore, our protocol converges with 46 s of training data, almost four times less than the shortest human dataset gathering protocol. We show that the operational limit for our model is reached in extreme slip conditions encountered on surfaced ice. DRIVE is an efficient way of characterizing UGV motion in its operational conditions. Our code and dataset are both available online at this link: https://github.com/norlab-ulaval/DRIVE.

On the Importance of Quantifying Visibility for Autonomous Vehicles under Extreme Precipitation

Sep 07, 2022

Abstract:In the context of autonomous driving, vehicles are inherently bound to encounter more extreme weather during which public safety must be ensured. As climate is quickly changing, the frequency of heavy snowstorms is expected to increase and become a major threat to safe navigation. While there is much literature aiming to improve navigation resiliency to winter conditions, there is a lack of standard metrics to quantify the loss of visibility of lidar sensors related to precipitation. This chapter proposes a novel metric to quantify the lidar visibility loss in real time, relying on the notion of visibility from the meteorology research field. We evaluate this metric on the Canadian Adverse Driving Conditions (CADC) dataset, correlate it with the performance of a state-of-the-art lidar-based localization algorithm, and evaluate the benefit of filtering point clouds before the localization process. We show that the Iterative Closest Point (ICP) algorithm is surprisingly robust against snowfalls, but abrupt events, such as snow gusts, can greatly hinder its accuracy. We discuss such events and demonstrate the need for better datasets focusing on these extreme events to quantify their effect.

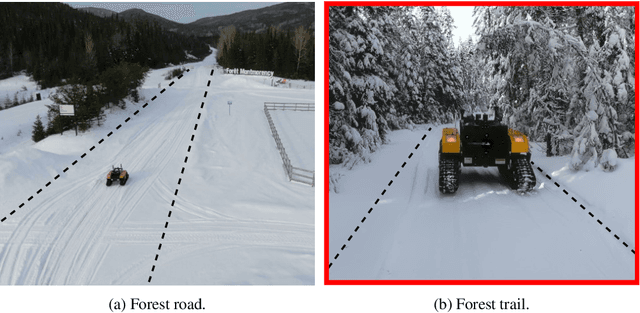

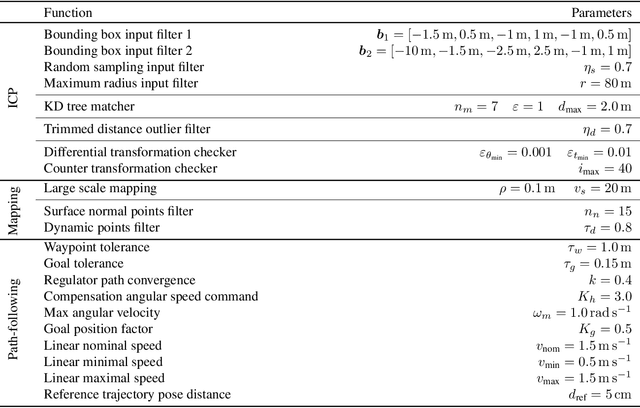

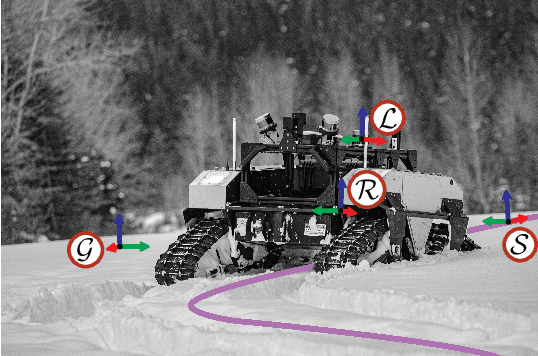

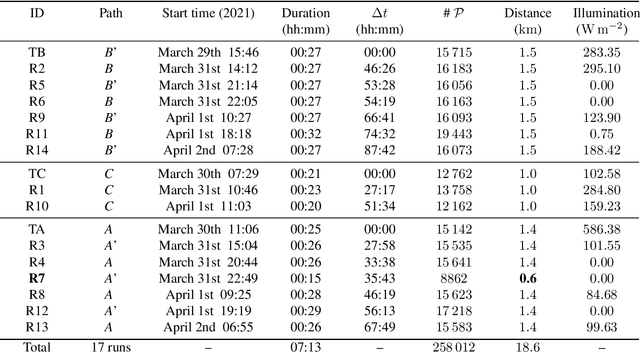

Kilometer-scale autonomous navigation in subarctic forests: challenges and lessons learned

Nov 27, 2021

Abstract:Challenges inherent to autonomous wintertime navigation in forests include the lack of a reliable Global Navigation Satellite System (GNSS) signal, low feature contrast, high illumination variations and a changing environment. This type of off-road environment is an extreme case of situations autonomous cars could encounter in northern regions. Thus, it is important to understand the impact of this harsh environment on autonomous navigation systems. To this end, we present a field report analyzing teach-and-repeat navigation in a subarctic region while subject to large variations of meteorological conditions. First, we describe the system, which relies on point cloud registration to localize a mobile robot through a boreal forest, while simultaneously building a map. We experimentally evaluate this system in over 18.6 km of autonomous navigation in the teach-and-repeat mode. We show that dense vegetation perturbs the GNSS signal, rendering it unsuitable for navigation in forest trails. Furthermore, we highlight the increased uncertainty related to localizing using point cloud registration in forest corridors. We demonstrate that it is not snow precipitation, but snow accumulation that affects our system's ability to localize within the environment. Finally, we expose some lessons learned and challenges from our field campaign to support better experimental work in winter conditions.

System for multi-robotic exploration of underground environments CTU-CRAS-NORLAB in the DARPA Subterranean Challenge

Oct 12, 2021

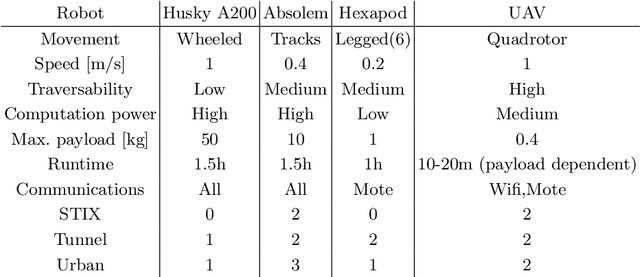

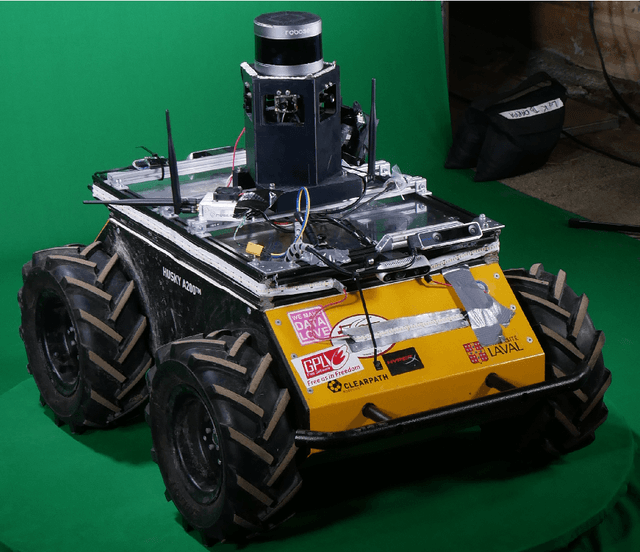

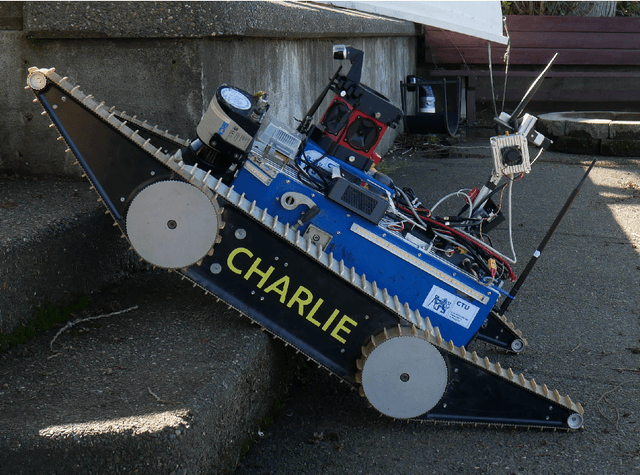

Abstract:We present a field report of the CTU-CRAS-NORLAB team from the Subterranean Challenge (SubT) organised by the Defense Advanced Research Projects Agency (DARPA). The contest seeks to advance technologies that would improve the safety and efficiency of search-and-rescue operations in GPS-denied environments. During the contest rounds, teams of mobile robots have to find specific objects while operating in environments with limited radio communication, e.g. mining tunnels, underground stations or natural caverns. We present a heterogeneous exploration robotic system of the CTU-CRAS-NORLAB team, which achieved the third rank at the SubT Tunnel and Urban Circuit rounds and surpassed the performance of all other non-DARPA-funded teams. The field report describes the team's hardware, sensors, algorithms and strategies, and discusses the lessons learned by participating in the DARPA SubT contest.

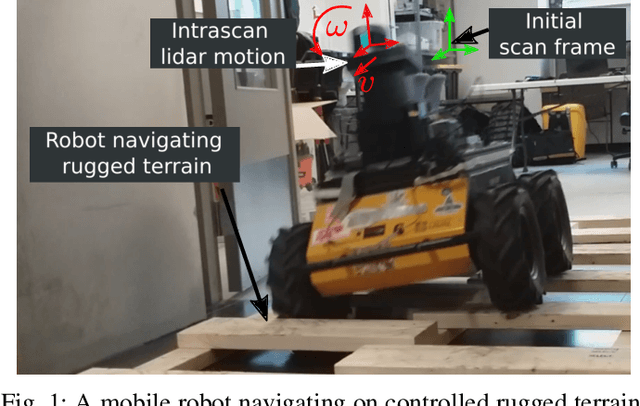

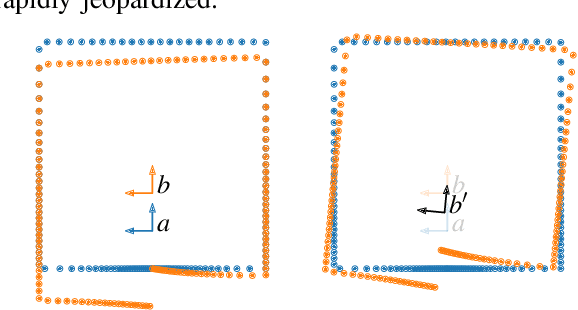

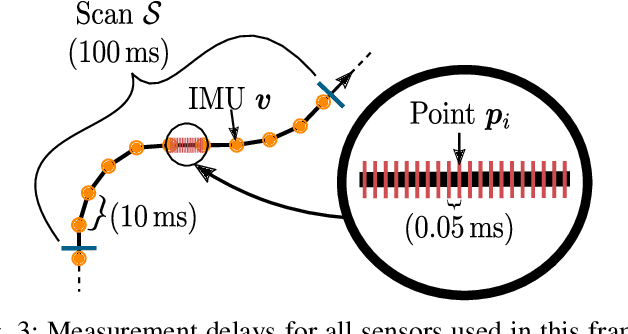

Lidar Scan Registration Robust to Extreme Motions

May 03, 2021

Abstract:Registration algorithms, such as Iterative Closest Point (ICP), have proven effective in mobile robot localization algorithms over the last decades. However, they are susceptible to failure when a robot sustains extreme velocities and accelerations. For example, this kind of motion can happen after a collision, causing a point cloud to be heavily skewed. While point cloud de-skewing methods have been explored in the past to increase localization and mapping accuracy, these methods still rely on highly accurate odometry systems or ideal navigation conditions. In this paper, we present a method taking into account the remaining motion uncertainties of the trajectory used to de-skew a point cloud along with the environment geometry to increase the robustness of current registration algorithms. We compare our method to three other solutions in a test bench producing 3D maps with peak accelerations of 200 m/s^2 and 800 rad/s^2. In these extreme scenarios, we demonstrate that our method decreases the error by 9.26 % in translation and by 21.84 % in rotation. The proposed method is generic enough to be integrated into many variants of weighted ICP without adaptation and supports localization robustness in harsher terrains.

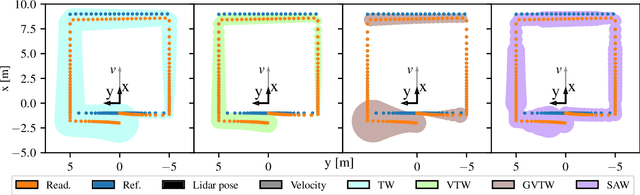

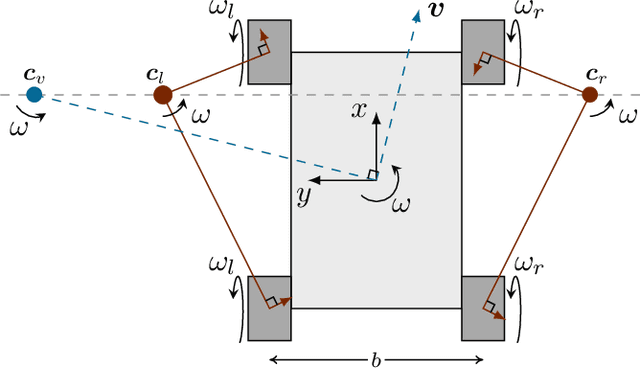

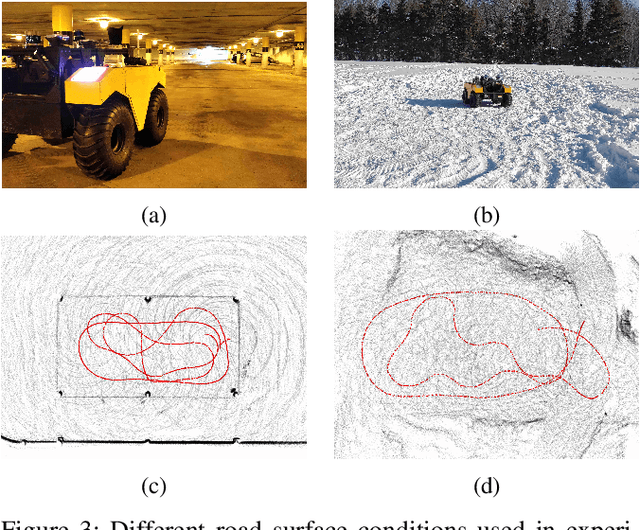

Evaluation of Skid-Steering Kinematic Models for Subarctic Environments

Apr 10, 2020

Abstract:In subarctic and arctic areas, large and heavy skid-steered robots are preferred for their robustness and ability to operate on difficult terrain. State estimation, motion control and path planning for these robots rely on accurate odometry models based on wheel velocities. However, the state-of-the-art odometry models for skid-steer mobile robots (SSMRs) have usually been tested on relatively lightweight platforms. In this paper, we focus on how these models perform when deployed on a large and heavy (590 kg) SSMR. We collected more than 2 km of data on both snow and concrete. We compare the ideal differential-drive, extended differential-drive, radius-of-curvature-based, and full linear kinematic models commonly deployed for SSMRs. Each of the models is fine-tuned by searching their optimal parameters on both snow and concrete. We then discuss the relationship between the parameters, the model tuning, and the final accuracy of the models.
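For readers unfamiliar with the simplest of the compared models, the following is a minimal sketch of the textbook ideal differential-drive kinematics, written under the assumption of no wheel slip. The function names, parameter names, and example values are hypothetical and are not taken from the paper; the extended, radius-of-curvature-based, and full linear models add terrain-dependent parameters that this sketch leaves out.

```python
import math


def ideal_diff_drive_twist(omega_left, omega_right, wheel_radius, baseline):
    """Body-frame forward speed (m/s) and yaw rate (rad/s) from wheel rates (rad/s).

    Assumes no slip: each side moves at wheel_radius * wheel rate.
    baseline is the lateral distance between left and right wheels (m).
    """
    v_left = wheel_radius * omega_left
    v_right = wheel_radius * omega_right
    v = 0.5 * (v_right + v_left)
    yaw_rate = (v_right - v_left) / baseline
    return v, yaw_rate


def integrate_pose(pose, v, yaw_rate, dt):
    """One explicit Euler step of the planar pose (x, y, theta)."""
    x, y, theta = pose
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + yaw_rate * dt,
    )


# Example with illustrative values: equal wheel rates give straight-line motion.
v, yaw_rate = ideal_diff_drive_twist(10.0, 10.0, wheel_radius=0.3, baseline=1.2)
pose = integrate_pose((0.0, 0.0, 0.0), v, yaw_rate, dt=0.5)
print(v, yaw_rate, pose)
```

On a real skid-steered platform the wheels must slip laterally to turn, so the yaw rate predicted by this ideal model generally differs from the measured one, which is the kind of gap that model parameter tuning on each terrain aims to reduce.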
