Mathieu Labussière

Blur aware metric depth estimation with multi-focus plenoptic cameras

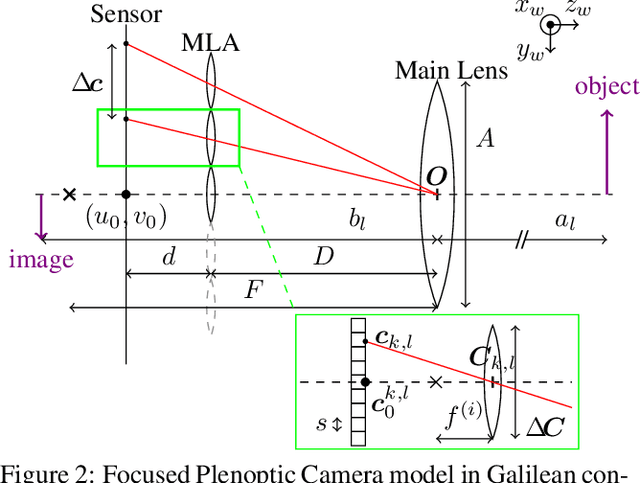

Aug 08, 2023

Abstract: While a traditional camera only captures one point of view of a scene, a plenoptic or light-field camera is able to capture spatial and angular information in a single snapshot, enabling depth estimation from a single acquisition. In this paper, we present a new metric depth estimation algorithm using only raw images from a multi-focus plenoptic camera. The proposed approach is especially suited for the multi-focus configuration where several micro-lenses with different focal lengths are used. The main goal of our blur aware depth estimation (BLADE) approach is to improve disparity estimation for defocus stereo images by integrating both correspondence and defocus cues. We thus leverage blur information where it was previously considered a drawback. We explicitly derive an inverse projection model including the defocus blur providing depth estimates up to a scale factor. A method to calibrate the inverse model is then proposed. We thus take into account depth scaling to achieve precise and accurate metric depth estimates. Our results show that introducing defocus cues improves the depth estimation. We demonstrate the effectiveness of our framework and depth scaling calibration on relative depth estimation setups and on complex real-world 3D scenes with ground truth acquired with a 3D lidar scanner.

Leveraging blur information for plenoptic camera calibration

Nov 09, 2021

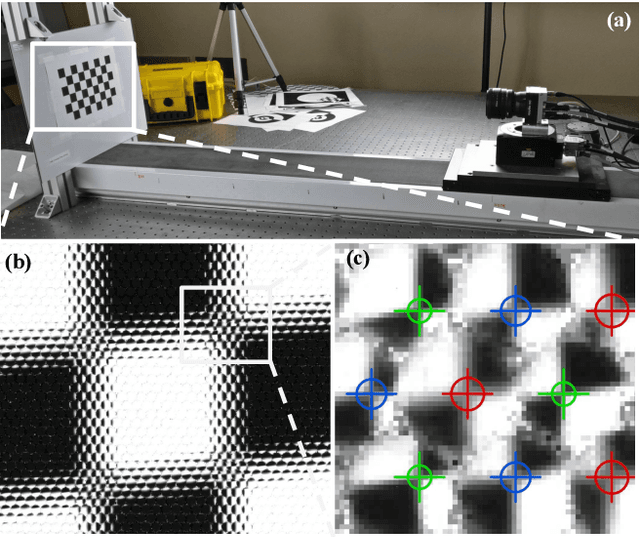

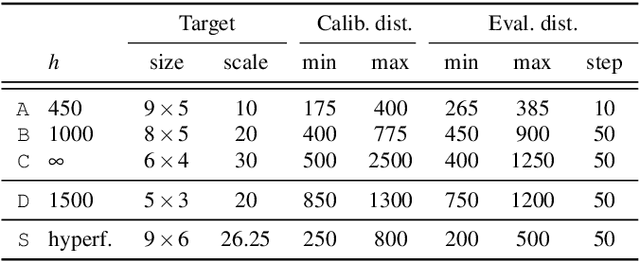

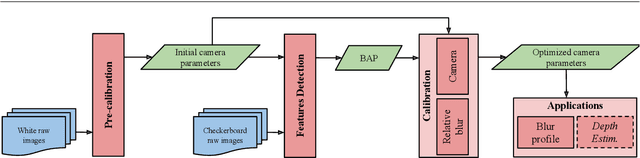

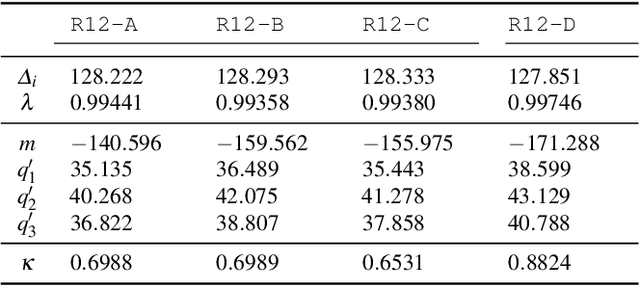

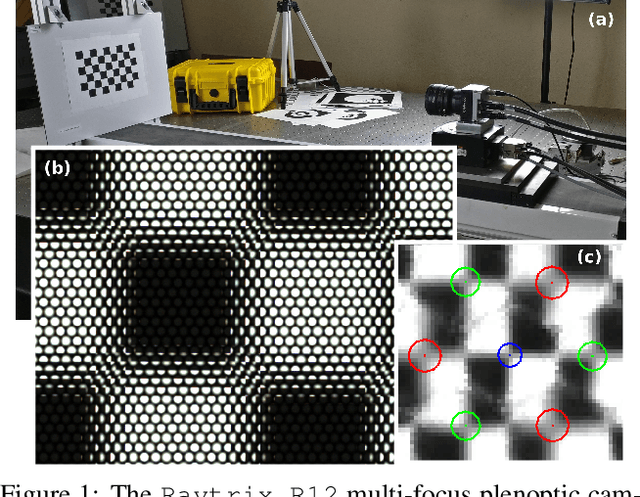

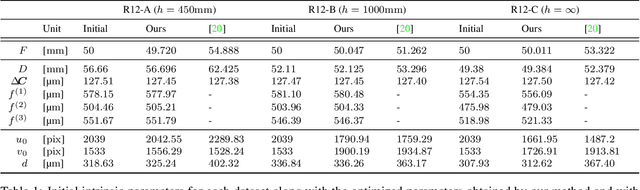

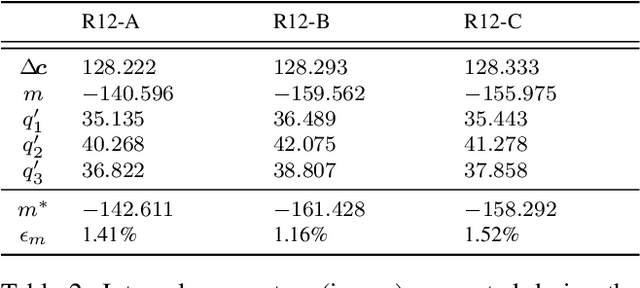

Abstract: This paper presents a novel calibration algorithm for plenoptic cameras, especially the multi-focus configuration, where several types of micro-lenses are used, using raw images only. Current calibration methods rely on simplified projection models, use features from reconstructed images, or require separate calibrations for each type of micro-lens. In the multi-focus configuration, the same part of a scene will exhibit different amounts of blur according to the micro-lens focal length. Usually, only micro-images with the smallest amount of blur are used. In order to exploit all available data, we propose to explicitly model the defocus blur in a new camera model with the help of our newly introduced Blur Aware Plenoptic (BAP) feature. First, it is used in a pre-calibration step that retrieves initial camera parameters, and second, to express a new cost function to be minimized in our single optimization process. Third, it is exploited to calibrate the relative blur between micro-images. It links the geometric blur, i.e., the blur circle, to the physical blur, i.e., the point spread function. Finally, we use the resulting blur profile to characterize the camera's depth of field. Quantitative evaluations in a controlled environment on real-world data demonstrate the effectiveness of our calibrations.
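To illustrate why lenses with different focal lengths blur the same scene point differently, here is a minimal sketch of the geometric blur circle for a generic thin lens. It is not the paper's BAP model or camera model: the function name `blur_circle_diameter`, its parameters, and the numeric values are all hypothetical, chosen only for illustration.

```python
def blur_circle_diameter(f, aperture, sensor_dist, object_dist):
    """Geometric blur circle diameter for an ideal thin lens.

    All arguments share one length unit (e.g. millimetres):
      f           -- focal length
      aperture    -- aperture diameter
      sensor_dist -- distance from lens to sensor
      object_dist -- distance from lens to the object point
    """
    if object_dist <= f:
        raise ValueError("object must lie beyond the focal length")
    # Thin-lens equation 1/f = 1/a + 1/a' gives the in-focus image distance a'.
    image_dist = f * object_dist / (object_dist - f)
    # Similar triangles: the light cone converging at a' is cut by the sensor plane.
    return aperture * abs(sensor_dist - image_dist) / image_dist


# Hypothetical values: same object, aperture and sensor, three focal lengths.
for focal in (40.0, 50.0, 52.0):
    d = blur_circle_diameter(focal, 25.0, 55.0, 1000.0)
    print(f"f = {focal} -> blur circle diameter = {d:.3f}")
```

In this toy setup, each focal length yields a different blur circle for the same object distance, which is the kind of variation the abstract proposes to model rather than discard.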

Blur Aware Calibration of Multi-Focus Plenoptic Camera

Apr 16, 2020

Abstract: This paper presents a novel calibration algorithm for Multi-Focus Plenoptic Cameras (MFPCs) using raw images only. The design of such cameras is usually complex and relies on precise placement of optical elements. Several calibration procedures have been proposed to retrieve the camera parameters, but they rely on simplified models, on reconstructed images to extract features, or on multiple calibrations when several types of micro-lenses are used. Considering blur information, we propose a new Blur Aware Plenoptic (BAP) feature. It is first exploited in a pre-calibration step that retrieves initial camera parameters, and second to express a new cost function for our single optimization process. The effectiveness of our calibration method is validated by quantitative and qualitative experiments.

Lidar Measurement Bias Estimation via Return Waveform Modelling in a Context of 3D Mapping

Oct 03, 2018

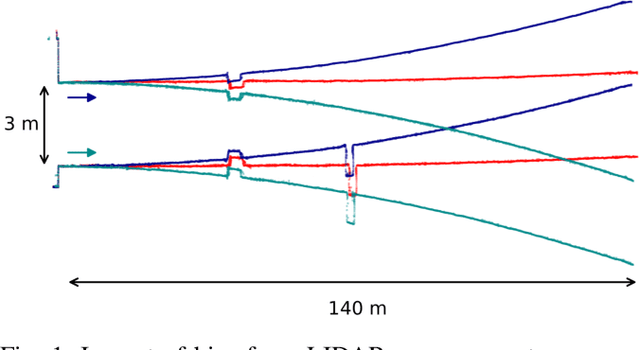

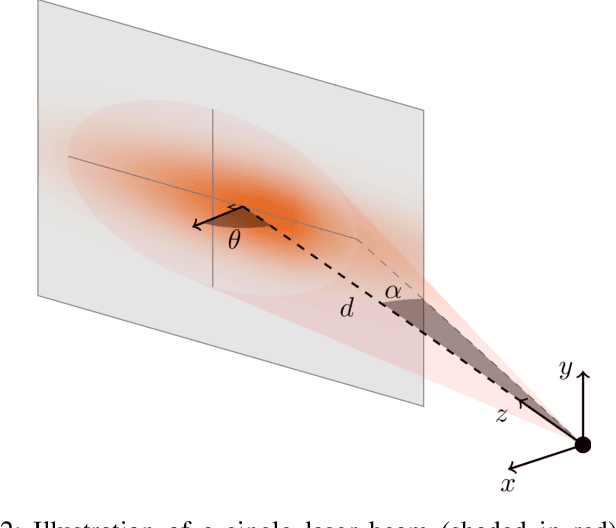

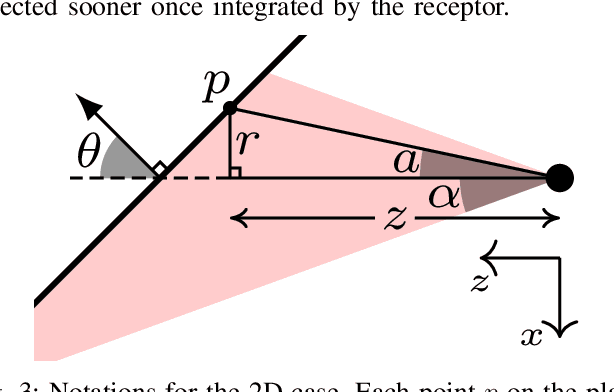

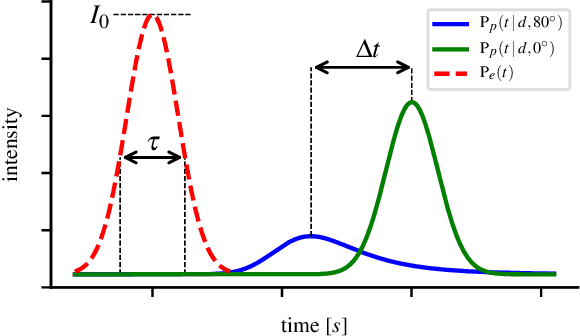

Abstract: In the context of 3D mapping, it is very important to get accurate measurements from sensors. In particular, the noise on Light Detection And Ranging (LIDAR) measurements is typically modelled as a zero-mean Gaussian distribution. We show that this assumption leads to predictable localisation drifts, especially when a bias related to measuring obstacles with high incidence angles is not taken into consideration. Moreover, we present a way to physically understand and model this bias, which generalises to multiple sensors. Using an experimental setup, we measured the bias of the Sick LMS151, Velodyne HDL-32E, and Robosense RS-LiDAR-16 as a function of depth and incidence angle, and showed that the bias can go up to 20 cm for high incidence angles. We then used our models to remove the bias from the measurements, leading to more accurate maps and a reduced localisation drift.
