From Perception to Decision: A Data-driven Approach to End-to-end Motion Planning for Autonomous Ground Robots

Paper and Code

Nov 06, 2018

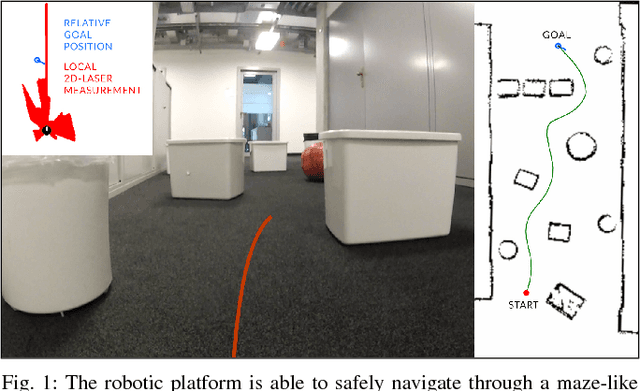

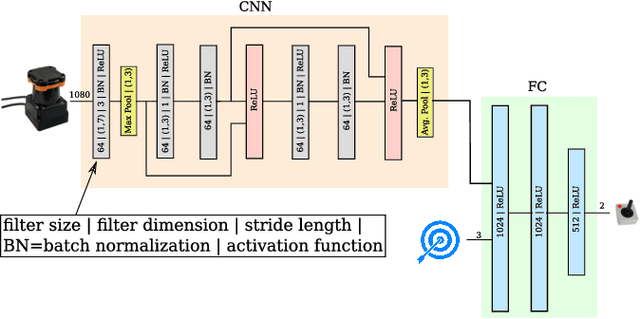

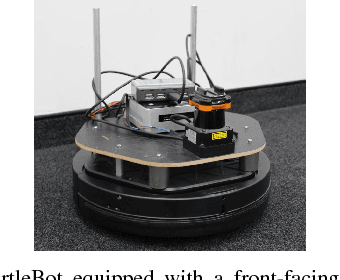

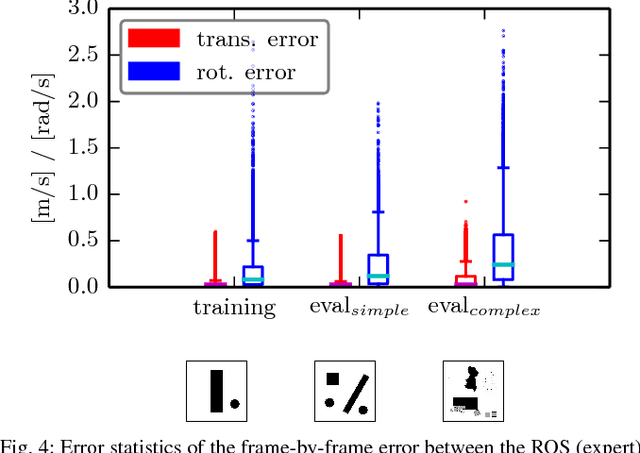

Learning from demonstration for motion planning is an ongoing research topic. In this paper, we present a model that learns the complex mapping from raw 2D laser range findings and a target position to the steering commands required by the robot. To the best of our knowledge, this work presents the first approach that learns a target-oriented end-to-end navigation model for a robotic platform. The model is trained in a supervised manner on expert demonstrations generated in simulation with an existing motion planner. We demonstrate that the learned navigation model transfers directly to previously unseen virtual and, more interestingly, real-world environments. It can safely navigate the robot through obstacle-cluttered environments to reach the provided targets. We present an extensive qualitative and quantitative evaluation of the neural network-based motion planner and compare it to a grid-based global approach, both in simulation and in real-world experiments.
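The supervised setup described in the abstract (laser scan plus target position in, steering command out, trained on expert demonstrations) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the beam count `N_BEAMS`, the `(distance, bearing)` target encoding, the `toy_expert` function standing in for the existing motion planner, and the linear least-squares model standing in for the paper's neural network. It is not the authors' implementation.

```python
import numpy as np

N_BEAMS = 36  # hypothetical number of laser beams per scan


def make_features(scan, target):
    """Concatenate laser ranges, the relative target, and a bias term."""
    return np.concatenate([scan, target, [1.0]])


def toy_expert(scan, target):
    """Hypothetical stand-in for the expert planner (not the paper's planner).

    Returns (linear velocity, angular velocity): slow down near the target
    and near obstacles, and turn toward the target bearing.
    """
    distance, bearing = target
    linear = min(1.0, distance) * min(1.0, scan.min())
    angular = bearing
    return np.array([linear, angular])


def collect_demonstrations(n_samples, rng):
    """Sample random scans and targets and label them with the expert."""
    X, Y = [], []
    for _ in range(n_samples):
        scan = rng.uniform(0.2, 5.0, size=N_BEAMS)
        target = np.array([rng.uniform(0.0, 5.0), rng.uniform(-np.pi, np.pi)])
        X.append(make_features(scan, target))
        Y.append(toy_expert(scan, target))
    return np.array(X), np.array(Y)


def fit_policy(X, Y):
    """Supervised training step: least-squares fit of commands to features.

    A linear model is used only to keep the sketch short; the paper uses
    a neural network.
    """
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return W


def policy(W, scan, target):
    """Map a scan and a target directly to a steering command."""
    return make_features(scan, target) @ W


rng = np.random.default_rng(0)
X, Y = collect_demonstrations(500, rng)
W = fit_policy(X, Y)
```

At run time, the learned policy would be queried once per control step with the latest scan and the current relative target, for example `policy(W, scan, target)`. A linear model can only approximate the nonlinear velocity rule of the toy expert, which is one reason a more expressive model is needed for this kind of mapping.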
