Valentina Cavinato

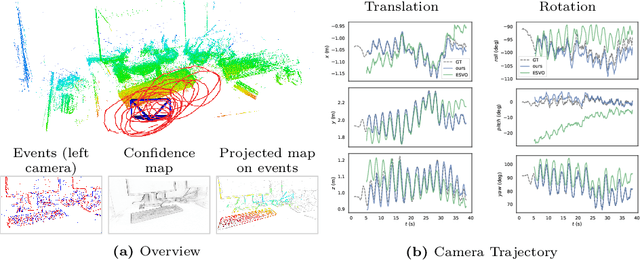

ES-PTAM: Event-based Stereo Parallel Tracking and Mapping

Aug 28, 2024

Abstract: Visual Odometry (VO) and SLAM are fundamental components for spatial perception in mobile robots. Despite enormous progress in the field, current VO/SLAM systems are limited by their sensors' capability. Event cameras are novel visual sensors that offer advantages to overcome the limitations of standard cameras, enabling robots to expand their operating range to challenging scenarios, such as high-speed motion and high dynamic range illumination. We propose a novel event-based stereo VO system by combining two ideas: a correspondence-free mapping module that estimates depth by maximizing ray density fusion and a tracking module that estimates camera poses by maximizing edge-map alignment. We evaluate the system comprehensively on five real-world datasets, spanning a variety of camera types (manufacturers and spatial resolutions) and scenarios (driving, flying drone, hand-held, egocentric, etc.). The quantitative and qualitative results demonstrate that our method outperforms the state of the art on the majority of the test sequences by a margin, e.g., with trajectory error reductions of 45% on the RPG dataset, 61% on the DSEC dataset, and 21% on the TUM-VIE dataset. To benefit the community and foster research on event-based perception systems, we release the source code and results: https://github.com/tub-rip/ES-PTAM

* 17 pages, 7 figures, 4 tables, https://github.com/tub-rip/ES-PTAM

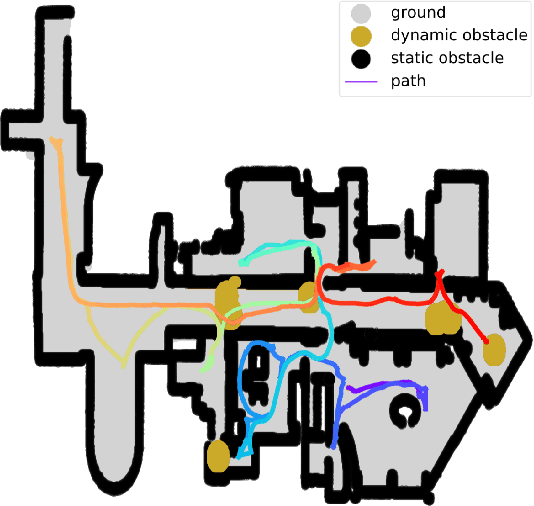

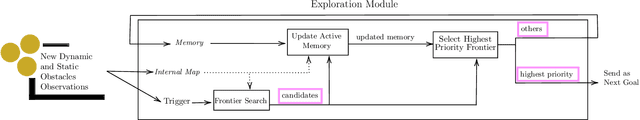

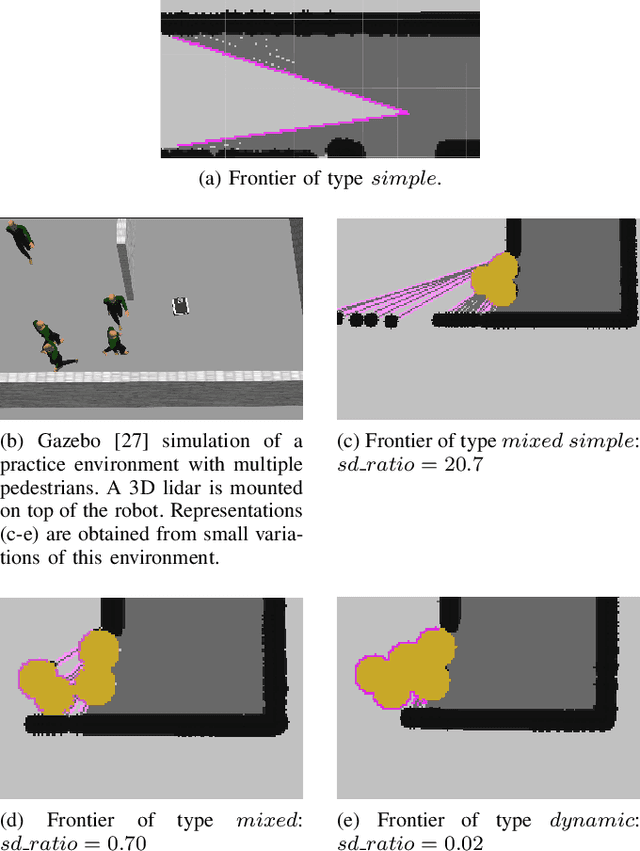

Dynamic-Aware Autonomous Exploration in Populated Environments

Apr 06, 2021

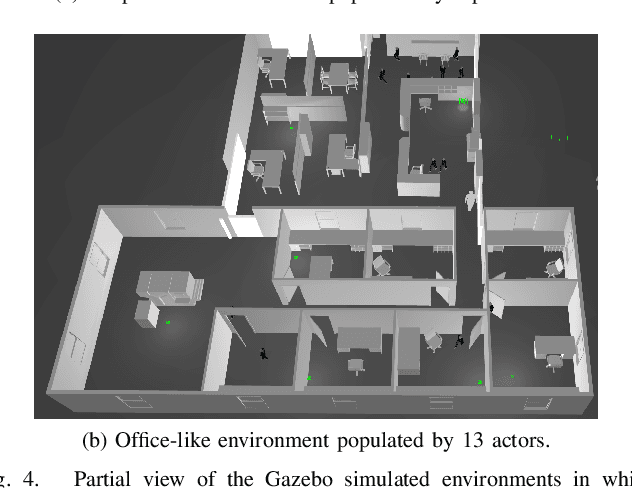

Abstract: Autonomous exploration allows mobile robots to navigate initially unknown territories in order to build complete representations of their environments. In many real-life applications, environments contain dynamic obstacles that can compromise the exploration process by temporarily blocking passages, narrow paths, exits, or entrances to other areas yet to be explored. In this work, we formulate a novel exploration strategy capable of explicitly handling dynamic obstacles, thus leading to complete and reliable exploration outcomes in populated environments. We introduce the concept of dynamic frontiers to represent unknown regions at the boundaries with dynamic obstacles, together with a cost function that allows the robot to make informed decisions about when to revisit such frontiers. We evaluate the proposed strategy in challenging simulated environments and show that it outperforms a state-of-the-art baseline in these populated scenarios.
