Tran Nguyen Le

Interactive Identification of Granular Materials using Force Measurements

Mar 26, 2024

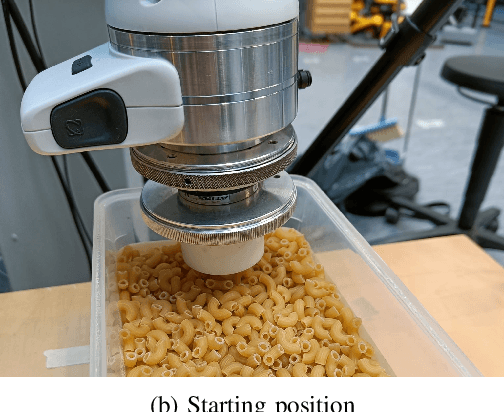

Abstract: The ability to identify granular materials facilitates the emergence of various new applications in robotics, ranging from cooking at home to truck loading at mining sites. However, granular material identification remains a challenging and underexplored area. In this work, we present a novel interactive material identification framework that enables robots to identify a wide range of granular materials using only a force-torque sensor for perception. Our framework, comprising interactive exploration, feature extraction, and classification stages, prioritizes simplicity and transparency for seamless integration into various manipulation pipelines. We evaluate the proposed approach through extensive experiments with a real-world dataset comprising 11 granular materials, which we also make publicly available. Additionally, we conducted a comprehensive qualitative analysis of the dataset to offer deeper insights into its nature, aiding future development. Our results show that the proposed method is capable of accurately identifying a wide range of granular materials solely relying on force measurements obtained from direct interaction with the materials. Code and dataset are available at: https://irobotics.aalto.fi/indentify_granular/.
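As a rough illustration of the feature extraction and classification stages named in the abstract, the sketch below summarizes a one-dimensional force trace with a few statistics and assigns it to the nearest class centroid. The abstract does not specify the features or the classifier, so the statistics, the nearest-centroid rule, the function names, the labels `material_a` and `material_b`, and all force values are assumptions made for illustration only.

```python
from math import dist
from statistics import mean, pstdev


def extract_features(forces):
    """Summarize one force trace (samples in newtons) as a 4-element feature vector."""
    # Mean absolute change between consecutive samples: a crude roughness measure.
    diffs = [abs(b - a) for a, b in zip(forces, forces[1:])]
    return (mean(forces), pstdev(forces), max(forces), mean(diffs))


def fit_centroids(labelled_traces):
    """Map each label to the mean feature vector of its training traces."""
    centroids = {}
    for label, traces in labelled_traces.items():
        feats = [extract_features(t) for t in traces]
        centroids[label] = tuple(mean(col) for col in zip(*feats))
    return centroids


def classify(forces, centroids):
    """Return the label whose centroid is closest (Euclidean) to the trace's features."""
    f = extract_features(forces)
    return min(centroids, key=lambda label: dist(f, centroids[label]))


# Made-up force traces for two hypothetical materials (not from the paper's dataset).
training = {
    "material_a": [[1.0, 1.1, 1.0, 1.2, 1.1], [0.9, 1.0, 1.1, 1.0, 1.0]],
    "material_b": [[3.0, 4.5, 2.8, 5.0, 3.2], [3.5, 2.5, 4.8, 3.0, 4.4]],
}
centroids = fit_centroids(training)
print(classify([1.0, 1.2, 0.9, 1.1, 1.0], centroids))
```

A nearest-centroid rule is used here only because it keeps every step inspectable; any classifier that accepts a fixed-length feature vector could take its place.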

Dynamic Manipulation of Deformable Objects using Imitation Learning with Adaptation to Hardware Constraints

Mar 19, 2024

Abstract: Imitation Learning (IL) is a promising paradigm for learning dynamic manipulation of deformable objects since it does not depend on difficult-to-create accurate simulations of such objects. However, the translation of motions demonstrated by a human to a robot is a challenge for IL, due to differences in the embodiments and the robot's physical limits. These limits are especially relevant in dynamic manipulation where high velocities and accelerations are typical. To address this problem, we propose a framework that first maps a dynamic demonstration into a motion that respects the robot's constraints using a constrained Dynamic Movement Primitive. Second, the resulting object state is further refined by quasi-static motions to optimize task performance metrics. This allows both efficiently altering the object state by dynamic motions and stable small-scale refinements. We evaluate the framework in the challenging task of bag opening, designing the system BILBO: Bimanual dynamic manipulation using Imitation Learning for Bag Opening. Our results show that BILBO can successfully open a wide range of crumpled bags, using a demonstration with a single bag. See supplementary material at https://sites.google.com/view/bilbo-bag.
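To give a feel for how a Dynamic Movement Primitive can be slowed down to respect a velocity limit, the sketch below integrates the standard one-dimensional DMP transformation system and stretches its time constant `tau` until the peak velocity fits under a bound. The abstract does not describe the constrained DMP formulation used in BILBO, so this uniform temporal scaling is a stand-in chosen for illustration. The learned forcing term is omitted, and the gains, limits, and function names are assumptions.

```python
def rollout(y0, goal, tau, dt=0.001, alpha=25.0, beta=6.25):
    """Euler-integrate tau*dz = alpha*(beta*(goal - y) - z), tau*dy = z.

    The forcing term that would encode a demonstrated shape is omitted,
    so the motion is a critically damped reach from y0 to goal.
    Returns positions and velocities over a duration of 1.5 * tau.
    """
    y, z = y0, 0.0
    ys, vs = [y], [0.0]
    for _ in range(int(1.5 * tau / dt)):
        z += alpha * (beta * (goal - y) - z) / tau * dt
        v = z / tau  # velocity of the generated motion
        y += v * dt
        ys.append(y)
        vs.append(v)
    return ys, vs


def tau_for_velocity_limit(y0, goal, tau, v_max):
    """Stretch tau so the peak speed is roughly at or below v_max (assumed limit).

    Velocities of this system scale as 1/tau, so a single rollout at the
    original tau is enough to compute the required slowdown factor.
    """
    _, vs = rollout(y0, goal, tau)
    peak = max(abs(v) for v in vs)
    return tau * max(1.0, peak / v_max)


# Hypothetical numbers: a 1.0 unit reach, nominal tau of 0.5 s, speed limit of 2.0 units/s.
new_tau = tau_for_velocity_limit(0.0, 1.0, tau=0.5, v_max=2.0)
ys, vs = rollout(0.0, 1.0, new_tau)
print(round(new_tau, 2), round(max(abs(v) for v in vs), 2))
```

Uniform scaling slows the whole motion, including parts that were already within limits; a method that only reshapes the violating segments would preserve more of the demonstrated dynamics.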

Online Learning of Human Constraints from Feedback in Shared Autonomy

Mar 05, 2024

Abstract: Real-time collaboration with humans poses challenges due to the different behavior patterns of humans resulting from diverse physical constraints. Existing works typically focus on learning safety constraints for collaboration, or how to divide and distribute the subtasks between the participating agents to carry out the main task. In contrast, we propose to learn a human constraints model that, in addition, considers the diverse behaviors of different human operators. We consider a type of collaboration in a shared-autonomy fashion, where a human operator and an assistive robot act simultaneously in the same task space and affect each other's actions. The task of the assistive agent is to augment the skill of humans to perform a shared task by supporting humans as much as possible, both in terms of reducing the workload and minimizing the discomfort for the human operator. Therefore, we propose an augmentative assistant agent capable of learning and adapting to human physical constraints, aligning its actions with the ergonomic preferences and limitations of the human operator.

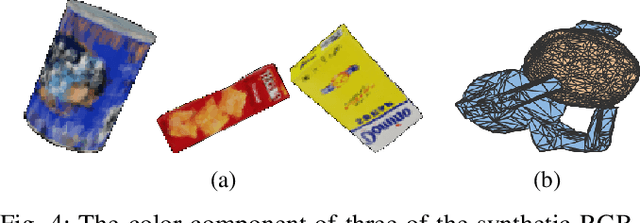

SPONGE: Sequence Planning with Deformable-ON-Rigid Contact Prediction from Geometric Features

Mar 24, 2023

Abstract: Planning robotic manipulation tasks, especially those that involve interaction between deformable and rigid objects, is challenging due to the complexity in predicting such interactions. We introduce SPONGE, a sequence planning pipeline powered by a deep learning-based contact prediction model for contacts between deformable and rigid bodies under interactions. The contact prediction model is trained on synthetic data generated by a developed simulation environment to learn the mapping from a point-cloud observation of a rigid target object and the pose of a deformable tool to a 3D representation of the contact points between the two bodies. We experimentally evaluated the proposed approach for a dish cleaning task both in simulation and on a real Panda robot with real-world objects. The experimental results demonstrate that in both scenarios the proposed planning pipeline is capable of generating high-quality trajectories that can accomplish the task by achieving more than 90% area coverage on different objects of varying sizes and curvatures while minimizing travel distance. Code and video are available at: https://irobotics.aalto.fi/sponge/.

Constrained Generative Sampling of 6-DoF Grasps

Feb 21, 2023

Abstract: Most state-of-the-art data-driven grasp sampling methods propose stable and collision-free grasps uniformly on the target object. For bin-picking, executing any of those grasps is sufficient. However, for completing specific tasks, such as squeezing out liquid from a bottle, we want the grasp to be on a specific part of the object body while avoiding other locations, such as the cap. In this work, we present a generative grasp sampling network, VCGS, capable of constrained 6-Degrees-of-Freedom (DoF) grasp sampling. In addition, we also curate a new dataset designed to train and evaluate methods for constrained grasping. The new dataset, called CONG, consists of over 14 million training samples of synthetically rendered point clouds and grasps at random target areas on 2889 objects. VCGS is benchmarked against GraspNet, a state-of-the-art unconstrained grasp sampler, in simulation and on a real robot. The results demonstrate that VCGS achieves a 10-15% higher grasp success rate than the baseline while being 2-3 times as sample efficient.

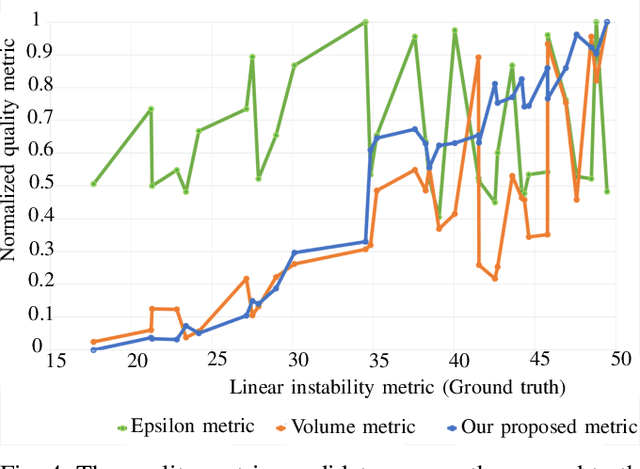

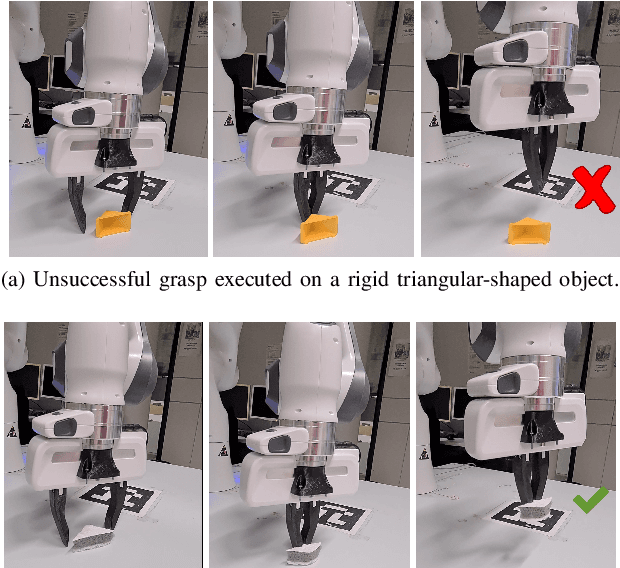

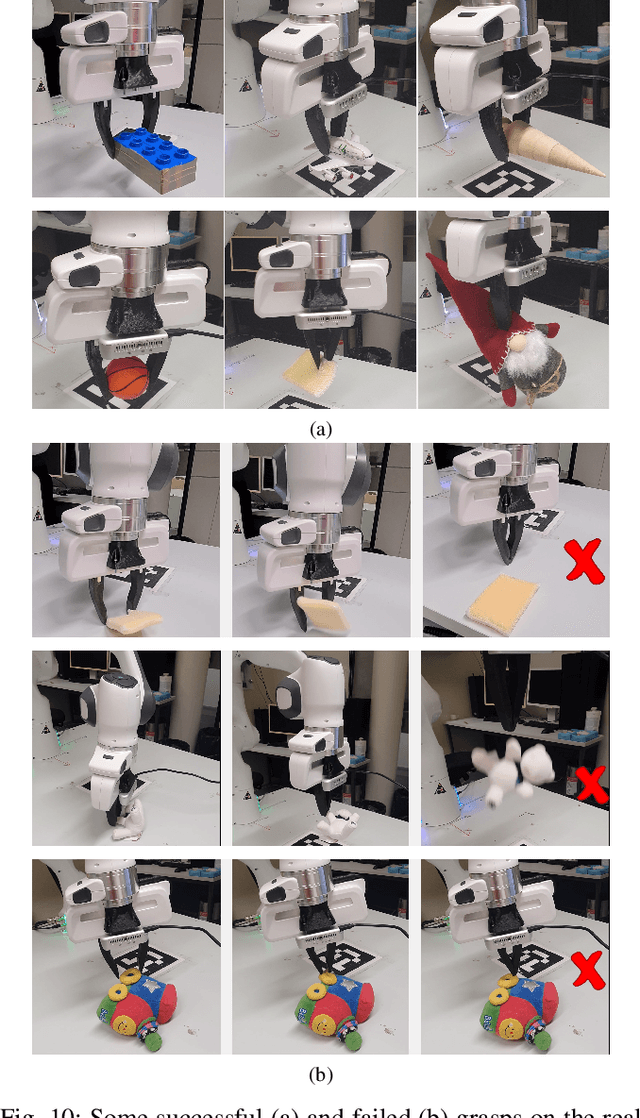

A Novel Simulation-Based Quality Metric for Evaluating Grasps on 3D Deformable Objects

Mar 23, 2022

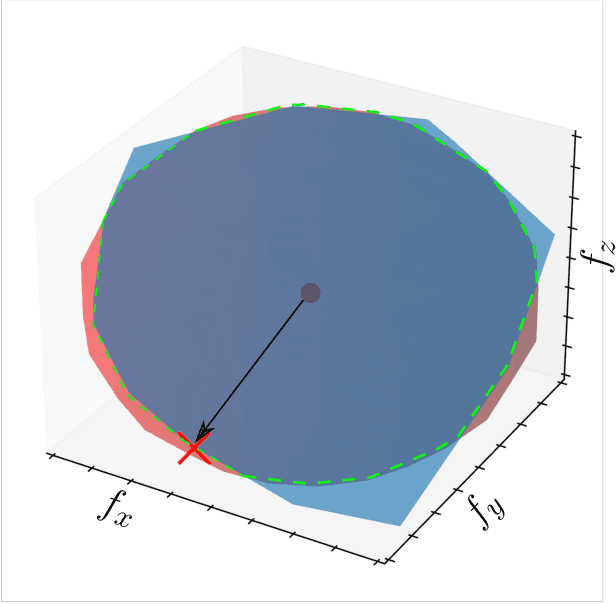

Abstract: Evaluation of grasps on deformable 3D objects is a little-studied problem, even though the applicability of rigid-object grasp quality measures to deformable objects remains an open question. A central issue with most quality measures is their dependence on contact points, which for deformable objects depend on the deformations. This paper proposes a grasp quality measure for deformable objects that uses information about object deformation to calculate the grasp quality. Grasps are evaluated by simulating the deformations during grasping and predicting the contacts between the gripper and the grasped object. The contact information is then used as input for a new grasp quality metric to quantify the grasp quality. The approach is benchmarked against two classical rigid-body quality metrics on over 600 grasps in Isaac Gym simulation and over 50 real-world grasps. Experimental results show an average improvement of 18% in the grasp success rate for deformable objects compared to the classical rigid-body quality metrics.

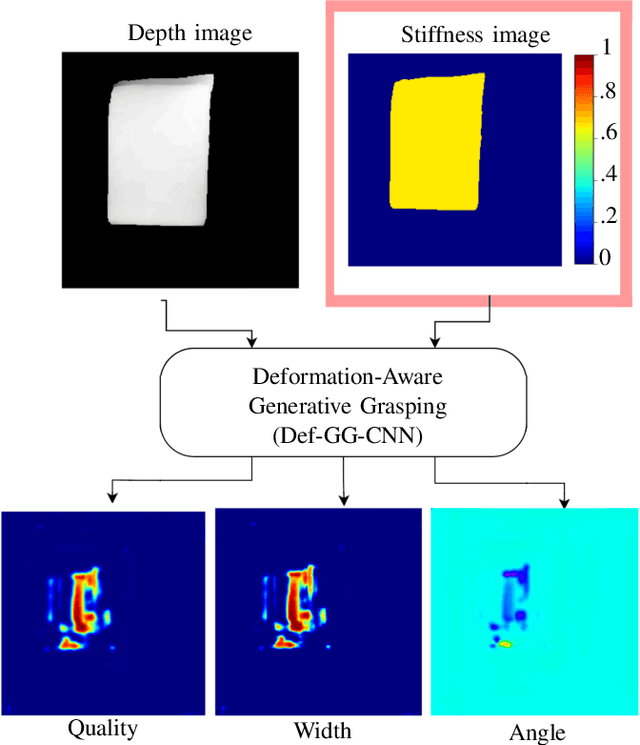

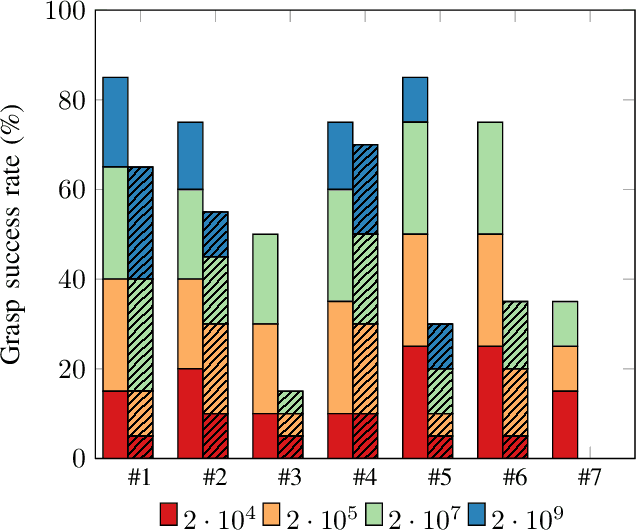

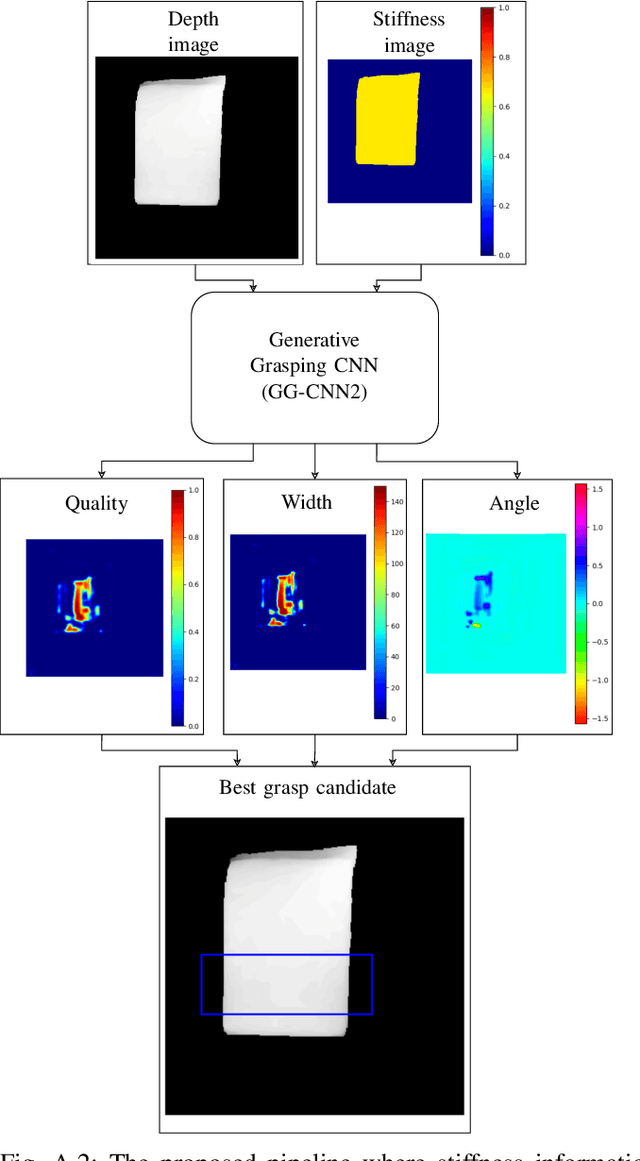

Deformation-Aware Data-Driven Grasp Synthesis

Sep 11, 2021

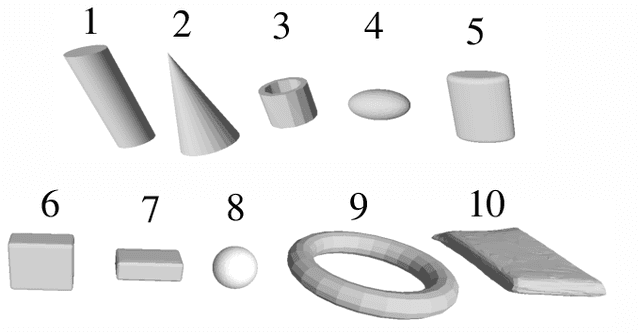

Abstract: Grasp synthesis for 3D deformable objects remains a little-explored topic, with most works aiming to minimize deformations. However, deformations are not necessarily harmful: humans are, for example, able to exploit deformations to generate new potential grasps. How to achieve that on a robot, however, remains an open question. This paper proposes an approach that uses object stiffness information in addition to depth images for synthesizing high-quality grasps. We achieve this by incorporating object stiffness as an additional input to a state-of-the-art deep grasp planning network. We also curate a new synthetic dataset of grasps on objects of varying stiffness using the Isaac Gym simulator for training the network. We experimentally validate and compare our proposed approach against the case where we do not incorporate object stiffness on a total of 2800 grasps in simulation and 420 grasps on a real Franka Emika Panda. The experimental results show significant improvement in grasp success rate using the proposed approach on a wide range of objects with varying shapes, sizes, and stiffness. Furthermore, we demonstrate that the approach can generate different grasping strategies for different stiffness values, such as pinching for soft objects and caging for hard objects. Together, the results clearly show the value of incorporating stiffness information when grasping objects of varying stiffness.

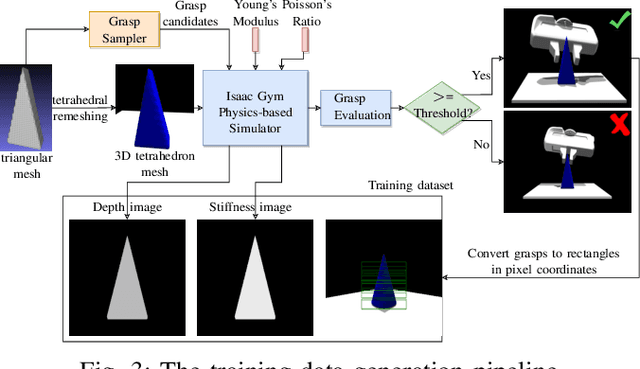

Towards synthesizing grasps for 3D deformable objects with physics-based simulation

Jul 19, 2021

Abstract: Grasping deformable objects is not well researched due to the complexity in modelling and simulating the dynamic behavior of such objects. However, with the rapid development of physics-based simulators that support soft bodies, the research gap between rigid and deformable objects is getting smaller. To leverage the capability of such simulators and to challenge the assumption that has guided robotic grasping research so far, i.e., object rigidity, we propose a deep-learning-based approach that generates stiffness-dependent grasps. Our network is trained on purely synthetic data generated from a physics-based simulator. The same simulator is also used to evaluate the trained network. The results show improvement in terms of grasp ranking and grasp success rate. Furthermore, our network can adapt the grasps based on the stiffness. We are currently validating the proposed approach on a larger test dataset in simulation and on a physical robot.

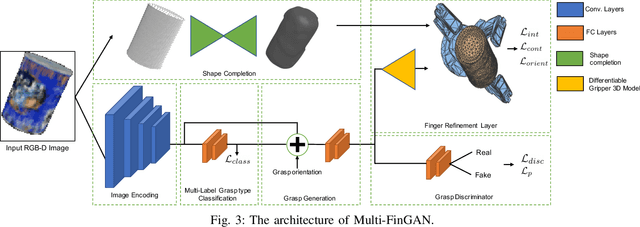

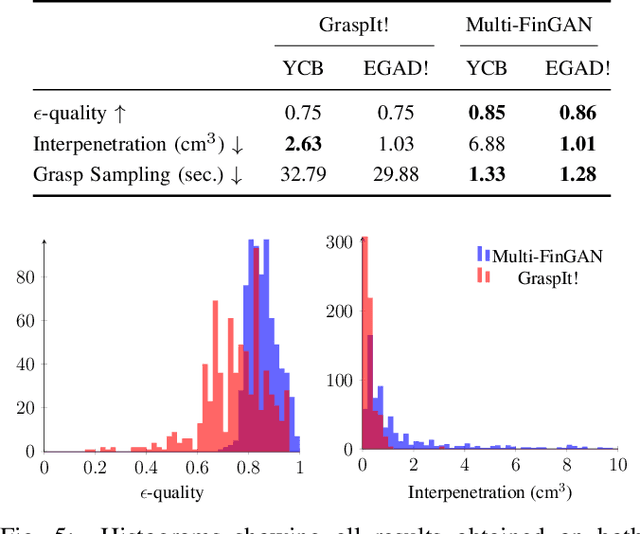

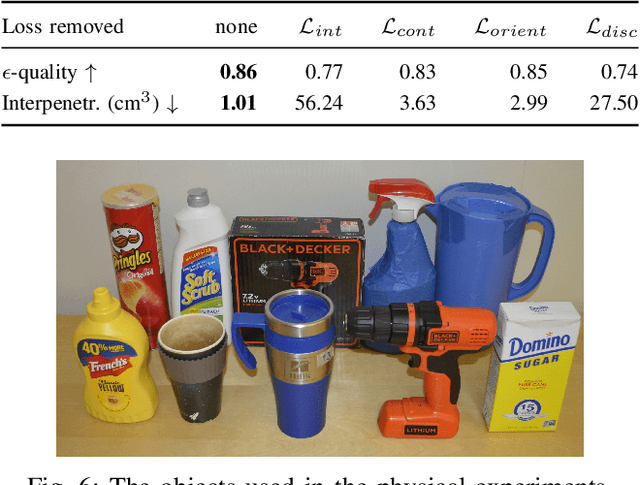

Multi-FinGAN: Generative Coarse-To-Fine Sampling of Multi-Finger Grasps

Dec 17, 2020

Abstract: While a large number of methods exist for manipulating rigid objects with parallel-jaw grippers, grasping with multi-finger robotic hands remains a largely unexplored research topic. Reasoning about and planning collision-free trajectories over the additional degrees of freedom of several fingers represents an important challenge that, so far, involves computationally costly and slow processes. In this work, we present Multi-FinGAN, a fast generative multi-finger grasp sampling method that synthesizes high-quality grasps directly from RGB-D images in about a second. We achieve this by training, in an end-to-end fashion, a coarse-to-fine model composed of a classification network that distinguishes grasp types according to a specific taxonomy and a refinement network that produces refined grasp poses and joint angles. We experimentally validate and benchmark our method against standard grasp-sampling methods on 790 grasps in simulation and 20 grasps on a real Franka Emika Panda. All experimental results using our method show consistent improvements both in terms of grasp quality metrics and grasp success rate. Remarkably, our approach is up to 20-30 times faster than the baseline, a significant improvement that opens the door to feedback-based grasp re-planning and task-informative grasping.

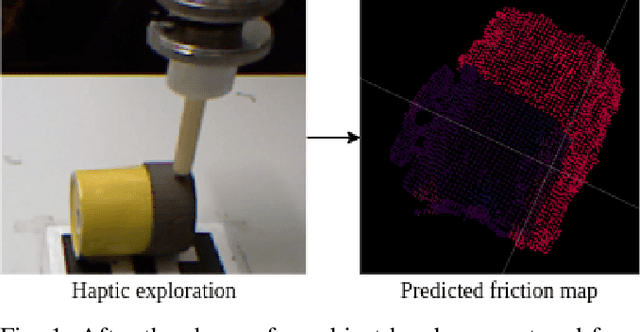

Probabilistic Surface Friction Estimation Based on Visual and Haptic Measurements

Oct 16, 2020

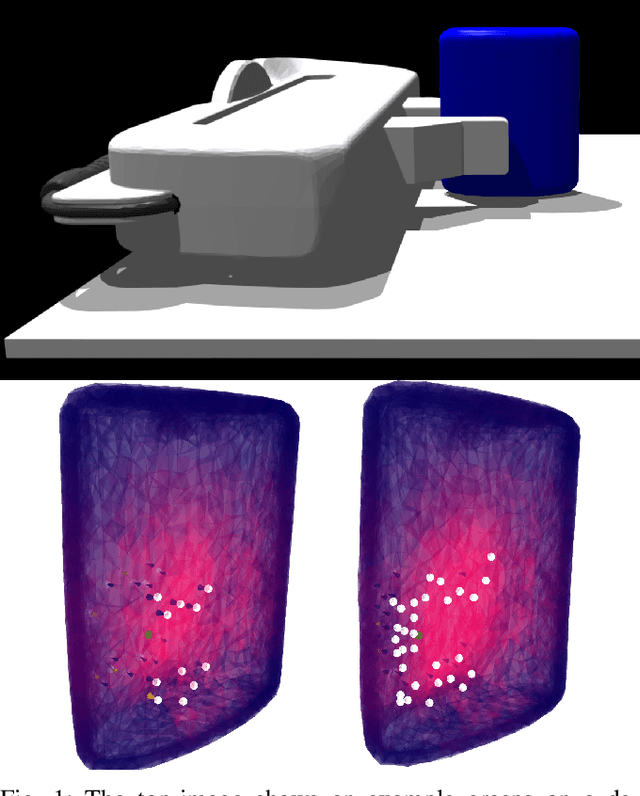

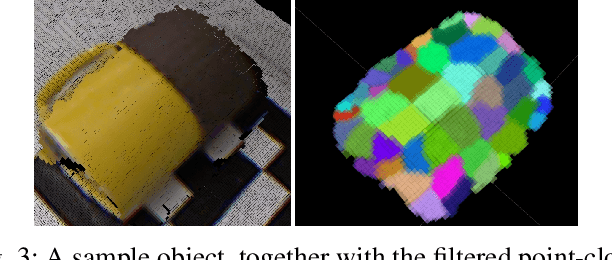

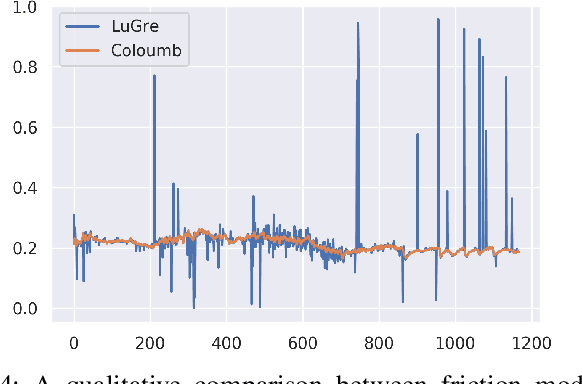

Abstract: Accurately modeling local surface properties of objects is crucial to many robotic applications, from grasping to material recognition. Surface properties like friction are, however, difficult to estimate, as visual observation of the object does not convey enough information about these properties. In contrast, haptic exploration is time-consuming, as it only provides information relevant to the explored parts of the object. In this work, we propose a joint visuo-haptic object model that enables the estimation of the surface friction coefficient over an entire object by exploiting the correlation of visual and haptic information, together with a limited haptic exploration by a robotic arm. We demonstrate the validity of the proposed method by showing its ability to estimate varying friction coefficients on a range of real multi-material objects. Furthermore, we illustrate how the estimated friction coefficients can improve grasping success rate by guiding a grasp planner toward high-friction areas.
