Jiri Matas

Koo-Fu CLIP: Closed-Form Adaptation of Vision-Language Models via Fukunaga-Koontz Linear Discriminant Analysis

Feb 01, 2026

Abstract:Vision-language models such as CLIP provide powerful general-purpose representations, but their raw embeddings are not optimized for supervised classification, often exhibiting limited class separation and excessive dimensionality. We propose Koo-Fu CLIP, a supervised CLIP adaptation method based on Fukunaga-Koontz Linear Discriminant Analysis, which operates in a whitened embedding space to suppress within-class variation and enhance between-class discrimination. The resulting closed-form linear projection reshapes the geometry of CLIP embeddings, improving class separability while performing effective dimensionality reduction, and provides a lightweight and efficient adaptation of CLIP representations. Across large-scale ImageNet benchmarks, nearest visual prototype classification in the Koo-Fu CLIP space improves top-1 accuracy from 75.1% to 79.1% on ImageNet-1K, with consistent gains persisting as the label space expands to 14K and 21K classes. The method supports substantial compression of up to 10-12x with little or no loss in accuracy, enabling efficient large-scale classification and retrieval.
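
For readers unfamiliar with discriminant analysis in a whitened space, the following is the textbook LDA-by-whitening construction followed by nearest-prototype classification. It is a sketch only and may differ from the paper's exact Fukunaga-Koontz formulation. All symbols are notation assumed here: $x_i$ is an embedding with label $y_i$, $\mu_c$ and $n_c$ are the mean and size of class $c$, $\mu$ is the global mean, and $S_w$ is assumed invertible (in practice a small ridge term is often added).

$$
\begin{aligned}
S_w &= \sum_{c=1}^{C} \sum_{i:\, y_i = c} (x_i - \mu_c)(x_i - \mu_c)^\top, &
S_b &= \sum_{c=1}^{C} n_c\, (\mu_c - \mu)(\mu_c - \mu)^\top, \\
P &= S_w^{-1/2}, &
P\, S_b\, P &= U \Lambda U^\top, \\
A &= U_k^\top P, &
\hat{y}(x) &= \arg\min_{c} \lVert A x - A \mu_c \rVert_2 .
\end{aligned}
$$

Because $P S_w P = I$, the within-class scatter is isotropic after whitening, so the eigenvectors of $P S_b P$ with the largest eigenvalues are the directions of greatest between-class spread relative to within-class spread. Keeping only the top $k < d$ columns $U_k$ of $U$ gives the dimensionality reduction, and the whole projection $A$ is obtained in closed form, without iterative training.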

BBoxMaskPose v2: Expanding Mutual Conditioning to 3D

Jan 21, 2026

Abstract:Most 2D human pose estimation benchmarks are nearly saturated, with the exception of crowded scenes. We introduce PMPose, a top-down 2D pose estimator that incorporates a probabilistic formulation and mask conditioning. PMPose improves crowded pose estimation without sacrificing performance on standard scenes. Building on this, we present BBoxMaskPose v2 (BMPv2), which integrates PMPose and an enhanced SAM-based mask refinement module. BMPv2 surpasses the state of the art by 1.5 average precision (AP) points on COCO and 6 AP points on OCHuman, becoming the first method to exceed 50 AP on OCHuman. We demonstrate that BMP's 2D prompting of a 3D model improves 3D pose estimation in crowded scenes and that advances in 2D pose quality directly benefit 3D estimation. Results on the new OCHuman-Pose dataset show that multi-person performance is more affected by pose prediction accuracy than by detection. The code, models, and data are available at https://MiraPurkrabek.github.io/BBox-Mask-Pose/.

SAM-pose2seg: Pose-Guided Human Instance Segmentation in Crowds

Jan 16, 2026

Abstract:Segment Anything (SAM) provides an unprecedented foundation for human segmentation, but may struggle under occlusion, where keypoints may be partially or fully invisible. We adapt SAM 2.1 for pose-guided segmentation with minimal encoder modifications, retaining its strong generalization. Using a fine-tuning strategy called PoseMaskRefine, we incorporate pose keypoints with high visibility into the iterative correction process originally employed by SAM, yielding improved robustness and accuracy across multiple datasets. During inference, we simplify prompting by selecting only the three keypoints with the highest visibility. This strategy reduces sensitivity to common errors, such as missing body parts or misclassified clothing, and allows accurate mask prediction from as few as a single keypoint. Our results demonstrate that pose-guided fine-tuning of SAM enables effective, occlusion-aware human segmentation while preserving the generalization capabilities of the original model. The code and pretrained models will be available at https://mirapurkrabek.github.io/BBox-Mask-Pose/.
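
The inference-time prompt selection described above (keep only the three most visible keypoints) can be illustrated with a minimal sketch. The `(x, y, visibility)` tuple layout, the function name `select_prompt_keypoints`, and the `min_visibility` cutoff are assumptions made for illustration and are not taken from the released code.

```python
from typing import List, Tuple

# Assumed layout: (x, y, visibility score in [0, 1]).
Keypoint = Tuple[float, float, float]


def select_prompt_keypoints(
    keypoints: List[Keypoint], k: int = 3, min_visibility: float = 0.0
) -> List[Tuple[float, float]]:
    """Return the (x, y) positions of the k most visible keypoints."""
    # Drop keypoints at or below the (hypothetical) visibility cutoff.
    visible = [kp for kp in keypoints if kp[2] > min_visibility]
    # Rank the remaining keypoints from most to least visible.
    ranked = sorted(visible, key=lambda kp: kp[2], reverse=True)
    return [(x, y) for x, y, _ in ranked[:k]]


# Toy pose with five keypoints; the last one is fully invisible.
pose = [
    (120.0, 80.0, 0.95),
    (118.0, 140.0, 0.10),
    (160.0, 150.0, 0.70),
    (90.0, 150.0, 0.85),
    (130.0, 260.0, 0.00),
]
prompts = select_prompt_keypoints(pose)
print(prompts)
```

If fewer than three keypoints pass the cutoff, the function returns whatever is available, which matches the abstract's remark that a single keypoint can be enough to prompt a mask.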

Robust Context-Aware Object Recognition

Oct 01, 2025

Abstract:In visual recognition, both the object of interest (referred to as foreground, FG, for simplicity) and its surrounding context (background, BG) play an important role. However, standard supervised learning often leads to unintended over-reliance on the BG, known as shortcut learning of spurious correlations, limiting model robustness in real-world deployment settings. In the literature, the problem is mainly addressed by suppressing the BG, sacrificing context information for improved generalization. We propose RCOR -- Robust Context-Aware Object Recognition -- the first approach that jointly achieves robustness and context-awareness without compromising either. RCOR treats localization as an integral part of recognition to decouple object-centric and context-aware modelling, followed by a robust, non-parametric fusion. It improves the performance of both supervised models and VLMs on datasets with both in-domain and out-of-domain BG, even without fine-tuning. The results confirm that localization before recognition is now possible even in complex scenes such as those in ImageNet-1k.

Image Recognition with Vision and Language Embeddings of VLMs

Sep 11, 2025

Abstract:Vision-language models (VLMs) have enabled strong zero-shot classification through image-text alignment. Yet, their purely visual inference capabilities remain under-explored. In this work, we conduct a comprehensive evaluation of both language-guided and vision-only image classification with a diverse set of dual-encoder VLMs, including both well-established and recent models such as SigLIP 2 and RADIOv2.5. The performance is compared in a standard setup on the ImageNet-1k validation set and its label-corrected variant. The key factors affecting accuracy are analysed, including prompt design, class diversity, the number of neighbours in k-NN, and reference set size. We show that language and vision offer complementary strengths, with some classes favouring textual prompts and others better handled by visual similarity. To exploit this complementarity, we introduce a simple, learning-free fusion method based on per-class precision that improves classification performance. The code is available at: https://github.com/gonikisgo/bmvc2025-vlm-image-recognition.
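
One plausible reading of a learning-free fusion based on per-class precision is sketched below: estimate, on a labelled reference split, how precise each classifier is for every class it predicts, then keep whichever prediction comes from the more precise class. The decision rule, the tie-breaking towards the text prediction, and all function and variable names are assumptions for illustration; the paper's actual rule may differ.

```python
from collections import Counter
from typing import Dict, List


def per_class_precision(predictions: List[int], labels: List[int]) -> Dict[int, float]:
    """Precision per predicted class: correct predictions / all predictions of that class."""
    predicted = Counter(predictions)
    correct = Counter(p for p, y in zip(predictions, labels) if p == y)
    return {c: correct[c] / n for c, n in predicted.items()}


def fuse(
    text_pred: int,
    vision_pred: int,
    text_prec: Dict[int, float],
    vision_prec: Dict[int, float],
) -> int:
    """Keep the prediction whose predicted class is more precise; ties go to text."""
    if text_pred == vision_pred:
        return text_pred
    if vision_prec.get(vision_pred, 0.0) > text_prec.get(text_pred, 0.0):
        return vision_pred
    return text_pred


# Toy labelled reference split with three classes (hypothetical data).
ref_labels = [0, 0, 1, 1, 2, 2]
ref_text_preds = [0, 0, 1, 0, 2, 1]
ref_vision_preds = [0, 1, 1, 1, 2, 2]

text_prec = per_class_precision(ref_text_preds, ref_labels)
vision_prec = per_class_precision(ref_vision_preds, ref_labels)

# Text says class 1 (precision 0.5), vision says class 2 (precision 1.0).
print(fuse(1, 2, text_prec, vision_prec))
```

No parameters are trained here: the only statistics used are counts from the reference split, which is what makes such a rule learning-free.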

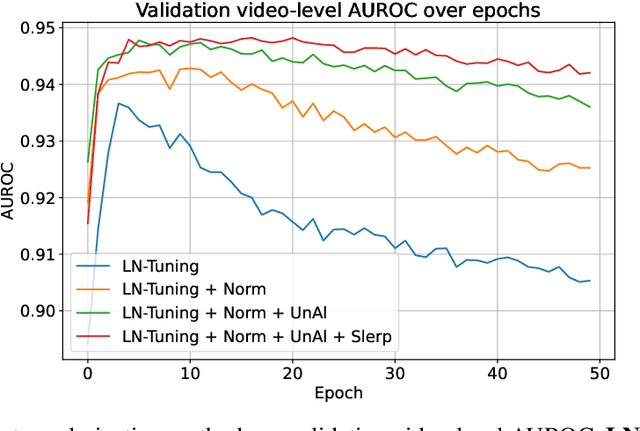

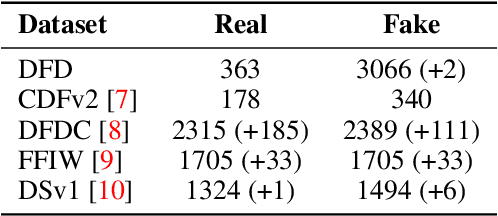

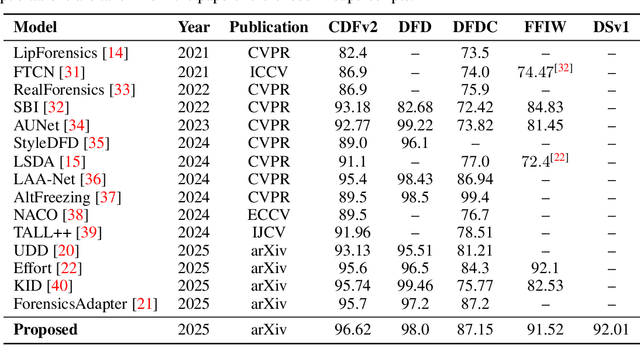

Deepfake Detection that Generalizes Across Benchmarks

Aug 08, 2025

Abstract:The generalization of deepfake detectors to unseen manipulation techniques remains a challenge for practical deployment. Although many approaches adapt foundation models by introducing significant architectural complexity, this work demonstrates that robust generalization is achievable through a parameter-efficient adaptation of a pre-trained CLIP vision encoder. The proposed method, LNCLIP-DF, fine-tunes only the Layer Normalization parameters (0.03% of the total) and enhances generalization by enforcing a hyperspherical feature manifold using L2 normalization and latent space augmentations. We conducted an extensive evaluation on 13 benchmark datasets spanning from 2019 to 2025. The proposed method achieves state-of-the-art performance, outperforming more complex, recent approaches in average cross-dataset AUROC. Our analysis yields two primary findings for the field: 1) training on paired real-fake data from the same source video is essential for mitigating shortcut learning and improving generalization, and 2) detection difficulty on academic datasets has not strictly increased over time, with models trained on older, diverse datasets showing strong generalization capabilities. This work delivers a computationally efficient and reproducible method, proving that state-of-the-art generalization is attainable by making targeted, minimal changes to a pre-trained CLIP model. The code will be made publicly available upon acceptance.

BOP Challenge 2024 on Model-Based and Model-Free 6D Object Pose Estimation

Apr 03, 2025

Abstract:We present the evaluation methodology, datasets and results of the BOP Challenge 2024, the sixth in a series of public competitions organized to capture the state of the art in 6D object pose estimation and related tasks. In 2024, our goal was to transition BOP from lab-like setups to real-world scenarios. First, we introduced new model-free tasks, where no 3D object models are available and methods need to onboard objects just from provided reference videos. Second, we defined a new, more practical 6D object detection task where identities of objects visible in a test image are not provided as input. Third, we introduced new BOP-H3 datasets recorded with high-resolution sensors and AR/VR headsets, closely resembling real-world scenarios. The BOP-H3 datasets include 3D models and onboarding videos to support both model-based and model-free tasks. Participants competed on seven challenge tracks, each defined by a task, object onboarding setup, and dataset group. Notably, the best 2024 method for model-based 6D localization of unseen objects (FreeZeV2.1) achieves 22% higher accuracy on BOP-Classic-Core than the best 2023 method (GenFlow), and is only 4% behind the best 2023 method for seen objects (GPose2023) while being significantly slower (24.9 vs 2.7s per image). A more practical 2024 method for this task is Co-op, which takes only 0.8s per image and is 25x faster and 13% more accurate than GenFlow. Methods have a similar ranking on 6D detection as on 6D localization but higher run time. On model-based 2D detection of unseen objects, the best 2024 method (MUSE) achieves 21% relative improvement compared to the best 2023 method (CNOS). However, the 2D detection accuracy for unseen objects is still noticeably (-53%) behind the accuracy for seen objects (GDet2023). The online evaluation system stays open and is available at http://bop.felk.cvut.cz/

A Dataset for Semantic Segmentation in the Presence of Unknowns

Mar 28, 2025

Abstract:Before deployment in the real world, deep neural networks require thorough evaluation of how they handle both knowns, inputs represented in the training data, and unknowns (anomalies). This is especially important for scene understanding tasks with safety-critical applications, such as autonomous driving. Existing datasets allow evaluation of only knowns or only unknowns, but not both, which is required to establish "in the wild" suitability of deep neural network models. To bridge this gap, we propose a novel anomaly segmentation dataset, ISSU, that features a diverse set of anomaly inputs from cluttered real-world environments. The dataset is twice as large as existing anomaly segmentation datasets, and provides a training, validation and test set for controlled in-domain evaluation. The test set consists of a static and a temporal part, with the latter comprising videos. The dataset provides annotations for both closed-set classes (knowns) and anomalies, enabling closed-set and open-set evaluation. The dataset covers diverse conditions, such as domain and cross-sensor shift and illumination variation, and allows ablation of anomaly detection methods with respect to these variations. Evaluation results of current state-of-the-art methods confirm the need for improvements, especially in domain generalization and in small and large object segmentation.

Unlocking the Hidden Potential of CLIP in Generalizable Deepfake Detection

Mar 26, 2025

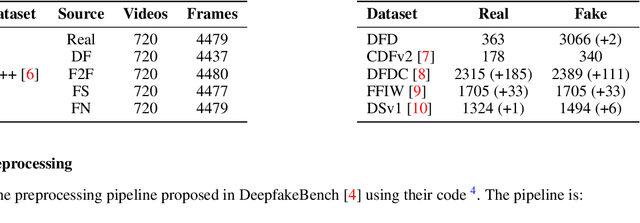

Abstract:This paper tackles the challenge of detecting partially manipulated facial deepfakes, which involve subtle alterations to specific facial features while retaining the overall context, posing a greater detection difficulty than fully synthetic faces. We leverage the Contrastive Language-Image Pre-training (CLIP) model, specifically its ViT-L/14 visual encoder, to develop a generalizable detection method that performs robustly across diverse datasets and unknown forgery techniques with minimal modifications to the original model. The proposed approach utilizes parameter-efficient fine-tuning (PEFT) techniques, such as LN-tuning, to adjust a small subset of the model's parameters, preserving CLIP's pre-trained knowledge and reducing overfitting. A tailored preprocessing pipeline optimizes the method for facial images, while regularization strategies, including L2 normalization and metric learning on a hyperspherical manifold, enhance generalization. Trained on the FaceForensics++ dataset and evaluated in a cross-dataset fashion on Celeb-DF-v2, DFDC, FFIW, and others, the proposed method achieves competitive detection accuracy comparable to or outperforming much more complex state-of-the-art techniques. This work highlights the efficacy of CLIP's visual encoder in facial deepfake detection and establishes a simple, powerful baseline for future research, advancing the field of generalizable deepfake detection. The code is available at: https://github.com/yermandy/deepfake-detection

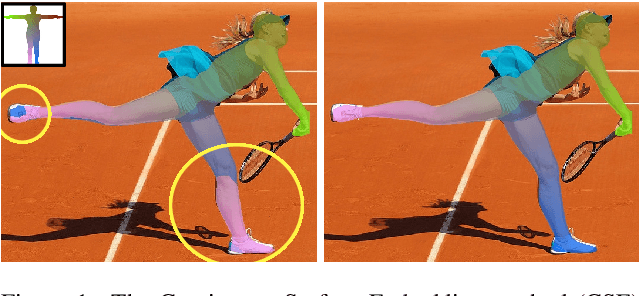

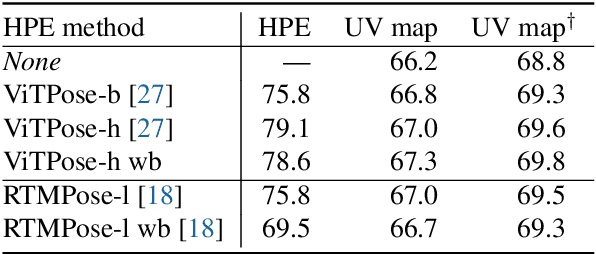

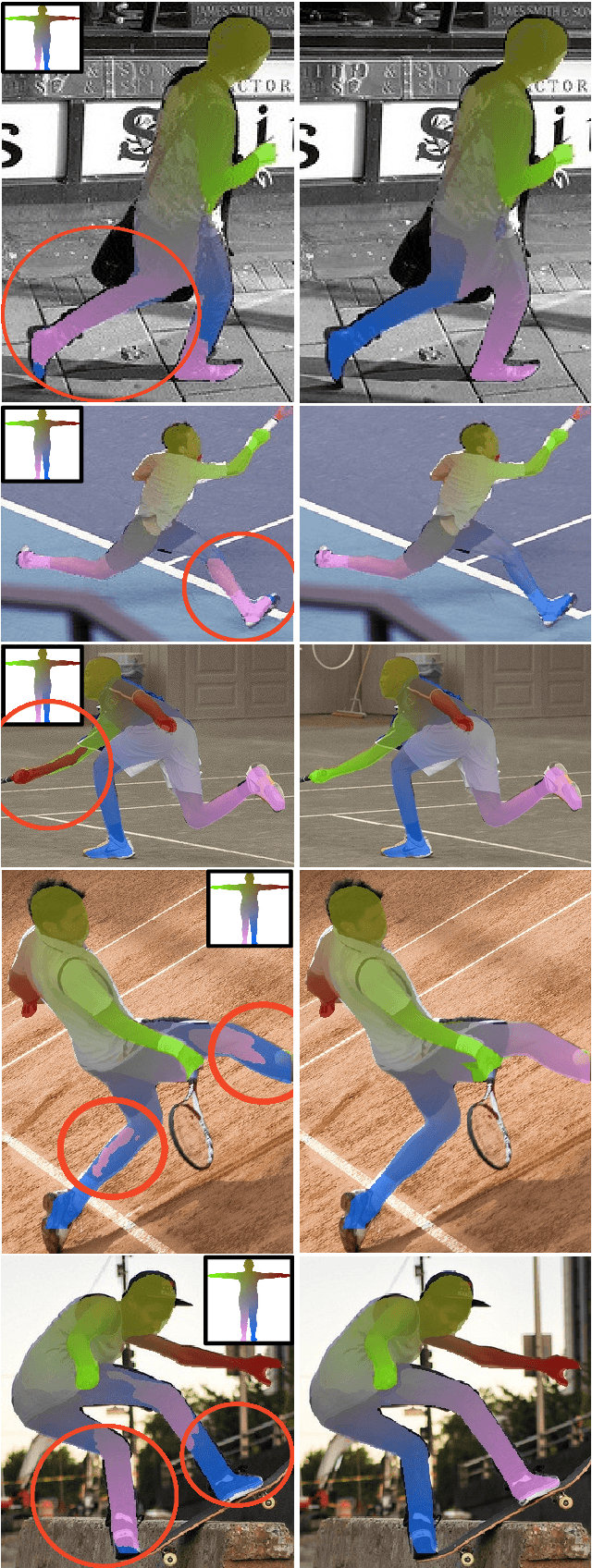

Human Pose-Constrained UV Map Estimation

Jan 15, 2025

Abstract:UV map estimation is used in computer vision for detailed analysis of human posture or activity. Previous methods assign pixels to body model vertices by comparing pixel descriptors independently, without enforcing global coherence or plausibility in the UV map. We propose Pose-Constrained Continuous Surface Embeddings (PC-CSE), which integrates estimated 2D human pose into the pixel-to-vertex assignment process. The pose provides global anatomical constraints, ensuring that UV maps remain coherent while preserving local precision. Evaluation on DensePose COCO demonstrates consistent improvement, regardless of the chosen 2D human pose model. Whole-body poses offer better constraints by incorporating additional details about the hands and feet. Conditioning UV maps with human pose reduces invalid mappings and enhances anatomical plausibility. In addition, we highlight inconsistencies in the ground-truth annotations.
