Klara Janouskova

Koo-Fu CLIP: Closed-Form Adaptation of Vision-Language Models via Fukunaga-Koontz Linear Discriminant Analysis

Feb 01, 2026

Abstract:Visual-language models such as CLIP provide powerful general-purpose representations, but their raw embeddings are not optimized for supervised classification, often exhibiting limited class separation and excessive dimensionality. We propose Koo-Fu CLIP, a supervised CLIP adaptation method based on Fukunaga-Koontz Linear Discriminant Analysis, which operates in a whitened embedding space to suppress within-class variation and enhance between-class discrimination. The resulting closed-form linear projection reshapes the geometry of CLIP embeddings, improving class separability while performing effective dimensionality reduction, and provides a lightweight and efficient adaptation of CLIP representations. Across large-scale ImageNet benchmarks, nearest visual prototype classification in the Koo-Fu CLIP space improves top-1 accuracy from 75.1% to 79.1% on ImageNet-1K, with consistent gains persisting as the label space expands to 14K and 21K classes. The method supports substantial compression by up to 10-12x with little or no loss in accuracy, enabling efficient large-scale classification and retrieval.
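As a rough illustration of the idea in the abstract above, here is a minimal NumPy sketch of a closed-form, LDA-style projection computed in a whitened space, followed by nearest-prototype classification. It is a textbook whitened LDA, not the authors' Fukunaga-Koontz formulation, which the abstract does not spell out. The function names `fit_whitened_lda` and `nearest_prototype_predict`, the `eps` regularizer, and the use of squared Euclidean distance are all assumptions made for this example.

```python
import numpy as np


def fit_whitened_lda(X, y, out_dim, eps=1e-6):
    """Return (mean, P) so that (X - mean) @ P is the reduced embedding.

    Assumption: textbook LDA via within-class whitening, used here only
    as a stand-in for the method named in the abstract.
    """
    classes = np.unique(y)
    idx = np.searchsorted(classes, y)          # class index of every sample
    mean = X.mean(axis=0)
    Xc = X - mean

    # Within-class scatter: spread of samples around their own class mean.
    class_means = np.stack([Xc[idx == k].mean(axis=0) for k in range(len(classes))])
    residual = Xc - class_means[idx]
    Sw = residual.T @ residual / len(X)

    # Whitening transform W with W.T @ Sw @ W close to the identity.
    evals, evecs = np.linalg.eigh(Sw)
    W = evecs / np.sqrt(np.maximum(evals, 0.0) + eps)

    # Between-class scatter of the whitened class means.
    M = class_means @ W
    counts = np.bincount(idx)
    Sb = (M * counts[:, None]).T @ M / len(X)

    # Keep the directions with the largest between-class variance.
    b_evals, b_evecs = np.linalg.eigh(Sb)
    top = b_evecs[:, np.argsort(b_evals)[::-1][:out_dim]]
    return mean, W @ top


def nearest_prototype_predict(Z_train, y_train, Z_test):
    """Assign each test point to the class with the closest mean embedding."""
    classes = np.unique(y_train)
    protos = np.stack([Z_train[y_train == c].mean(axis=0) for c in classes])
    d2 = ((Z_test[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[d2.argmin(axis=1)]
```

In this sketch, `out_dim` controls the compression: with C classes, at most C - 1 directions carry between-class variance, so anything beyond that adds no discriminative information.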

Robust Context-Aware Object Recognition

Oct 01, 2025

Abstract:In visual recognition, both the object of interest (referred to as foreground, FG, for simplicity) and its surrounding context (background, BG) play an important role. However, standard supervised learning often leads to unintended over-reliance on the BG, known as shortcut learning of spurious correlations, limiting model robustness in real-world deployment settings. In the literature, the problem is mainly addressed by suppressing the BG, sacrificing context information for improved generalization. We propose RCOR -- Robust Context-Aware Object Recognition -- the first approach that jointly achieves robustness and context-awareness without compromising either. RCOR treats localization as an integral part of recognition to decouple object-centric and context-aware modelling, followed by a robust, non-parametric fusion. It improves the performance of both supervised models and VLMs on datasets with both in-domain and out-of-domain BG, even without fine-tuning. The results confirm that localization before recognition is now possible even in complex scenes as in ImageNet-1k.

Image Recognition with Vision and Language Embeddings of VLMs

Sep 11, 2025

Abstract:Vision-language models (VLMs) have enabled strong zero-shot classification through image-text alignment. Yet, their purely visual inference capabilities remain under-explored. In this work, we conduct a comprehensive evaluation of both language-guided and vision-only image classification with a diverse set of dual-encoder VLMs, including both well-established and recent models such as SigLIP 2 and RADIOv2.5. The performance is compared in a standard setup on the ImageNet-1k validation set and its label-corrected variant. The key factors affecting accuracy are analysed, including prompt design, class diversity, the number of neighbours in k-NN, and reference set size. We show that language and vision offer complementary strengths, with some classes favouring textual prompts and others better handled by visual similarity. To exploit this complementarity, we introduce a simple, learning-free fusion method based on per-class precision that improves classification performance. The code is available at: https://github.com/gonikisgo/bmvc2025-vlm-image-recognition.
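The abstract above does not state the exact fusion rule, so the following is only one plausible reading of "fusion based on per-class precision", sketched in NumPy. Per-class precision is estimated for each modality on labelled reference data; at inference, each sample takes the prediction of whichever modality is more precise for the class it predicted. The function names, the tie-break in favour of the text prediction, and the use of hard label predictions are assumptions made for this example and are not taken from the linked repository.

```python
import numpy as np


def per_class_precision(pred, true, num_classes):
    """Precision of each predicted class: correct predictions / all predictions."""
    predicted = np.bincount(pred, minlength=num_classes)
    correct = np.bincount(pred[pred == true], minlength=num_classes)
    # Classes that were never predicted get precision 0 instead of a division by zero.
    return np.divide(correct, predicted,
                     out=np.zeros(num_classes), where=predicted > 0)


def fuse_by_precision(pred_text, pred_vis, prec_text, prec_vis):
    """Per sample, keep the prediction whose predicted class has higher precision.

    Assumption: ties go to the text-based prediction.
    """
    use_text = prec_text[pred_text] >= prec_vis[pred_vis]
    return np.where(use_text, pred_text, pred_vis)
```

No parameters are trained here: the only quantities estimated from data are the two precision vectors, which is what makes a rule of this kind learning-free.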

Flaws of ImageNet, Computer Vision's Favourite Dataset

Nov 26, 2024

Abstract:Since its release, the ImageNet-1k dataset has become a gold standard for evaluating model performance. It has served as the foundation for numerous other datasets and training tasks in computer vision. As models have improved in accuracy, issues related to label correctness have become increasingly apparent. In this blog post, we analyze the issues in the ImageNet-1k dataset, including incorrect labels, overlapping or ambiguous class definitions, training-evaluation domain shifts, and image duplicates. The solutions for some problems are straightforward. For others, we hope to start a broader conversation about refining this influential dataset to better serve future research.

Segment to Recognize Robustly -- Enhancing Recognition by Image Decomposition

Nov 24, 2024

Abstract:In image recognition, both foreground (FG) and background (BG) play an important role; however, standard deep image recognition often leads to unintended over-reliance on the BG, limiting model robustness in real-world deployment settings. Current solutions mainly suppress the BG, sacrificing BG information for improved generalization. We propose "Segment to Recognize Robustly" (S2R^2), a novel recognition approach which decouples the FG and BG modelling and combines them in a simple, robust, and interpretable manner. S2R^2 leverages recent advances in zero-shot segmentation to isolate the FG and the BG before or during recognition. By combining FG and BG, potentially also with a standard full-image classifier, S2R^2 achieves state-of-the-art results on in-domain data while maintaining robustness to BG shifts. The results confirm that segmentation before recognition is now possible.

FungiTastic: A multi-modal dataset and benchmark for image categorization

Aug 24, 2024

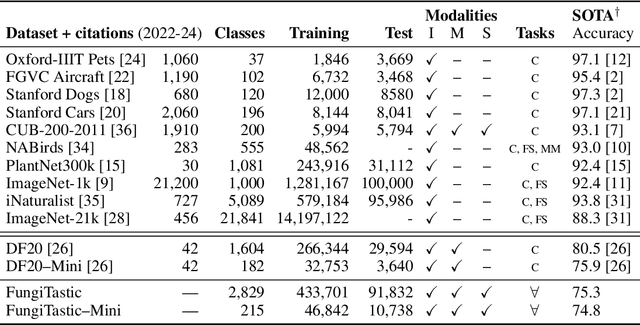

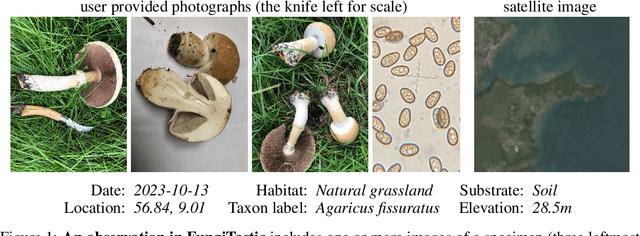

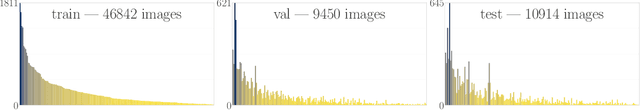

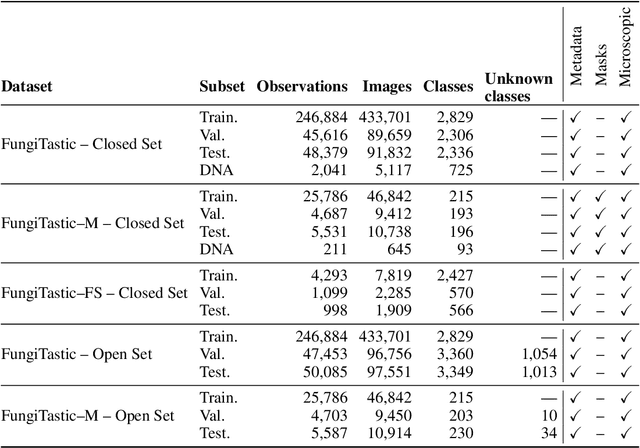

Abstract:We introduce a new, highly challenging benchmark and a dataset -- FungiTastic -- based on data continuously collected over a twenty-year span. The dataset originates in fungal records labeled and curated by experts. It consists of about 350k multi-modal observations that include more than 650k photographs from 5k fine-grained categories and diverse accompanying information, e.g., acquisition metadata, satellite images, and body part segmentation. FungiTastic is the only benchmark that includes a test set with partially DNA-sequenced ground truth of unprecedented label reliability. The benchmark is designed to support (i) standard closed-set classification, (ii) open-set classification, (iii) multi-modal classification, (iv) few-shot learning, (v) domain shift, and many more. We provide baseline methods tailored for almost all the use-cases, a multitude of ready-to-use pre-trained models on HuggingFace, and a framework for model training. Comprehensive documentation describing the dataset features and the baselines is available at https://bohemianvra.github.io/FungiTastic/ and https://www.kaggle.com/datasets/picekl/fungitastic.

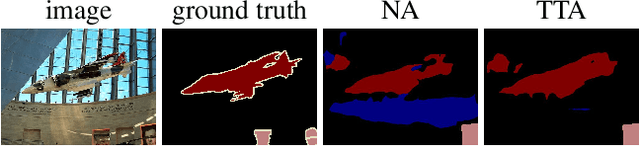

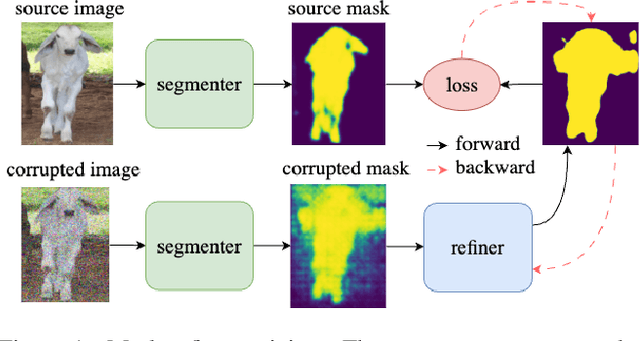

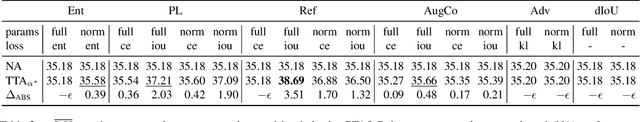

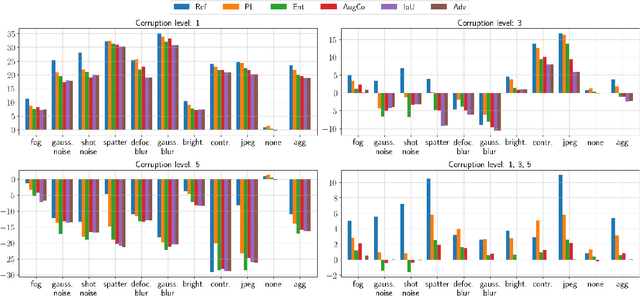

Single Image Test-Time Adaptation for Segmentation

Sep 25, 2023

Abstract:Test-Time Adaptation (TTA) methods improve the robustness of deep neural networks to domain shift on a variety of tasks such as image classification or segmentation. This work explores adapting segmentation models to a single unlabelled image with no other data available at test-time. In particular, this work focuses on adaptation by optimizing self-supervised losses at test-time. Multiple baselines based on different principles are evaluated under diverse conditions and a novel adversarial training is introduced for adaptation with mask refinement. Our additions to the baselines result in increases of 3.51% and 3.28% over non-adapted baselines; without these improvements, the increases would be only 1.7% and 2.16%.

Model-Assisted Labeling via Explainability for Visual Inspection of Civil Infrastructures

Sep 22, 2022

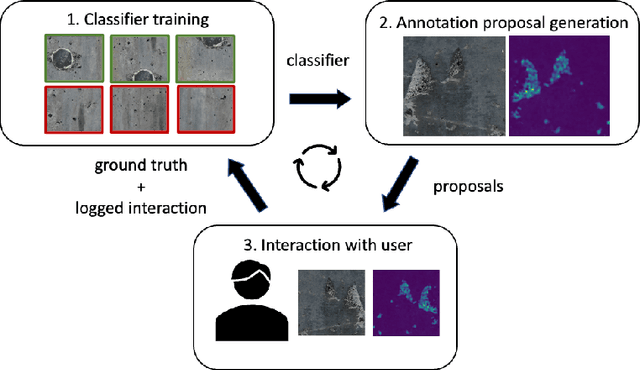

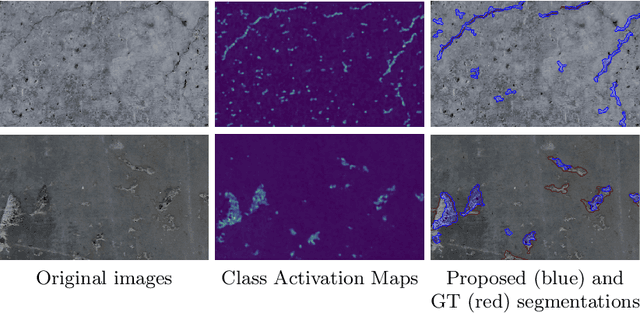

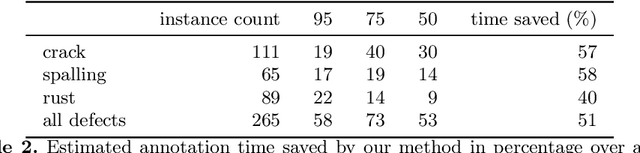

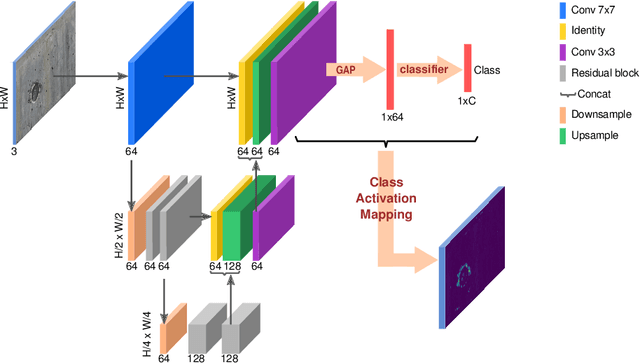

Abstract:Labeling images for visual segmentation is a time-consuming task which can be costly, particularly in application domains where labels have to be provided by specialized expert annotators, such as civil engineering. In this paper, we propose to use attribution methods to harness the valuable interactions between expert annotators and the data to be annotated in the case of defect segmentation for visual inspection of civil infrastructures. Concretely, a classifier is trained to detect defects and coupled with an attribution-based method and adversarial climbing to generate and refine segmentation masks corresponding to the classification outputs. These are used within an assisted labeling framework where the annotators can interact with them as proposal segmentation masks by deciding to accept, reject or modify them, and interactions are logged as weak labels to further refine the classifier. Applied to a real-world dataset resulting from the automated visual inspection of bridges, our proposed method is able to save more than 50% of annotators' time when compared to manual annotation of defects.
