Single Image Test-Time Adaptation for Segmentation

Paper and Code

Sep 25, 2023

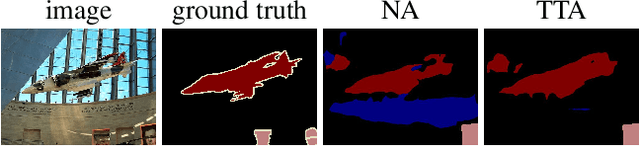

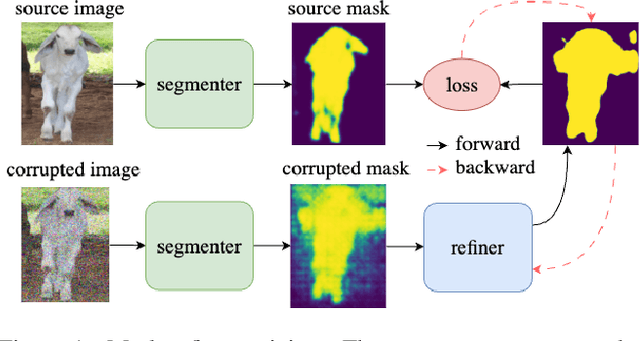

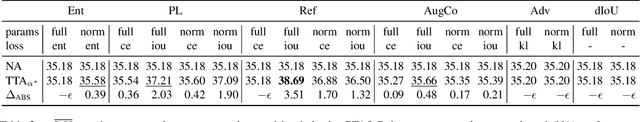

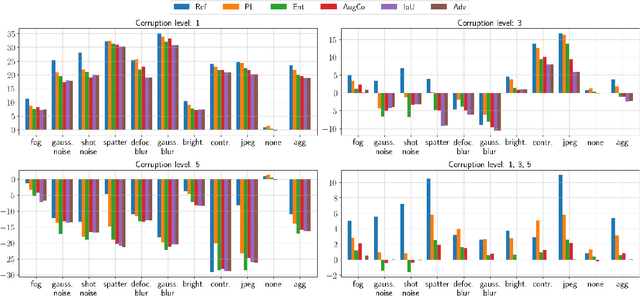

Test-Time Adaptation (TTA) methods improve the robustness of deep neural networks to domain shift on a variety of tasks such as image classification or segmentation. This work explores adapting segmentation models to a single unlabelled image with no other data available at test-time. In particular, this work focuses on adaptation by optimizing self-supervised losses at test-time. Multiple baselines based on different principles are evaluated under diverse conditions and a novel adversarial training is introduced for adaptation with mask refinement. Our additions to the baselines result in a 3.51 and 3.28 % increase over non-adapted baselines, without these improvements, the increase would be 1.7 and 2.16 % only.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge