Petr Svarny

Perirobot space representation for HRI: measuring and designing collaborative workspace coverage by diverse sensors

Mar 04, 2023

Abstract: Two regimes permitting safe physical human-robot interaction, speed and separation monitoring and safety-rated monitored stop, depend on reliable perception of the space surrounding the robot. This can be accomplished by visual sensors (like cameras, RGB-D cameras, LIDARs), proximity sensors, or dedicated devices used in industrial settings like pads that are activated by the presence of the operator. The deployment of a particular solution is often ad hoc and no unified representation of the interaction space or its coverage by the different sensors exists. In this work, we take first steps in this direction by defining the spaces to be monitored, representing all sensor data as information about occupancy, and using occupancy-based metrics to calculate how a particular sensor covers the workspace. We demonstrate our approach in two (multi-)sensor-placement experiments in three static scenes and one experiment in a dynamic scene. The occupancy representation allows the effectiveness of various sensor setups to be compared. Therefore, this approach can serve as a prototyping tool to establish the sensor setup that provides the most efficient coverage for the given metrics and sensor representations.
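As a rough illustration of what an occupancy-based coverage metric could look like, the sketch below treats the workspace and each sensor's field of view as sets of voxel indices and reports the fraction of workspace voxels seen by at least one sensor. The voxel representation and the function name `coverage_ratio` are assumptions made for illustration; the abstract does not specify the actual metrics used in the paper.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]  # hypothetical integer voxel index (x, y, z)


def coverage_ratio(workspace: Set[Voxel], sensor_views: List[Set[Voxel]]) -> float:
    """Fraction of workspace voxels observed by at least one sensor.

    Assumption: each sensor is summarized by the set of voxels it can observe.
    Voxels a sensor sees outside the workspace are ignored.
    """
    if not workspace:
        raise ValueError("workspace must contain at least one voxel")
    observed: Set[Voxel] = set().union(*sensor_views)
    return len(workspace & observed) / len(workspace)


# Toy scene: a 2 x 2 x 1 workspace and two hypothetical sensors.
workspace = {(x, y, 0) for x in range(2) for y in range(2)}
camera = {(0, 0, 0), (0, 1, 0)}
proximity = {(0, 1, 0), (1, 1, 0), (5, 5, 5)}  # last voxel lies outside the workspace

single = coverage_ratio(workspace, [camera])
combined = coverage_ratio(workspace, [camera, proximity])
```

Comparing `single` with `combined` mirrors the idea of judging alternative sensor setups by how much of the monitored space they cover.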

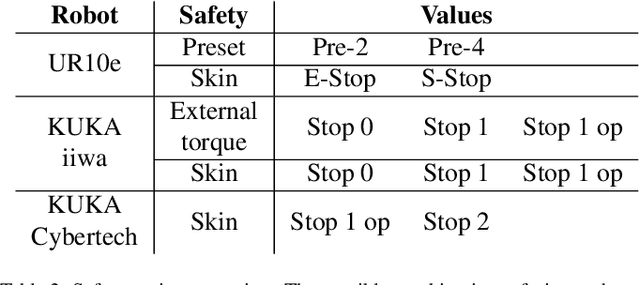

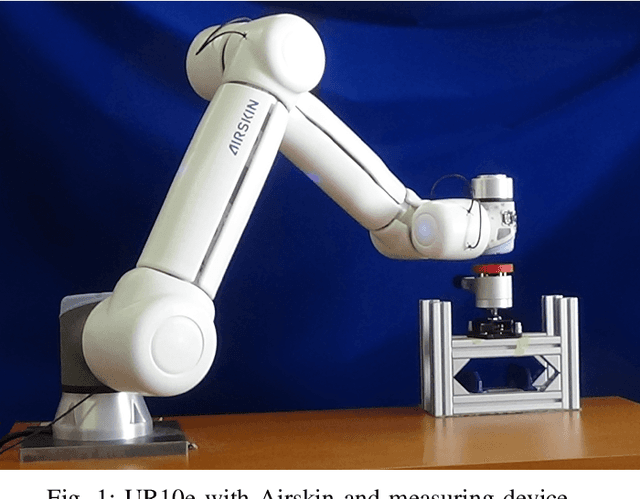

Effect of Active and Passive Protective Soft Skins on Collision Forces in Human-robot Collaboration

Mar 18, 2022

Abstract: Soft electronic skins are one of the means to turn an industrial manipulator into a collaborative robot. For manipulators that are already fit for physical human-robot collaboration, soft skins can make them safer. In this work, we study the post-impact behavior of two collaborative manipulators (UR10e and KUKA LBR iiwa) and one classical industrial manipulator (KUKA Cybertech), in the presence or absence of an industrial protective skin (AIRSKIN). In addition, we isolate the effects of the passive padding and the active contribution of the sensor to robot reaction. We present a total of 2250 collision measurements and study the impact force, contact duration, clamping force, and impulse. The dataset is publicly available. We summarize our results as follows. For transient collisions, the passive skin properties lowered the impact forces by about 40 %. During quasi-static contact, the effect of skin covers -- active or passive -- cannot be isolated from the collision detection and reaction by the collaborative robots. Important effects of the stop categories triggered by the active protective skin were found. We systematically compare the different settings and the empirically established safe velocities with the prescriptions of ISO/TS 15066. In some cases, up to four times the velocity prescribed by ISO/TS 15066 can comply with the impact force limits and thus be considered safe. We propose an extension of the formulas relating impact force and permissible velocity that takes into account the stiffness and compressible thickness of the protective cover, leading to better predictions of the collision forces. At the same time, this work emphasizes the need for in situ measurements as all the factors we studied -- presence of active/passive skin, safety stop settings, robot collision reaction, impact direction, and, of course, velocity -- have effects on the force evolution after impact.
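For context, the baseline relation between impact force and permissible velocity in ISO/TS 15066 is the simplified two-body model $v_{\max} = F_{\max} / \sqrt{\mu k}$, with reduced mass $\mu = (1/m_H + 1/m_R)^{-1}$ and effective robot mass $m_R = M/2 + m_L$. The sketch below implements only this baseline, not the extension proposed in the paper (which the abstract does not spell out). All numeric values in the example are illustrative placeholders, not measurements or limits taken from the source.

```python
import math


def reduced_mass(m_human_kg: float, m_robot_kg: float) -> float:
    """Reduced mass of the two-body system: (1/m_H + 1/m_R)^-1."""
    return 1.0 / (1.0 / m_human_kg + 1.0 / m_robot_kg)


def effective_robot_mass(moving_mass_kg: float, payload_kg: float) -> float:
    """Effective robot mass m_R = M/2 + m_L (moving parts M, payload m_L)."""
    return moving_mass_kg / 2.0 + payload_kg


def max_relative_speed(f_max_n: float, k_n_per_m: float,
                       m_human_kg: float, m_robot_kg: float) -> float:
    """Permissible relative speed v_max = F_max / sqrt(mu * k) in m/s."""
    mu = reduced_mass(m_human_kg, m_robot_kg)
    return f_max_n / math.sqrt(mu * k_n_per_m)


# Illustrative placeholder values (assumptions, not from the source).
m_r = effective_robot_mass(moving_mass_kg=16.0, payload_kg=2.0)
v_max = max_relative_speed(f_max_n=280.0, k_n_per_m=75_000.0,
                           m_human_kg=0.6, m_robot_kg=m_r)
```

In this model a softer contact (smaller `k_n_per_m`) raises the permissible speed, which gives an intuition for why accounting for a compressible protective cover changes the prediction.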

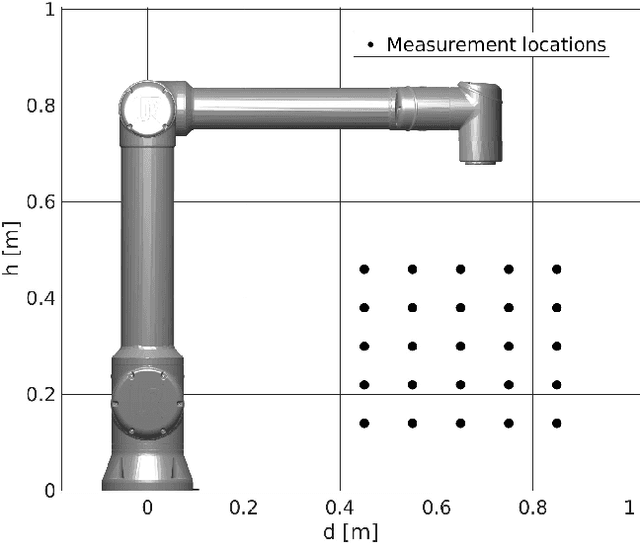

3D Collision-Force-Map for Safe Human-Robot Collaboration

Sep 02, 2020

Abstract: Collaborative robots, i.e. robots designed for direct interaction with a human, present a promising step in robotic manufacturing. However, their performance is limited by the safety demands of standards. In this article, we measure the forces exerted by two robot arms (UR10e and KUKA LBR iiwa) on an impact measuring device in different positions in the robot workspace and with various velocities. Based on these measurements, we investigate the Power and Force Limiting regime presented in ISO/TS 15066. Impact forces are in practice hard to calculate analytically as many properties of the robots are not available (e.g., proprietary control algorithms). This motivates the use of simple, yet reasonably accurate, approximations. Previous work predicted impact forces based on other factors (distance, velocity, weight), yet these predictions are less accurate. Our results show that the height of the impact location is also an important factor and that an accurate model of the robot can be created from a limited number of impact samples. Such a model would allow a fast estimation of the impact forces in the robot's workspace and thus make it easier to design a safe human-robot collaboration setup.

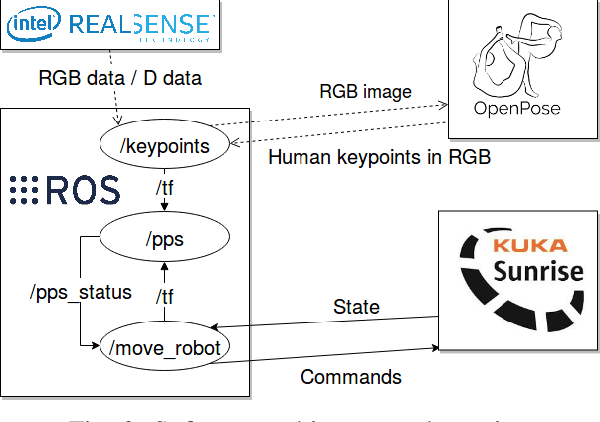

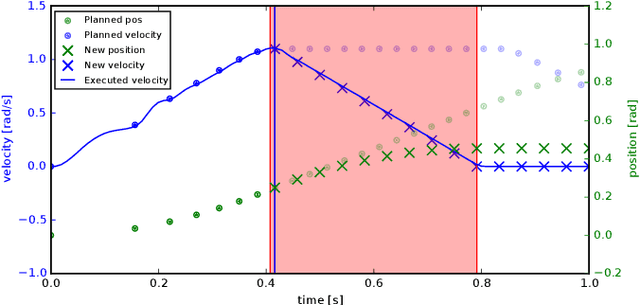

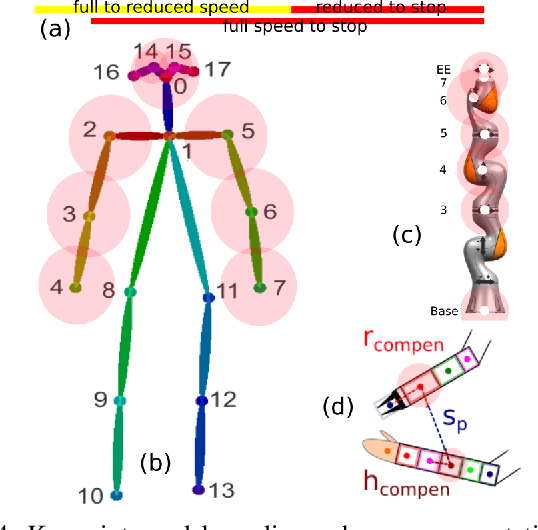

Safe physical HRI: Toward a unified treatment of speed and separation monitoring together with power and force limiting

Aug 08, 2019

Abstract: So-called collaborative robots are a current trend in industrial robotics. However, they still face many problems in practical application, such as the reduced speed needed to ensure their collaborativeness. The standards prescribe two regimes: (i) speed and separation monitoring and (ii) power and force limiting, where the former requires reliable estimation of distances between the robot and human body parts and the latter imposes constraints on the energy absorbed during collisions prior to robot stopping. Following the standards, we deploy the two collaborative regimes in a single application and study the performance in a mock collaborative task under the individual regimes, including transitions between them. Additionally, we compare the performance under "safety zone monitoring" with keypoint pair-wise separation distance assessment relying on an RGB-D sensor and a skeleton extraction algorithm to track human body parts in the workspace. The best performance was achieved in the following setting: the robot operates at full speed until a distance threshold between any robot and human body part is crossed; then, reduced robot speed per power and force limiting is triggered. The robot is halted only when the operator's head crosses a predefined distance from selected robot parts. We demonstrate our methodology on a setup combining a KUKA LBR iiwa robot, an Intel RealSense RGB-D sensor, and OpenPose for human pose estimation.

* 8 pages, 6 figures
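The best-performing setting described in the abstract above (full speed, then reduced speed per power and force limiting, then a halt triggered by the head) can be sketched as a small mode selector. This is a minimal sketch under stated assumptions: the threshold values, the function name `select_mode`, and the choice to run at full speed when no keypoints are detected are all hypothetical and not taken from the paper.

```python
from enum import Enum
from typing import Dict, Optional


class Mode(Enum):
    FULL_SPEED = "full_speed"
    REDUCED_SPEED = "reduced_speed"  # speed limited per power and force limiting
    HALT = "halt"


def select_mode(keypoint_distances_m: Dict[str, float],
                head_to_selected_parts_m: Optional[float],
                slow_threshold_m: float = 1.0,   # hypothetical value
                halt_threshold_m: float = 0.4    # hypothetical value
                ) -> Mode:
    """Pick the robot mode from human-robot separation distances.

    keypoint_distances_m: minimum distance from each tracked human keypoint
        to any robot part.
    head_to_selected_parts_m: distance from the operator's head to the
        selected robot parts, or None if the head is not tracked.
    Assumption: with no detected keypoints the robot runs at full speed.
    """
    if head_to_selected_parts_m is not None and head_to_selected_parts_m < halt_threshold_m:
        return Mode.HALT
    if any(d < slow_threshold_m for d in keypoint_distances_m.values()):
        return Mode.REDUCED_SPEED
    return Mode.FULL_SPEED


far = select_mode({"wrist": 1.8, "head": 2.0}, head_to_selected_parts_m=2.0)
near_hand = select_mode({"wrist": 0.3, "head": 1.2}, head_to_selected_parts_m=1.2)
near_head = select_mode({"wrist": 0.3, "head": 0.35}, head_to_selected_parts_m=0.35)
```

Note that a hand close to the robot only slows it down, while the halt is reserved for the head, which is what distinguishes this setting from plain safety zone monitoring.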
