Victoria Clerico

George Mason University

Edge Training and Inference with Analog ReRAM Technology for Hand Gesture Recognition

Feb 25, 2025

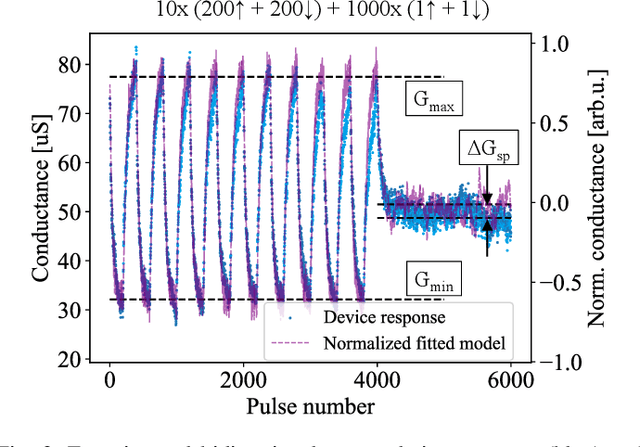

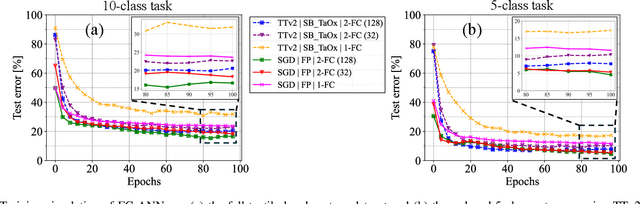

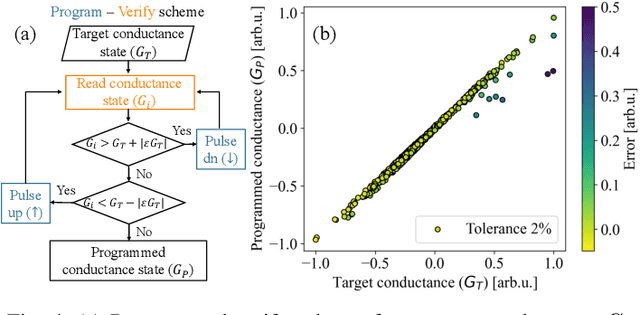

Abstract: Tactile hand gesture recognition is a crucial task for user control in the automotive sector, where Human-Machine Interactions (HMI) demand low latency and high energy efficiency. This study addresses the challenges of power-constrained edge training and inference by utilizing analog Resistive Random Access Memory (ReRAM) technology in conjunction with a real tactile hand gesture dataset. By optimizing the input space through a feature engineering strategy, we avoid relying on large-scale crossbar arrays, making the system more suitable for edge deployment. Through realistic hardware-aware simulations that account for device non-idealities derived from experimental data, we demonstrate the functionality of our ReRAM-based analog in-memory computing for on-chip training, using the state-of-the-art Tiki-Taka algorithm. Furthermore, we validate a classification accuracy of approximately 91.4% for post-deployment inference of hand gestures. The results highlight the potential of analog ReRAM technology and crossbar architecture with fully parallelized matrix computations for real-time HMI systems at the edge.

Wandering around: A bioinspired approach to visual attention through object motion sensitivity

Feb 10, 2025

Abstract: Active vision enables dynamic visual perception, offering an alternative to static feedforward architectures in computer vision, which rely on large datasets and high computational resources. Biological selective attention mechanisms allow agents to focus on salient Regions of Interest (ROIs), reducing computational demand while maintaining real-time responsiveness. Event-based cameras, inspired by the mammalian retina, enhance this capability by capturing asynchronous scene changes, enabling efficient low-latency processing. To distinguish moving objects while the event-based camera is in motion, the agent requires an object motion segmentation mechanism to accurately detect targets and center them in the visual field (fovea). Integrating event-based sensors with neuromorphic algorithms represents a paradigm shift, using Spiking Neural Networks to parallelize computation and adapt to dynamic environments. This work presents a bioinspired Spiking Convolutional Neural Network system for selective attention through object motion sensitivity. The system generates events via fixational eye movements using a Dynamic Vision Sensor integrated into the Speck neuromorphic hardware, mounted on a Pan-Tilt unit, to identify the ROI and saccade toward it. The system, characterized using ideal gratings and benchmarked against the Event Camera Motion Segmentation Dataset, reaches a mean IoU of 82.2% and a mean SSIM of 96% in multi-object motion segmentation. The detection of salient objects reaches 88.8% accuracy in office scenarios and 89.8% in low-light conditions on the Event-Assisted Low-Light Video Object Segmentation Dataset. A real-time demonstrator shows the system's 0.12 s response to dynamic scenes. Its learning-free design ensures robustness across perceptual scenes, making it a reliable foundation for real-time robotic applications and a basis for more complex architectures.
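For readers unfamiliar with the segmentation metric quoted in this abstract, the following is a minimal sketch of how a mean Intersection over Union (IoU) is commonly computed over binary masks. The function names `iou` and `mean_iou`, the nested-list mask format, and the choice to score two empty masks as 1.0 are assumptions made for illustration; the paper's own evaluation code may differ.

```python
def iou(pred, target):
    """Intersection over Union of two same-shaped binary masks (nested lists)."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumption: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


def mean_iou(preds, targets):
    """Average the per-frame IoU over a sequence of mask pairs."""
    scores = [iou(p, t) for p, t in zip(preds, targets)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    predicted = [[1, 1], [0, 0]]
    ground_truth = [[1, 0], [0, 0]]
    # One shared pixel out of two covered pixels.
    print(iou(predicted, ground_truth))
```

A mean IoU of 82.2% would then correspond to `mean_iou` returning roughly 0.822 over the evaluated frames.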

Retina-inspired Object Motion Segmentation

Aug 18, 2024

Abstract: Dynamic Vision Sensors (DVS) have emerged as a revolutionary technology with a high temporal resolution that far surpasses RGB cameras. DVS technology draws biological inspiration from photoreceptors and the initial retinal synapse. Our research showcases the potential of additional retinal functionalities to extract visual features. We provide a domain-agnostic and efficient algorithm for ego-motion compensation based on Object Motion Sensitivity (OMS), one of the multiple robust features computed within the mammalian retina. We develop a framework based on experimental neuroscience that translates OMS' biological circuitry to a low-overhead algorithm. OMS processes DVS data from dynamic scenes to perform pixel-wise object motion segmentation. Using a real and a synthetic dataset, we highlight OMS' ability to differentiate object motion from ego-motion, bypassing the need for deep networks. This paper introduces a bio-inspired computer vision method that dramatically reduces the number of parameters by a factor of 1000 compared to prior works. Our work paves the way for robust, high-speed, and low-bandwidth decision-making for in-sensor computations.
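The idea of separating object motion from ego-motion with a center-surround comparison can be illustrated with a rough sketch. This is not the authors' algorithm: it assumes a single frame of accumulated event activity (a nested list of numbers), and the window radii, the threshold, and the names `window_mean` and `oms_mask` are all hypothetical. The surround window here also includes the center region, which is a simplification.

```python
def window_mean(frame, row, col, radius):
    """Mean activity in a square window around (row, col), clipped at borders."""
    height, width = len(frame), len(frame[0])
    total = 0.0
    count = 0
    for r in range(max(0, row - radius), min(height, row + radius + 1)):
        for c in range(max(0, col - radius), min(width, col + radius + 1)):
            total += frame[r][c]
            count += 1
    return total / count


def oms_mask(frame, center_radius=1, surround_radius=3, threshold=0.5):
    """Flag pixels whose local activity clearly exceeds the wider surround.

    Ego-motion tends to produce activity everywhere, so center and surround
    cancel; an independently moving object produces a local excess.
    All parameter values are illustrative assumptions.
    """
    height, width = len(frame), len(frame[0])
    mask = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            center = window_mean(frame, row, col, center_radius)
            surround = window_mean(frame, row, col, surround_radius)
            if center - surround > threshold:
                mask[row][col] = 1
    return mask


if __name__ == "__main__":
    # A 9x9 frame with a 3x3 patch of activity in the middle.
    frame = [[0] * 9 for _ in range(9)]
    for r in range(3, 6):
        for c in range(3, 6):
            frame[r][c] = 1
    for line in oms_mask(frame):
        print(line)
```

The sketch has only three tunable numbers and no learned weights, which is the sense in which such a method can avoid deep networks.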

Hardware-Algorithm Re-engineering of Retinal Circuit for Intelligent Object Motion Segmentation

Jul 31, 2024

Abstract: Recent advances in retinal neuroscience have fueled various hardware and algorithmic efforts to develop retina-inspired solutions for computer vision tasks. In this work, we focus on a fundamental visual feature within the mammalian retina, Object Motion Sensitivity (OMS). Using DVS data from the EV-IMO dataset, we analyze the performance of an algorithmic implementation of OMS circuitry for motion segmentation in the presence of ego-motion. This holistic analysis considers the underlying constraints arising from the hardware circuit implementation. We present novel CMOS circuits that implement OMS functionality inside image sensors, while providing run-time re-configurability for key algorithmic parameters. In-sensor technologies for dynamical environment adaptation are crucial for ensuring high system performance. Finally, we verify the functionality and re-configurability of the proposed CMOS circuit designs through Cadence simulations in 180 nm technology. In summary, the presented work lays the foundation for hardware-algorithm re-engineering of known biological circuits to suit application needs.

Transductive Spiking Graph Neural Networks for Loihi

Apr 25, 2024

Abstract: Graph neural networks have emerged as a specialized branch of deep learning, designed to address problems where pairwise relations between objects are crucial. Recent advancements utilize graph convolutional neural networks to extract features within graph structures. Despite promising results, these methods face challenges in real-world applications due to sparse features, resulting in inefficient resource utilization. Recent studies draw inspiration from the mammalian brain and employ spiking neural networks to model and learn graph structures. However, these approaches are limited to traditional Von Neumann-based computing systems, which still face hardware inefficiencies. In this study, we present a fully neuromorphic implementation of spiking graph neural networks designed for Loihi 2. We optimize network parameters using Lava Bayesian Optimization, a novel hyperparameter optimization system compatible with neuromorphic computing architectures. We showcase the performance benefits of combining neuromorphic Bayesian optimization with our approach for citation graph classification using fixed-precision spiking neurons. Our results demonstrate the capability of integer-precision, Loihi 2-compatible spiking neural networks in performing citation graph classification with accuracy comparable to existing floating-point implementations.

LSTM Framework for Classification of Radar and Communications Signals

May 04, 2023

Abstract: Although radar and communications signal classification are usually treated separately, they share similar characteristics, and methods applied in one domain can potentially be applied in the other. We propose a simple and unified scheme for the classification of radar and communications signals using Long Short-Term Memory (LSTM) neural networks. This proposal improves on the state of the art for radar signals, where LSTM models are starting to be applied within schemes of higher complexity. To date, there is no standard public dataset for radar signals. Therefore, we propose DeepRadar2022, a radar dataset used in our systematic evaluations that is publicly available and will facilitate a standard comparison between methods.
