Shay Snyder

George Mason University

A Retina-Inspired Pathway to Real-Time Motion Prediction inside Image Sensors for Extreme-Edge Intelligence

Apr 02, 2025

Abstract:The ability to predict motion in real time is fundamental to many maneuvering activities in animals, particularly those critical for survival, such as attack and escape responses. Given its significance, it is no surprise that motion prediction in animals begins in the retina. Similarly, autonomous systems utilizing computer vision could greatly benefit from the capability to predict motion in real time. Therefore, for computer vision applications, motion prediction should be integrated directly at the camera pixel level. Towards that end, we present a retina-inspired neuromorphic framework capable of performing real-time, energy-efficient motion prediction (MP) directly within camera pixels. Our hardware-algorithm framework, implemented using GlobalFoundries 22nm FDSOI technology, integrates key retinal MP compute blocks, including a biphasic filter, spike adder, nonlinear circuit, and a 2D array for multi-directional motion prediction. Additionally, integrating the sensor and MP compute die using a 3D Cu-Cu hybrid bonding approach improves design compactness by minimizing area usage and simplifying routing. Validated on real-world object stimuli, the model delivers efficient, low-latency MP for decision-making scenarios reliant on predictive visual computation, while consuming only 18.56 pJ/MP in our mixed-signal hardware implementation.

On the Privacy-Preserving Properties of Spiking Neural Networks with Unique Surrogate Gradients and Quantization Levels

Feb 25, 2025

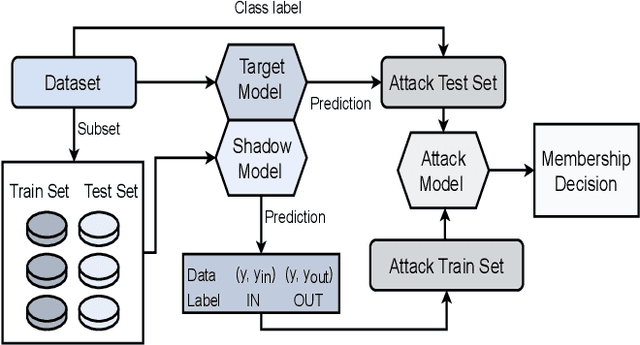

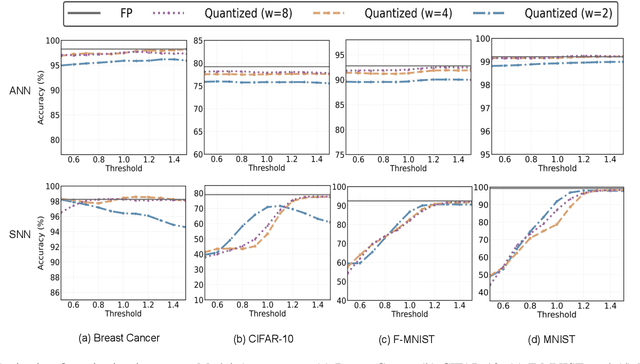

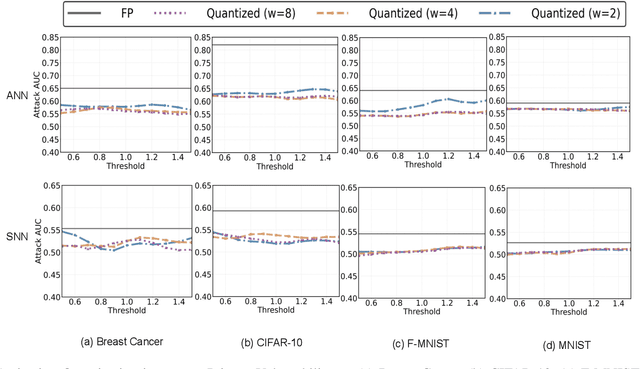

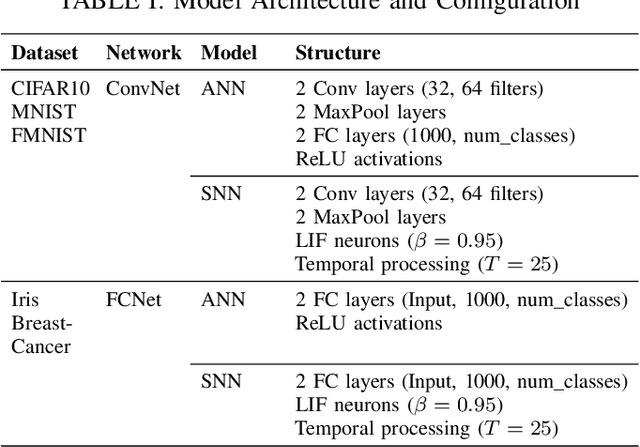

Abstract:As machine learning models increasingly process sensitive data, understanding their vulnerability to privacy attacks is vital. Membership inference attacks (MIAs) exploit model responses to infer whether specific data points were used during training, posing a significant privacy risk. Prior research suggests that spiking neural networks (SNNs), which rely on event-driven computation and discrete spike-based encoding, exhibit greater resilience to MIAs than artificial neural networks (ANNs). This resilience stems from their non-differentiable activations and inherent stochasticity, which obscure the correlation between model responses and individual training samples. To enhance privacy in SNNs, we explore two techniques: quantization and surrogate gradients. Quantization, which reduces precision to limit information leakage, has improved privacy in ANNs. Given SNNs' sparse and irregular activations, quantization may further disrupt the activation patterns exploited by MIAs. We assess the vulnerability of SNNs and ANNs under weight and activation quantization across multiple datasets, using the attack model's receiver operating characteristic (ROC) curve area under the curve (AUC) metric, where lower values indicate stronger privacy, and evaluate the privacy-accuracy trade-off. Our findings show that quantization enhances privacy in both architectures with minimal performance loss, though full-precision SNNs remain more resilient than quantized ANNs. Additionally, we examine the impact of surrogate gradients on privacy in SNNs. Among five evaluated gradients, spike rate escape provides the best privacy-accuracy trade-off, while arctangent increases vulnerability to MIAs. These results reinforce SNNs' inherent privacy advantages and demonstrate that quantization and surrogate gradient selection significantly influence privacy-accuracy trade-offs in SNNs.
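
The attack-model AUC used above as the privacy metric can be illustrated with a minimal sketch. It assumes the attack outputs one confidence score per sample (higher meaning "more likely a training member") and computes the AUC as the fraction of member/non-member pairs the attack ranks correctly. The function name and the score values are hypothetical and are not taken from the paper.

```python
def attack_auc(member_scores, nonmember_scores):
    """ROC AUC of a membership inference attack.

    Equals the probability that a randomly chosen training member receives
    a higher attack score than a randomly chosen non-member (ties count half).
    """
    if not member_scores or not nonmember_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))


# Hypothetical attack confidences, for illustration only.
leaky = attack_auc([0.9, 0.8, 0.7], [0.3, 0.2, 0.1])    # attack separates the groups
private = attack_auc([0.6, 0.4, 0.5], [0.6, 0.4, 0.5])  # attack is at chance level
```

An AUC near 0.5 means the attack does no better than guessing, which is why lower values are read as stronger privacy.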

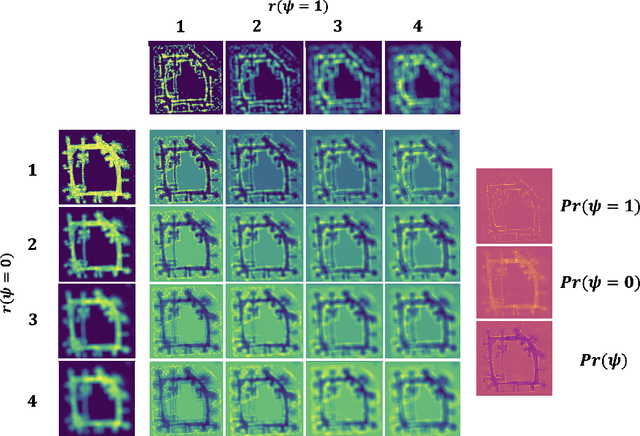

Generalizable Reinforcement Learning with Biologically Inspired Hyperdimensional Occupancy Grid Maps for Exploration and Goal-Directed Path Planning

Feb 13, 2025

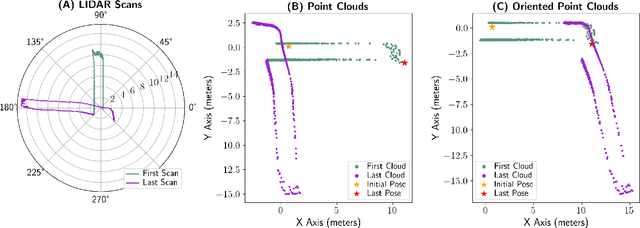

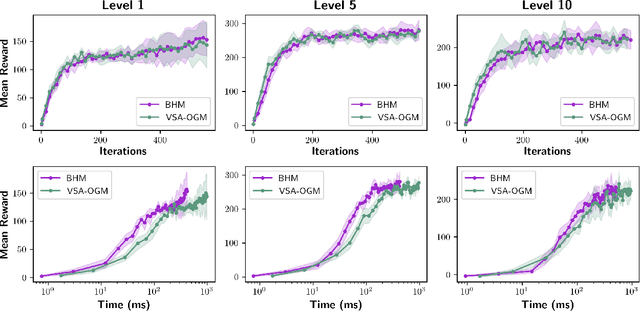

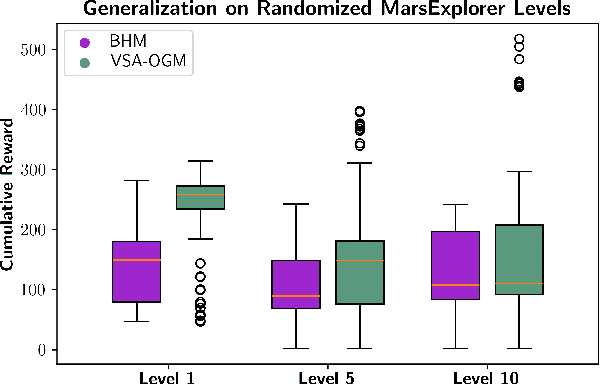

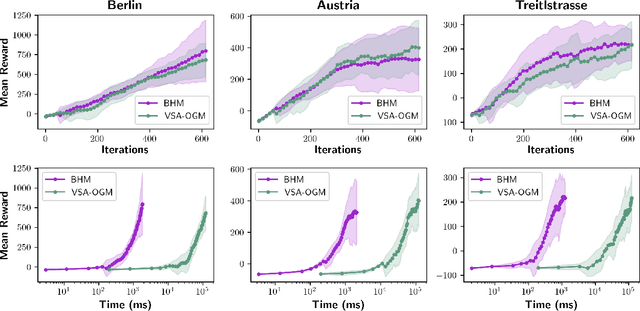

Abstract:Real-time autonomous systems utilize multi-layer computational frameworks to perform critical tasks such as perception, goal finding, and path planning. Traditional methods implement perception using occupancy grid mapping (OGM), segmenting the environment into discretized cells with probabilistic information. This classical approach is well-established and provides a structured input for downstream processes like goal finding and path planning algorithms. Recent approaches leverage a biologically inspired mathematical framework known as vector symbolic architectures (VSA), commonly known as hyperdimensional computing, to perform probabilistic OGM in hyperdimensional space. This approach, VSA-OGM, provides native compatibility with spiking neural networks, positioning VSA-OGM as a potential neuromorphic alternative to conventional OGM. However, for large-scale integration, it is essential to assess the performance implications of VSA-OGM on downstream tasks compared to established OGM methods. This study examines the efficacy of VSA-OGM against a traditional OGM approach, Bayesian Hilbert Maps (BHM), within reinforcement learning based goal finding and path planning frameworks, across a controlled exploration environment and an autonomous driving scenario inspired by the F1-Tenth challenge. Our results demonstrate that VSA-OGM maintains comparable learning performance across single and multi-scenario training configurations while improving performance on unseen environments by approximately 47%. These findings highlight the increased generalizability of policy networks trained with VSA-OGM over BHM, reinforcing its potential for real-world deployment in diverse environments.

Spiking Neural Networks as a Controller for Emergent Swarm Agents

Oct 21, 2024

Abstract:Drones that can swarm and loiter in a certain area cost hundreds of dollars, but mosquitoes can do the same and are essentially worthless. To control swarms of low-cost robots, researchers may end up spending countless hours brainstorming robot configurations and policies to "organically" create behaviors which do not need expensive sensors and perception. Existing research explores the possible emergent behaviors in swarms of robots with only a binary sensor and a simple but hand-picked controller structure. Even agents in this highly limited sensing, actuation, and computational capability class can exhibit relatively complex global behaviors such as aggregation, milling, and dispersal, but finding the local interaction rules that enable more collective behaviors remains a significant challenge. This paper investigates the feasibility of training spiking neural networks to find those local interaction rules that result in particular emergent behaviors. In this paper, we focus on simulating a specific milling behavior already known to be producible using very simple binary sensing and acting agents. To do this, we use evolutionary algorithms to evolve not only the parameters (the weights, biases, and delays) of a spiking neural network, but also its structure. To create a baseline, we also show an evolutionary search strategy over the parameters for the incumbent hand-picked binary controller structure. Our simulations show that spiking neural networks can be evolved in binary sensing agents to form a mill.
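
The hand-picked binary controller structure mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes each agent has a single on/off sensor, that the controller is a lookup from sensor state to a (forward speed, turn rate) pair, and that agents move with unicycle kinematics. The names `binary_controller` and `step` and all parameter values are hypothetical. The four numbers in `params` are the kind of quantities an evolutionary search could tune.

```python
import math


def binary_controller(sensor_on, params):
    """Map a binary sensor reading to a (forward speed, turn rate) command.

    params = (v_off, w_off, v_on, w_on): one command pair per sensor state.
    """
    v_off, w_off, v_on, w_on = params
    return (v_on, w_on) if sensor_on else (v_off, w_off)


def step(pose, sensor_on, params, dt=0.1):
    """Advance one agent by dt seconds using unicycle kinematics (an assumption)."""
    x, y, theta = pose
    v, w = binary_controller(sensor_on, params)
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + w * dt,
    )


# Hypothetical parameters: turn one way when nothing is sensed, the other way otherwise.
params = (1.0, 0.5, 1.0, -0.5)
pose = step((0.0, 0.0, 0.0), sensor_on=False, params=params)
```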

Retina-inspired Object Motion Segmentation

Aug 18, 2024

Abstract:Dynamic Vision Sensors (DVS) have emerged as a revolutionary technology with a high temporal resolution that far surpasses RGB cameras. DVS technology draws biological inspiration from photoreceptors and the initial retinal synapse. Our research showcases the potential of additional retinal functionalities to extract visual features. We provide a domain-agnostic and efficient algorithm for ego-motion compensation based on Object Motion Sensitivity (OMS), one of the multiple robust features computed within the mammalian retina. We develop a framework based on experimental neuroscience that translates OMS' biological circuitry to a low-overhead algorithm. OMS processes DVS data from dynamic scenes to perform pixel-wise object motion segmentation. Using a real and a synthetic dataset, we highlight OMS' ability to differentiate object motion from ego-motion, bypassing the need for deep networks. This paper introduces a bio-inspired computer vision method that reduces the number of parameters by a factor of 1000 compared to prior works. Our work paves the way for robust, high-speed, and low-bandwidth decision-making for in-sensor computations.

Brain Inspired Probabilistic Occupancy Grid Mapping with Hyperdimensional Computing

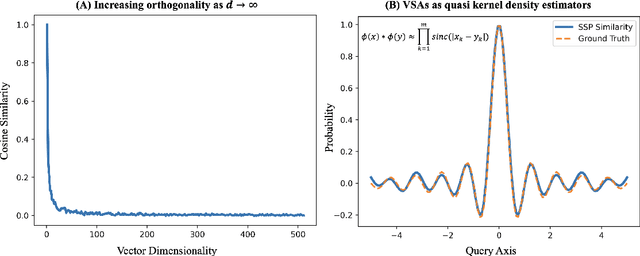

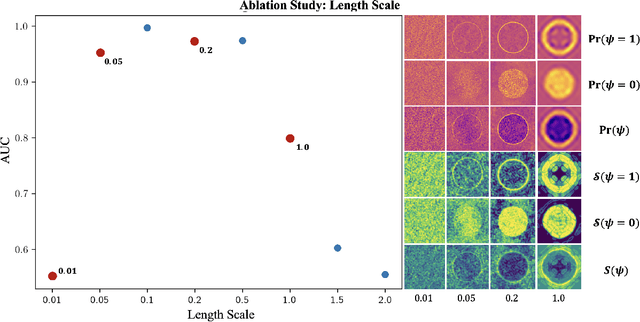

Aug 17, 2024

Abstract:Real-time robotic systems require advanced perception, computation, and action capability. However, the main bottleneck in current autonomous systems is the trade-off between computational capability, energy efficiency, and model determinism. World modeling, a key objective of many robotic systems, commonly uses occupancy grid mapping (OGM) as the first step towards building an end-to-end robotic system with perception, planning, autonomous maneuvering, and decision-making capabilities. OGM divides the environment into discrete cells and assigns probability values to attributes such as occupancy and traversability. Existing methods fall into two categories: traditional methods and neural methods. Traditional methods rely on dense statistical calculations, while neural methods employ deep learning for probabilistic information processing. Recent works formulate a deterministic theory of neural computation at the intersection of cognitive science and vector symbolic architectures. In this study, we propose a Fourier-based hyperdimensional OGM system, VSA-OGM, combined with a novel application of Shannon entropy that retains the interpretability and stability of traditional methods along with the improved computational efficiency of neural methods. Our approach, validated across multiple datasets, achieves similar accuracy to covariant traditional methods while reducing latency by approximately 200x and memory by approximately 1000x. Compared to invariant traditional methods, we see similar accuracy values while reducing latency by 3.7x. Moreover, we achieve 1.5x latency reductions compared to neural methods while eliminating the need for domain-specific model training.
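
For readers unfamiliar with the two ingredients named above, the following minimal sketch shows a plain occupancy grid (one occupancy probability per cell) and the binary Shannon entropy of each cell, H(p) = -p log2 p - (1 - p) log2(1 - p). It is a generic textbook illustration, not the VSA-OGM method or the paper's specific use of entropy. The function names and grid values are hypothetical.

```python
import math


def cell_entropy(p):
    """Shannon entropy in bits of a cell with occupancy probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain cell carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def entropy_map(grid):
    """Apply cell_entropy to every cell of a 2D grid of probabilities."""
    return [[cell_entropy(p) for p in row] for row in grid]


# Hypothetical 2x2 grid: unknown, likely occupied, certainly free, certainly occupied.
grid = [[0.5, 0.9], [0.0, 1.0]]
uncertainty = entropy_map(grid)
```

Entropy peaks at 1 bit for an unknown cell (p = 0.5) and falls to 0 as a cell becomes certainly free or occupied, so it serves as a per-cell measure of how much the map still does not know.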

Hardware-Algorithm Re-engineering of Retinal Circuit for Intelligent Object Motion Segmentation

Jul 31, 2024

Abstract:Recent advances in retinal neuroscience have fueled various hardware and algorithmic efforts to develop retina-inspired solutions for computer vision tasks. In this work, we focus on a fundamental visual feature within the mammalian retina, Object Motion Sensitivity (OMS). Using DVS data from the EV-IMO dataset, we analyze the performance of an algorithmic implementation of OMS circuitry for motion segmentation in the presence of ego-motion. This holistic analysis considers the underlying constraints arising from the hardware circuit implementation. We present novel CMOS circuits that implement OMS functionality inside image sensors, while providing run-time re-configurability for key algorithmic parameters. In-sensor technologies for dynamical environment adaptation are crucial for ensuring high system performance. Finally, we verify the functionality and re-configurability of the proposed CMOS circuit designs through Cadence simulations in 180nm technology. In summary, the presented work lays the foundation for hardware-algorithm re-engineering of known biological circuits to suit application needs.

Transductive Spiking Graph Neural Networks for Loihi

Apr 25, 2024

Abstract:Graph neural networks have emerged as a specialized branch of deep learning, designed to address problems where pairwise relations between objects are crucial. Recent advancements utilize graph convolutional neural networks to extract features within graph structures. Despite promising results, these methods face challenges in real-world applications due to sparse features, resulting in inefficient resource utilization. Recent studies draw inspiration from the mammalian brain and employ spiking neural networks to model and learn graph structures. However, these approaches are limited to traditional von Neumann-based computing systems, which still face hardware inefficiencies. In this study, we present a fully neuromorphic implementation of spiking graph neural networks designed for Loihi 2. We optimize network parameters using Lava Bayesian Optimization, a novel hyperparameter optimization system compatible with neuromorphic computing architectures. We showcase the performance benefits of combining neuromorphic Bayesian optimization with our approach for citation graph classification using fixed-precision spiking neurons. Our results demonstrate the capability of integer-precision, Loihi 2-compatible spiking neural networks in performing citation graph classification with comparable accuracy to existing floating-point implementations.

Zespol: A Lightweight Environment for Training Swarming Agents

Jun 30, 2023

Abstract:Agent-based modeling (ABM) and simulation have emerged as important tools for studying emergent behaviors, especially in the context of swarming algorithms for robotic systems. Despite significant research in this area, there is a lack of standardized simulation environments, which hinders the development and deployment of real-world robotic swarms. To address this issue, we present Zespol, a modular, Python-based simulation environment that enables the development and testing of multi-agent control algorithms. Zespol provides a flexible and extensible sandbox for initial research, with the potential for scaling to real-world applications. We provide a topological overview of the system and detailed descriptions of its plug-and-play elements. We demonstrate the fidelity of Zespol in simulated and real-world robotics by replicating existing works highlighting the simulation-to-real gap with the milling behavior. We plan to leverage Zespol's plug-and-play feature for neuromorphic computing in swarming scenarios, which involves using the modules in Zespol to simulate the behavior of neurons and their connections as synapses. This will enable optimizing and studying the emergent behavior of swarm systems in complex environments. Our goal is to gain a better understanding of the interplay between environmental factors and neural-like computations in swarming systems.

Neuromorphic Bayesian Optimization in Lava

May 18, 2023

Abstract:The ever-increasing demands of computationally expensive and high-dimensional problems require novel optimization methods to find near-optimal solutions in a reasonable amount of time. Bayesian Optimization (BO) stands as one of the best methodologies for learning the underlying relationships within multi-variate problems. This allows users to optimize time-consuming and computationally expensive black-box functions in feasible time frames. Existing BO implementations use traditional von Neumann architectures, in which processing and memory are separate. In this work, we introduce Lava Bayesian Optimization (LavaBO) as a contribution to the open-source Lava Software Framework. LavaBO is the first step towards developing a BO system compatible with heterogeneous, fine-grained parallel, in-memory neuromorphic computing architectures (e.g., Intel's Loihi platform). We evaluate the algorithmic performance of the LavaBO system on multiple problems such as training state-of-the-art spiking neural networks through back-propagation and evolutionary learning. Compared to traditional algorithms (such as grid and random search), we highlight the ability of LavaBO to explore the parameter search space with fewer expensive function evaluations, while discovering the optimal solutions.
