Md Abdullah-Al Kaiser

University of Southern California

A Retina-Inspired Pathway to Real-Time Motion Prediction inside Image Sensors for Extreme-Edge Intelligence

Apr 02, 2025

Abstract:The ability to predict motion in real time is fundamental to many maneuvering activities in animals, particularly those critical for survival, such as attack and escape responses. Given its significance, it is no surprise that motion prediction in animals begins in the retina. Similarly, autonomous systems utilizing computer vision could greatly benefit from the capability to predict motion in real time. Therefore, for computer vision applications, motion prediction should be integrated directly at the camera pixel level. Towards that end, we present a retina-inspired neuromorphic framework capable of performing real-time, energy-efficient motion prediction (MP) directly within camera pixels. Our hardware-algorithm framework, implemented using GlobalFoundries 22nm FDSOI technology, integrates key retinal MP compute blocks, including a biphasic filter, spike adder, nonlinear circuit, and a 2D array for multi-directional motion prediction. Additionally, integrating the sensor and MP compute die using a 3D Cu-Cu hybrid bonding approach improves design compactness by minimizing area usage and simplifying routing complexity. Validated on real-world object stimuli, the model delivers efficient, low-latency MP for decision-making scenarios reliant on predictive visual computation, while consuming only 18.56 pJ/MP in our mixed-signal hardware implementation.

Voltage-Controlled Magnetic Tunnel Junction based ADC-less Global Shutter Processing-in-Pixel for Extreme-Edge Intelligence

Oct 14, 2024

Abstract:The vast amount of data generated by camera sensors has prompted the exploration of energy-efficient processing solutions for deploying computer vision tasks on edge devices. Among the various approaches studied, processing-in-pixel integrates massively parallel analog computational capabilities at the extreme-edge, i.e., within the pixel array, and exhibits enhanced energy and bandwidth efficiency by generating the output activations of the first neural network layer rather than the raw sensory data. In this article, we propose an energy and bandwidth efficient ADC-less processing-in-pixel architecture. This architecture implements an optimized binary activation neural network trained using a Hoyer regularizer for high accuracy on complex vision tasks. In addition, we introduce a global shutter burst memory read scheme that uses a fast and disturb-free read operation, enabled by an innovative use of nanoscale voltage-controlled magnetic tunnel junctions (VC-MTJs). Moreover, we develop an algorithmic framework incorporating device and circuit constraints (characteristic device switching behavior and circuit non-linearity) based on state-of-the-art fabricated VC-MTJ characteristics and extensive circuit simulations using commercial GlobalFoundries 22nm FDX technology. Finally, we evaluate the proposed system's performance on two complex datasets, CIFAR10 and ImageNet, showing improvements in front-end and communication energy efficiency by 8.2x and 8.5x, respectively, and a reduction in bandwidth by 6x compared to traditional computer vision systems, without any significant drop in the test accuracy.
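As a rough illustration of the kind of sparsity measure a Hoyer regularizer is built on, the sketch below computes the Hoyer-square ratio, (sum of absolute values)^2 divided by the sum of squares. This is an assumption about the general form only: the abstract does not say which Hoyer variant is used or how it enters the training loss, and the function name `hoyer_square` is hypothetical.

```python
import numpy as np

def hoyer_square(x, eps=1e-12):
    """Hoyer-square ratio: (sum |x|)^2 / sum x^2.

    The value is close to 1 when a single entry is nonzero and equals n
    when all n entries share the same magnitude, so a smaller value
    means a sparser vector. `eps` guards against division by zero.
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    l1 = np.abs(x).sum()          # L1 norm
    l2_sq = np.square(x).sum()    # squared L2 norm
    return (l1 * l1) / (l2_sq + eps)

# Illustrative inputs (not taken from the paper).
sparse = np.array([0.0, 0.0, 3.0, 0.0])
dense = np.array([1.0, 1.0, 1.0, 1.0])
print(hoyer_square(sparse), hoyer_square(dense))
```

Adding a term like this to a training loss penalizes dense activations, which is one plausible reason such a regularizer pairs well with binary activations; the actual training recipe is described in the paper itself.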

Energy-Efficient & Real-Time Computer Vision with Intelligent Skipping via Reconfigurable CMOS Image Sensors

Sep 25, 2024

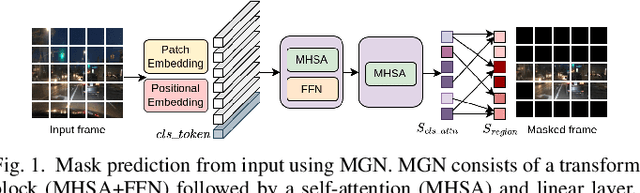

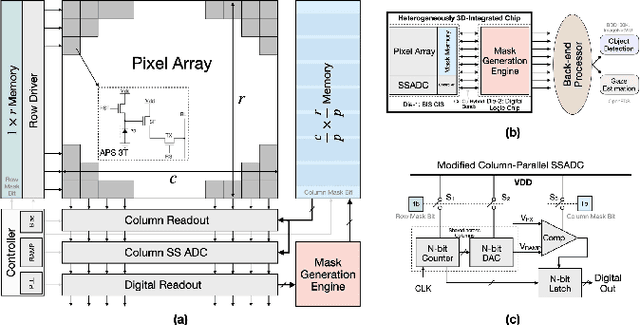

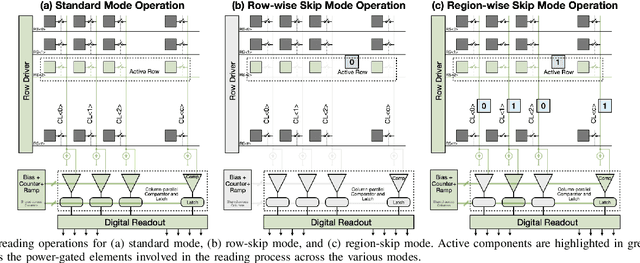

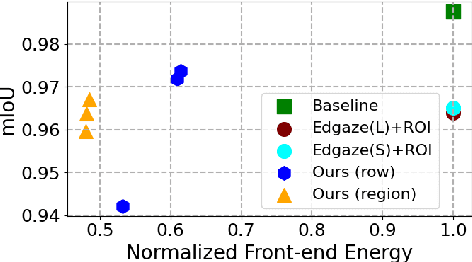

Abstract:Current video-based computer vision (CV) applications typically suffer from high energy consumption due to reading and processing all pixels in a frame, regardless of their significance. While previous works have attempted to reduce this energy by skipping input patches or pixels and using feedback from the end task to guide the skipping algorithm, the skipping is not performed during the sensor read phase. As a result, these methods cannot optimize the front-end sensor energy. Moreover, they may not be suitable for real-time applications due to the long latency of modern CV networks that are deployed in the back-end. To address this challenge, this paper presents a custom-designed reconfigurable CMOS image sensor (CIS) system that improves energy efficiency by selectively skipping uneventful regions or rows within a frame during the sensor's readout phase, and the subsequent analog-to-digital conversion (ADC) phase. A novel masking algorithm intelligently directs the skipping process in real-time, optimizing both the front-end sensor and back-end neural networks for applications including autonomous driving and augmented/virtual reality (AR/VR). Our system can also operate in standard mode without skipping, depending on application needs. We evaluate our hardware-algorithm co-design framework on object detection based on BDD100K and ImageNetVID, and gaze estimation based on OpenEDS, achieving up to 53% reduction in front-end sensor energy while maintaining state-of-the-art (SOTA) accuracy.
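The idea of skipping uneventful rows at readout can be sketched in software as follows. This is only an illustration: simple frame differencing stands in for the paper's masking algorithm, which the abstract does not describe, and the function names and the threshold are hypothetical.

```python
import numpy as np

def row_mask_from_difference(prev_frame, curr_frame, threshold):
    """Mark a row as eventful if any pixel changed by more than threshold.

    Frame differencing is an assumed stand-in, not the paper's method.
    """
    diff = np.abs(curr_frame.astype(np.int32) - prev_frame.astype(np.int32))
    return diff.max(axis=1) > threshold  # one boolean per row

def read_rows(frame, row_mask):
    """Emulate a readout that only converts the rows selected by the mask."""
    out = np.zeros_like(frame)
    out[row_mask] = frame[row_mask]  # skipped rows stay at zero
    return out

def skipped_fraction(row_mask):
    """Fraction of rows whose readout and ADC conversion would be skipped."""
    return 1.0 - row_mask.mean()

# Illustrative 4x4 frames: only row 1 changes between frames.
prev = np.zeros((4, 4), dtype=np.uint8)
curr = prev.copy()
curr[1, 2] = 50

mask = row_mask_from_difference(prev, curr, threshold=10)
print(mask, skipped_fraction(mask))
```

In this toy case three of the four rows are skipped. The front-end energy saved on real hardware depends on the sensor design and is not modeled here.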

Retina-inspired Object Motion Segmentation

Aug 18, 2024

Abstract:Dynamic Vision Sensors (DVS) have emerged as a revolutionary technology with a high temporal resolution that far surpasses RGB cameras. DVS technology draws biological inspiration from photoreceptors and the initial retinal synapse. Our research showcases the potential of additional retinal functionalities to extract visual features. We provide a domain-agnostic and efficient algorithm for ego-motion compensation based on Object Motion Sensitivity (OMS), one of the multiple robust features computed within the mammalian retina. We develop a framework based on experimental neuroscience that translates OMS' biological circuitry to a low-overhead algorithm. OMS processes DVS data from dynamic scenes to perform pixel-wise object motion segmentation. Using a real and a synthetic dataset, we highlight OMS' ability to differentiate object motion from ego-motion, bypassing the need for deep networks. This paper introduces a bio-inspired computer vision method that dramatically reduces the number of parameters by a factor of 1000 compared to prior works. Our work paves the way for robust, high-speed, and low-bandwidth decision-making for in-sensor computations.

Hardware-Algorithm Re-engineering of Retinal Circuit for Intelligent Object Motion Segmentation

Jul 31, 2024

Abstract:Recent advances in retinal neuroscience have fueled various hardware and algorithmic efforts to develop retina-inspired solutions for computer vision tasks. In this work, we focus on a fundamental visual feature within the mammalian retina, Object Motion Sensitivity (OMS). Using DVS data from the EV-IMO dataset, we analyze the performance of an algorithmic implementation of OMS circuitry for motion segmentation in the presence of ego-motion. This holistic analysis considers the underlying constraints arising from the hardware circuit implementation. We present novel CMOS circuits that implement OMS functionality inside image sensors, while providing run-time re-configurability for key algorithmic parameters. In-sensor technologies for dynamical environment adaptation are crucial for ensuring high system performance. Finally, we verify the functionality and re-configurability of the proposed CMOS circuit designs through Cadence simulations in 180nm technology. In summary, the presented work lays the foundation for hardware-algorithm re-engineering of known biological circuits to suit application needs.

Toward High Performance, Programmable Extreme-Edge Intelligence for Neuromorphic Vision Sensors utilizing Magnetic Domain Wall Motion-based MTJ

Feb 23, 2024

Abstract:The desire to empower resource-limited edge devices with computer vision (CV) must overcome the high energy consumption of collecting and processing vast sensory data. To address the challenge, this work proposes an energy-efficient non-von-Neumann in-pixel processing solution for neuromorphic vision sensors employing emerging (X) magnetic domain wall magnetic tunnel junction (MDWMTJ) for the first time, in conjunction with CMOS-based neuromorphic pixels. Our hybrid CMOS+X approach performs in-situ massively parallel asynchronous analog convolution, exhibiting low power consumption and high accuracy across various CV applications by leveraging the non-volatility and programmability of the MDWMTJ. Moreover, our developed device-circuit-algorithm co-design framework captures device constraints (low tunnel-magnetoresistance, low dynamic range) and circuit constraints (non-linearity, process variation, area consideration) based on Monte Carlo simulations and device parameters utilizing GF22nm FD-SOI technology. Our experimental results suggest we can achieve an average 45.3% reduction in backend-processor energy while maintaining front-end energy similar to the state of the art and achieving high accuracies of 79.17% and 95.99% on the DVS-CIFAR10 and IBM DVS128-Gesture datasets, respectively.

Technology-Circuit-Algorithm Tri-Design for Processing-in-Pixel-in-Memory (P2M)

Apr 06, 2023

Abstract:The massive amounts of data generated by camera sensors motivate data processing inside pixel arrays, i.e., at the extreme-edge. Several critical developments have fueled recent interest in the processing-in-pixel-in-memory paradigm for a wide range of visual machine intelligence tasks, including (1) advances in 3D integration technology to enable complex processing inside each pixel in a 3D integrated manner while maintaining pixel density, (2) analog processing circuit techniques for massively parallel low-energy in-pixel computations, and (3) algorithmic techniques to mitigate non-idealities associated with analog processing through hardware-aware training schemes. This article presents a comprehensive technology-circuit-algorithm landscape that connects technology capabilities, circuit design strategies, and algorithmic optimizations to power, performance, area, bandwidth reduction, and application-level accuracy metrics. We present our results using a comprehensive co-design framework incorporating hardware and algorithmic optimizations for various complex real-life visual intelligence tasks mapped onto our P2M paradigm.

Object Motion Sensitivity: A Bio-inspired Solution to the Ego-motion Problem for Event-based Cameras

Mar 27, 2023

Abstract:Neuromorphic (event-based) image sensors draw inspiration from the human retina to create an electronic device that can process visual stimuli in a way that closely resembles its biological counterpart. These sensors process information very differently from traditional RGB sensors. Specifically, the sensory information generated by event-based image sensors is orders of magnitude sparser than that of RGB sensors. The first generation of neuromorphic image sensors, the Dynamic Vision Sensor (DVS), is inspired by the computations confined to the photoreceptors and the first retinal synapse. In this work, we highlight the capability of the second generation of neuromorphic image sensors, Integrated Retinal Functionality in CMOS Image Sensors (IRIS), which aims to mimic full retinal computations from photoreceptors to the output of the retina (retinal ganglion cells) for targeted feature-extraction. The feature of choice in this work is Object Motion Sensitivity (OMS), which is processed locally in the IRIS sensor. We study the capability of OMS in solving the ego-motion problem of event-based cameras. Our results show that OMS can accomplish standard computer vision tasks with similar efficiency to conventional RGB and DVS solutions but offers drastic bandwidth reduction. This cuts the wireless and computing power budgets and opens up vast opportunities in high-speed, robust, energy-efficient, and low-bandwidth real-time decision making.

Neuromorphic-P2M: Processing-in-Pixel-in-Memory Paradigm for Neuromorphic Image Sensors

Jan 22, 2023

Abstract:Edge devices equipped with computer vision must deal with vast amounts of sensory data with limited computing resources. Hence, researchers have been exploring different energy-efficient solutions such as near-sensor processing, in-sensor processing, and in-pixel processing, bringing the computation closer to the sensor. In particular, in-pixel processing embeds the computation capabilities inside the pixel array and achieves high energy efficiency by generating low-level features instead of the raw data stream from CMOS image sensors. Many different in-pixel processing techniques and approaches have been demonstrated on conventional frame-based CMOS imagers; however, the processing-in-pixel approach for neuromorphic vision sensors has not been explored so far. In this work, we propose, for the first time, an asynchronous non-von-Neumann analog processing-in-pixel paradigm to perform convolution operations by integrating in-situ multi-bit multi-channel convolution inside the pixel array, performing analog multiply and accumulate (MAC) operations that consume significantly less energy than their digital MAC alternative. To make this approach viable, we incorporate the circuit's non-ideality, leakage, and process variations into a novel hardware-algorithm co-design framework that leverages extensive HSpice simulations of our proposed circuit using the GF22nm FD-SOI technology node. We verified our framework on state-of-the-art neuromorphic vision sensor datasets and show that, on the IBM DVS128-Gesture dataset, our solution consumes ~2x lower backend-processor energy than the state of the art at nearly the same front-end (sensor) energy, while maintaining a high test accuracy of 88.36%.

In-Sensor & Neuromorphic Computing are all you need for Energy Efficient Computer Vision

Dec 21, 2022

Abstract:Due to the high activation sparsity and use of accumulates (AC) instead of expensive multiply-and-accumulates (MAC), neuromorphic spiking neural networks (SNNs) have emerged as a promising low-power alternative to traditional DNNs for several computer vision (CV) applications. However, most existing SNNs require multiple time steps for acceptable inference accuracy, hindering real-time deployment and increasing spiking activity and, consequently, energy consumption. Recent works proposed direct encoding that directly feeds the analog pixel values in the first layer of the SNN in order to significantly reduce the number of time steps. Although the overhead for the first layer MACs with direct encoding is negligible for deep SNNs and the CV processing is efficient using SNNs, the data transfer between the image sensors and the downstream processing costs significant bandwidth and may dominate the total energy. To mitigate this concern, we propose an in-sensor computing hardware-software co-design framework for SNNs targeting image recognition tasks. Our approach reduces the bandwidth between sensing and processing by 12-96x and the resulting total energy by 2.32x compared to traditional CV processing, with a 3.8% reduction in accuracy on ImageNet.
