Akhilesh Jaiswal

University of Southern California

A Pathway to Near Tissue Computing through Processing-in-CTIA Pixels for Biomedical Applications

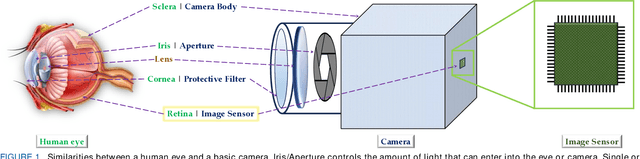

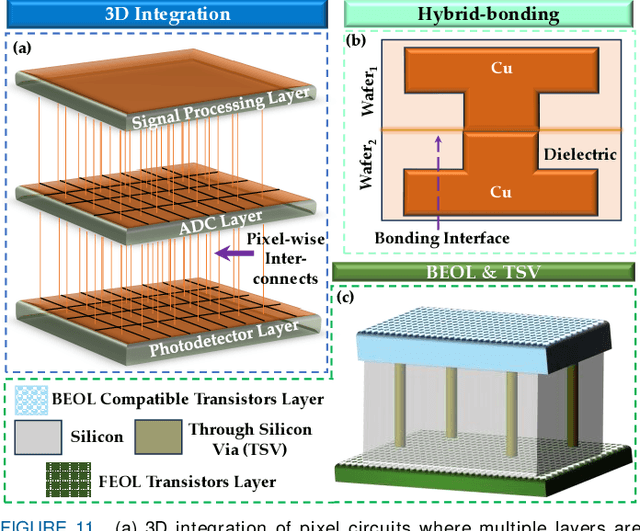

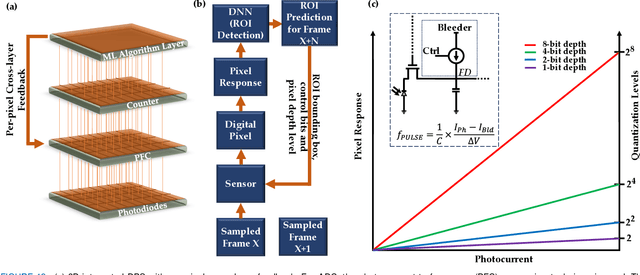

Mar 21, 2025

Abstract:Near-tissue computing requires sensor-level processing of high-resolution images, essential for real-time biomedical diagnostics and surgical guidance. To address this need, we introduce a novel Capacitive Transimpedance Amplifier-based In-Pixel Computing (CTIA-IPC) architecture. Our design leverages CTIA pixels, which are widely used for biomedical imaging owing to their excellent linearity, low noise, and robust operation under low-light conditions. We augment CTIA pixels with IPC to enable precise deep learning computations, including multi-channel, multi-bit convolution operations, along with integrated batch normalization (BN) and Rectified Linear Unit (ReLU) functionalities in the peripheral ADCs (Analog-to-Digital Converters). This design improves the linearity of Multiply and Accumulate (MAC) operations while enhancing computational efficiency. Leveraging 3D integration to embed pixel circuitry and weight storage, CTIA-IPC maintains pixel density comparable to standard CTIA designs. Moreover, our algorithm-circuit co-design approach enables efficient real-time diagnostics and AI-driven medical analysis. Evaluated on the EndoVis tissue dataset (1280x1024), CTIA-IPC achieves an approximately 12x reduction in data bandwidth, yielding segmentation IoUs of 75.91% (parts) and 28.58% (instrument), a minimal accuracy reduction (1.3%-2.5%) compared to baseline methods. Achieving 1.98 GOPS throughput and 3.39 GOPS/W efficiency, our CTIA-IPC architecture offers a promising computational framework tailored specifically for biomedical near-tissue computing.
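To make the "MAC followed by BN and ReLU" pipeline in the abstract above concrete, the following is a minimal software sketch, not the CTIA-IPC circuit itself. It assumes the standard inference-time identity that batch normalization collapses into a per-channel scale and offset, which is one plausible reason it can be absorbed into a readout stage. The function names, array shapes, and `eps` value are illustrative assumptions and do not come from the paper.

```python
import numpy as np

def fold_batch_norm(gamma, beta, mean, var, eps=1e-5):
    """Collapse inference-time BN into a per-channel scale and offset.

    BN(x) = gamma * (x - mean) / sqrt(var + eps) + beta = scale * x + offset
    """
    scale = gamma / np.sqrt(var + eps)
    offset = beta - scale * mean
    return scale, offset

def mac_bn_relu(patch, weights, scale, offset):
    """One convolution output per channel for a single flattened patch.

    patch:   shape (k*k*c_in,), flattened pixel neighbourhood (hypothetical layout)
    weights: shape (c_out, k*k*c_in), one flattened kernel per output channel
    """
    mac = weights @ patch                        # multiply-and-accumulate
    return np.maximum(scale * mac + offset, 0)   # folded BN, then ReLU
```

In this sketch the BN statistics are fixed constants, so the per-channel work after the MAC is one multiply, one add, and one clamp at zero.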

Retina-inspired Object Motion Segmentation

Aug 18, 2024

Abstract:Dynamic Vision Sensors (DVS) have emerged as a revolutionary technology with a high temporal resolution that far surpasses RGB cameras. DVS technology draws biological inspiration from photoreceptors and the initial retinal synapse. Our research showcases the potential of additional retinal functionalities to extract visual features. We provide a domain-agnostic and efficient algorithm for ego-motion compensation based on Object Motion Sensitivity (OMS), one of the multiple robust features computed within the mammalian retina. We develop a framework based on experimental neuroscience that translates OMS' biological circuitry to a low-overhead algorithm. OMS processes DVS data from dynamic scenes to perform pixel-wise object motion segmentation. Using a real and a synthetic dataset, we highlight OMS' ability to differentiate object motion from ego-motion, bypassing the need for deep networks. This paper introduces a bio-inspired computer vision method that dramatically reduces the number of parameters by a factor of 1000 compared to prior works. Our work paves the way for robust, high-speed, and low-bandwidth decision-making for in-sensor computations.
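The abstract above does not spell out the OMS algorithm, so the following is only a rough sketch of one common reading of object motion sensitivity: a pixel is flagged when event activity in a small center window differs enough from activity in a larger surround window, so that uniform (ego-motion-like) activity cancels out. The window radii, the threshold, and the helper names `box_mean` and `oms_mask` are assumptions chosen for illustration, not the authors' parameters.

```python
import numpy as np

def box_mean(frame, radius):
    """Mean over a (2*radius+1)^2 window, with edge values replicated at borders."""
    k = 2 * radius + 1
    h, w = frame.shape
    padded = np.pad(frame, radius, mode="edge")
    # Summed-area table with a leading zero row and column.
    sat = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(0).cumsum(1)
    total = (sat[k:k + h, k:k + w] - sat[:h, k:k + w]
             - sat[k:k + h, :w] + sat[:h, :w])
    return total / (k * k)

def oms_mask(event_frame, center_radius=1, surround_radius=4, threshold=0.2):
    """Flag pixels whose local event activity exceeds the surround activity."""
    activity = np.abs(np.asarray(event_frame, dtype=float))
    center = box_mean(activity, center_radius)
    surround = box_mean(activity, surround_radius)
    return (center - surround) > threshold
```

With these assumed settings, a frame of uniform activity produces an empty mask, while a small isolated patch of events is flagged. The only tunable quantities are the two radii and the threshold, which is in the spirit of the low parameter count the abstract emphasizes.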

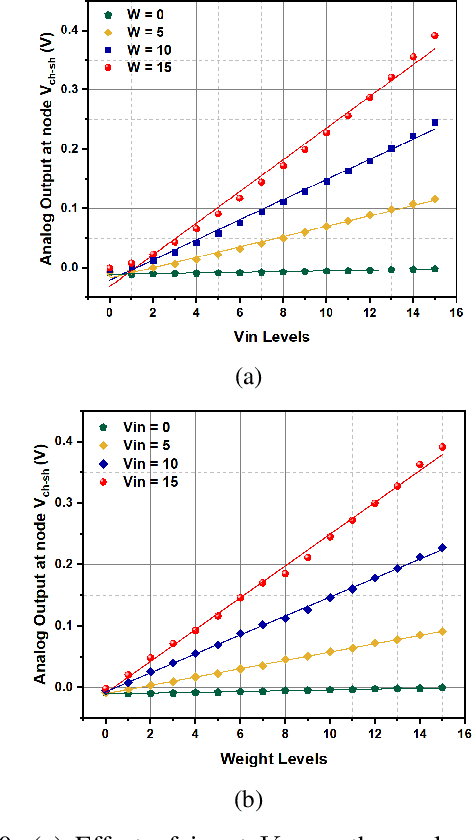

FPCA: Field-Programmable Pixel Convolutional Array for Extreme-Edge Intelligence

Aug 03, 2024

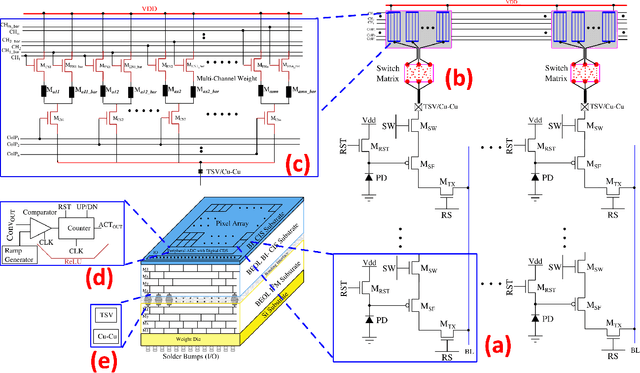

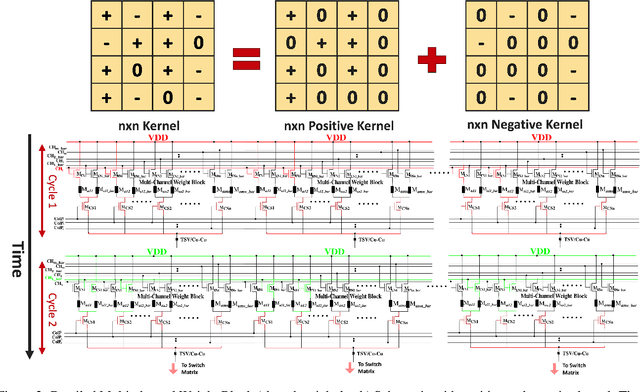

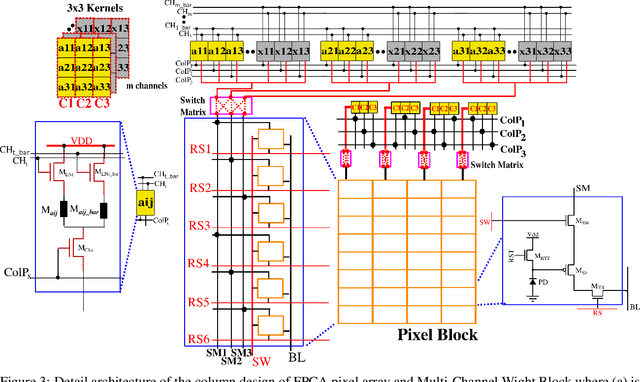

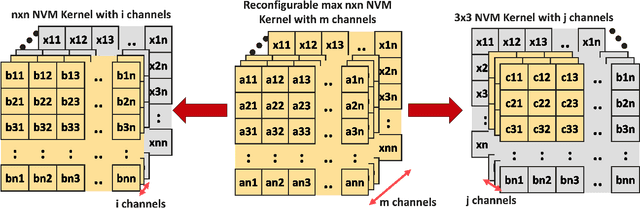

Abstract:The rapid advancement of neural network applications necessitates hardware that not only accelerates computation but also adapts efficiently to dynamic processing requirements. While processing-in-pixel has emerged as a promising solution to overcome the bottlenecks of traditional architectures at the extreme edge, existing implementations face limitations in reconfigurability and scalability due to their static nature and inefficient area usage. Addressing these challenges, we present a novel architecture that significantly enhances the capabilities of processing-in-pixel for convolutional neural networks. Our design integrates non-volatile memory (NVM) with a novel unit pixel circuit design, enabling dynamic reconfiguration of synaptic weights, kernel size, channel size and stride size, and thus offering unprecedented flexibility and adaptability. By using a separate die for the pixel circuit and synaptic weight storage, our circuit achieves a substantial reduction in the required area per pixel, thereby increasing the density and scalability of the pixel array. Simulation results demonstrate the dot product operations of the circuit and the non-linearity of its analog output, and we propose a novel bucket-select curvefit model to capture this non-linearity. This work not only addresses the limitations of current in-pixel computing approaches but also opens new avenues for developing more efficient, flexible, and scalable neural network hardware, paving the way for advanced AI applications.

Hardware-Algorithm Re-engineering of Retinal Circuit for Intelligent Object Motion Segmentation

Jul 31, 2024

Abstract:Recent advances in retinal neuroscience have fueled various hardware and algorithmic efforts to develop retina-inspired solutions for computer vision tasks. In this work, we focus on a fundamental visual feature within the mammalian retina, Object Motion Sensitivity (OMS). Using DVS data from the EV-IMO dataset, we analyze the performance of an algorithmic implementation of OMS circuitry for motion segmentation in the presence of ego-motion. This holistic analysis considers the underlying constraints arising from the hardware circuit implementation. We present novel CMOS circuits that implement OMS functionality inside image sensors, while providing run-time re-configurability for key algorithmic parameters. In-sensor technologies for dynamical environment adaptation are crucial for ensuring high system performance. Finally, we verify the functionality and re-configurability of the proposed CMOS circuit designs through Cadence simulations in 180nm technology. In summary, the presented work lays the foundation for hardware-algorithm re-engineering of known biological circuits to suit application needs.

A Review on Digital Pixel Sensors

Feb 07, 2024

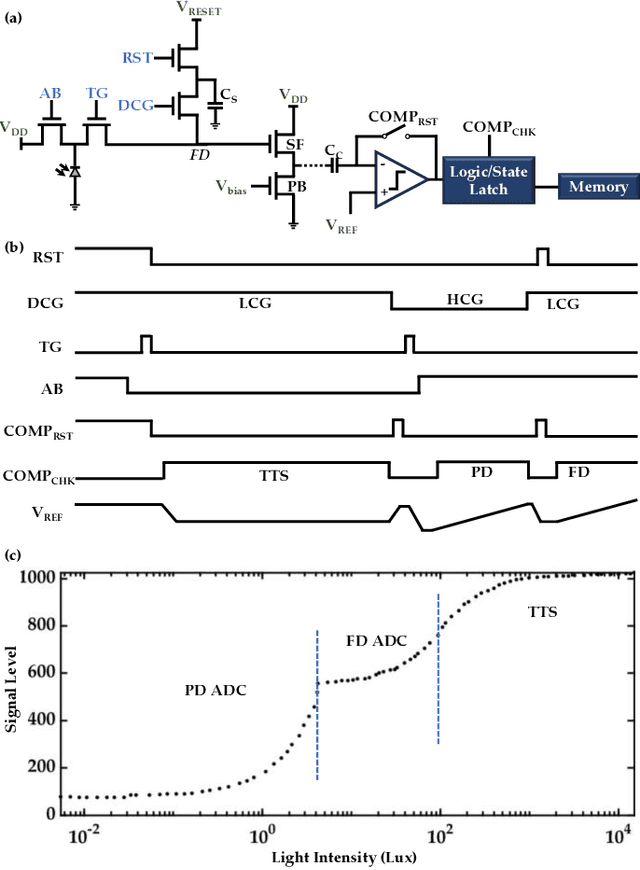

Abstract:The digital pixel sensor (DPS) has evolved as a pivotal component in modern imaging systems and has the potential to revolutionize various fields such as medical imaging, astronomy, surveillance, and IoT devices. Compared to analog pixel sensors, the DPS offers high speed and good image quality. However, the intrinsic complexity introduced within each pixel, primarily attributed to the accommodation of the ADC circuit, engenders a substantial increase in the pixel pitch. Unfortunately, such a pronounced escalation in pixel pitch drastically undermines the feasibility of achieving high-density integration, an obstacle that significantly narrows the field of potential applications. Nonetheless, designing compact conversion circuits along with strategic integration of 3D architectural paradigms can be a potential remedy to the prevailing situation. This review article presents a comprehensive overview of the vast area of DPS technology. The operating principles, advantages, and challenges of different types of DPS circuits have been analyzed. We categorize the schemes into several categories based on ADC operation. A comparative study based on different performance metrics has also been showcased for a well-rounded understanding.

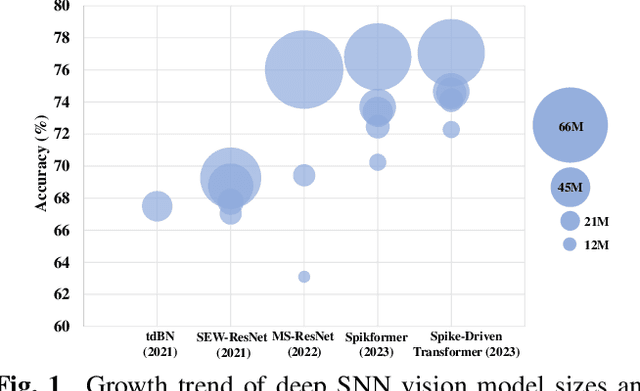

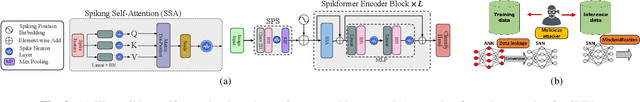

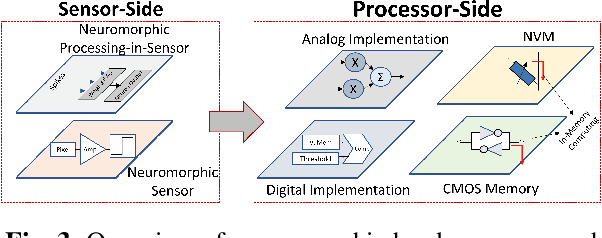

Recent Advances in Scalable Energy-Efficient and Trustworthy Spiking Neural networks: from Algorithms to Technology

Dec 02, 2023

Abstract:Neuromorphic computing and, in particular, spiking neural networks (SNNs) have become an attractive alternative to deep neural networks for a broad range of signal processing applications, processing static and/or temporal inputs from different sensory modalities, including audio and vision sensors. In this paper, we start with a description of recent advances in algorithmic and optimization innovations to efficiently train and scale low-latency and energy-efficient SNNs for complex machine learning applications. We then discuss the recent efforts in algorithm-architecture co-design that explore the inherent trade-offs between achieving high energy-efficiency and low latency while still providing high accuracy and trustworthiness. We then describe the underlying hardware that has been developed to leverage such algorithmic innovations in an efficient way. In particular, we describe a hybrid method to integrate significant portions of the model's computation within both memory components as well as the sensor itself. Finally, we discuss the potential path forward for research in building deployable SNN systems, identifying key challenges in the algorithm-hardware-application co-design space with an emphasis on trustworthiness.

Object Motion Sensitivity: A Bio-inspired Solution to the Ego-motion Problem for Event-based Cameras

Mar 27, 2023

Abstract:Neuromorphic (event-based) image sensors draw inspiration from the human retina to create an electronic device that can process visual stimuli in a way that closely resembles its biological counterpart. These sensors process information in a significantly different way from traditional RGB sensors. Specifically, the sensory information generated by event-based image sensors is orders of magnitude sparser than that of RGB sensors. The first generation of neuromorphic image sensors, the Dynamic Vision Sensor (DVS), is inspired by the computations confined to the photoreceptors and the first retinal synapse. In this work, we highlight the capability of the second generation of neuromorphic image sensors, Integrated Retinal Functionality in CMOS Image Sensors (IRIS), which aims to mimic full retinal computations from photoreceptors to the output of the retina (retinal ganglion cells) for targeted feature extraction. The feature of choice in this work is Object Motion Sensitivity (OMS), which is processed locally in the IRIS sensor. We study the capability of OMS in solving the ego-motion problem of event-based cameras. Our results show that OMS can accomplish standard computer vision tasks with similar efficiency to conventional RGB and DVS solutions but offers drastic bandwidth reduction. This cuts the wireless and computing power budgets and opens up vast opportunities in high-speed, robust, energy-efficient, and low-bandwidth real-time decision making.

IRIS: Integrated Retinal Functionality in Image Sensors

Aug 14, 2022

Abstract:Neuromorphic image sensors draw inspiration from the biological retina to implement visual computations in electronic hardware. Gain control in phototransduction and temporal differentiation at the first retinal synapse inspired the first generation of neuromorphic sensors, but processing in downstream retinal circuits, much of which has been discovered in the past decade, has not been implemented in image sensor technology. We present a technology-circuit co-design solution that implements two motion computations occurring at the output of the retina that could have wide applications for vision-based decision making in dynamic environments. Our simulations on the GlobalFoundries 22nm technology node show that, by taking advantage of the recent advances in semiconductor chip stacking technology, the proposed retina-inspired circuits can be fabricated on image sensing platforms in existing semiconductor foundries. Integrated Retinal Functionality in Image Sensors (IRIS) technology could drive advances in machine vision applications that demand robust, high-speed, energy-efficient and low-bandwidth real-time decision making.

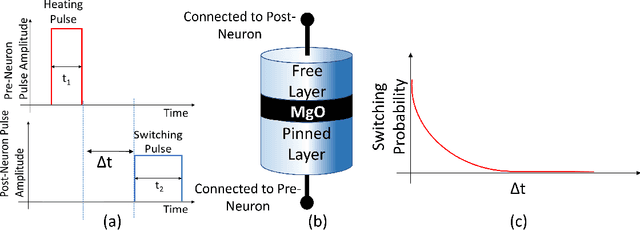

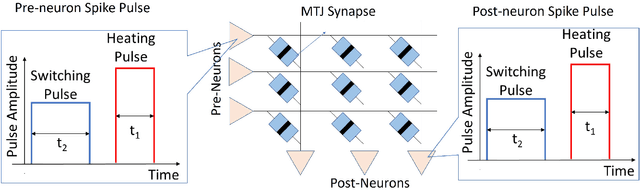

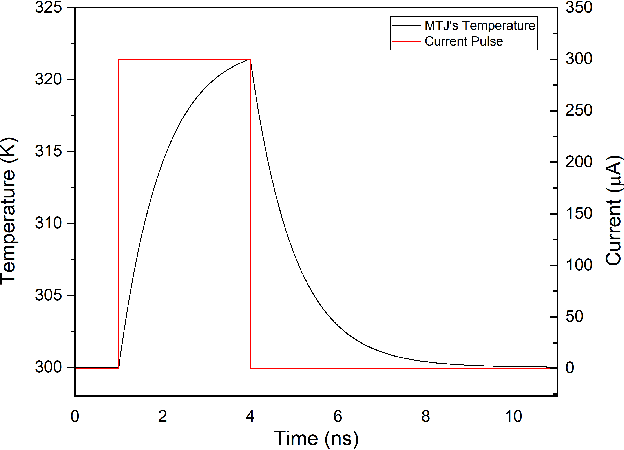

Intrinsic Spike Timing Dependent Plasticity in Stochastic Magnetic Tunnel Junctions Mediated by Heat Dynamics

Aug 28, 2021

Abstract:The quest for highly efficient cognitive computing has led to extensive research interest in the field of neuromorphic computing. Neuromorphic computing aims to mimic the behavior of biological neurons and synapses using solid-state devices and circuits. Among various approaches, emerging non-volatile memory technologies are of special interest for mimicking neuro-synaptic behavior. These devices allow the mapping of the rich dynamics of biological neurons and synapses onto their intrinsic device physics. In this letter, we focus on the Spike Timing Dependent Plasticity (STDP) behavior of biological synapses and propose a method to implement the STDP behavior in Magnetic Tunnel Junction (MTJ) devices. Specifically, we exploit the time-dependent heat dynamics and the response of an MTJ to the instantaneous temperature to imitate the STDP behavior. Our simulations, based on a macro-spin model for magnetization dynamics, show that STDP can be imitated in stochastic magnetic tunnel junctions by applying simple voltage waveforms as the spiking response of pre- and post-neurons across an MTJ device.
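For readers unfamiliar with the behavior being imitated, the sketch below shows the textbook pair-based STDP window, in which the weight change decays exponentially with the time gap between pre- and post-synaptic spikes. It is not the MTJ heat-dynamics mechanism proposed in the letter. The amplitudes and time constants are placeholder values chosen only for illustration.

```python
import math

def stdp_weight_change(delta_t_ms, a_plus=0.01, a_minus=0.012,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP window; delta_t_ms = t_post - t_pre.

    Pre-before-post (delta_t > 0) potentiates the synapse,
    post-before-pre (delta_t < 0) depresses it.
    All parameter values are illustrative assumptions.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)
    return 0.0
```

The update is largest when the two spikes are close together in time and fades toward zero as the gap grows, which is the qualitative shape a device-level implementation would need to reproduce.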

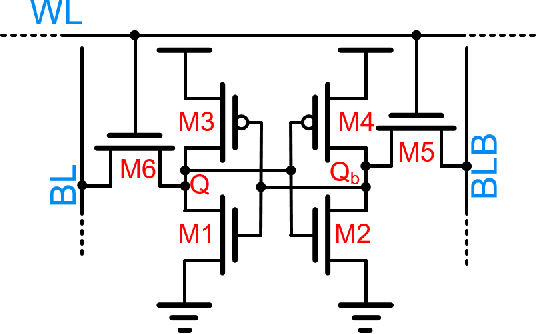

IMAC: In-memory multi-bit Multiplication and ACcumulation in 6T SRAM Array

Mar 27, 2020

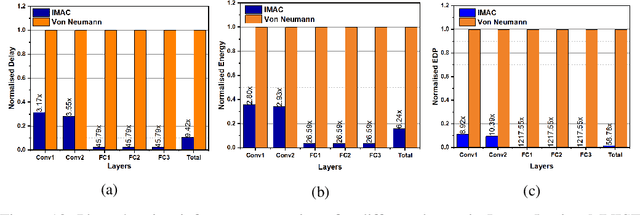

Abstract:'In-memory computing' is being widely explored as a novel computing paradigm to mitigate the well-known memory bottleneck. This emerging paradigm aims at embedding some aspects of computations inside the memory array, thereby avoiding frequent and expensive movement of data between the compute unit and the storage memory. In-memory computing with respect to silicon memories has been widely explored on various memory bit-cells. Embedding computation inside the 6 transistor (6T) SRAM array is of special interest since it is the most widely used on-chip memory. In this paper, we present a novel in-memory multiplication followed by accumulation operation capable of performing parallel dot products within 6T SRAM without any changes to the standard bitcell. We further study the effect of circuit non-idealities and process variations on the accuracy of the LeNet-5 and VGG neural network architectures against the MNIST and CIFAR-10 datasets, respectively. The proposed in-memory dot-product mechanism achieves 88.8% and 99% accuracy for CIFAR-10 and MNIST, respectively. Compared to the standard von Neumann system, the proposed system is 6.24x better in energy consumption and 9.42x better in delay.
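As a purely arithmetic illustration of how a multi-bit dot product can be assembled from single-bit storage cells, the sketch below splits unsigned weights into bit planes and recombines the per-plane partial sums with binary weighting. This is a generic digital decomposition, assumed here for illustration; the paper's actual analog scheme in the 6T SRAM array may differ. The function name and the `weight_bits` parameter are hypothetical.

```python
import numpy as np

def bit_sliced_dot(inputs, weights, weight_bits=4):
    """Dot product computed one weight bit plane at a time.

    Assumes non-negative integer weights that fit in `weight_bits` bits.
    Each bit plane is a 0/1 vector, as a row of single-bit cells would store.
    """
    inputs = np.asarray(inputs, dtype=np.int64)
    weights = np.asarray(weights, dtype=np.int64)
    total = 0
    for bit in range(weight_bits):
        bit_plane = (weights >> bit) & 1      # 0/1 value of this bit per weight
        partial = int(inputs @ bit_plane)     # accumulate inputs where bit is set
        total += partial << bit               # binary-weight the partial sum
    return total
```

The result matches an ordinary integer dot product whenever the weights fit in the assumed bit width, for example `bit_sliced_dot([3, 1, 2], [5, 15, 0])` equals `3*5 + 1*15 + 2*0`.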
