Hamed Poursiami

Izhikevich-Inspired Temporal Dynamics for Enhancing Privacy, Efficiency, and Transferability in Spiking Neural Networks

May 07, 2025

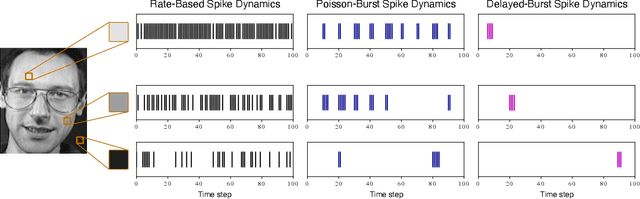

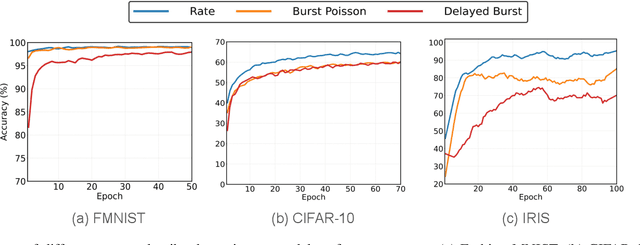

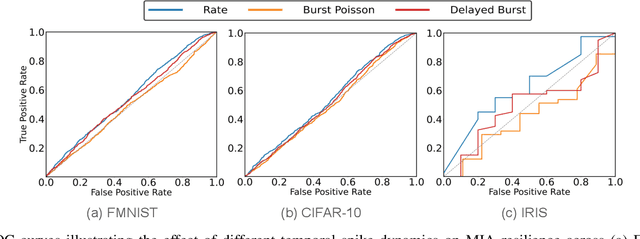

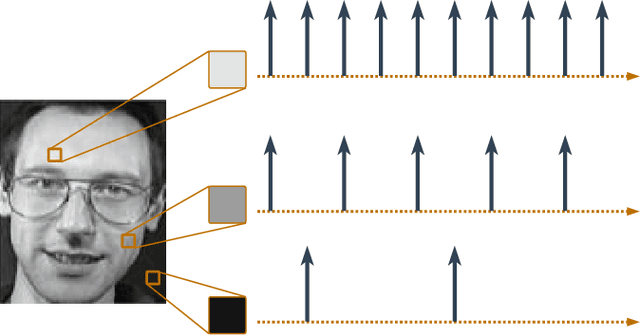

Abstract: Biological neurons exhibit diverse temporal spike patterns, which are believed to support efficient, robust, and adaptive neural information processing. While models such as Izhikevich can replicate a wide range of these firing dynamics, their complexity poses challenges for directly integrating them into scalable spiking neural network (SNN) training pipelines. In this work, we propose two probabilistically driven, input-level temporal spike transformations, Poisson-Burst and Delayed-Burst, that introduce biologically inspired temporal variability directly into standard Leaky Integrate-and-Fire (LIF) neurons. This enables scalable training and systematic evaluation of how spike timing dynamics affect privacy, generalization, and learning performance. Poisson-Burst modulates burst occurrence based on input intensity, while Delayed-Burst encodes input strength through burst onset timing. Through extensive experiments across multiple benchmarks, we demonstrate that Poisson-Burst maintains competitive accuracy and lower resource overhead while exhibiting enhanced privacy robustness against membership inference attacks, whereas Delayed-Burst provides stronger privacy protection at a modest accuracy trade-off. These findings highlight the potential of biologically grounded temporal spike dynamics in improving the privacy, generalization, and biological plausibility of neuromorphic learning systems.
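To make the two encoding ideas concrete, here is a toy sketch of what an intensity-driven burst and an onset-delayed burst could look like for a single input value. The abstract does not give the actual formulation, so everything here is an assumption for illustration: the function names `poisson_burst` and `delayed_burst`, the fixed `burst_len`, the per-step Bernoulli trigger, and the linear mapping from intensity to onset time are all hypothetical and are not taken from the paper.

```python
import random

def poisson_burst(intensity, num_steps, burst_len, rng):
    """Toy intensity-driven bursts (hypothetical, not the paper's definition).

    At each free time step a burst of `burst_len` spikes starts with
    probability `intensity` (expected to lie in [0, 1]).
    """
    train = [0] * num_steps
    t = 0
    while t < num_steps:
        if rng.random() < intensity:
            # Emit a burst, truncated at the end of the time window.
            for k in range(t, min(t + burst_len, num_steps)):
                train[k] = 1
            t += burst_len
        else:
            t += 1
    return train

def delayed_burst(intensity, num_steps, burst_len):
    """Toy onset-coded burst (hypothetical, not the paper's definition).

    A single burst whose onset moves earlier as `intensity` grows;
    assumes 0 <= intensity <= 1 and burst_len <= num_steps.
    """
    onset = round((1.0 - intensity) * (num_steps - burst_len))
    train = [0] * num_steps
    for k in range(onset, onset + burst_len):
        train[k] = 1
    return train

rng = random.Random(0)  # fixed seed so the sketch is repeatable
strong = delayed_burst(1.0, 10, 3)   # burst at the start of the window
weak = delayed_burst(0.0, 10, 3)     # burst at the end of the window
bursty = poisson_burst(0.5, 20, 3, rng)
```

In this sketch a stronger input yields more frequent bursts in the first encoder and an earlier burst in the second, which mirrors the distinction drawn in the abstract without claiming to reproduce the authors' transformations.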

On the Privacy-Preserving Properties of Spiking Neural Networks with Unique Surrogate Gradients and Quantization Levels

Feb 25, 2025

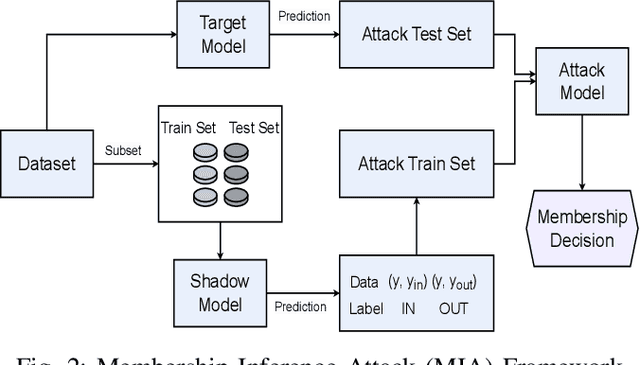

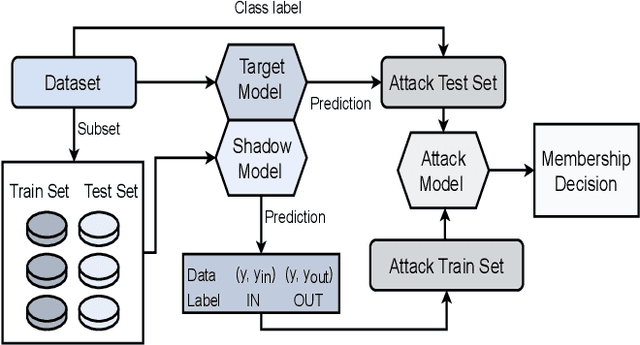

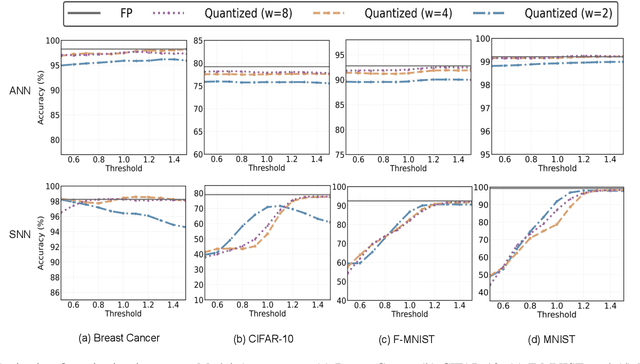

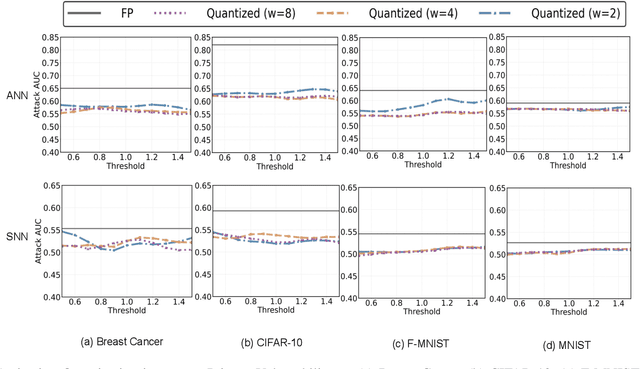

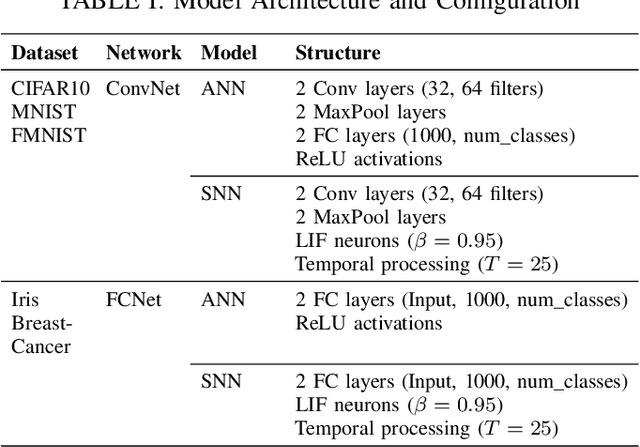

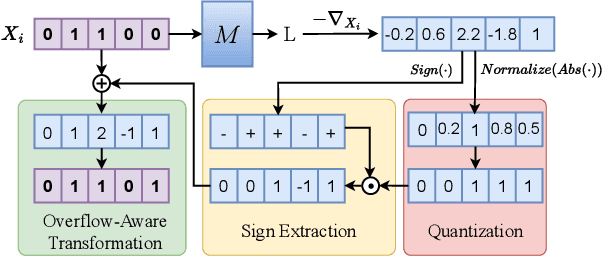

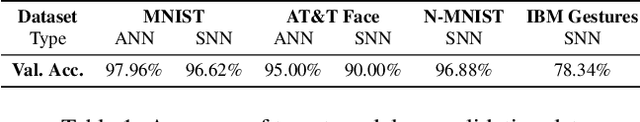

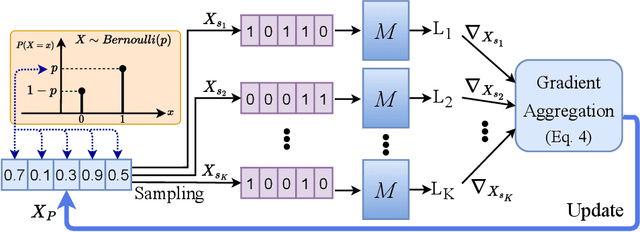

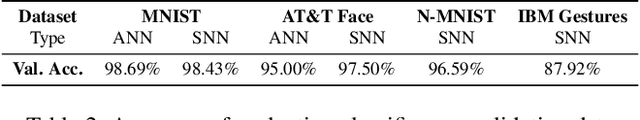

Abstract: As machine learning models increasingly process sensitive data, understanding their vulnerability to privacy attacks is vital. Membership inference attacks (MIAs) exploit model responses to infer whether specific data points were used during training, posing a significant privacy risk. Prior research suggests that spiking neural networks (SNNs), which rely on event-driven computation and discrete spike-based encoding, exhibit greater resilience to MIAs than artificial neural networks (ANNs). This resilience stems from their non-differentiable activations and inherent stochasticity, which obscure the correlation between model responses and individual training samples. To enhance privacy in SNNs, we explore two techniques: quantization and surrogate gradients. Quantization, which reduces precision to limit information leakage, has improved privacy in ANNs. Given SNNs' sparse and irregular activations, quantization may further disrupt the activation patterns exploited by MIAs. We assess the vulnerability of SNNs and ANNs under weight and activation quantization across multiple datasets, using the area under the attack model's receiver operating characteristic curve (ROC AUC) as the metric, where lower values indicate stronger privacy, and evaluate the privacy-accuracy trade-off. Our findings show that quantization enhances privacy in both architectures with minimal performance loss, though full-precision SNNs remain more resilient than quantized ANNs. Additionally, we examine the impact of surrogate gradients on privacy in SNNs. Among five evaluated gradients, spike rate escape provides the best privacy-accuracy trade-off, while arctangent increases vulnerability to MIAs. These results reinforce SNNs' inherent privacy advantages and demonstrate that quantization and surrogate gradient selection significantly influence privacy-accuracy trade-offs in SNNs.
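As a reading aid for the privacy metric, the following minimal sketch computes the ROC AUC of a membership inference attack from per-sample attack scores. It uses the standard pairwise (Mann-Whitney) interpretation of AUC; the function name `mia_auc` and the score values are made up for illustration and do not come from the paper.

```python
def mia_auc(member_scores, nonmember_scores):
    """ROC AUC of an attack that assigns higher scores to suspected members.

    Equals the probability that a randomly chosen training member receives
    a higher score than a randomly chosen non-member (ties count as 0.5).
    """
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))

# Hypothetical attack confidences, for illustration only.
members = [0.9, 0.8, 0.6, 0.55]
nonmembers = [0.7, 0.5, 0.4, 0.3]
auc = mia_auc(members, nonmembers)  # 14 of 16 pairs are ranked correctly
```

An AUC near 0.5 means the attack cannot separate members from non-members better than chance, which is why lower values are read as stronger privacy.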

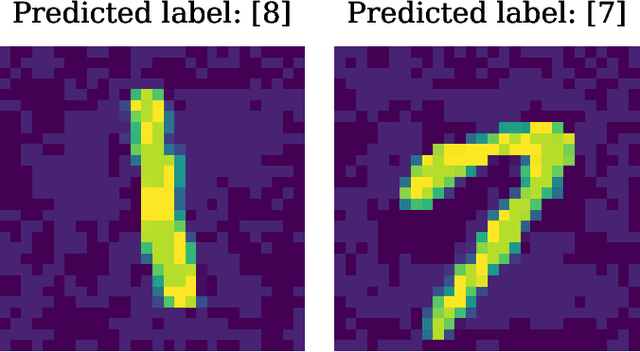

Do Spikes Protect Privacy? Investigating Black-Box Model Inversion Attacks in Spiking Neural Networks

Feb 08, 2025

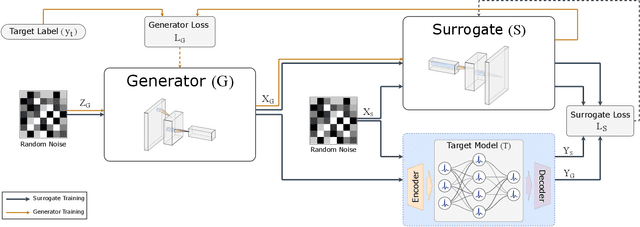

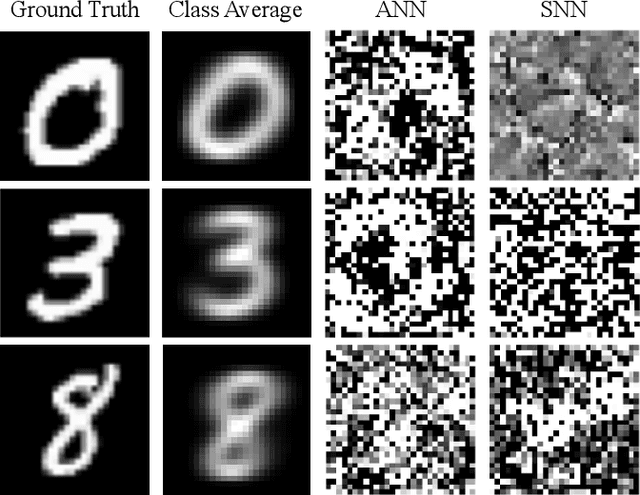

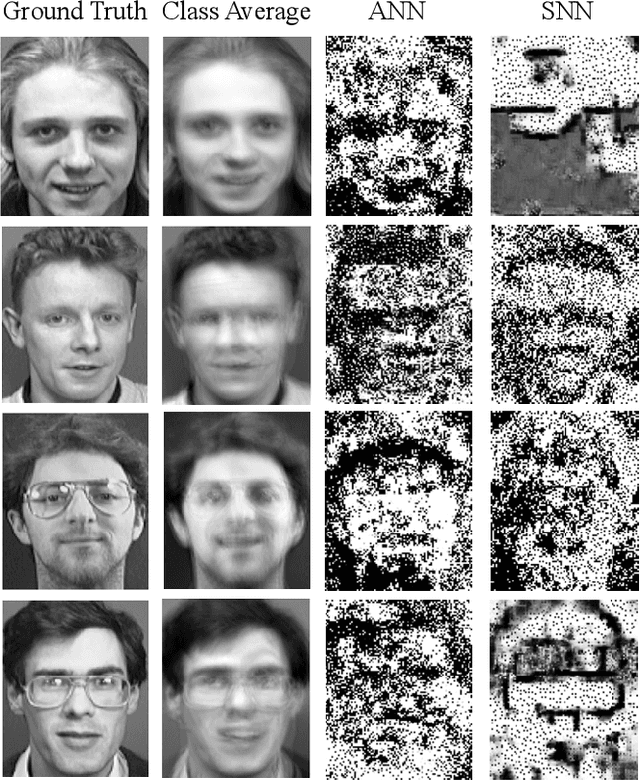

Abstract: As machine learning models become integral to security-sensitive applications, concerns over data leakage from adversarial attacks continue to rise. Model Inversion (MI) attacks pose a significant privacy threat by enabling adversaries to reconstruct training data from model outputs. While MI attacks on Artificial Neural Networks (ANNs) have been widely studied, Spiking Neural Networks (SNNs) remain largely unexplored in this context. Due to their event-driven and discrete computations, SNNs introduce fundamental differences in information processing that may offer inherent resistance to such attacks. A critical yet underexplored aspect of this threat lies in black-box settings, where attackers operate through queries without direct access to model parameters or gradients, which represents a more realistic adversarial scenario in deployed systems. This work presents the first study of black-box MI attacks on SNNs. We adapt a generative adversarial MI framework to the spiking domain by incorporating rate-based encoding for input transformation and decoding mechanisms for output interpretation. Our results show that SNNs exhibit significantly greater resistance to MI attacks than ANNs, as demonstrated by degraded reconstructions, increased instability in attack convergence, and overall reduced attack effectiveness across multiple evaluation metrics. Further analysis suggests that the discrete and temporally distributed nature of SNN decision boundaries disrupts surrogate modeling, limiting the attacker's ability to approximate the target model.
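The rate-based encoding and output decoding mentioned in the abstract can be sketched in a few lines. This is a generic illustration of rate coding (independent Bernoulli spikes per time step, then a spike-count readout), not the specific pipeline used in the paper; the names `rate_encode` and `rate_decode` and the example values are assumptions.

```python
import random

def rate_encode(intensities, num_steps, rng):
    """Turn intensities in [0, 1] into `num_steps` frames of 0/1 spikes.

    Each input fires independently at each step with probability equal
    to its intensity, so brighter inputs spike more often.
    """
    return [[1 if rng.random() < p else 0 for p in intensities]
            for _ in range(num_steps)]

def rate_decode(output_spikes):
    """Sum output spikes over time and return (predicted class, counts)."""
    counts = [sum(column) for column in zip(*output_spikes)]
    return counts.index(max(counts)), counts

rng = random.Random(0)  # fixed seed so the sketch is repeatable
frames = rate_encode([0.0, 1.0, 0.5], num_steps=100, rng=rng)
# Here the encoded frames stand in for the output layer's spikes.
predicted, counts = rate_decode(frames)
```

Because the readout depends only on spike counts accumulated over time, an attacker querying such a model sees a coarse, stochastic signal rather than smooth confidence scores.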

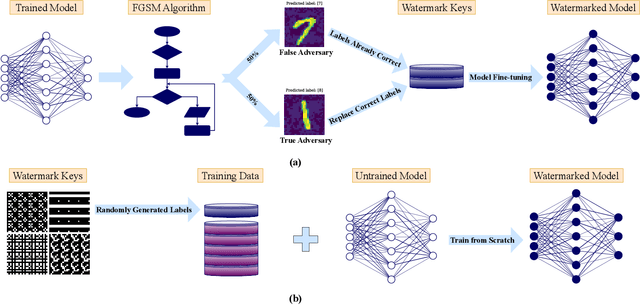

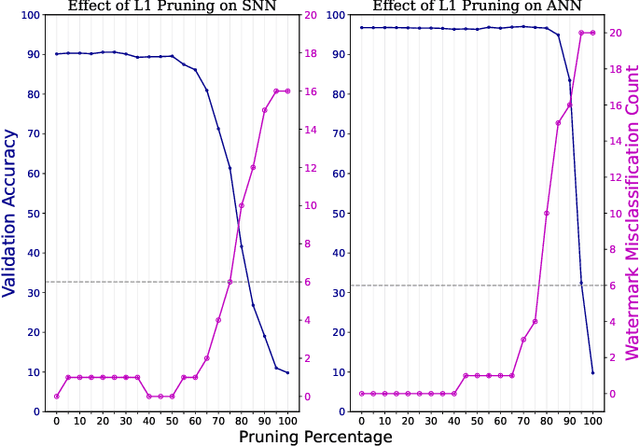

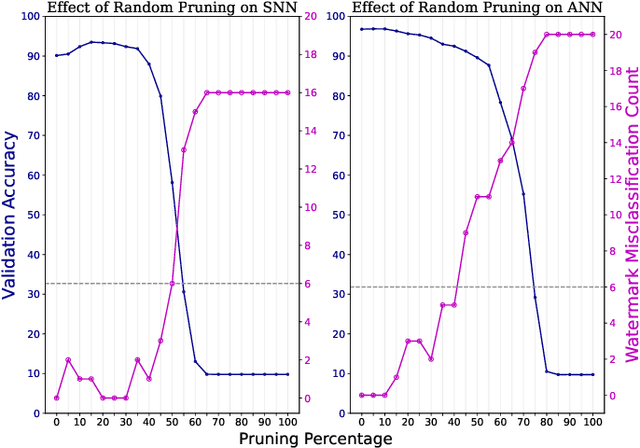

Watermarking Neuromorphic Brains: Intellectual Property Protection in Spiking Neural Networks

May 07, 2024

Abstract: As spiking neural networks (SNNs) gain traction in deploying neuromorphic computing solutions, protecting their intellectual property (IP) has become crucial. Without adequate safeguards, proprietary SNN architectures are at risk of theft, replication, or misuse, which could lead to significant financial losses for the owners. While IP protection techniques have been extensively explored for artificial neural networks (ANNs), their applicability and effectiveness for the unique characteristics of SNNs remain largely unexplored. In this work, we pioneer an investigation into adapting two prominent watermarking approaches, namely fingerprint-based and backdoor-based mechanisms, to secure proprietary SNN architectures. We conduct thorough experiments to evaluate the impact on fidelity, resilience against overwrite threats, and resistance to compression attacks when applying these watermarking techniques to SNNs, drawing comparisons with their ANN counterparts. This study lays the groundwork for developing neuromorphic-aware IP protection strategies tailored to the distinctive dynamics of SNNs.

BrainLeaks: On the Privacy-Preserving Properties of Neuromorphic Architectures against Model Inversion Attacks

Feb 01, 2024

Abstract: With the mainstream integration of machine learning into security-sensitive domains such as healthcare and finance, concerns about data privacy have intensified. Conventional artificial neural networks (ANNs) have been found vulnerable to several attacks that can leak sensitive data. In particular, model inversion (MI) attacks enable the reconstruction of data samples that have been used to train the model. Neuromorphic architectures have emerged as a paradigm shift in neural computing, enabling asynchronous and energy-efficient computation. However, little to no existing work has investigated the privacy of neuromorphic architectures against model inversion. Our study is motivated by the intuition that the non-differentiable aspect of spiking neural networks (SNNs) might result in inherent privacy-preserving properties, especially against gradient-based attacks. To investigate this hypothesis, we propose a thorough exploration of SNNs' privacy-preserving capabilities. Specifically, we develop novel inversion attack strategies that are comprehensively designed to target SNNs, offering a comparative analysis with their conventional ANN counterparts. Our experiments, conducted on diverse event-based and static datasets, demonstrate the effectiveness of the proposed attack strategies and therefore call into question the assumption of inherent privacy preservation in neuromorphic architectures.