Generalizable Reinforcement Learning with Biologically Inspired Hyperdimensional Occupancy Grid Maps for Exploration and Goal-Directed Path Planning


Feb 13, 2025

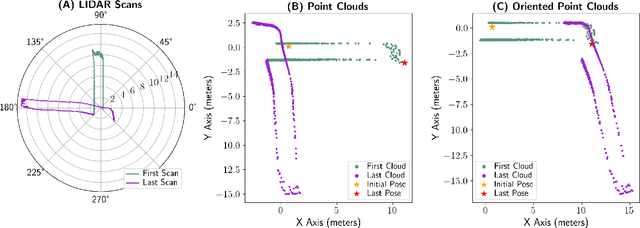

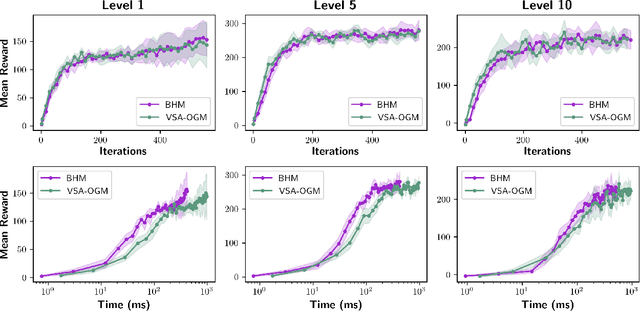

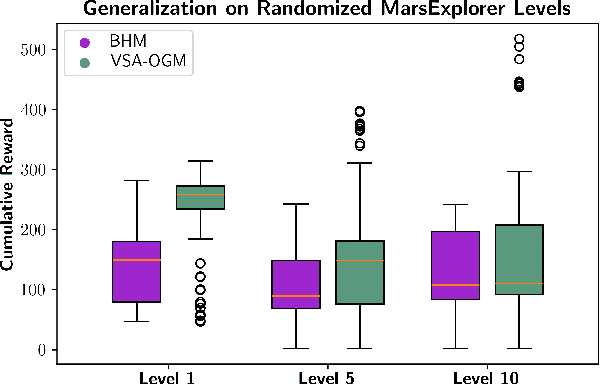

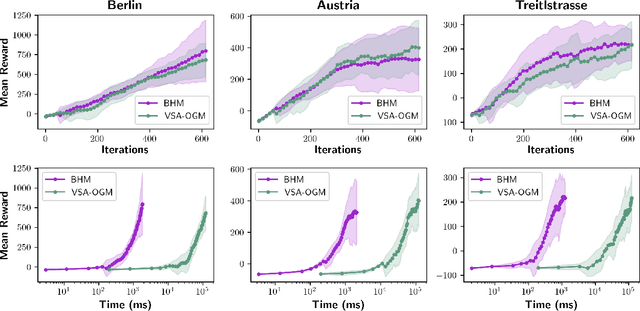

Real-time autonomous systems use multi-layer computational frameworks to perform critical tasks such as perception, goal finding, and path planning. Traditional methods implement perception with occupancy grid mapping (OGM), which segments the environment into discrete cells that each hold a probabilistic estimate of occupancy. This classical approach is well established and provides structured input for downstream processes such as goal-finding and path-planning algorithms. Recent approaches leverage a biologically inspired mathematical framework known as vector symbolic architectures (VSA), also called hyperdimensional computing, to perform probabilistic OGM in hyperdimensional space. This approach, VSA-OGM, is natively compatible with spiking neural networks, which positions it as a potential neuromorphic alternative to conventional OGM. However, for large-scale integration, it is essential to assess how VSA-OGM affects the performance of downstream tasks compared with established OGM methods. This study examines the efficacy of VSA-OGM against a traditional OGM approach, Bayesian Hilbert Maps (BHM), within reinforcement-learning-based goal-finding and path-planning frameworks, across a controlled exploration environment and an autonomous driving scenario inspired by the F1-Tenth challenge. Our results demonstrate that VSA-OGM maintains comparable learning performance across single- and multi-scenario training configurations while improving performance on unseen environments by approximately 47%. These findings highlight the increased generalizability of policy networks trained with VSA-OGM over BHM, reinforcing its potential for real-world deployment in diverse environments.
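To make the classical baseline concrete, the following is a minimal sketch of a textbook log-odds occupancy grid, i.e. the "discretized cells with probabilistic information" that the abstract describes. It is not VSA-OGM or BHM and is not taken from the paper; the class name `OccupancyGrid`, its parameters, and the sensor probabilities `p_hit` and `p_miss` are hypothetical choices made for illustration only.

```python
import numpy as np


def logit(p):
    """Convert a probability to log-odds."""
    return np.log(p / (1.0 - p))


class OccupancyGrid:
    """Textbook log-odds occupancy grid (illustrative; not the paper's method)."""

    def __init__(self, width_m, height_m, resolution_m, p_hit=0.7, p_miss=0.4):
        # p_hit / p_miss are assumed inverse-sensor-model values.
        self.resolution = resolution_m
        rows = int(round(height_m / resolution_m))
        cols = int(round(width_m / resolution_m))
        self.shape = (rows, cols)
        # Log-odds of 0 corresponds to an uninformed prior of p = 0.5.
        self.log_odds = np.zeros(self.shape)
        self.l_hit = logit(p_hit)
        self.l_miss = logit(p_miss)

    def cell_of(self, x, y):
        """Map metric coordinates (x, y) to a (row, col) cell index."""
        return int(y // self.resolution), int(x // self.resolution)

    def update(self, x, y, occupied):
        """Fuse one observation at (x, y) by adding its log-odds evidence."""
        r, c = self.cell_of(x, y)
        if 0 <= r < self.shape[0] and 0 <= c < self.shape[1]:
            self.log_odds[r, c] += self.l_hit if occupied else self.l_miss

    def probabilities(self):
        """Return the per-cell occupancy probabilities."""
        return 1.0 / (1.0 + np.exp(-self.log_odds))


if __name__ == "__main__":
    grid = OccupancyGrid(width_m=2.0, height_m=2.0, resolution_m=0.5)
    grid.update(0.6, 0.6, occupied=True)   # two hits on the same cell
    grid.update(0.6, 0.6, occupied=True)
    grid.update(1.6, 0.1, occupied=False)  # one free-space observation
    print(np.round(grid.probabilities(), 3))
```

A grid of per-cell probabilities like this is the kind of structured input a downstream policy network could consume. According to the abstract, VSA-OGM builds its probabilistic map in hyperdimensional space instead, which this sketch does not attempt to reproduce.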
