Andrew Capodieci

Neya Robotics

Generalizable Reinforcement Learning with Biologically Inspired Hyperdimensional Occupancy Grid Maps for Exploration and Goal-Directed Path Planning

Feb 13, 2025

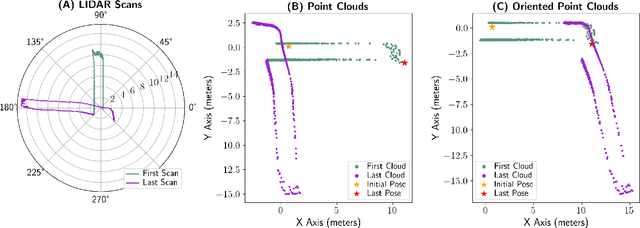

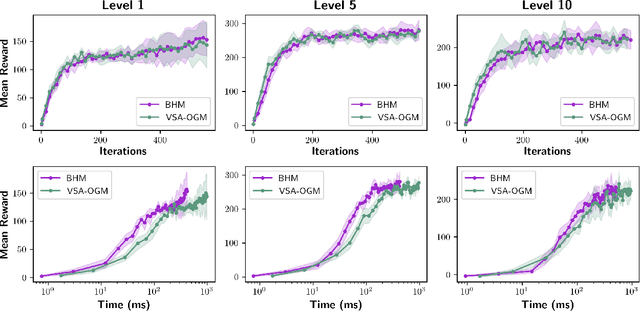

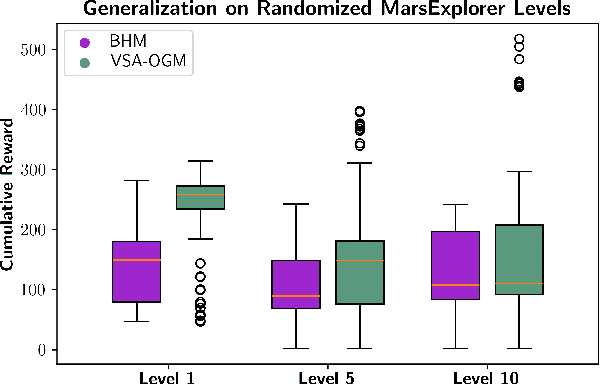

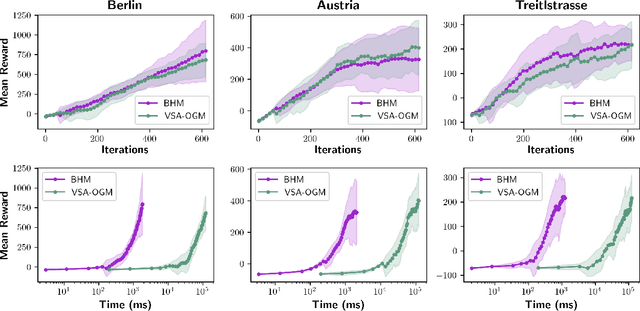

Abstract: Real-time autonomous systems utilize multi-layer computational frameworks to perform critical tasks such as perception, goal finding, and path planning. Traditional methods implement perception using occupancy grid mapping (OGM), segmenting the environment into discretized cells with probabilistic information. This classical approach is well-established and provides a structured input for downstream processes like goal finding and path planning algorithms. Recent approaches leverage a biologically inspired mathematical framework known as vector symbolic architectures (VSA), commonly known as hyperdimensional computing, to perform probabilistic OGM in hyperdimensional space. This approach, VSA-OGM, provides native compatibility with spiking neural networks, positioning VSA-OGM as a potential neuromorphic alternative to conventional OGM. However, for large-scale integration, it is essential to assess the performance implications of VSA-OGM on downstream tasks compared to established OGM methods. This study examines the efficacy of VSA-OGM against a traditional OGM approach, Bayesian Hilbert Maps (BHM), within reinforcement-learning-based goal finding and path planning frameworks, across a controlled exploration environment and an autonomous driving scenario inspired by the F1-Tenth challenge. Our results demonstrate that VSA-OGM maintains comparable learning performance across single and multi-scenario training configurations while improving performance on unseen environments by approximately 47%. These findings highlight the increased generalizability of policy networks trained with VSA-OGM over BHM, reinforcing its potential for real-world deployment in diverse environments.

Brain Inspired Probabilistic Occupancy Grid Mapping with Hyperdimensional Computing

Aug 17, 2024

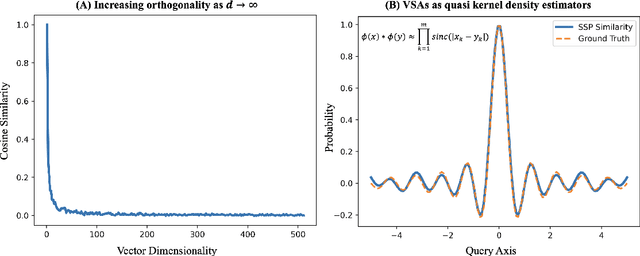

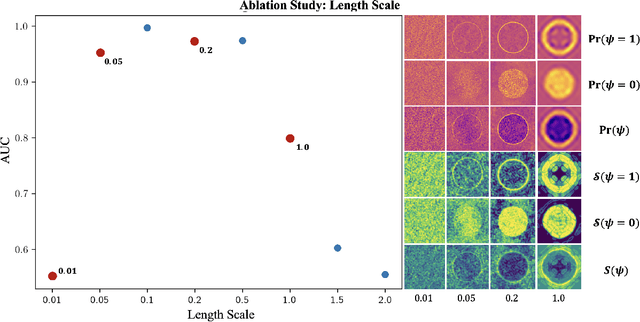

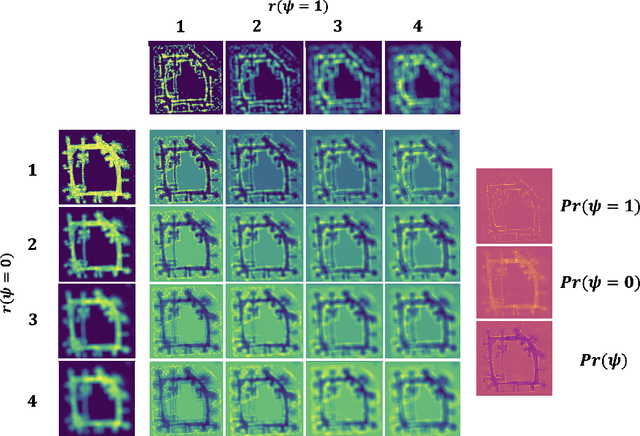

Abstract: Real-time robotic systems require advanced perception, computation, and action capability. However, the main bottleneck in current autonomous systems is the trade-off between computational capability, energy efficiency, and model determinism. World modeling, a key objective of many robotic systems, commonly uses occupancy grid mapping (OGM) as the first step towards building an end-to-end robotic system with perception, planning, autonomous maneuvering, and decision-making capabilities. OGM divides the environment into discrete cells and assigns probability values to attributes such as occupancy and traversability. Existing methods fall into two categories: traditional methods and neural methods. Traditional methods rely on dense statistical calculations, while neural methods employ deep learning for probabilistic information processing. Recent works formulate a deterministic theory of neural computation at the intersection of cognitive science and vector symbolic architectures. In this study, we propose a Fourier-based hyperdimensional OGM system, VSA-OGM, combined with a novel application of Shannon entropy that retains the interpretability and stability of traditional methods along with the improved computational efficiency of neural methods. Our approach, validated across multiple datasets, achieves similar accuracy to covariant traditional methods while reducing latency by approximately 200x and memory by approximately 1000x. Compared to invariant traditional methods, we see similar accuracy values while reducing latency by 3.7x. Moreover, we achieve 1.5x latency reductions compared to neural methods while eliminating the need for domain-specific model training.

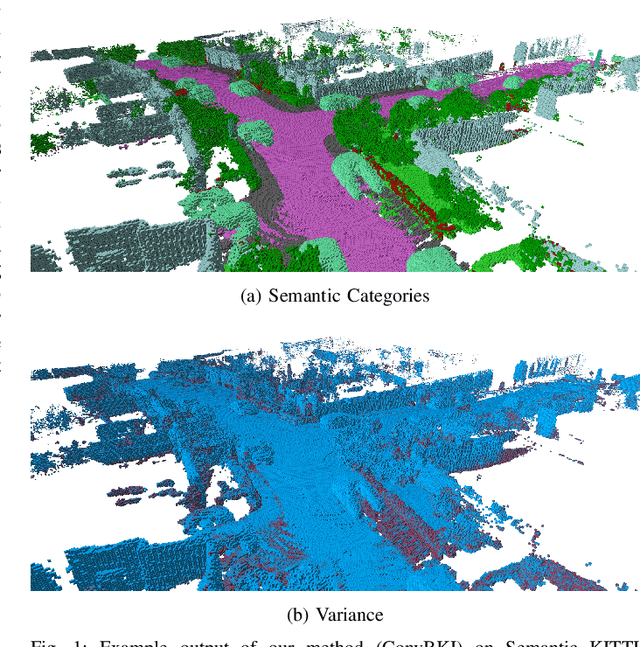

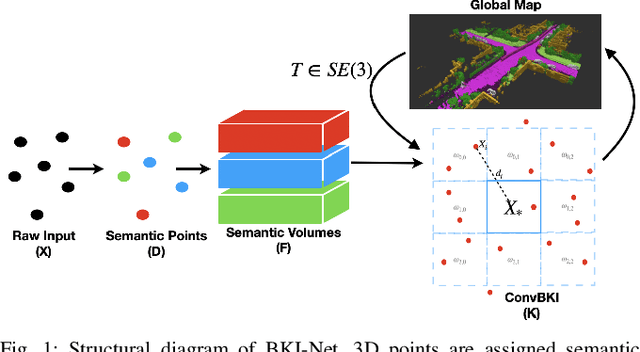

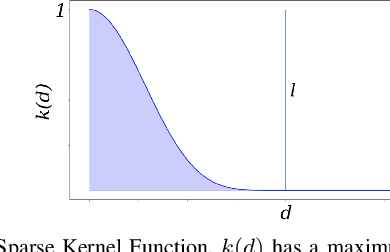

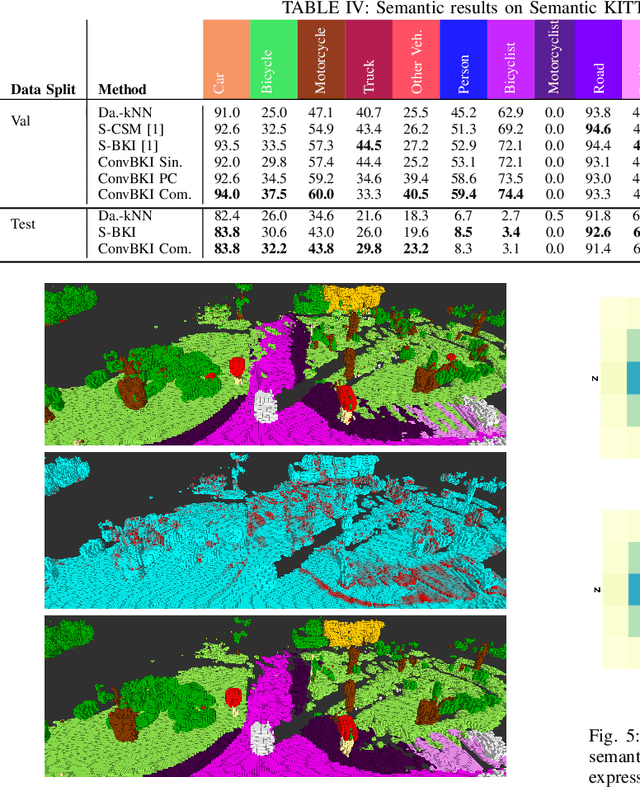

ConvBKI: Real-Time Probabilistic Semantic Mapping Network with Quantifiable Uncertainty

Oct 26, 2023

Abstract: In this paper, we develop a modular neural network for real-time semantic mapping in uncertain environments, which explicitly updates per-voxel probabilistic distributions within a neural network layer. Our approach combines the reliability of classical probabilistic algorithms with the performance and efficiency of modern neural networks. Although robotic perception is often divided between modern differentiable methods and classical explicit methods, a union of both is necessary for real-time and trustworthy performance. We introduce a novel Convolutional Bayesian Kernel Inference (ConvBKI) layer which incorporates semantic segmentation predictions online into a 3D map through a depthwise convolution layer by leveraging conjugate priors. We compare ConvBKI against state-of-the-art deep learning approaches and probabilistic algorithms for mapping to evaluate reliability and performance. We also create a Robot Operating System (ROS) package of ConvBKI and test it on real-world perceptually challenging off-road driving data.

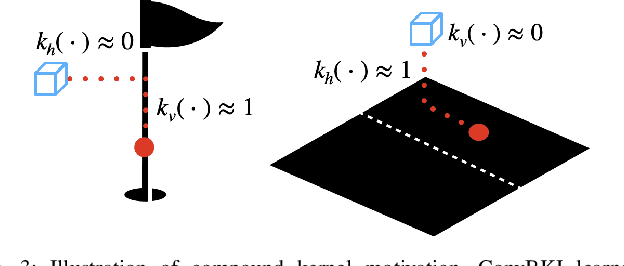

Convolutional Bayesian Kernel Inference for 3D Semantic Mapping

Sep 21, 2022

Abstract: Robotic perception is currently at a crossroads between modern methods, which operate in an efficient latent space, and classical methods, which are mathematically founded and provide interpretable, trustworthy results. In this paper, we introduce a Convolutional Bayesian Kernel Inference (ConvBKI) layer which explicitly performs Bayesian inference within a depthwise separable convolution layer to maximize efficiency while maintaining reliability. We apply our layer to the task of 3D semantic mapping, where we learn semantic-geometric probability distributions for LiDAR sensor information in real time. We evaluate our network against state-of-the-art semantic mapping algorithms on the KITTI data set, and demonstrate improved latency with comparable semantic results.

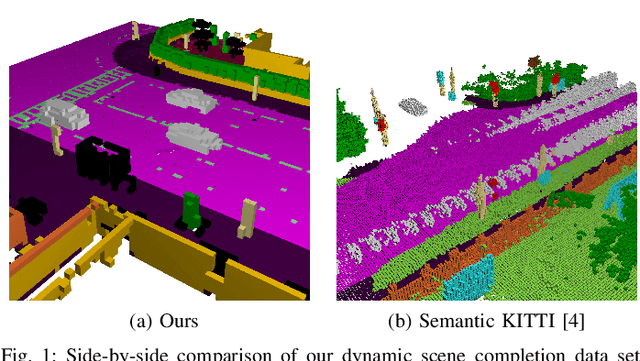

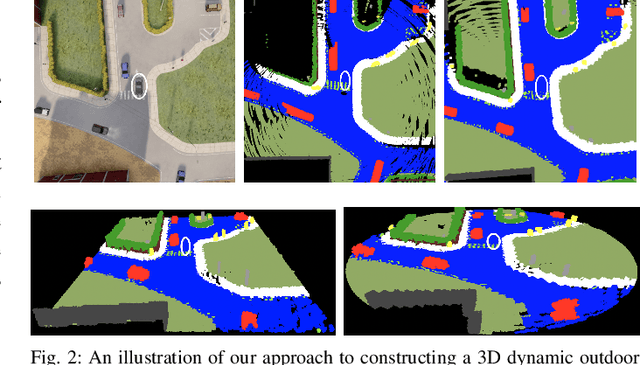

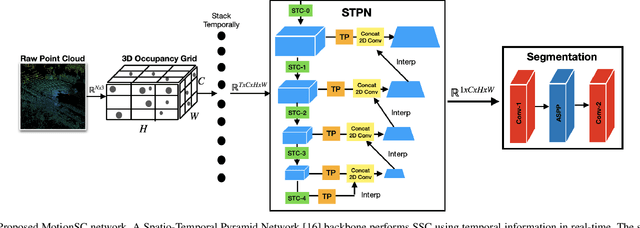

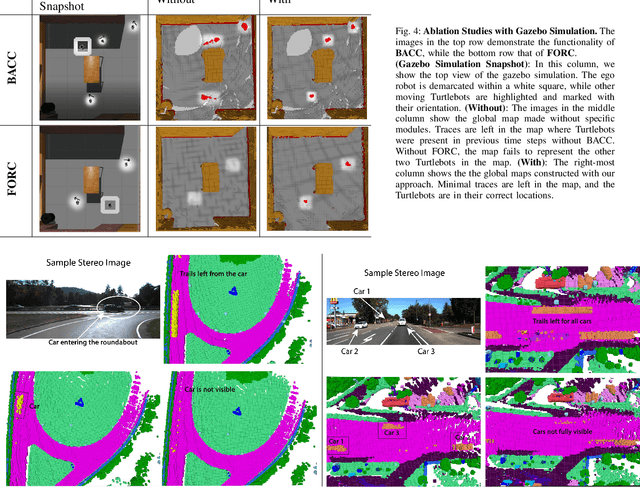

MotionSC: Data Set and Network for Real-Time Semantic Mapping in Dynamic Environments

Mar 14, 2022

Abstract: This work addresses a gap in semantic scene completion (SSC) data by creating a novel outdoor data set with accurate and complete dynamic scenes. Our data set is formed from randomly sampled views of the world at each time step, which supervises generalizability to complete scenes without occlusions or traces. We create SSC baselines from state-of-the-art open source networks and construct a benchmark real-time dense local semantic mapping algorithm, MotionSC, by leveraging recent 3D deep learning architectures to enhance SSC with temporal information. Our network shows that the proposed data set can quantify and supervise accurate scene completion in the presence of dynamic objects, which can lead to the development of improved dynamic mapping algorithms. All software is available at https://github.com/UMich-CURLY/3DMapping.

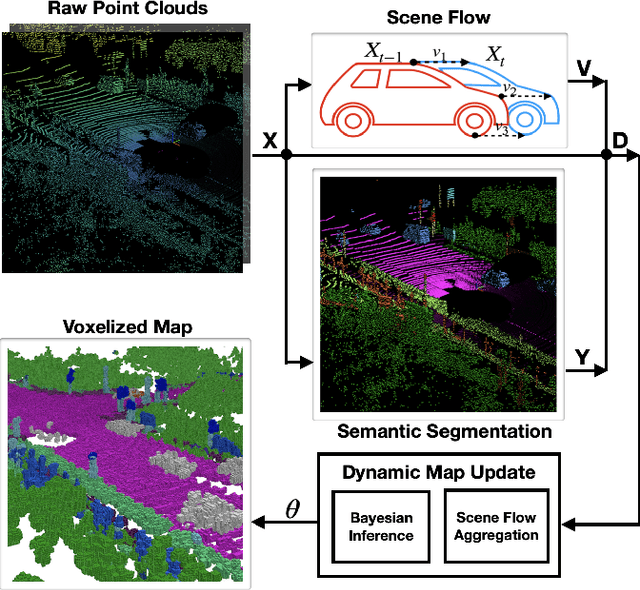

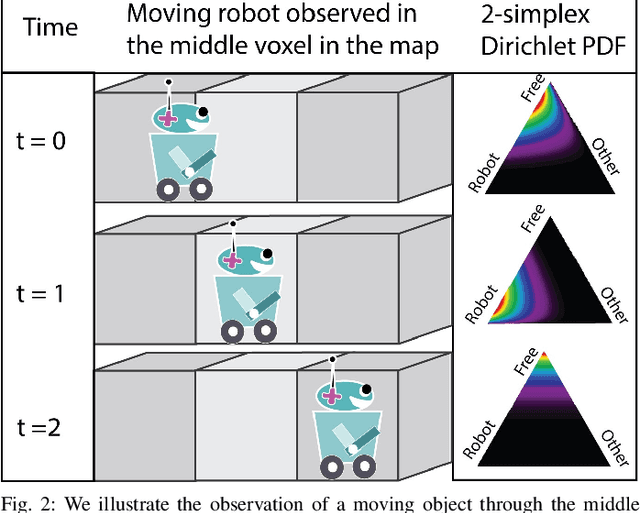

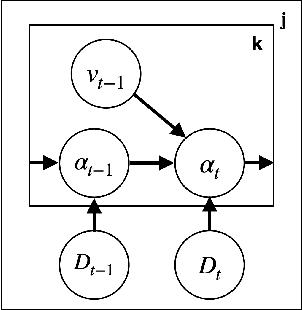

Dynamic Semantic Occupancy Mapping using 3D Scene Flow and Closed-Form Bayesian Inference

Aug 06, 2021

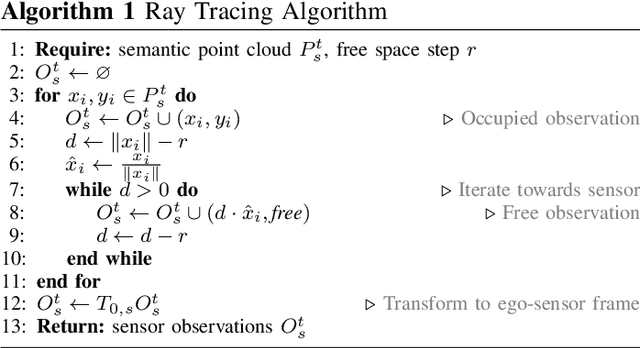

Abstract: This paper reports on a dynamic semantic mapping framework that incorporates 3D scene flow measurements into a closed-form Bayesian inference model. The existence of dynamic objects in the environment causes artifacts and traces in current mapping algorithms, leading to an inconsistent map posterior. We leverage state-of-the-art semantic segmentation and 3D flow estimation using deep learning to provide measurements for map inference. We develop a continuous (i.e., can be queried at arbitrary resolution) Bayesian model that propagates the scene with flow and infers a 3D semantic occupancy map with better performance than its static counterpart. Experimental results using publicly available data sets show that the proposed framework generalizes its predecessors and consistently improves over direct measurements from deep neural networks.
