Paramsothy Jayakumar

Variational quantum and neural quantum states algorithms for the linear complementarity problem

Apr 10, 2025

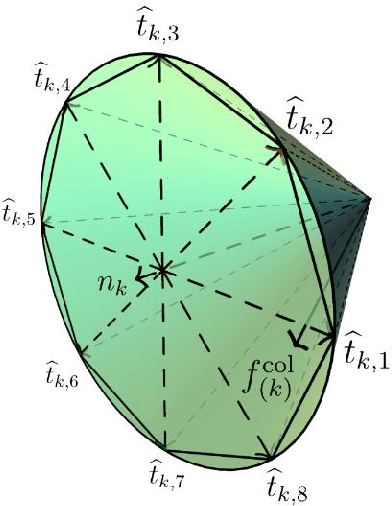

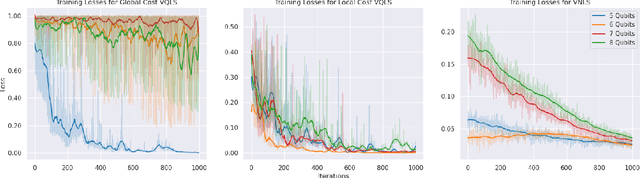

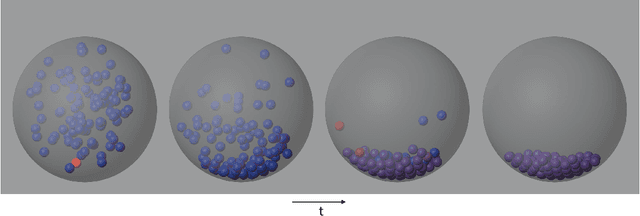

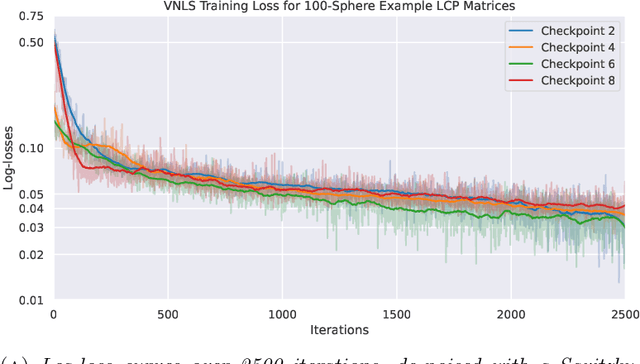

Abstract: Variational quantum algorithms (VQAs) are promising hybrid quantum-classical methods designed to leverage the computational advantages of quantum computing while mitigating the limitations of current noisy intermediate-scale quantum (NISQ) hardware. Although VQAs have been demonstrated as proofs of concept, their practical utility in solving real-world problems -- and whether quantum-inspired classical algorithms can match their performance -- remain open questions. We present a novel application of the variational quantum linear solver (VQLS) and its classical neural quantum states-based counterpart, the variational neural linear solver (VNLS), as key components within a minimum map Newton solver for a complementarity-based rigid body contact model. Using the VNLS, we demonstrate that our solver accurately simulates the dynamics of rigid spherical bodies during collision events. These results suggest that quantum and quantum-inspired linear algebra algorithms can serve as viable alternatives to standard linear algebra solvers for modeling certain physical systems.
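
To make the role of the linear solver concrete, here is a minimal sketch of a minimum map Newton iteration for a linear complementarity problem, assuming the textbook form: find x >= 0 with w = Ax + b >= 0 and x·w = 0, rewritten as H(x) = min(x, Ax + b) = 0. The abstract does not give the formulation or the implementation, so the function names, the 2x2 test problem, and the absence of a line search are all assumptions made for illustration. The dense `solve_linear` routine is a classical stand-in for the step where the paper would call VQLS or VNLS.

```python
def solve_linear(J, rhs):
    """Gaussian elimination with partial pivoting.

    Hypothetical classical stand-in for the VQLS/VNLS linear solve.
    """
    n = len(rhs)
    M = [list(J[i]) + [rhs[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def minimum_map_newton(A, b, x0, tol=1e-10, max_iter=50):
    """Nonsmooth Newton on H(x) = min(x, A x + b), full steps, no line search."""
    n = len(b)
    x = list(x0)
    for _ in range(max_iter):
        y = [sum(A[i][j] * x[j] for j in range(n)) + b[i] for i in range(n)]
        H = [min(x[i], y[i]) for i in range(n)]
        if max(abs(h) for h in H) < tol:
            break
        # Generalized Jacobian: row of A where y_i < x_i, identity row otherwise.
        J = [
            list(A[i]) if y[i] < x[i]
            else [1.0 if j == i else 0.0 for j in range(n)]
            for i in range(n)
        ]
        dx = solve_linear(J, [-h for h in H])  # the step VQLS/VNLS would replace
        x = [x[i] + dx[i] for i in range(n)]
    return x


# Assumed toy problem (not from the paper).
A = [[2.0, 1.0], [1.0, 2.0]]
b = [-1.0, 1.0]
x = minimum_map_newton(A, b, [0.0, 0.0])
w = [sum(A[i][j] * x[j] for j in range(2)) + b[i] for i in range(2)]
print(x, w)
```

Each Newton iteration needs exactly one linear solve, which is why a variational linear solver can be swapped in without changing the outer loop. A practical solver would normally add a line search for robustness.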

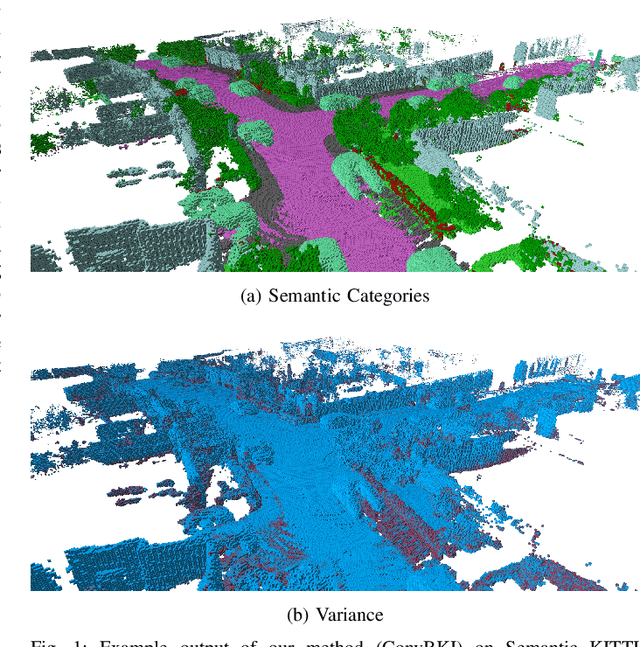

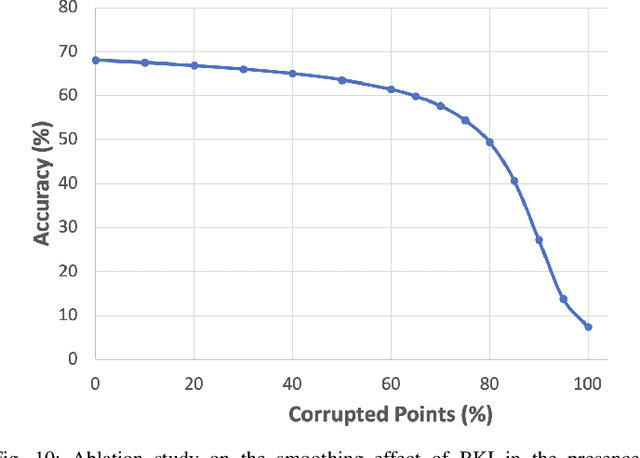

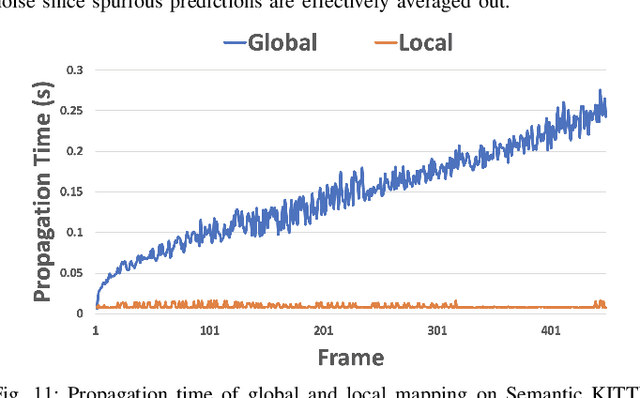

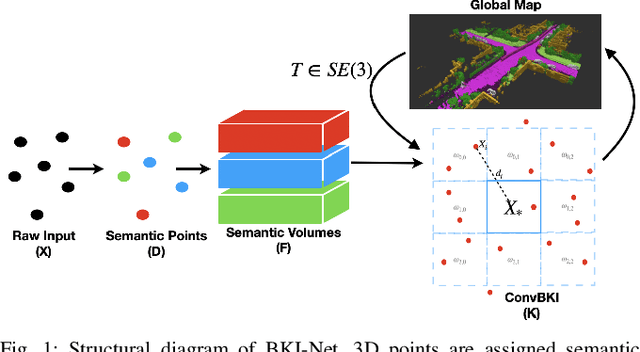

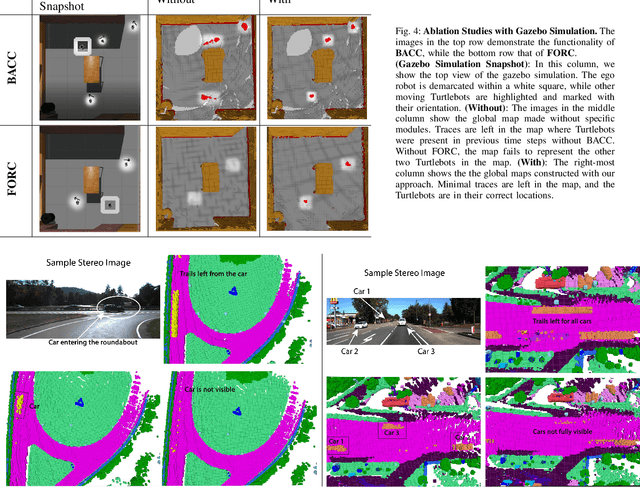

ConvBKI: Real-Time Probabilistic Semantic Mapping Network with Quantifiable Uncertainty

Oct 26, 2023

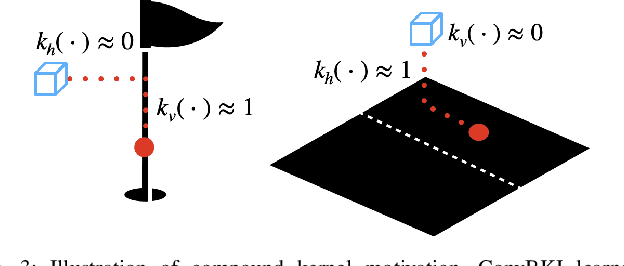

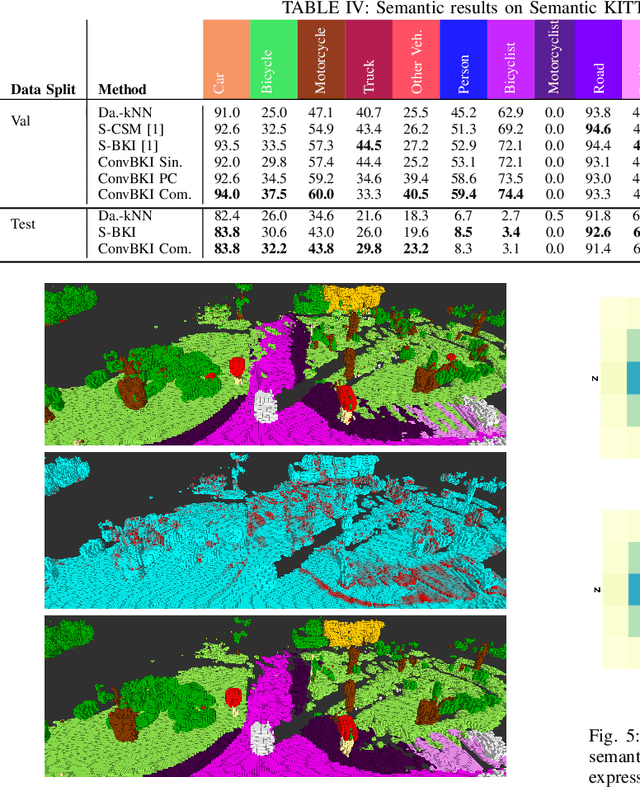

Abstract: In this paper, we develop a modular neural network for real-time semantic mapping in uncertain environments, which explicitly updates per-voxel probabilistic distributions within a neural network layer. Our approach combines the reliability of classical probabilistic algorithms with the performance and efficiency of modern neural networks. Although robotic perception is often divided between modern differentiable methods and classical explicit methods, a union of both is necessary for real-time and trustworthy performance. We introduce a novel Convolutional Bayesian Kernel Inference (ConvBKI) layer which incorporates semantic segmentation predictions online into a 3D map through a depthwise convolution layer by leveraging conjugate priors. We compare ConvBKI against state-of-the-art deep learning approaches and probabilistic algorithms for mapping to evaluate reliability and performance. We also create a Robot Operating System (ROS) package of ConvBKI and test it on real-world perceptually challenging off-road driving data.
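
The idea of a conjugate-prior update carried out by a depthwise convolution can be illustrated with a small sketch. It assumes a Dirichlet prior over categorical semantic labels (the abstract only says "conjugate priors"), a 1D voxel grid instead of 3D, and fixed rather than learned kernels. All names and numbers below are hypothetical and are not taken from the ConvBKI code.

```python
def depthwise_bki_update(alpha, counts, kernels):
    """Add kernel-weighted semantic counts to Dirichlet concentrations.

    alpha, counts: lists indexed [class][voxel]; kernels: [class][tap] (odd length).
    "Depthwise" here means each class channel is filtered by its own kernel only.
    """
    updated = []
    for a_c, n_c, k_c in zip(alpha, counts, kernels):
        half = len(k_c) // 2
        row = []
        for v in range(len(a_c)):
            s = 0.0
            for t, weight in enumerate(k_c):
                u = v + t - half  # neighbouring voxel index
                if 0 <= u < len(n_c):  # zero padding at the borders
                    s += weight * n_c[u]
            row.append(a_c[v] + s)  # conjugate update: posterior = prior + evidence
        updated.append(row)
    return updated


def expected_probs(alpha):
    """Posterior mean of the categorical distribution at each voxel."""
    probs = []
    for v in range(len(alpha[0])):
        total = sum(a_c[v] for a_c in alpha)
        probs.append([a_c[v] / total for a_c in alpha])
    return probs


# Assumed toy data: 2 classes, 5 voxels, uniform prior of 1.0 per class.
prior = [[1.0] * 5, [1.0] * 5]
counts = [[0, 0, 2, 0, 0],   # class 0 predictions per voxel
          [0, 0, 0, 0, 1]]   # class 1 predictions per voxel
kernels = [[0.5, 1.0, 0.5], [0.5, 1.0, 0.5]]

posterior = depthwise_bki_update(prior, counts, kernels)
probs = expected_probs(posterior)
print(posterior)
print(probs[2])
```

Because the update is purely additive, the per-voxel concentrations remain interpretable as pseudo-counts, which also gives a simple uncertainty measure (a small total concentration means little evidence).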

Convolutional Bayesian Kernel Inference for 3D Semantic Mapping

Sep 21, 2022

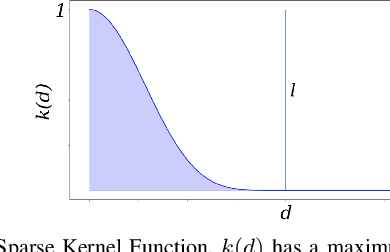

Abstract: Robotic perception is currently at a crossroads between modern methods, which operate in an efficient latent space, and classical methods, which are mathematically founded and provide interpretable, trustworthy results. In this paper, we introduce a Convolutional Bayesian Kernel Inference (ConvBKI) layer which explicitly performs Bayesian inference within a depthwise separable convolution layer to maximize efficiency while maintaining reliability. We apply our layer to the task of 3D semantic mapping, where we learn semantic-geometric probability distributions for LiDAR sensor information in real time. We evaluate our network against state-of-the-art semantic mapping algorithms on the KITTI data set, and demonstrate improved latency with comparable semantic results.

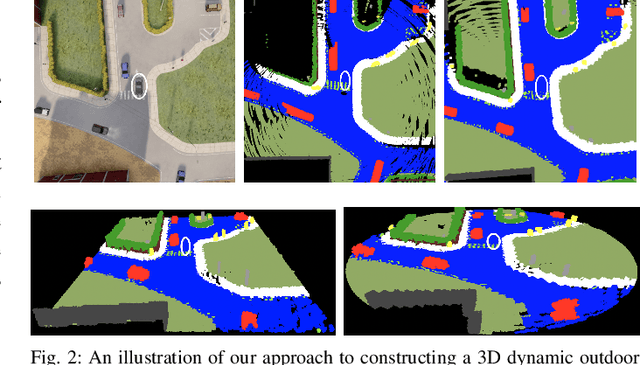

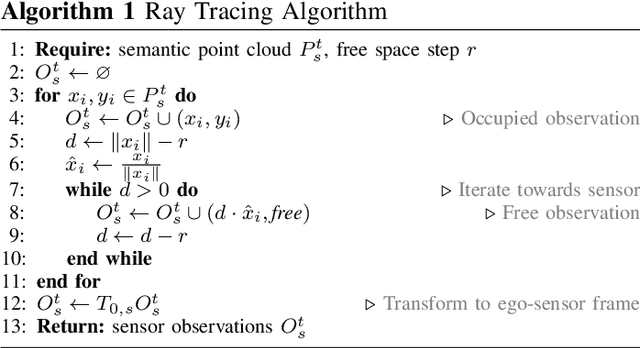

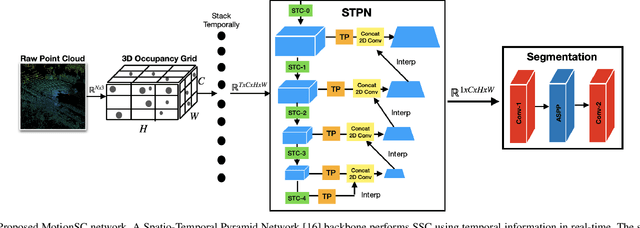

MotionSC: Data Set and Network for Real-Time Semantic Mapping in Dynamic Environments

Mar 14, 2022

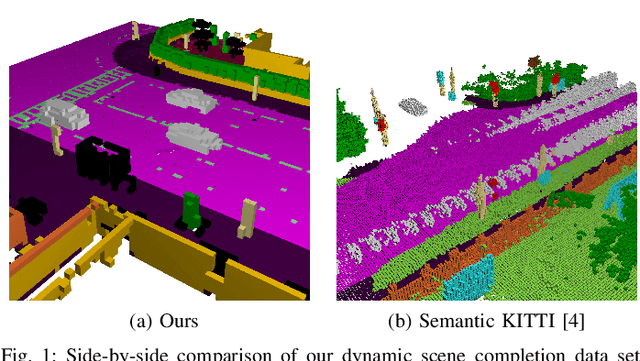

Abstract: This work addresses a gap in semantic scene completion (SSC) data by creating a novel outdoor data set with accurate and complete dynamic scenes. Our data set is formed from randomly sampled views of the world at each time step, which supervises generalizability to complete scenes without occlusions or traces. We create SSC baselines from state-of-the-art open-source networks and construct a benchmark real-time dense local semantic mapping algorithm, MotionSC, by leveraging recent 3D deep learning architectures to enhance SSC with temporal information. Our network shows that the proposed data set can quantify and supervise accurate scene completion in the presence of dynamic objects, which can lead to the development of improved dynamic mapping algorithms. All software is available at https://github.com/UMich-CURLY/3DMapping.

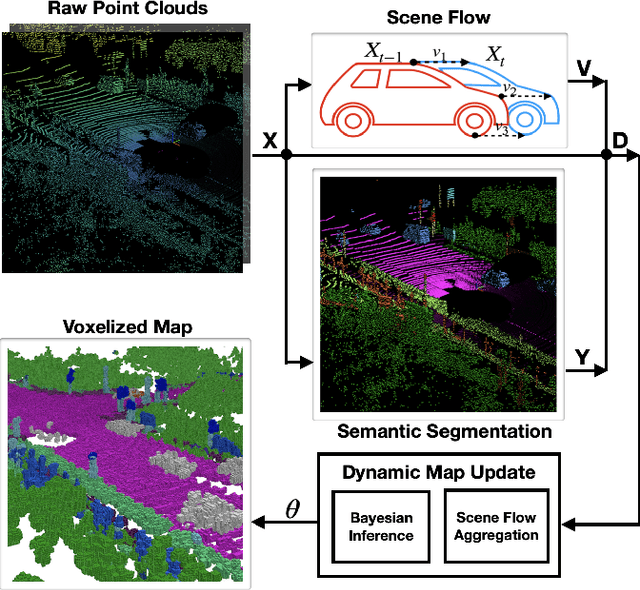

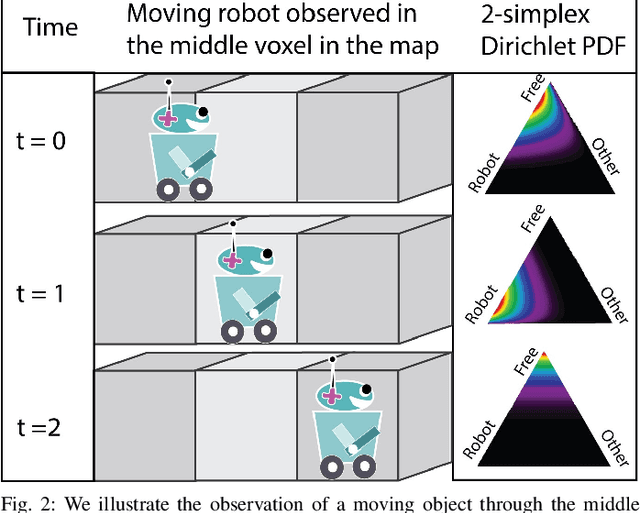

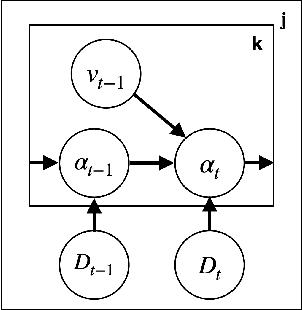

Dynamic Semantic Occupancy Mapping using 3D Scene Flow and Closed-Form Bayesian Inference

Aug 06, 2021

Abstract: This paper reports on a dynamic semantic mapping framework that incorporates 3D scene flow measurements into a closed-form Bayesian inference model. The existence of dynamic objects in the environment causes artifacts and traces in current mapping algorithms, leading to an inconsistent map posterior. We leverage state-of-the-art semantic segmentation and 3D flow estimation using deep learning to provide measurements for map inference. We develop a continuous (i.e., can be queried at arbitrary resolution) Bayesian model that propagates the scene with flow and infers a 3D semantic occupancy map with better performance than its static counterpart. Experimental results using publicly available data sets show that the proposed framework generalizes its predecessors and consistently improves over direct measurements from deep neural networks.

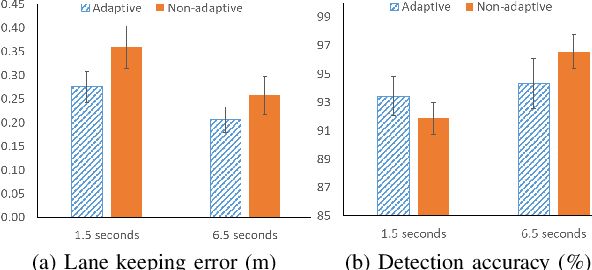

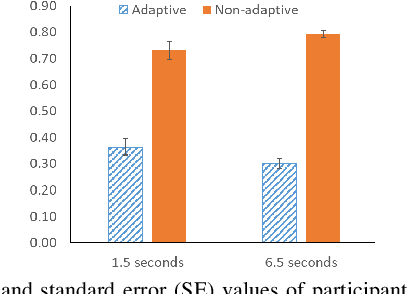

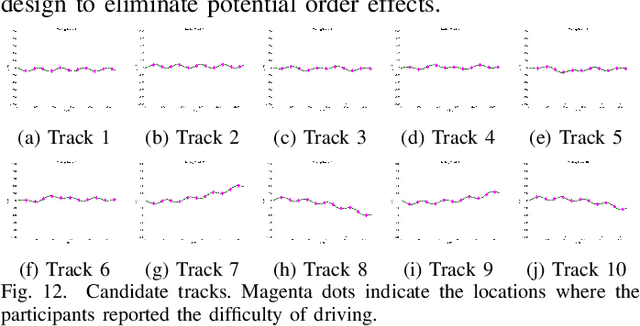

A Workload Adaptive Haptic Shared Control Scheme for Semi-Autonomous Driving

Mar 31, 2020

Abstract: Haptic shared control is used to manage the control authority allocation between a human and an autonomous agent in semi-autonomous driving. Existing haptic shared control schemes, however, do not take full consideration of the human agent. To fill this research gap, this study presents a haptic shared control scheme that adapts to a human operator's workload, eyes on road, and input torque in real time. We conducted human-in-the-loop experiments with 24 participants. In the experiment, a human operator and an autonomy module for navigation shared the control of a simulated notional High Mobility Multipurpose Wheeled Vehicle (HMMWV) at a fixed speed. At the same time, the human operator performed a target detection task for surveillance. The autonomy could be either adaptive or non-adaptive to the above-mentioned human factors. Results indicate that the adaptive haptic control scheme resulted in significantly lower workload, higher trust in autonomy, better driving task performance, and smaller control effort.

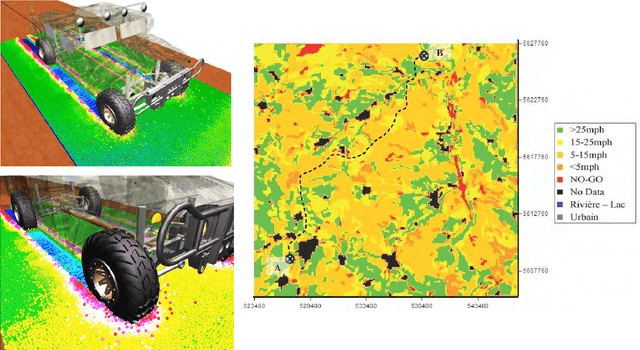

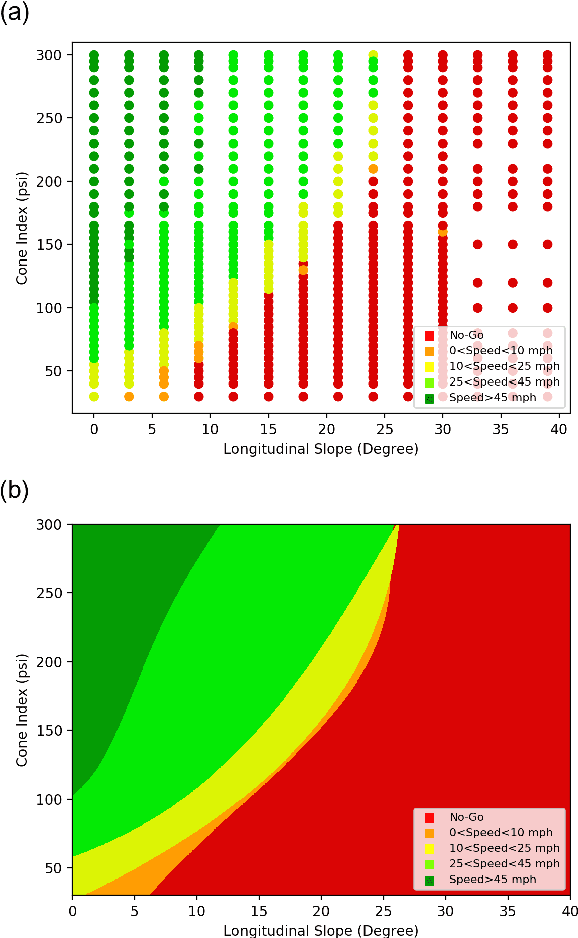

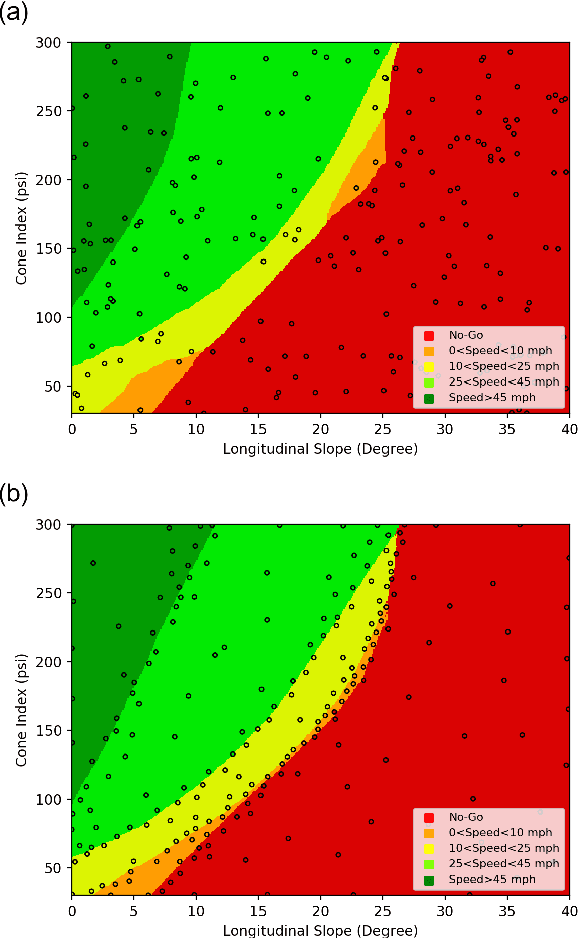

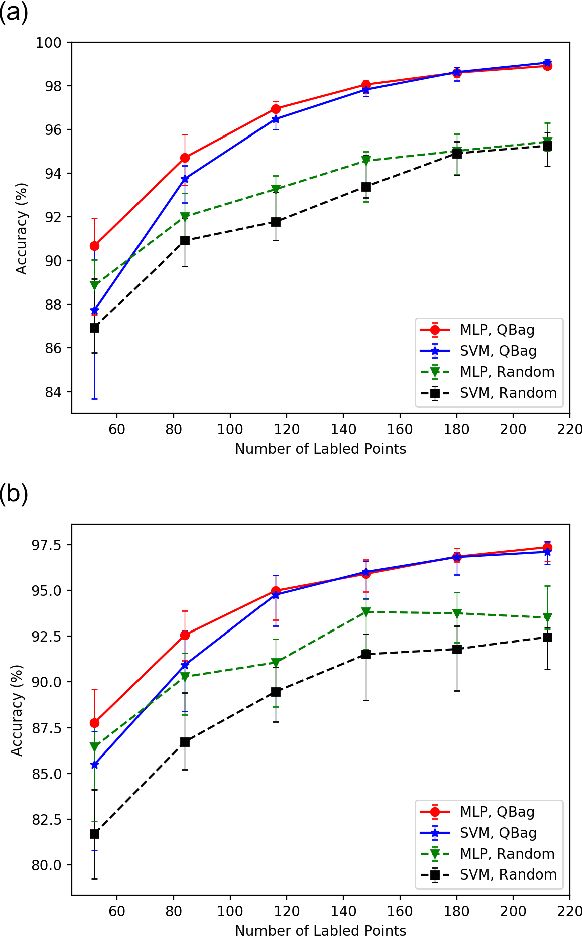

An Active Learning Framework for Constructing High-fidelity Mobility Maps

Mar 07, 2020

Abstract: A mobility map, which provides maximum achievable speed on a given terrain, is essential for path planning of autonomous ground vehicles in off-road settings. While physics-based simulations play a central role in creating next-generation, high-fidelity mobility maps, they are cumbersome and expensive. For instance, a typical simulation can take weeks to run on a supercomputer and each map requires thousands of such simulations. Recent work at the U.S. Army CCDC Ground Vehicle Systems Center has shown that trained machine learning classifiers can greatly improve the efficiency of this process. However, deciding which simulations to run in order to train the classifier efficiently is still an open problem. According to PAC learning theory, data that can be separated by a classifier is expected to require $\mathcal{O}(1/\epsilon)$ randomly selected points (simulations) to train the classifier with error less than $\epsilon$. In this paper, building on existing algorithms, we introduce an active learning paradigm that substantially reduces the number of simulations needed to train a machine learning classifier without sacrificing accuracy. Experimental results suggest that our sampling algorithm can train a neural network, with higher accuracy, using fewer than half the number of simulations when compared to random sampling.
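
The gap between random and actively chosen queries can be seen in the simplest separable case, a 1D threshold classifier. The sketch below is not the paper's sampling algorithm; it is a standard binary-search illustration in which a hypothetical `oracle` stands in for one expensive simulation, and the threshold value 0.37 is made up. Choosing each query at the midpoint of the remaining uncertainty interval locates the decision boundary to within $\epsilon$ using about $\log_2(1/\epsilon)$ labels, compared with the $\mathcal{O}(1/\epsilon)$ random labels cited above.

```python
def active_threshold_search(oracle, eps, lo=0.0, hi=1.0):
    """Binary-search active learning of a 1D threshold classifier.

    oracle(x) returns True when x is at or above the unknown boundary.
    Returns (boundary estimate, number of oracle calls).
    """
    queries = 0
    while hi - lo > eps:
        mid = (lo + hi) / 2.0
        queries += 1          # each call models one expensive simulation
        if oracle(mid):
            hi = mid          # boundary is at or below mid
        else:
            lo = mid          # boundary is above mid
    return (lo + hi) / 2.0, queries


# Hypothetical ground truth: terrain parameter above 0.37 is traversable.
TRUE_THRESHOLD = 0.37


def oracle(x):
    return x >= TRUE_THRESHOLD


estimate, n_queries = active_threshold_search(oracle, eps=0.01)
print(estimate, n_queries)
```

Real mobility maps are high-dimensional and the boundary is not a single threshold, so the logarithmic rate does not carry over directly; the example only shows why informed query selection can need far fewer simulations than random sampling.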
