Kira Barton

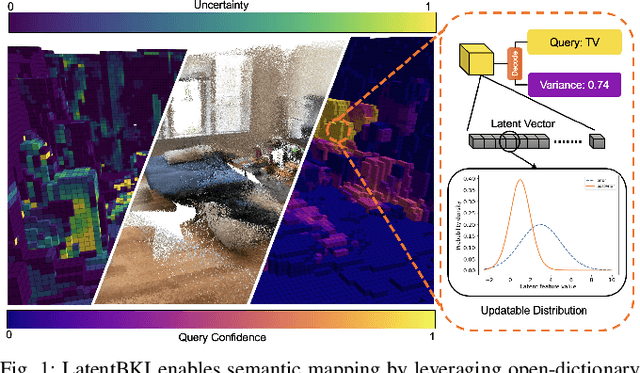

Latent BKI: Open-Dictionary Continuous Mapping in Visual-Language Latent Spaces with Quantifiable Uncertainty

Oct 15, 2024

Abstract: This paper introduces a novel probabilistic mapping algorithm, Latent BKI, which enables open-vocabulary mapping with quantifiable uncertainty. Traditionally, semantic mapping algorithms focus on a fixed set of semantic categories, which limits their applicability for complex robotic tasks. Vision-Language (VL) models have recently emerged as a technique to jointly model language and visual features in a latent space, enabling semantic recognition beyond a predefined, fixed set of semantic classes. Latent BKI recurrently incorporates neural embeddings from VL models into a voxel map with quantifiable uncertainty, leveraging the spatial correlations of nearby observations through Bayesian Kernel Inference (BKI). Latent BKI is evaluated against similar explicit semantic mapping and VL mapping frameworks on the popular MatterPort-3D and Semantic KITTI data sets, demonstrating that Latent BKI maintains the probabilistic benefits of continuous mapping with the additional benefit of open-dictionary queries. Real-world experiments demonstrate applicability to challenging indoor environments.

MDMP: Multi-modal Diffusion for supervised Motion Predictions with uncertainty

Oct 04, 2024

Abstract: This paper introduces a Multi-modal Diffusion model for Motion Prediction (MDMP) that integrates and synchronizes skeletal data and textual descriptions of actions to generate refined long-term motion predictions with quantifiable uncertainty. Existing methods for motion forecasting or motion generation rely solely on either prior motions or text prompts, facing limitations with precision or control, particularly over extended durations. The multi-modal nature of our approach enhances the contextual understanding of human motion, while our graph-based transformer framework effectively captures both spatial and temporal motion dynamics. As a result, our model consistently outperforms existing generative techniques in accurately predicting long-term motions. Additionally, by leveraging diffusion models' ability to capture different modes of prediction, we estimate uncertainty, significantly improving spatial awareness in human-robot interactions by incorporating zones of presence with varying confidence levels for each body joint.

Sequential Manipulation of Deformable Linear Object Networks with Endpoint Pose Measurements using Adaptive Model Predictive Control

Feb 15, 2024

Abstract: Robotic manipulation of deformable linear objects (DLOs) is an active area of research, though emerging applications, like automotive wire harness installation, introduce constraints that have not been considered in prior work. Confined workspaces and limited visibility complicate prior assumptions of multi-robot manipulation and direct measurement of DLO configuration (state). This work focuses on single-arm manipulation of stiff DLOs (StDLOs) connected to form a DLO network (DLON), for which the measurements (output) are the endpoint poses of the DLON, which are subject to unknown dynamics during manipulation. To demonstrate feasibility of output-based control without state estimation, direct input-output dynamics are shown to exist by training neural network models on simulated trajectories. Output dynamics are then approximated with polynomials and found to contain well-known rigid body dynamics terms. A composite model consisting of a rigid body model and an online data-driven residual is developed, which predicts output dynamics more accurately than either model alone, and without prior experience with the system. An adaptive model predictive controller is developed with the composite model for DLON manipulation, which completes DLON installation tasks, both in simulation and with a physical automotive wire harness.

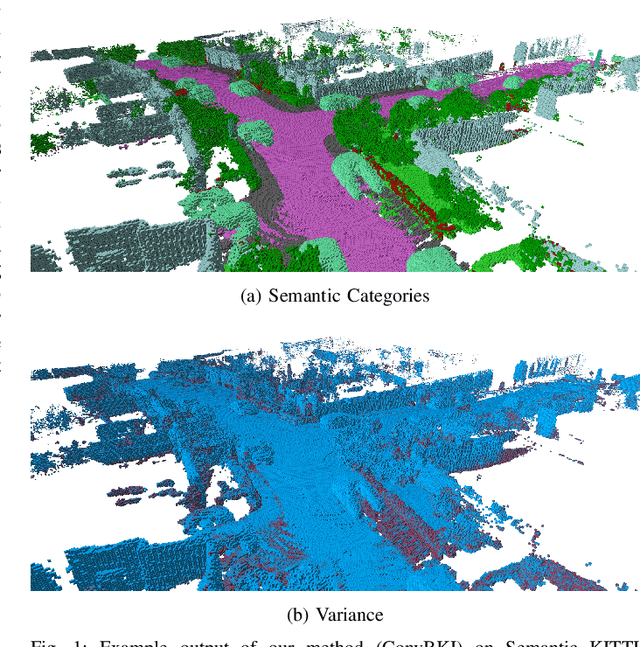

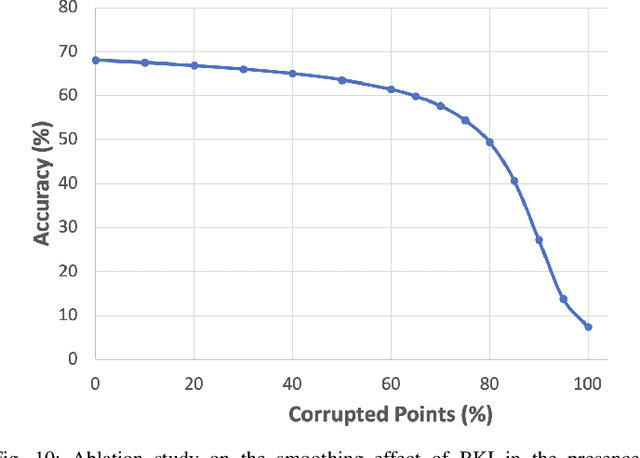

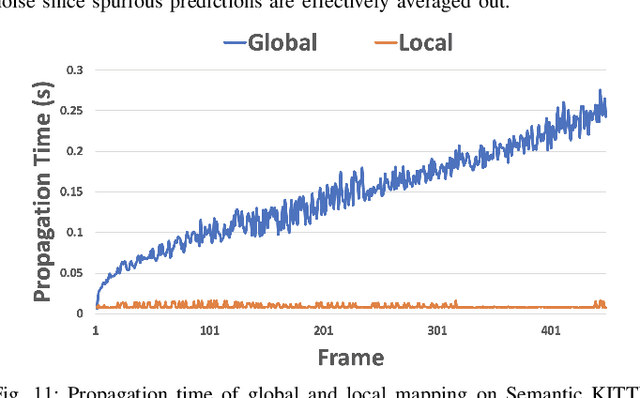

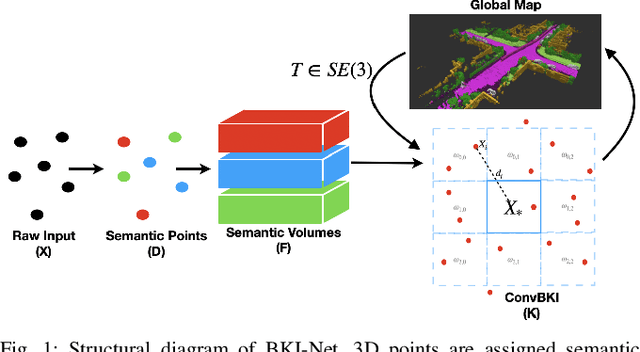

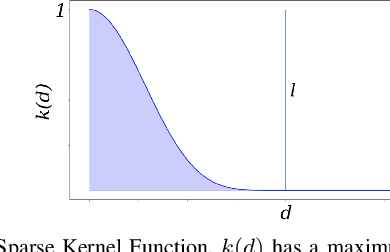

ConvBKI: Real-Time Probabilistic Semantic Mapping Network with Quantifiable Uncertainty

Oct 26, 2023

Abstract: In this paper, we develop a modular neural network for real-time semantic mapping in uncertain environments, which explicitly updates per-voxel probabilistic distributions within a neural network layer. Our approach combines the reliability of classical probabilistic algorithms with the performance and efficiency of modern neural networks. Although robotic perception is often divided between modern differentiable methods and classical explicit methods, a union of both is necessary for real-time and trustworthy performance. We introduce a novel Convolutional Bayesian Kernel Inference (ConvBKI) layer which incorporates semantic segmentation predictions online into a 3D map through a depthwise convolution layer by leveraging conjugate priors. We compare ConvBKI against state-of-the-art deep learning approaches and probabilistic algorithms for mapping to evaluate reliability and performance. We also create a Robot Operating System (ROS) package of ConvBKI and test it on real-world perceptually challenging off-road driving data.
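The conjugate-prior idea mentioned in this abstract can be illustrated with a minimal NumPy sketch. It is not the ConvBKI layer itself: it assumes a per-voxel Dirichlet distribution over classes, a hand-picked compactly supported kernel, and a dense distance computation instead of a learned depthwise convolution. The function names (`sparse_kernel`, `bki_update`, `dirichlet_stats`) and all parameter values are hypothetical.

```python
import numpy as np

def sparse_kernel(d, length=0.5, sigma0=1.0):
    """Compactly supported kernel (assumed form): sigma0 at d=0, zero for d >= length."""
    r = np.clip(d / length, 0.0, 1.0)
    k = sigma0 * ((2.0 + np.cos(2.0 * np.pi * r)) * (1.0 - r) / 3.0
                  + np.sin(2.0 * np.pi * r) / (2.0 * np.pi))
    return np.where(d < length, k, 0.0)

def bki_update(alpha, voxel_centers, points, point_probs, length=0.5):
    """Add kernel-weighted semantic evidence to per-voxel Dirichlet parameters.

    alpha:         (V, C) Dirichlet concentration parameters (the map state)
    voxel_centers: (V, 3) voxel center coordinates
    points:        (N, 3) observed 3D points
    point_probs:   (N, C) per-point class predictions (one-hot or soft)
    """
    # Pairwise voxel-to-point distances, shape (V, N).
    d = np.linalg.norm(voxel_centers[:, None, :] - points[None, :, :], axis=-1)
    w = sparse_kernel(d, length)
    # Conjugate update: the posterior stays Dirichlet, so evidence is simply added.
    return alpha + w @ point_probs

def dirichlet_stats(alpha):
    """Expected class probabilities and per-class variance of each voxel's Dirichlet."""
    a0 = alpha.sum(axis=1, keepdims=True)
    mean = alpha / a0
    var = alpha * (a0 - alpha) / (a0 ** 2 * (a0 + 1.0))
    return mean, var

# Two voxels, three classes, weak uniform prior (illustrative values only).
prior = np.full((2, 3), 0.01)
centers = np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])
pts = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0]])
labels = np.array([[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]])

posterior = bki_update(prior, centers, pts, labels)
mean, var = dirichlet_stats(posterior)
```

In this toy setup the voxel near the observations accumulates evidence for class 1 and its variance shrinks, while the distant voxel keeps its prior and therefore its high variance, which is the sense in which the uncertainty is quantifiable.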

Human-robot Matching and Routing for Multi-robot Tour Guiding under Time Uncertainty

Sep 27, 2023

Abstract: This work presents a framework for multi-robot tour guidance in a partially known environment with uncertainty, such as a museum. A simultaneous matching and routing problem (SMRP) is formulated to match the humans with robot guides according to their requested places of interest (POIs) and generate the routes for the robots according to uncertain time estimation. A large neighborhood search algorithm is developed to efficiently find sub-optimal low-cost solutions for the SMRP. The scalability and optimality of the multi-robot planner are evaluated computationally. The largest case tested involves 50 robots, 250 humans, and 50 POIs. A photo-realistic multi-robot simulation was developed to verify the tour guiding performance in an uncertain indoor environment.

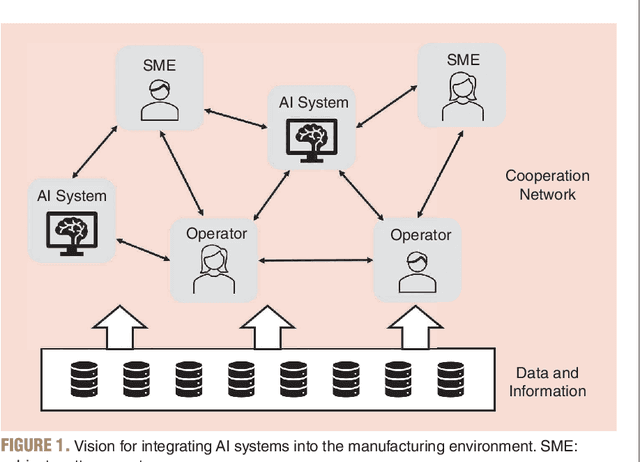

Opportunities and Challenges to Integrate Artificial Intelligence into Manufacturing Systems: Thoughts from a Panel Discussion

Mar 20, 2023

Abstract: Rapid advances in artificial intelligence (AI) have the potential to significantly increase the productivity, quality, and profitability in future manufacturing systems. Traditional mass-production will give way to personalized production, with each item made to order, at the low cost and high quality consumers have come to expect. Manufacturing systems will have the intelligence to be resilient to multiple disruptions, from small-scale machine breakdowns to large-scale natural disasters. Products will be made with higher precision and lower variability. While gains have been made towards the development of these factories of the future, many challenges remain to fully realize this vision. To consider the challenges and opportunities associated with this topic, a panel of experts from Industry, Academia, and Government was invited to participate in an active discussion at the 2022 Modeling, Estimation and Control Conference (MECC) held in Jersey City, New Jersey from October 3-5, 2022. The panel discussion focused on the challenges and opportunities to more fully integrate AI into manufacturing systems. Three overarching themes emerged from the panel discussion. First, to be successful, AI will need to work seamlessly and in an integrated manner with humans (and vice versa). Second, significant gaps in the infrastructure needed to realize the full potential of AI in the manufacturing ecosystem, including sufficient data availability, storage, and analysis, must be addressed. And finally, improved coordination between universities, industry, and government agencies can facilitate greater opportunities to push the field forward. This article briefly summarizes these three themes and concludes with a discussion of promising directions.

Learning Task Requirements and Agent Capabilities for Multi-agent Task Allocation

Nov 08, 2022

Abstract: This paper presents a learning framework to estimate an agent capability and task requirement model for multi-agent task allocation. With a set of team configurations and the corresponding task performances as the training data, linear task constraints can be learned and embedded in many existing optimization-based task allocation frameworks. Comprehensive computational evaluations are conducted to test the scalability and prediction accuracy of the learning framework with a limited number of team configurations and performance pairs. A ROS and Gazebo-based simulation environment is developed to validate the proposed requirements learning and task allocation framework in practical multi-agent exploration and manipulation tasks. Results show that the learning process for scenarios with 40 tasks and 6 types of agents takes around 12 seconds and yields prediction errors in the range of 0.5-2%.

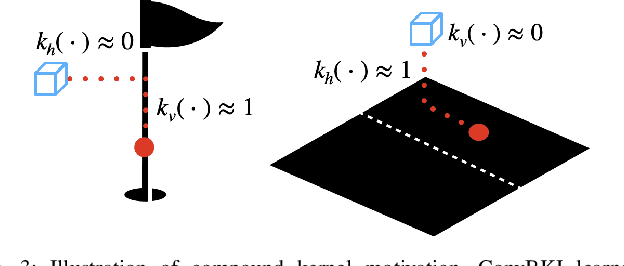

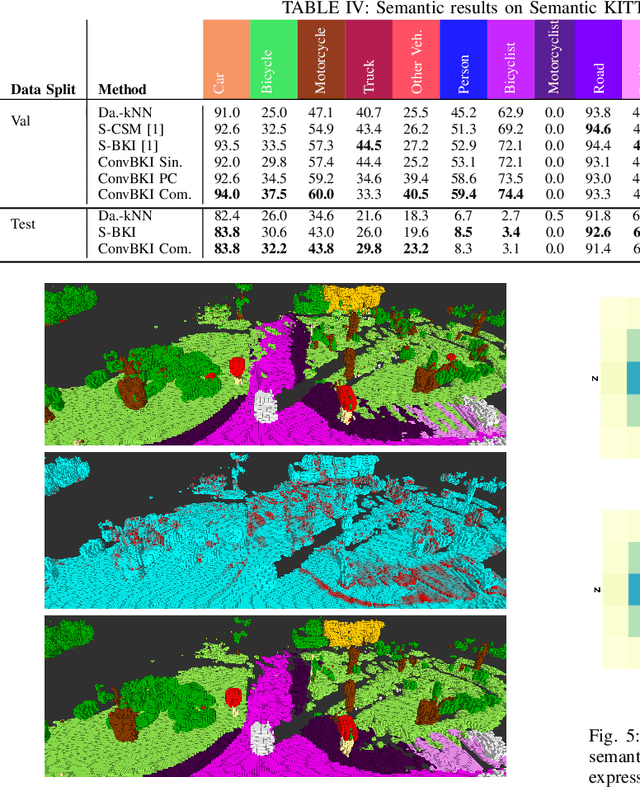

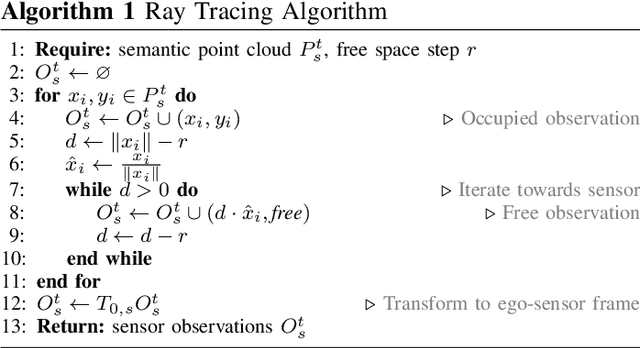

Convolutional Bayesian Kernel Inference for 3D Semantic Mapping

Sep 21, 2022

Abstract: Robotic perception is currently at a crossroads between modern methods, which operate in an efficient latent space, and classical methods, which are mathematically founded and provide interpretable, trustworthy results. In this paper, we introduce a Convolutional Bayesian Kernel Inference (ConvBKI) layer which explicitly performs Bayesian inference within a depthwise separable convolution layer to maximize efficiency while maintaining reliability. We apply our layer to the task of 3D semantic mapping, where we learn semantic-geometric probability distributions for LiDAR sensor information in real time. We evaluate our network against state-of-the-art semantic mapping algorithms on the KITTI data set, and demonstrate improved latency with comparable semantic results.

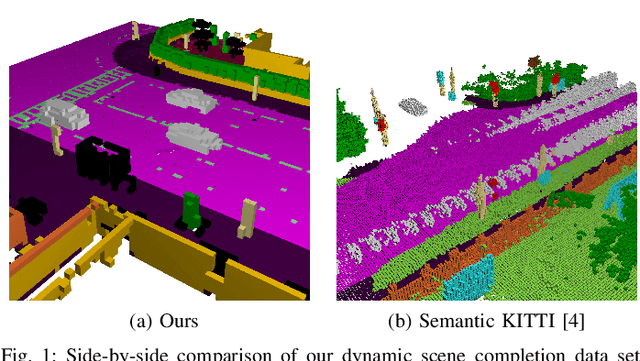

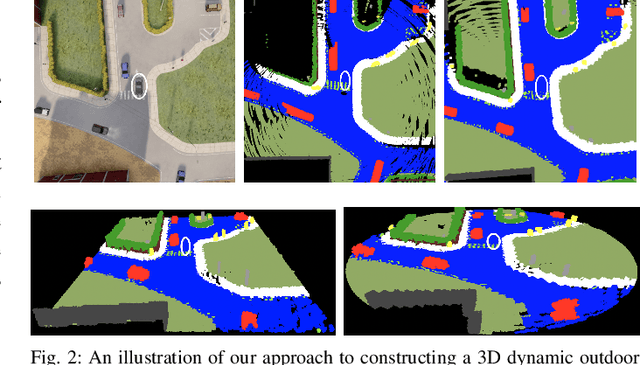

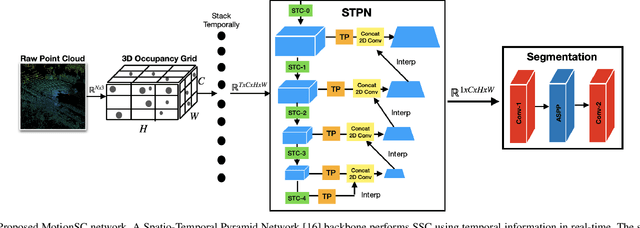

MotionSC: Data Set and Network for Real-Time Semantic Mapping in Dynamic Environments

Mar 14, 2022

Abstract: This work addresses a gap in semantic scene completion (SSC) data by creating a novel outdoor data set with accurate and complete dynamic scenes. Our data set is formed from randomly sampled views of the world at each time step, which supervises generalizability to complete scenes without occlusions or traces. We create SSC baselines from state-of-the-art open source networks and construct a benchmark real-time dense local semantic mapping algorithm, MotionSC, by leveraging recent 3D deep learning architectures to enhance SSC with temporal information. Our network shows that the proposed data set can quantify and supervise accurate scene completion in the presence of dynamic objects, which can lead to the development of improved dynamic mapping algorithms. All software is available at https://github.com/UMich-CURLY/3DMapping.

Simultaneous Human-robot Matching and Routing for Multi-robot Tour Guiding under Time Uncertainty

Jan 25, 2022

Abstract: This work presents a framework for multi-robot tour guidance in a partially known environment with uncertainty, such as a museum. In the proposed centralized multi-robot planner, a simultaneous matching and routing problem (SMRP) is formulated to match the humans with robot guides according to their selected places of interest (POIs) and generate the routes and schedules for the robots according to uncertain spatial and time estimation. A large neighborhood search algorithm is developed to efficiently find sub-optimal low-cost solutions for the SMRP. The scalability and optimality of the multi-robot planner are evaluated computationally under different numbers of humans, robots, and POIs. The largest case tested involves 50 robots, 250 humans, and 50 POIs. Then, a photo-realistic multi-robot simulation platform was developed based on Habitat-AI to verify the tour guiding performance in an uncertain indoor environment. Results demonstrate that the proposed centralized tour planner is scalable, makes a smooth trade-off in the plans under different environmental constraints, and can lead to robust performance with inaccurate uncertainty estimations (within a certain margin).
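For readers unfamiliar with large neighborhood search, the destroy-and-repair loop it relies on can be sketched on a toy problem. The example below is not the SMRP formulation from the abstract: it assumes a single closed tour over 2D points of interest with Euclidean travel cost, random removal as the destroy step, and cheapest insertion as the repair step. All names and parameter values are hypothetical.

```python
import math
import random

def tour_cost(tour, pts):
    """Length of the closed tour visiting pts in the given order."""
    return sum(math.dist(pts[tour[i]], pts[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))

def greedy_insert(partial, removed, pts):
    """Repair step: reinsert each removed node at its cheapest position."""
    tour = list(partial)
    for node in removed:
        best_pos, best_cost = 0, float("inf")
        for pos in range(len(tour) + 1):
            cost = tour_cost(tour[:pos] + [node] + tour[pos:], pts)
            if cost < best_cost:
                best_pos, best_cost = pos, cost
        tour.insert(best_pos, node)
    return tour

def lns(pts, iters=200, k=3, seed=0):
    """Large neighborhood search: destroy k random nodes, repair, keep improvements."""
    rng = random.Random(seed)
    best = list(range(len(pts)))
    best_cost = tour_cost(best, pts)
    for _ in range(iters):
        removed = rng.sample(best, k)                     # destroy
        partial = [n for n in best if n not in removed]
        cand = greedy_insert(partial, removed, pts)       # repair
        cand_cost = tour_cost(cand, pts)
        if cand_cost < best_cost:                         # accept only improvements
            best, best_cost = cand, cand_cost
    return best, best_cost

# Ten random points of interest in the unit square (illustrative data only).
point_rng = random.Random(1)
pois = [(point_rng.random(), point_rng.random()) for _ in range(10)]
initial_cost = tour_cost(list(range(len(pois))), pois)
best_tour, best_tour_cost = lns(pois)
```

Because only improving candidates are accepted, the returned tour is never worse than the initial ordering, but it is not guaranteed to be optimal, which matches the "sub-optimal low-cost solutions" wording in the abstract.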
