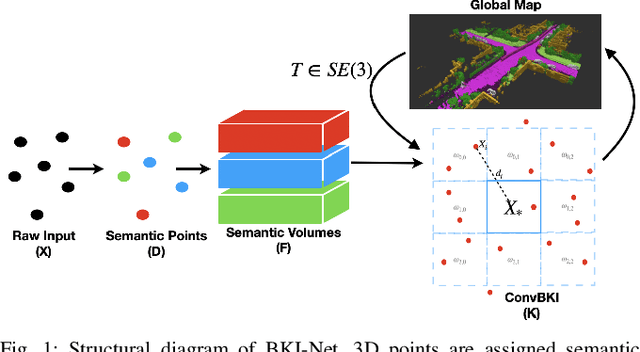

Convolutional Bayesian Kernel Inference for 3D Semantic Mapping

Paper and Code

Sep 21, 2022

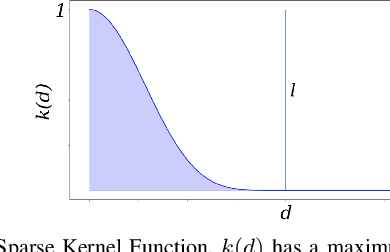

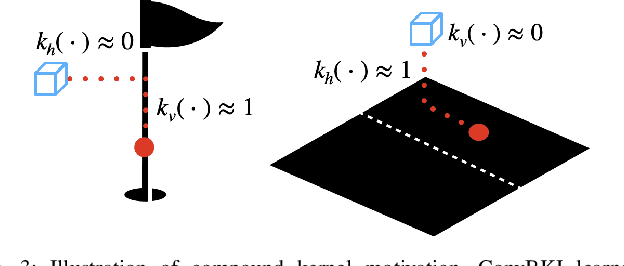

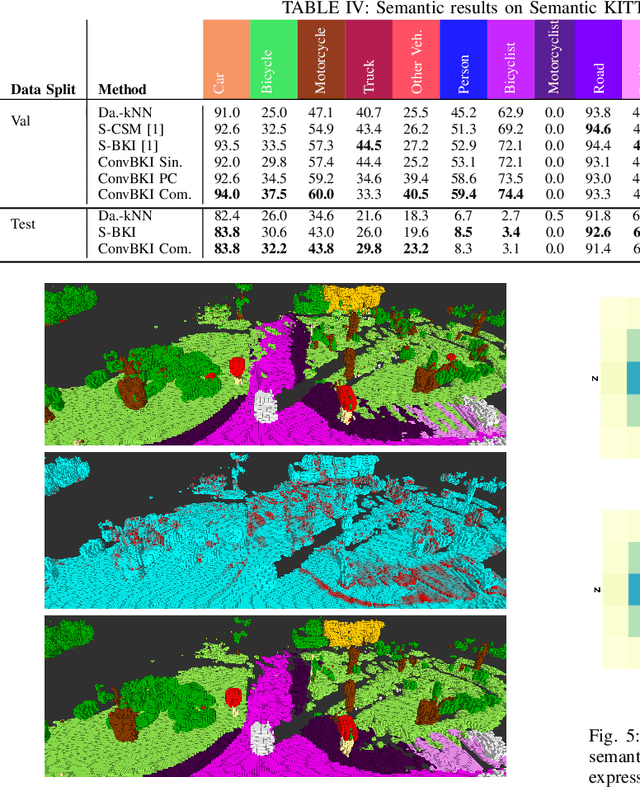

Robotic perception is currently at a cross-roads between modern methods which operate in an efficient latent space, and classical methods which are mathematically founded and provide interpretable, trustworthy results. In this paper, we introduce a Convolutional Bayesian Kernel Inference (ConvBKI) layer which explicitly performs Bayesian inference within a depthwise separable convolution layer to simultaneously maximize efficiency while maintaining reliability. We apply our layer to the task of 3D semantic mapping, where we learn semantic-geometric probability distributions for LiDAR sensor information in real time. We evaluate our network against state-of-the-art semantic mapping algorithms on the KITTI data set, and demonstrate improved latency with comparable semantic results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge