Brahim Tamadazte

iMatcher: Improve matching in point cloud registration via local-to-global geometric consistency learning

Sep 10, 2025

Abstract:This paper presents iMatcher, a fully differentiable framework for feature matching in point cloud registration. The proposed method leverages learned features to predict a geometrically consistent confidence matrix, incorporating both local and global consistency. First, a local graph embedding module leads to an initialization of the score matrix. A subsequent repositioning step refines this matrix by considering bilateral source-to-target and target-to-source matching via nearest neighbor search in 3D space. The paired point features are then stacked together to be refined through global geometric consistency learning to predict a point-wise matching probability. Extensive experiments on real-world outdoor (KITTI, KITTI-360) and indoor (3DMatch) datasets, as well as on 6-DoF pose estimation (TUD-L) and partial-to-partial matching (MVP-RG), demonstrate that iMatcher significantly improves rigid registration performance. The method achieves state-of-the-art inlier ratios, scoring 95% - 97% on KITTI, 94% - 97% on KITTI-360, and up to 81.1% on 3DMatch, highlighting its robustness across diverse settings.
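The bilateral source-to-target and target-to-source check mentioned in the abstract can be illustrated with a generic mutual nearest neighbor filter. This is only a minimal sketch under stated assumptions: the function name `mutual_nearest_neighbors` and the brute-force distance computation are hypothetical and are not taken from iMatcher, whose repositioning step operates on a learned score matrix.

```python
import numpy as np

def mutual_nearest_neighbors(src, tgt):
    """Return index pairs (i, j) such that tgt[j] is the nearest target point
    to src[i] and src[i] is the nearest source point to tgt[j].
    Hypothetical helper for illustration, not the iMatcher module."""
    # Pairwise squared Euclidean distances, shape (N, M).
    d2 = ((src[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=-1)
    s2t = d2.argmin(axis=1)   # nearest target index for each source point
    t2s = d2.argmin(axis=0)   # nearest source index for each target point
    i = np.arange(src.shape[0])
    keep = t2s[s2t] == i      # keep only pairs that agree in both directions
    return np.stack([i[keep], s2t[keep]], axis=1)

src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [5.0, 5.0, 5.0]])
tgt = np.array([[0.1, 0.0, 0.0], [1.1, 0.0, 0.0]])
pairs = mutual_nearest_neighbors(src, tgt)
```

In this toy example the third source point has no mutual partner, so it is dropped from `pairs`.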

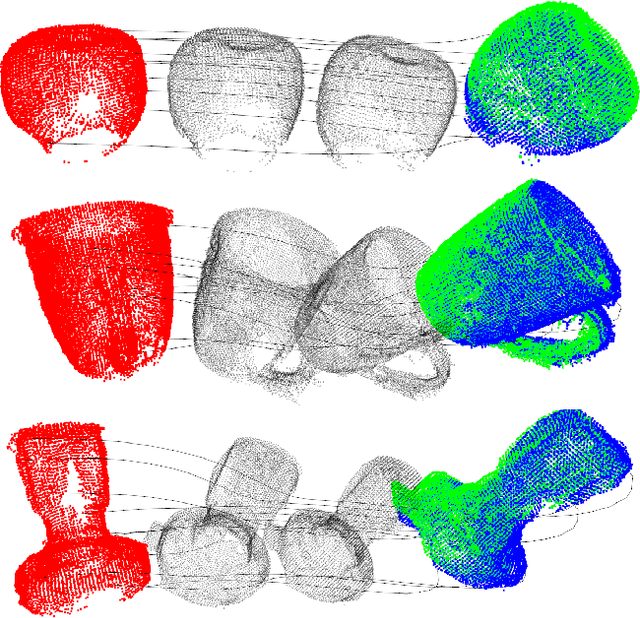

Geometrically-Aware One-Shot Skill Transfer of Category-Level Objects

Mar 19, 2025

Abstract:Robotic manipulation of unfamiliar objects in new environments is challenging and requires extensive training or laborious pre-programming. We propose a new skill transfer framework, which enables a robot to transfer complex object manipulation skills and constraints from a single human demonstration. Our approach addresses the challenge of skill acquisition and task execution by deriving geometric representations from demonstrations focusing on object-centric interactions. By leveraging the Functional Maps (FM) framework, we efficiently map interaction functions between objects and their environments, allowing the robot to replicate task operations across objects of similar topologies or categories, even when they have significantly different shapes. Additionally, our method incorporates a Task-Space Imitation Algorithm (TSIA) which generates smooth, geometrically-aware robot paths to ensure the transferred skills adhere to the demonstrated task constraints. We validate the effectiveness and adaptability of our approach through extensive experiments, demonstrating successful skill transfer and task execution in diverse real-world environments without requiring additional training.

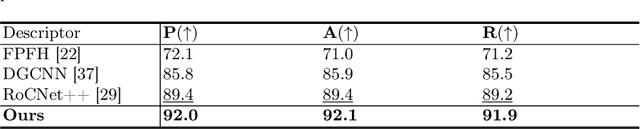

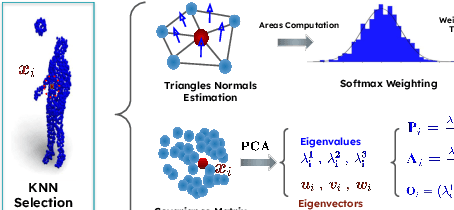

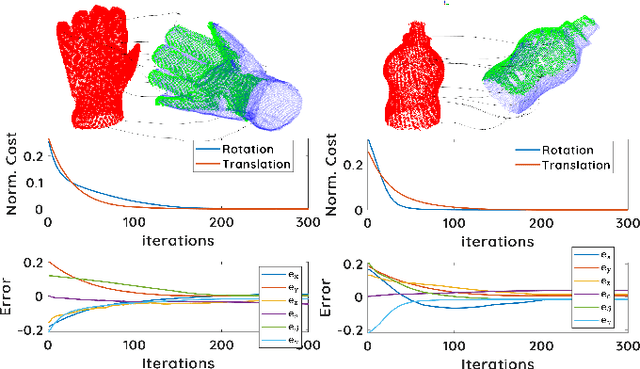

LoGDesc: Local geometric features aggregation for robust point cloud registration

Oct 03, 2024

Abstract:This paper introduces a new hybrid descriptor for 3D point matching and point cloud registration, combining local geometrical properties and learning-based feature propagation to describe each point's neighborhood structure. The proposed architecture first extracts prior geometrical information by computing each point's planarity, anisotropy, and omnivariance using Principal Component Analysis (PCA). This prior information is complemented by a descriptor based on normal vectors, which are estimated from a triangle-based neighborhood. The final geometrical descriptor is propagated between the points using local graph convolutions and attention mechanisms. The new feature extractor is evaluated on ModelNet40, the Stanford Bunny dataset, KITTI and MVP (Multi-View Partial)-RG for point cloud registration and shows interesting results, particularly on noisy and low-overlap point clouds.
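The PCA-based quantities named in the abstract are commonly defined from the eigenvalues of the local covariance matrix. The sketch below uses these widely used definitions as an assumption; the exact normalisation in LoGDesc may differ, and the function name `pca_shape_features` is hypothetical.

```python
import numpy as np

def pca_shape_features(neighbors):
    """Compute planarity, anisotropy and omnivariance of a (k, 3) neighborhood.
    Uses common eigenvalue-based definitions (an assumption, not confirmed
    by the abstract)."""
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / neighbors.shape[0]
    # eigvalsh returns ascending eigenvalues; reverse so that l1 >= l2 >= l3.
    l1, l2, l3 = np.clip(np.linalg.eigvalsh(cov)[::-1], 0.0, None)
    eps = 1e-12  # guards against division by zero for degenerate neighborhoods
    planarity = (l2 - l3) / (l1 + eps)
    anisotropy = (l1 - l3) / (l1 + eps)
    omnivariance = np.cbrt(l1 * l2 * l3)
    return planarity, anisotropy, omnivariance

# A flat, symmetric patch in the xy-plane: expected to be highly planar.
xs, ys = np.meshgrid(np.linspace(-1, 1, 5), np.linspace(-1, 1, 5))
patch = np.stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)], axis=1)
planarity, anisotropy, omnivariance = pca_shape_features(patch)
```

For this flat patch, planarity and anisotropy are close to 1 and omnivariance is close to 0, since the smallest eigenvalue vanishes.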

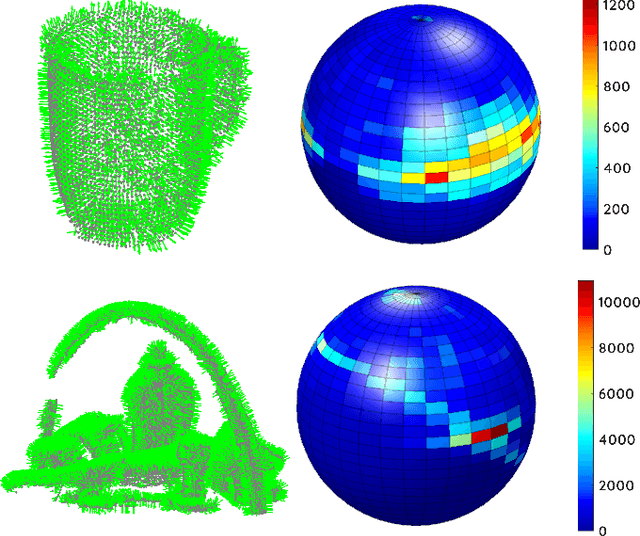

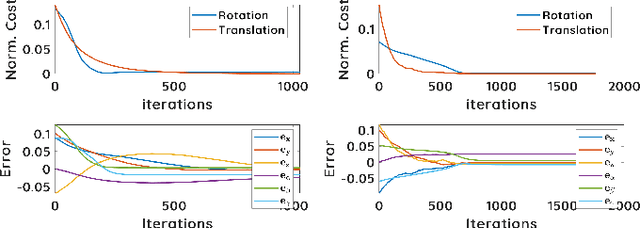

Asservissement visuel 3D direct dans le domaine spectral

Apr 03, 2023

Abstract:This paper presents a direct 3D visual servo scheme for the automatic alignment of point clouds (respectively, objects) using visual information in the spectral domain. Specifically, we propose an alignment method for 3D models/point clouds that works by estimating the global transformation between a reference point cloud and a target point cloud using harmonic domain data analysis. A 3D discrete Fourier transform (DFT) in $\mathbb{R}^3$ is used for translation estimation and real spherical harmonics in $SO(3)$ are used for rotation estimation. This approach allows us to derive a decoupled visual servo controller with 6 degrees of freedom. We then show how this approach can be used as a controller for a robotic arm to perform a positioning task. Unlike existing 3D visual servo methods, our method works well with partial point clouds and in cases of large initial transformations between the initial and desired position. Additionally, using spectral data (instead of spatial data) for the transformation estimation makes our method robust to sensor-induced noise and partial occlusions. Our method has been successfully validated experimentally on point clouds obtained with a depth camera mounted on a robotic arm.

3D Spectral Domain Registration-Based Visual Servoing

Mar 28, 2023

Abstract:This paper presents a spectral domain registration-based visual servoing scheme that works on 3D point clouds. Specifically, we propose a 3D model/point cloud alignment method, which works by finding a global transformation between reference and target point clouds using spectral analysis. A 3D Fast Fourier Transform (FFT) in $\mathbb{R}^3$ is used for the translation estimation, and the real spherical harmonics in $SO(3)$ are used for the rotation estimation. Such an approach allows us to derive a decoupled 6 degrees of freedom (DoF) controller, where we use gradient ascent optimisation to minimise translation and rotational costs. We then show how this methodology can be used to regulate a robot arm to perform a positioning task. In contrast to the existing state-of-the-art depth-based visual servoing methods that either require dense depth maps or dense point clouds, our method works well with partial point clouds and can effectively handle larger transformations between the reference and the target positions. Furthermore, the use of spectral data (instead of spatial data) for transformation estimation makes our method robust to sensor-induced noise and partial occlusions. We validate our approach by performing experiments using point clouds acquired by a robot-mounted depth camera. The results demonstrate the effectiveness of our visual servoing approach.
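One standard way to estimate a translation with a 3D FFT is phase correlation on voxelised volumes. The sketch below illustrates that general technique only; it assumes the point clouds have already been rasterised onto a regular grid and that the shift is an integer number of voxels with circular wrap-around. The function name `estimate_shift_3d` is hypothetical, and the paper's actual estimator and controller may differ.

```python
import numpy as np

def estimate_shift_3d(reference, moved):
    """Estimate the integer circular shift s such that
    moved == np.roll(reference, s, axis=(0, 1, 2)), via phase correlation."""
    F_ref = np.fft.fftn(reference)
    F_mov = np.fft.fftn(moved)
    cross = F_mov * np.conj(F_ref)
    cross /= np.abs(cross) + 1e-12      # discard magnitude, keep phase only
    corr = np.fft.ifftn(cross).real     # ideally a single peak at the shift
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks in the upper half of an axis correspond to negative shifts.
    return tuple(int(p - n) if p > n // 2 else int(p)
                 for p, n in zip(peak, corr.shape))

rng = np.random.default_rng(0)
volume = rng.random((16, 16, 16))       # stand-in for a voxelised point cloud
shifted = np.roll(volume, (3, -2, 5), axis=(0, 1, 2))
shift = estimate_shift_3d(volume, shifted)
```

Because only the phase of the cross-power spectrum is used, the correlation peak stays sharp even when the two volumes differ in overall intensity.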

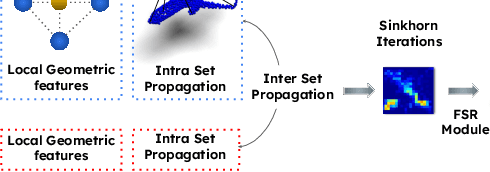

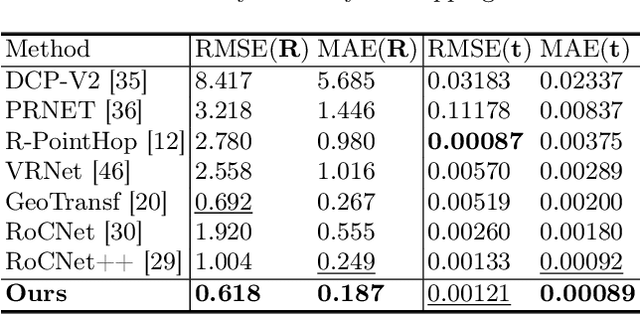

RoCNet: 3D Robust Registration of Point-Clouds using Deep Learning

Mar 14, 2023

Abstract:This paper introduces a new method for 3D point cloud registration based on deep learning. The architecture is composed of three distinct blocks: (i) an encoder composed of a convolutional graph-based descriptor that encodes the immediate neighbourhood of each point and an attention mechanism that encodes the variations of the surface normals, with the resulting descriptors refined by attention between the points of the same set and then between the points of the two sets; (ii) a matching process that estimates a matrix of correspondences using the Sinkhorn algorithm; and (iii) a final step in which the rigid transformation between the two point clouds is calculated by RANSAC using the Kc best scores from the correspondence matrix. We conduct experiments on the ModelNet40 dataset, and our proposed architecture shows very promising results, outperforming state-of-the-art methods in most of the simulated configurations, including partial overlap and data augmentation with Gaussian noise.
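The Sinkhorn algorithm used in the matching block turns a score matrix into an approximately doubly stochastic correspondence matrix by alternating row and column normalisation. The following is a minimal log-domain sketch for a square score matrix. It is an illustration of the generic algorithm, not RoCNet's implementation: the function names are hypothetical, and extra rows or columns for unmatched points, which matching networks often add, are omitted.

```python
import numpy as np

def _logsumexp(x, axis):
    # Numerically stable log(sum(exp(x))) along one axis, keeping dimensions.
    m = x.max(axis=axis, keepdims=True)
    return m + np.log(np.exp(x - m).sum(axis=axis, keepdims=True))

def sinkhorn(scores, n_iters=100):
    """Alternately normalise rows and columns of exp(scores) in the log domain.
    For a square input the result is approximately doubly stochastic."""
    log_p = np.asarray(scores, dtype=float)
    for _ in range(n_iters):
        log_p = log_p - _logsumexp(log_p, axis=1)  # each row sums to 1
        log_p = log_p - _logsumexp(log_p, axis=0)  # each column sums to 1
    return np.exp(log_p)

rng = np.random.default_rng(0)
P = sinkhorn(rng.normal(size=(4, 4)))
```

Each entry of `P` can then be read as a soft matching score between a source point (row) and a target point (column), from which the best-scoring pairs can be selected.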

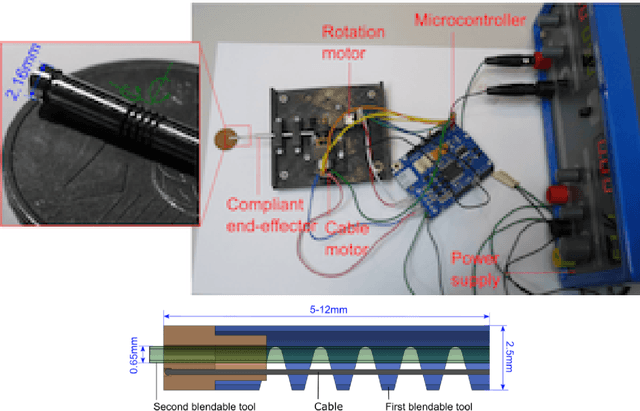

Safe Path following for Middle Ear Surgery

Jan 03, 2023

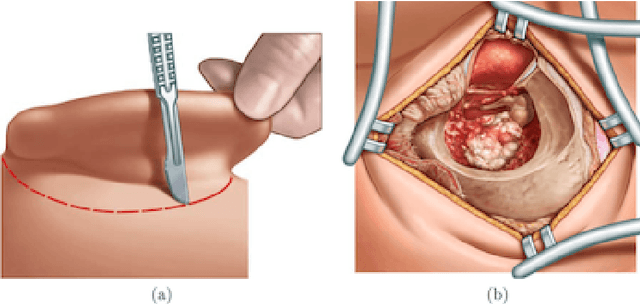

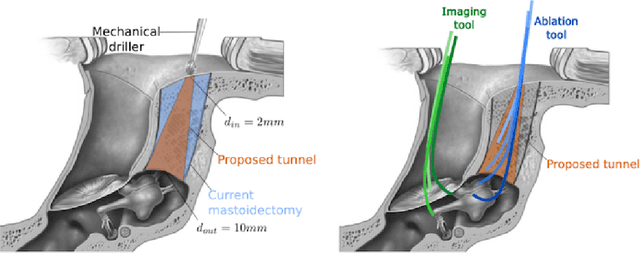

Abstract:This article formulates a generic representation of a path-following controller operating under constrained motion, which was developed in the context of surgical robotics. It reports two types of constrained motion: i) Bilaterally Constrained Motion, also called Remote Center Motion (RCM), and ii) Unilaterally Constrained Motion (UCM). In the first case, the incision hole has almost the same diameter as the robotic tool. In contrast, in the second case, the diameter of the incision orifice is larger than the tool diameter, which offers more space where the surgical instrument moves freely without constraints before touching the incision wall. The proposed method combines two tasks that must operate hierarchically: i) respect the RCM or UCM constraints formulated by equality or inequality, respectively, and ii) perform a surgical assignment, e.g., scanning or ablation expressed as a 3D path-following task. The proposed methods and materials were tested first on our simulator that mimics realistic conditions of middle ear surgery, and then on an experimental platform. Different validation scenarios were carried out experimentally to assess quantitatively and qualitatively each developed approach. Although ultimate precision was not the goal of this work, our concept is validated with enough accuracy (below 100 micrometres) for ear surgery.
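A common way to combine two tasks hierarchically is null-space projection: the secondary task is executed only in the joint-space directions that do not disturb the primary one. The sketch below shows this generic scheme for an equality constraint such as RCM. It is an assumption for illustration, not the controller from the article; the names `prioritized_velocity`, `J1`, `v1`, `J2` and `v2` are hypothetical, and the inequality (UCM) case is not covered.

```python
import numpy as np

def prioritized_velocity(J1, v1, J2, v2):
    """Joint velocity that achieves the primary task (J1 @ q_dot = v1) and,
    within its null space, tracks the secondary task (J2 @ q_dot = v2) as
    closely as possible."""
    J1_pinv = np.linalg.pinv(J1)
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1      # null-space projector of J1
    q1 = J1_pinv @ v1                            # minimum-norm primary solution
    q2 = np.linalg.pinv(J2 @ N1) @ (v2 - J2 @ q1)
    return q1 + N1 @ q2

rng = np.random.default_rng(0)
J1 = rng.normal(size=(2, 6))   # stand-in constraint Jacobian (e.g. RCM)
J2 = rng.normal(size=(3, 6))   # stand-in path-following Jacobian
v1 = np.zeros(2)               # keep the constraint error at zero
v2 = np.array([0.01, 0.0, -0.02])
q_dot = prioritized_velocity(J1, v1, J2, v2)
```

With six joints and five task dimensions in this toy setup, both tasks can be met exactly; when they conflict, the primary task is still satisfied and the secondary one is fulfilled in the least-squares sense.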

Contributions à l'asservissement visuel et à l'imagerie en médecine

Aug 22, 2022

Abstract:This manuscript gives an overview of my research work carried out within the FEMTO-ST institute in Besançon, more particularly in the Automatic and Micro-Mechatronic Systems (AS2M) department. It is above all the result of my (co)-supervision of interns, PhD students and postdocs. I would like to pay tribute to them, for their major contribution to scientific research, here and elsewhere.

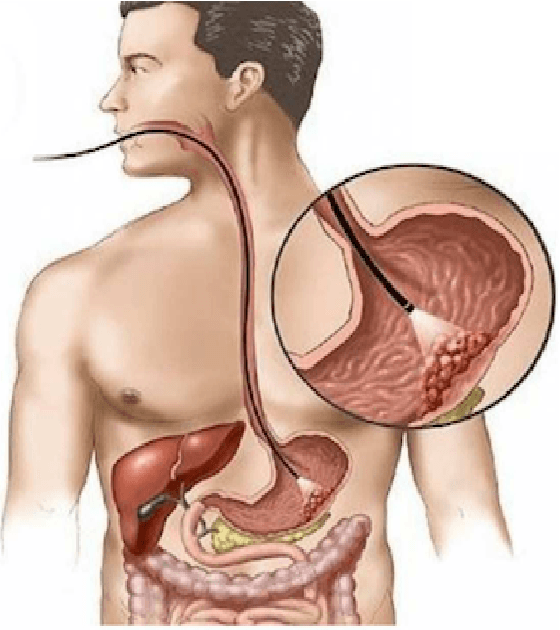

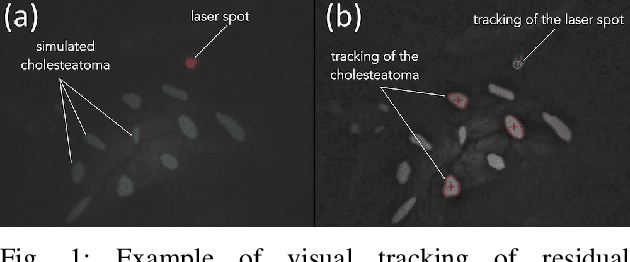

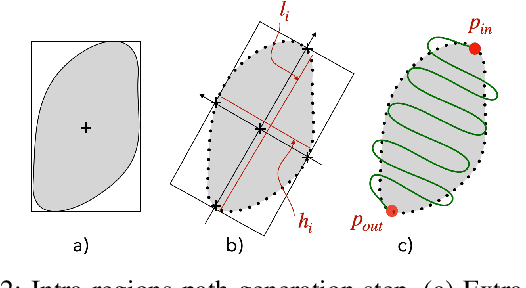

Automatic laser steering for middle ear surgery

Aug 18, 2022

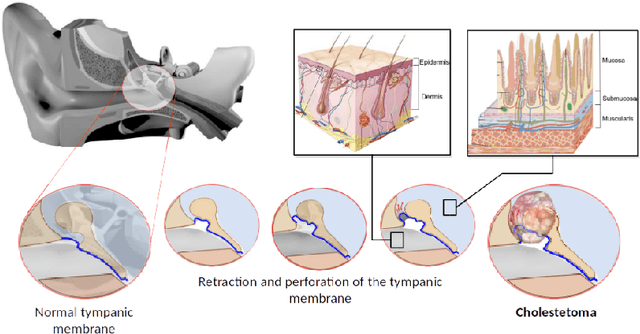

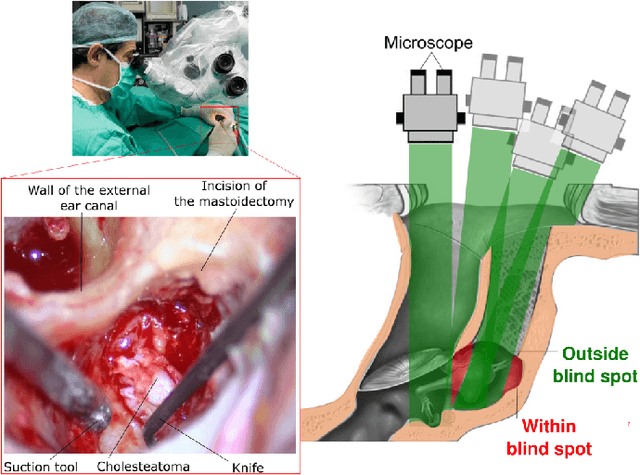

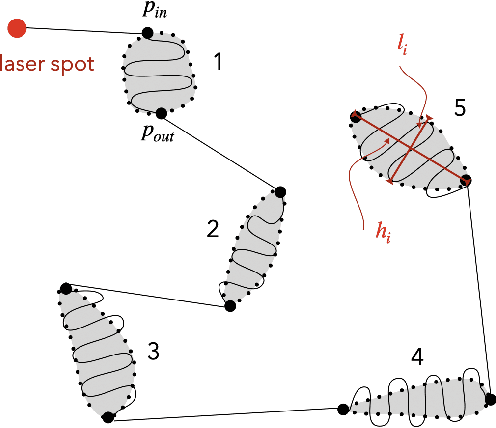

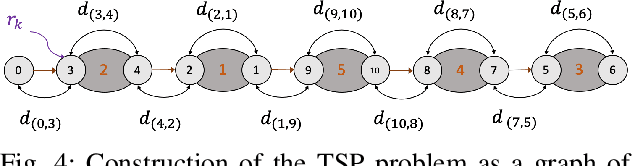

Abstract:This paper deals with the control of a laser spot in the context of minimally invasive surgery of the middle ear, e.g., cholesteatoma removal. More precisely, our work is concerned with the exhaustive burring of residual infected cells after primary mechanical resection of the pathological tissues. Since the latter cannot guarantee the treatment of all the infected tissues, the remaining infected cells cause regeneration of the disease in 20%-25% of cases, which requires a second surgery 12-18 months later. To tackle such a complex surgery, we have developed a robotic platform that combines a macro-scale system (a robotic arm with 7 degrees of freedom (DoFs)) and a micro-scale flexible system (2 DoFs) which operates inside the middle ear cavity. To be able to treat the residual cholesteatoma regions, we propose a method to automatically generate optimal laser scanning trajectories inside the regions and between them. The trajectories are tracked using an image-based control scheme. The proposed method and materials were validated experimentally using the lab-made robotic platform. The obtained results in terms of accuracy and behaviour fully meet the laser surgery requirements.

Robust RGB-D Fusion for Saliency Detection

Aug 02, 2022

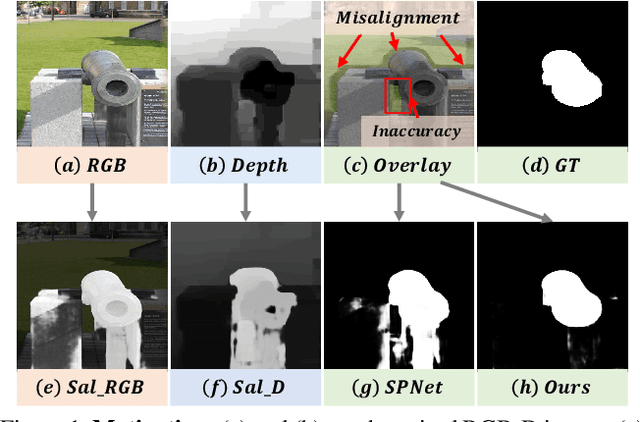

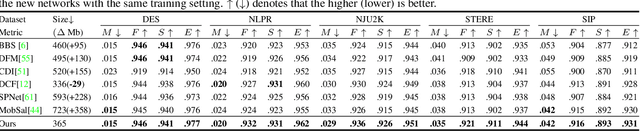

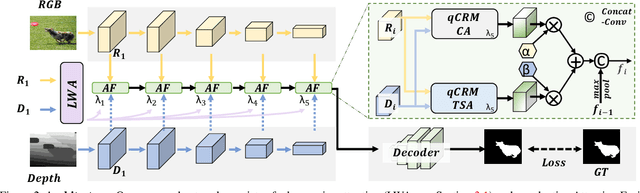

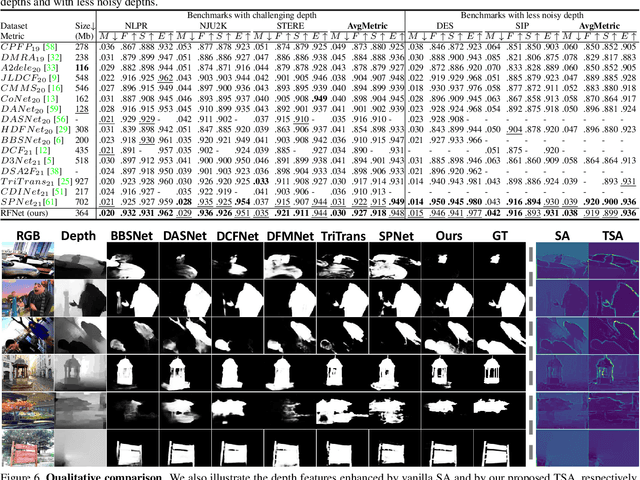

Abstract:Efficiently exploiting multi-modal inputs for accurate RGB-D saliency detection is a topic of high interest. Most existing works leverage cross-modal interactions to fuse the two streams of RGB-D for intermediate features' enhancement. In this process, a practical aspect of the low quality of the available depths has not been fully considered yet. In this work, we aim for RGB-D saliency detection that is robust to the low-quality depths which primarily appear in two forms: inaccuracy due to noise and the misalignment to RGB. To this end, we propose a robust RGB-D fusion method that benefits from (1) layer-wise, and (2) trident spatial, attention mechanisms. On the one hand, layer-wise attention (LWA) learns the trade-off between early and late fusion of RGB and depth features, depending upon the depth accuracy. On the other hand, trident spatial attention (TSA) aggregates the features from a wider spatial context to address the depth misalignment problem. The proposed LWA and TSA mechanisms allow us to efficiently exploit the multi-modal inputs for saliency detection while being robust against low-quality depths. Our experiments on five benchmark datasets demonstrate that the proposed fusion method performs consistently better than the state-of-the-art fusion alternatives.
