Maxime Adjigble

Geometrically-Aware One-Shot Skill Transfer of Category-Level Objects

Mar 19, 2025

Abstract: Robotic manipulation of unfamiliar objects in new environments is challenging and requires extensive training or laborious pre-programming. We propose a new skill transfer framework, which enables a robot to transfer complex object manipulation skills and constraints from a single human demonstration. Our approach addresses the challenge of skill acquisition and task execution by deriving geometric representations from demonstrations focusing on object-centric interactions. By leveraging the Functional Maps (FM) framework, we efficiently map interaction functions between objects and their environments, allowing the robot to replicate task operations across objects of similar topologies or categories, even when they have significantly different shapes. Additionally, our method incorporates a Task-Space Imitation Algorithm (TSIA) which generates smooth, geometrically-aware robot paths to ensure the transferred skills adhere to the demonstrated task constraints. We validate the effectiveness and adaptability of our approach through extensive experiments, demonstrating successful skill transfer and task execution in diverse real-world environments without requiring additional training.
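To make the functional-map idea in this abstract concrete, here is a minimal NumPy sketch of the standard formulation: a function on a source shape is projected onto a truncated basis, its coefficients are mapped by a small matrix `C`, and the result is re-synthesised on the target shape. The function names, the least-squares fit, and the choice of basis are illustrative assumptions, not the paper's implementation; in practice the bases are usually Laplace-Beltrami eigenfunctions of each shape.

```python
import numpy as np


def fit_functional_map(coeffs_src, coeffs_dst):
    """Least-squares functional map C such that C @ coeffs_src ~= coeffs_dst.

    Each column of coeffs_src / coeffs_dst holds the basis coefficients of one
    corresponding descriptor function on the source / target shape.
    (Hypothetical helper; the paper may estimate C differently.)
    """
    # Solve coeffs_src.T @ C.T = coeffs_dst.T in the least-squares sense.
    c_transposed, *_ = np.linalg.lstsq(coeffs_src.T, coeffs_dst.T, rcond=None)
    return c_transposed.T


def transfer_function(f_src, basis_src, basis_dst, C):
    """Transfer a per-vertex function from the source to the target shape."""
    coeffs = np.linalg.pinv(basis_src) @ f_src  # project onto the source basis
    return basis_dst @ (C @ coeffs)             # map coefficients, re-synthesise
```

Here `basis_src` has shape `(n_src, k)` and `basis_dst` has shape `(n_dst, k)`, so the two shapes may have different vertex counts while `C` stays a small `k x k` matrix.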

Haptic-guided assisted telemanipulation approach for grasping desired objects from heaps

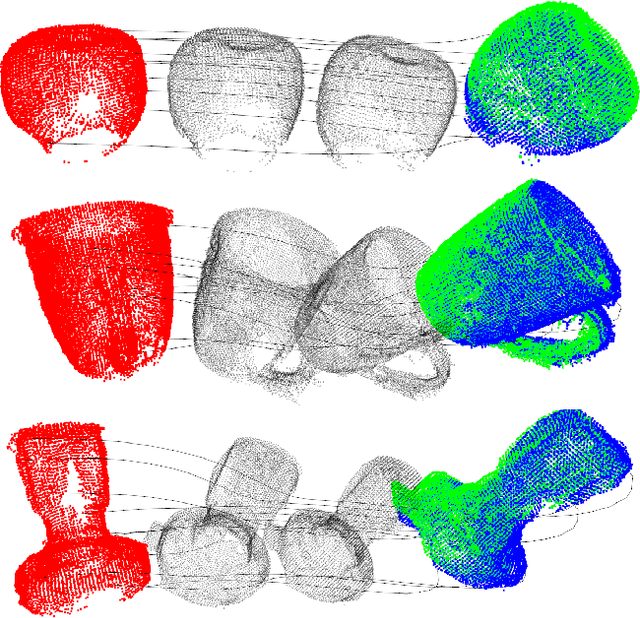

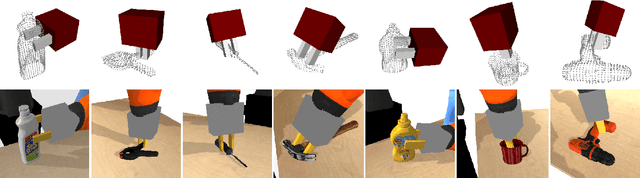

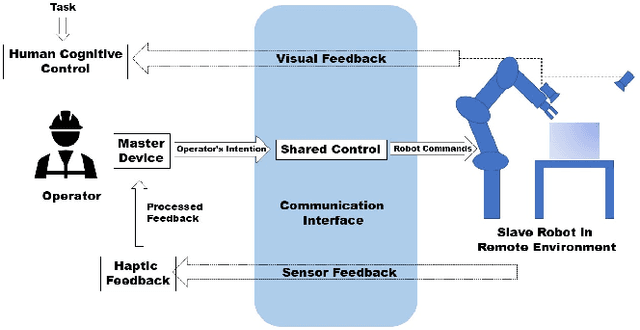

Jul 13, 2023

Abstract: This paper presents an assisted telemanipulation framework for reaching and grasping desired objects from clutter. Specifically, the developed system allows an operator to select an object from a cluttered heap and effortlessly grasp it, with the system assisting in selecting the best grasp and guiding the operator to reach it. To this end, we propose an object pose estimation scheme, a dynamic grasp re-ranking strategy, and a reach-to-grasp hybrid force/position trajectory guidance controller. We integrate them, along with our previous SpectGRASP grasp planner, into a classical bilateral teleoperation system that allows the operator to control the robot using a haptic device while receiving force feedback. For a user-selected object, our system first identifies the object in the heap and estimates its full six degrees of freedom (DoF) pose. Then, SpectGRASP generates a set of ordered, collision-free grasps for this object. Based on the current location of the robot gripper, the proposed grasp re-ranking strategy dynamically updates the best grasp. In assisted mode, the hybrid controller generates a zero force-torque path along the reach-to-grasp trajectory while automatically controlling the orientation of the robot. We conducted real-world experiments using a haptic device and a 7-DoF cobot with a 2-finger gripper to validate the individual components of our telemanipulation system and its overall functionality. The results demonstrate the effectiveness of our system in assisting humans to clear cluttered scenes.

Asservissement visuel 3D direct dans le domaine spectral

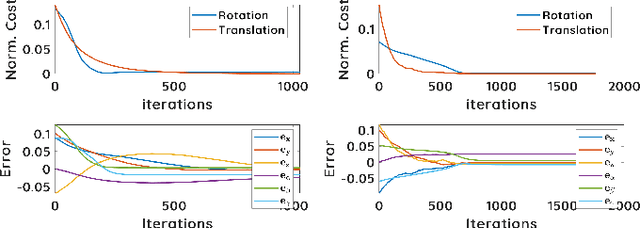

Apr 03, 2023

Abstract: This paper presents a direct 3D visual servo scheme for the automatic alignment of point clouds (respectively, objects) using visual information in the spectral domain. Specifically, we propose an alignment method for 3D models/point clouds that works by estimating the global transformation between a reference point cloud and a target point cloud using harmonic domain data analysis. A 3D discrete Fourier transform (DFT) in $\mathbb{R}^3$ is used for translation estimation and real spherical harmonics in $SO(3)$ are used for rotation estimation. This approach allows us to derive a decoupled visual servo controller with 6 degrees of freedom. We then show how this approach can be used as a controller for a robotic arm to perform a positioning task. Unlike existing 3D visual servo methods, our method works well with partial point clouds and with large transformations between the initial and desired positions. Additionally, using spectral data (instead of spatial data) for the transformation estimation makes our method robust to sensor-induced noise and partial occlusions. Our method has been successfully validated experimentally on point clouds obtained with a depth camera mounted on a robotic arm.

3D Spectral Domain Registration-Based Visual Servoing

Mar 28, 2023

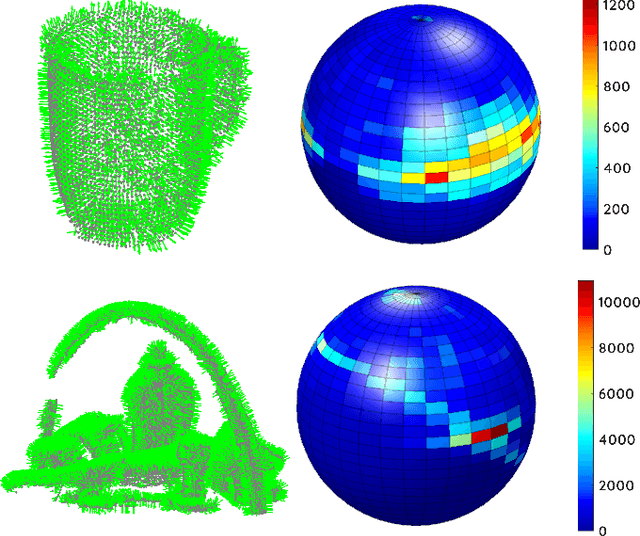

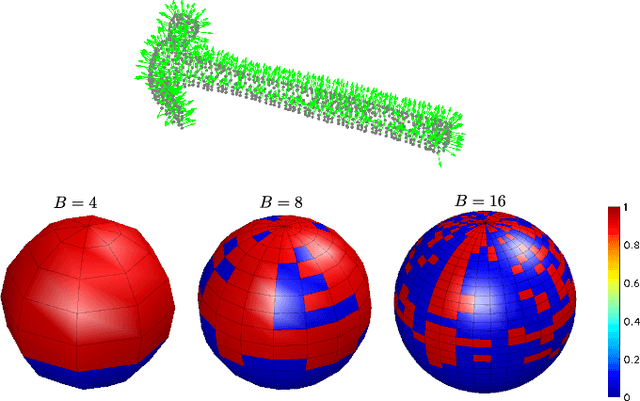

Abstract: This paper presents a spectral domain registration-based visual servoing scheme that works on 3D point clouds. Specifically, we propose a 3D model/point cloud alignment method, which works by finding a global transformation between reference and target point clouds using spectral analysis. A 3D Fast Fourier Transform (FFT) in $\mathbb{R}^3$ is used for the translation estimation, and the real spherical harmonics in $SO(3)$ are used for the rotation estimation. Such an approach allows us to derive a decoupled 6 degrees of freedom (DoF) controller, where we use gradient ascent optimisation to minimise translation and rotational costs. We then show how this methodology can be used to regulate a robot arm to perform a positioning task. In contrast to the existing state-of-the-art depth-based visual servoing methods that require either dense depth maps or dense point clouds, our method works well with partial point clouds and can effectively handle larger transformations between the reference and the target positions. Furthermore, the use of spectral data (instead of spatial data) for transformation estimation makes our method robust to sensor-induced noise and partial occlusions. We validate our approach by performing experiments using point clouds acquired by a robot-mounted depth camera. The results demonstrate the effectiveness of our visual servoing approach.
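As an illustration of FFT-based translation estimation, the following is a minimal sketch using classical phase correlation on voxelised point clouds. It is a generic technique swapped in for illustration, not the controller described in the abstract; the function name `estimate_translation`, the voxel-grid input, and the assumption of a purely circular integer shift are all hypothetical simplifications.

```python
import numpy as np


def estimate_translation(reference, target):
    """Estimate the integer voxel shift that maps `reference` onto `target`.

    Both inputs are 3D occupancy grids of equal shape. The shift is assumed
    to be circular (wrap-around), which is what the DFT shift theorem models.
    """
    f_ref = np.fft.fftn(reference)
    f_tgt = np.fft.fftn(target)

    # Normalised cross-power spectrum: keep only the phase difference.
    cross = f_tgt * np.conj(f_ref)
    cross /= np.maximum(np.abs(cross), 1e-12)

    # The inverse transform peaks at the translation between the grids.
    correlation = np.fft.ifftn(cross).real
    peak = np.array(np.unravel_index(np.argmax(correlation), correlation.shape))

    # Map peaks beyond half the grid size to negative shifts.
    shape = np.array(correlation.shape)
    return np.where(peak > shape // 2, peak - shape, peak)
```

Because the whole correlation volume is evaluated at once, the estimate does not depend on the two grids starting close together, which is the intuition behind handling large initial displacements.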

SpectGRASP: Robotic Grasping by Spectral Correlation

Jul 26, 2021

Abstract: This paper presents a spectral correlation-based method (SpectGRASP) for robotic grasping of arbitrarily shaped, unknown objects. Given a point cloud of an object, SpectGRASP extracts contact points on the object's surface matching the hand configuration. It requires neither offline training nor a priori object models. We propose a novel Binary Extended Gaussian Image (BEGI), which represents the point cloud surface normals of both object and robot fingers as signals on a 2-sphere. Spherical harmonics are then used to estimate the correlation between fingers and object BEGIs. The resulting spectral correlation density function provides a similarity measure of gripper and object surface normals. This is highly efficient in that it is simultaneously evaluated at all possible finger rotations in SO(3). A set of contact points is then extracted for each finger using rotations with high correlation values. We then use our previous work, the Local Contact Moment (LoCoMo) similarity metric, to sequentially rank the generated grasps such that the one with maximum likelihood is executed. We evaluate the performance of SpectGRASP by conducting experiments with a 7-axis robot fitted with a parallel-jaw gripper, in a physics simulation environment. The results indicate that the method can not only grasp individual objects but can also successfully clear randomly organized groups of objects. The SpectGRASP method also outperforms the closest state-of-the-art method in terms of grasp generation time and grasp efficiency.

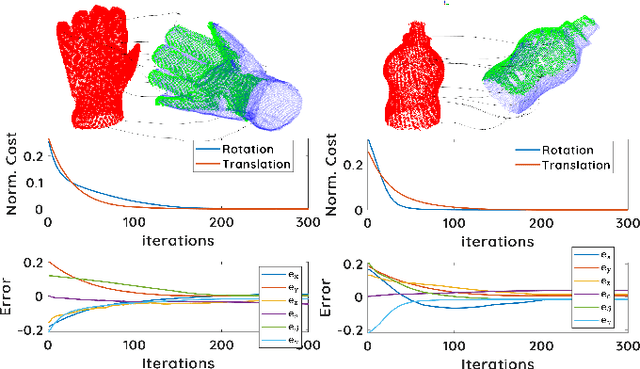

Dual Quaternion-Based Visual Servoing for Grasping Moving Objects

Jul 17, 2021

Abstract: This paper presents a new dual quaternion-based formulation for pose-based visual servoing. Extending our previous work on local contact moment (LoCoMo) based grasp planning, we demonstrate grasping of arbitrarily moving objects in 3D space. Instead of using the conventional axis-angle parameterization, dual quaternions allow designing the visual servoing task in a more compact manner and provide robustness to manipulator singularities. Given an object point cloud, LoCoMo generates a ranked list of grasp and pre-grasp poses, which are used as desired poses for visual servoing. Whenever the object moves (tracked by visual marker tracking), the desired pose updates automatically. For this, capitalising on the dual quaternion spatial distance error, we propose a dynamic grasp re-ranking metric to select the best feasible grasp for the moving object. This allows the robot to readily track and grasp arbitrarily moving objects. We also explore the robot null-space with our controller to avoid joint limits and achieve smooth trajectories while following moving objects. We evaluate the performance of the proposed visual servoing by conducting simulation experiments of grasping various objects using a 7-axis robot fitted with a 2-finger gripper. The results demonstrate the efficiency of our proposed visual servoing.
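For readers unfamiliar with dual quaternions, here is a minimal sketch of how a rigid pose can be encoded as a unit dual quaternion and how a relative pose error between a current and a desired pose can be formed. The helper names, the `[w, x, y, z]` ordering, and the error definition `conj(desired) * current` are assumptions made for illustration; the paper's exact error and control law may differ.

```python
import numpy as np


def qmul(p, q):
    """Hamilton product of two quaternions stored as [w, x, y, z]."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def qconj(q):
    """Quaternion conjugate."""
    return q * np.array([1.0, -1.0, -1.0, -1.0])


def pose_to_dq(rotation, translation):
    """Unit dual quaternion (real, dual) from a unit quaternion and a translation."""
    r = np.asarray(rotation, dtype=float)
    t = np.concatenate(([0.0], np.asarray(translation, dtype=float)))
    return r, 0.5 * qmul(t, r)  # dual part is (1/2) * t * r


def dq_mul(a, b):
    """Dual quaternion product; composes the two rigid transforms."""
    return qmul(a[0], b[0]), qmul(a[0], b[1]) + qmul(a[1], b[0])


def dq_conj(a):
    """Quaternion conjugate of both parts; the inverse of a unit dual quaternion."""
    return qconj(a[0]), qconj(a[1])


def dq_translation(a):
    """Recover the translation vector: t = 2 * dual * conj(real)."""
    return 2.0 * qmul(a[1], qconj(a[0]))[1:]


def pose_error(current, desired):
    """Relative transform conj(desired) * current (identity when poses coincide)."""
    return dq_mul(dq_conj(desired), current)
```

A single eight-number object carries both rotation and translation, so the servoing error is obtained with one product rather than separate rotation and position terms.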

Metrics and Benchmarks for Remote Shared Controllers in Industrial Applications

Jun 19, 2019

Abstract: Remote manipulation is emerging as one of the key robotics tasks needed in extreme environments. Several researchers have investigated how to add AI components into shared controllers to improve their reliability. Nonetheless, the uptake of novel research approaches in real-world applications can be very slow. We propose a set of benchmarks and metrics to evaluate how the AI components of remote shared control algorithms can improve the effectiveness of such frameworks for real industrial applications. We also present an empirical evaluation of a simple intelligent shared controller against a manually operated manipulator in a tele-operated grasping scenario.

Hypothesis-based Belief Planning for Dexterous Grasping

Mar 13, 2019

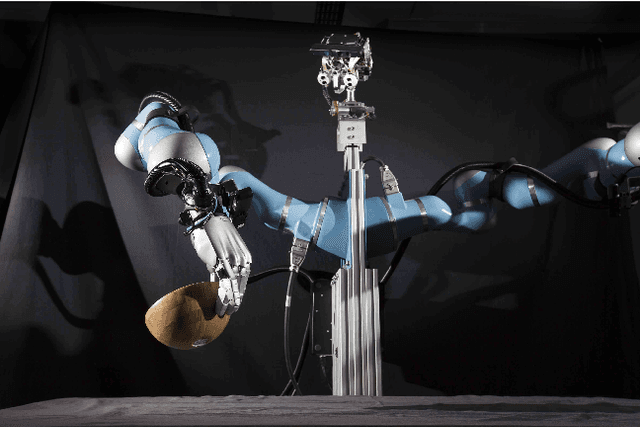

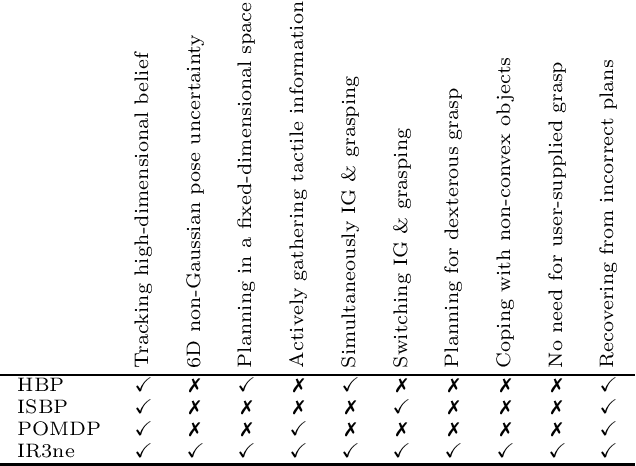

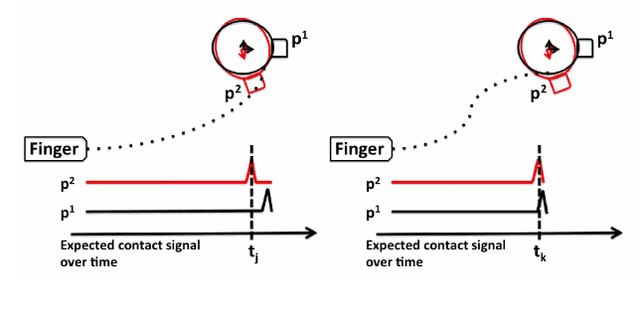

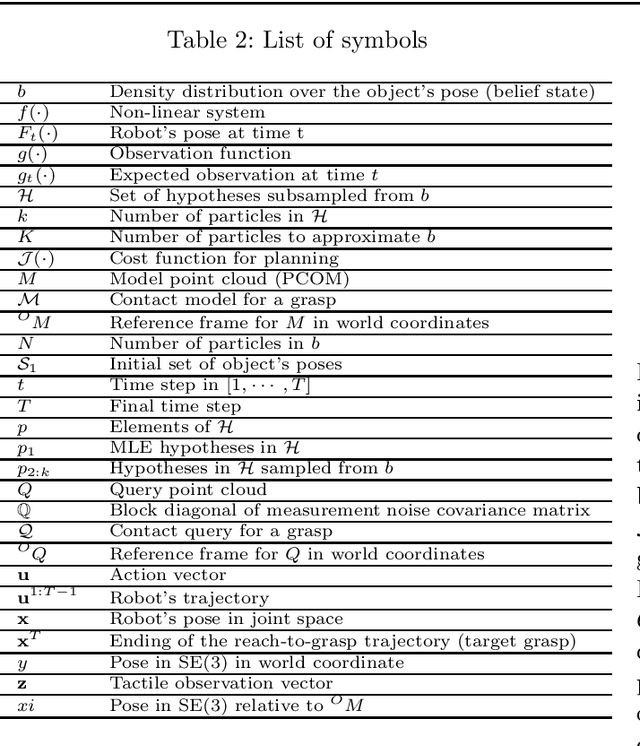

Abstract: Belief space planning is a viable way to formalise partially observable control problems and, in recent years, its application to robot manipulation problems has grown. However, this planning approach has been applied successfully only to simplified control problems. In this paper, we apply belief space planning to the problem of planning dexterous reach-to-grasp trajectories under object pose uncertainty. In our framework, the robot perceives the object to be grasped on-the-fly as a point cloud and computes a full 6D, non-Gaussian distribution over the object's pose (our belief space). The system places no restrictions on the geometry of the object (non-convex objects can be represented), nor does it assume that the point cloud is a complete representation of the object. A plan in the belief space is then created to reach and grasp the object, such that the information value of expected contacts along the trajectory is maximised to compensate for the pose uncertainty. If an unexpected contact occurs when performing the action, this information is used to refine the pose distribution and trigger re-planning. Experimental results show that our planner (IR3ne) improves grasp reliability and compensates for the pose uncertainty such that it doubles the proportion of grasps that succeed on a first attempt.
