Marek Kopicki

3D Reconstruction of non-visible surfaces of objects from a Single Depth View -- Comparative Study

Jan 27, 2025

Abstract: Scene and object reconstruction is an important problem in robotics, in particular for planning collision-free trajectories and for object manipulation. This paper compares two strategies for reconstructing the non-visible parts of an object's surface from a single RGB-D camera view. The first method, DeepSDF, predicts the Signed Distance Transform to the object surface for a given point in 3D space. The second method, MirrorNet, reconstructs the occluded parts of objects by generating images from the other side of the observed object. Experiments performed with objects from the ShapeNet dataset show that the view-dependent MirrorNet is faster and has smaller reconstruction errors in most categories.
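To make the notion of a signed distance concrete, here is a minimal sketch that evaluates it analytically for a sphere. This is an illustration only, not the method compared in the paper: DeepSDF learns such a function with a neural network, whereas the function name `sphere_sdf` and its parameters are assumptions introduced here.

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from a 3D point to a sphere's surface.

    Negative inside the sphere, zero on the surface, positive outside.
    (Hypothetical helper for illustration; a learned model would replace it.)
    """
    return math.dist(point, center) - radius


if __name__ == "__main__":
    # Query a few points, including ones a camera could not observe directly.
    for p in [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, -2.0)]:
        d = sphere_sdf(p)
        side = "inside" if d < 0 else "on surface" if d == 0 else "outside"
        print(p, d, side)
```

The surface is the set of points where the function is zero, so a model that predicts this value for arbitrary query points implicitly describes the occluded side of the object as well.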

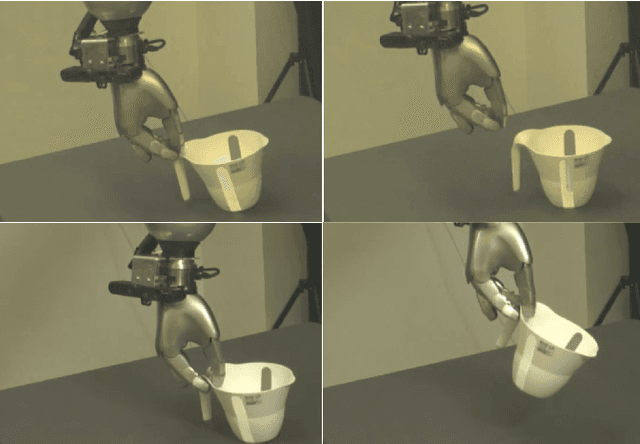

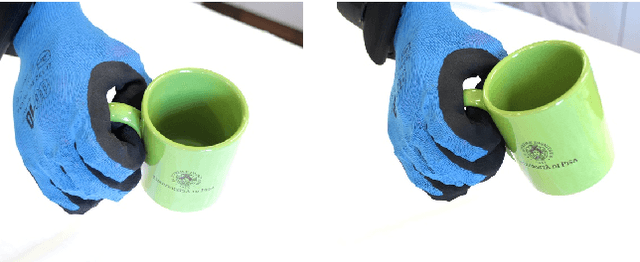

Underactuated dexterous robotic grasping with reconfigurable passive joints

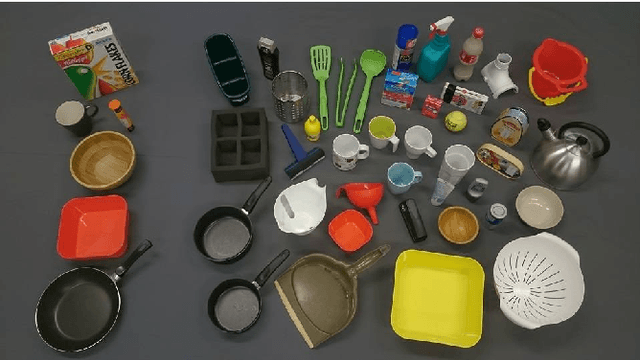

Jan 27, 2025

Abstract: We introduce a novel reconfigurable passive joint (RP-joint), which has been implemented and tested on an underactuated three-finger robotic gripper. The RP-joint has no actuation; instead, it is lightweight and compact. It can be easily reconfigured by applying external forces and locked to perform complex dexterous manipulation tasks, but only after tension is applied to the connected tendon. Additionally, we present an approach that allows learning dexterous grasps from single examples with underactuated grippers and automatically configures the RP-joints for dexterous manipulation. This is enhanced by integrating kinaesthetic contact optimization, which improves grasp performance even further. The proposed RP-joint gripper and grasp planner have been tested on over 370 grasps executed on 42 IKEA objects and on the YCB object dataset, achieving grasping success rates of 80% on IKEA and 87% on YCB.

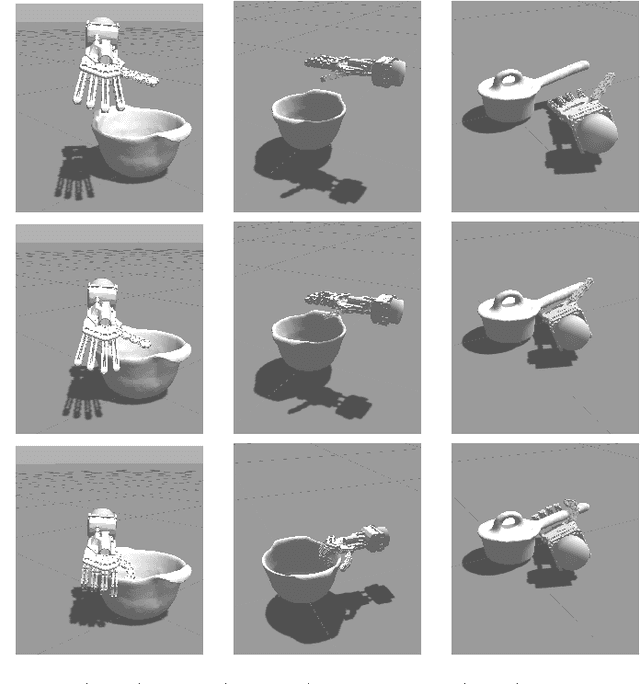

Deep Dexterous Grasping of Novel Objects from a Single View

Aug 10, 2019

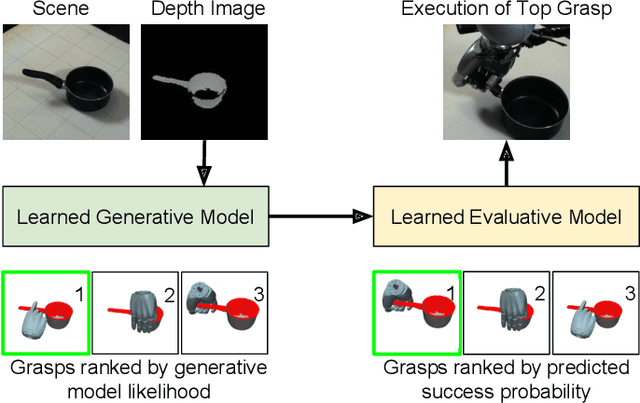

Abstract: Dexterous grasping of a novel object given a single view is an open problem. This paper makes several contributions to its solution. First, we present a simulator for generating and testing dexterous grasps. Second, we present a data set, generated by this simulator, of 2.4 million simulated dexterous grasps of variations of 294 base objects drawn from 20 categories. Third, we present a basic architecture for generation and evaluation of dexterous grasps that may be trained in a supervised manner. Fourth, we present three different evaluative architectures, employing ResNet-50 or VGG16 as their visual backbone. Fifth, we train and evaluate seventeen variants of generative-evaluative architectures on this simulated data set, showing improvement from 69.53% grasp success rate to 90.49%. Finally, we present a real robot implementation and evaluate the four most promising variants, executing 196 real robot grasps in total. We show that our best architectural variant achieves a grasp success rate of 87.8% on real novel objects seen from a single view, improving on a baseline of 57.1%.

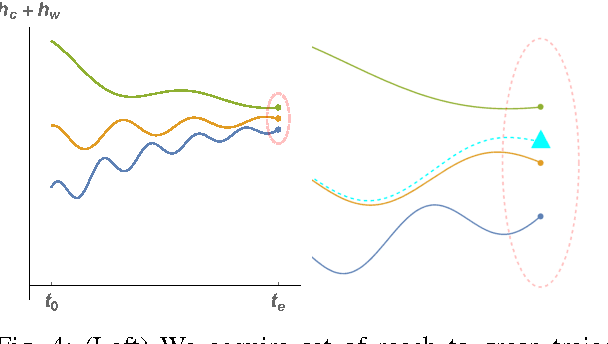

Learning better generative models for dexterous, single-view grasping of novel objects

Jul 13, 2019

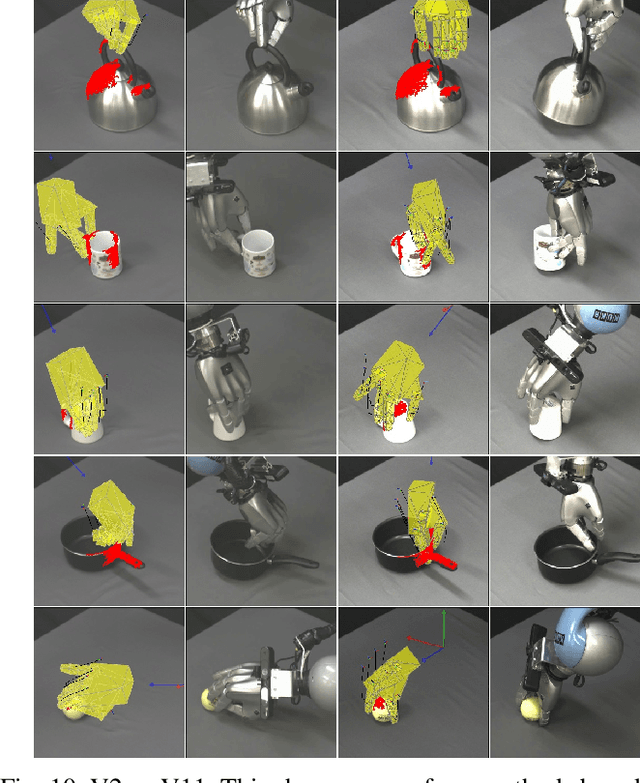

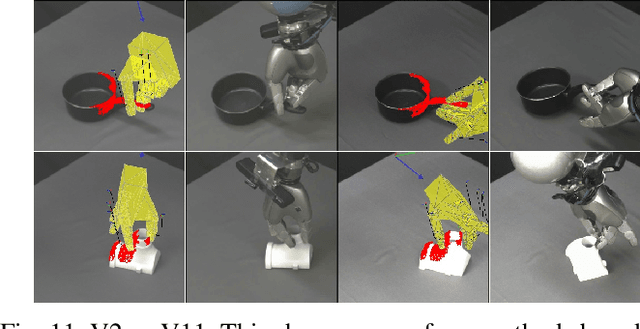

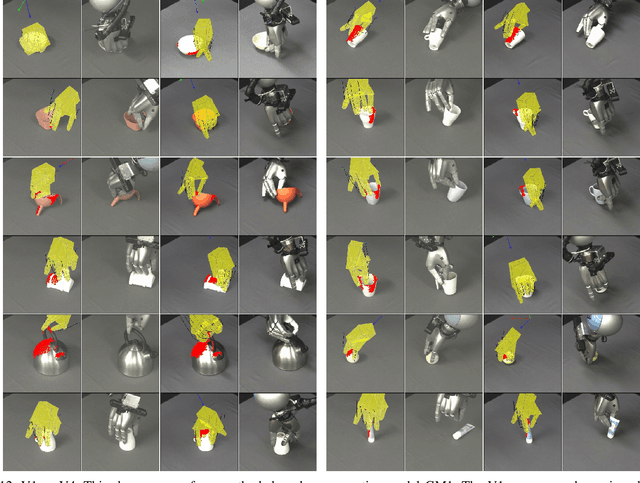

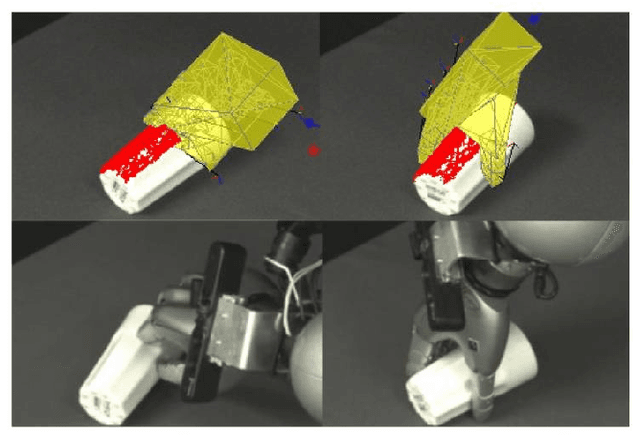

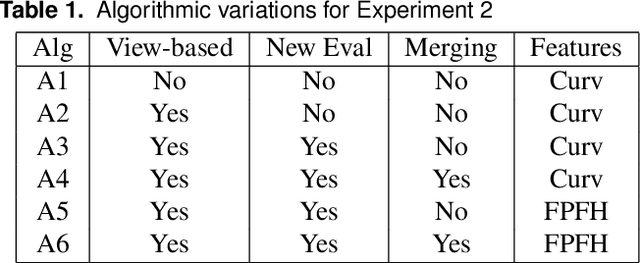

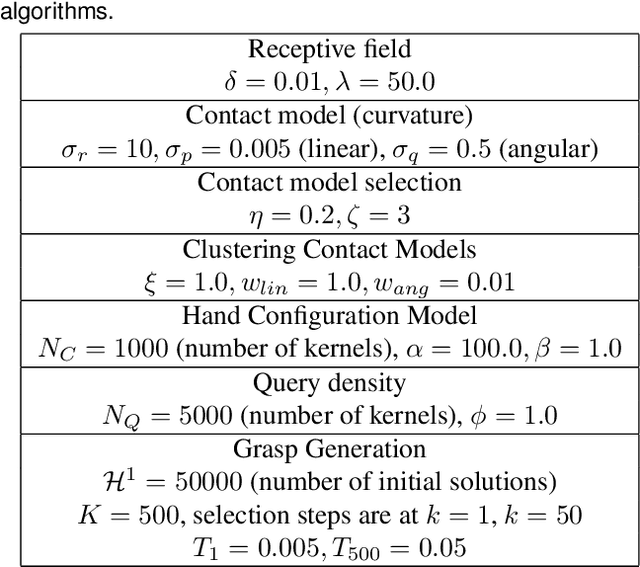

Abstract: This paper concerns the problem of how to learn to grasp dexterously, so as to be able to then grasp novel objects seen only from a single viewpoint. Recently, progress has been made in data-efficient learning of generative grasp models which transfer well to novel objects. These generative grasp models are learned from demonstration (LfD). One weakness is that, as this paper shall show, grasp transfer under challenging single-view conditions is unreliable. A second is that the number of generative model elements rises linearly in the number of training examples. This, in turn, limits the potential of these generative models for generalisation and continual improvement. In this paper, it is shown how to address these problems. Several technical contributions are made: (i) a view-based model of a grasp; (ii) a method for combining and compressing multiple grasp models; (iii) a new way of evaluating contacts that is used both to generate and to score grasps. Together, these both improve grasp performance and reduce the number of models learned for grasp transfer. These advances, in turn, also allow the introduction of autonomous training, in which the robot learns from self-generated grasps. Evaluation on a challenging test set shows that, with innovations (i)-(iii) deployed, grasp transfer success rises from 55.1% to 81.6%. By adding autonomous training this rises to 87.8%. These differences are statistically significant. In total, across all experiments, 539 test grasps were executed on real objects.

Generative grasp synthesis from demonstration using parametric mixtures

Jun 27, 2019

Abstract: We present a parametric formulation for learning generative models for grasp synthesis from a demonstration. We cast new light on this family of approaches, proposing a parametric formulation for grasp synthesis that is computationally faster than related work and indicates a better grasp success rate in simulated experiments, showing a gain of at least 10% success rate (p < 0.05) in all the tested conditions. The proposed implementation is also able to incorporate arbitrary constraints for grasp ranking, which may include task-specific constraints. Results are reported, followed by a brief discussion of the merits of the proposed methods noted so far.

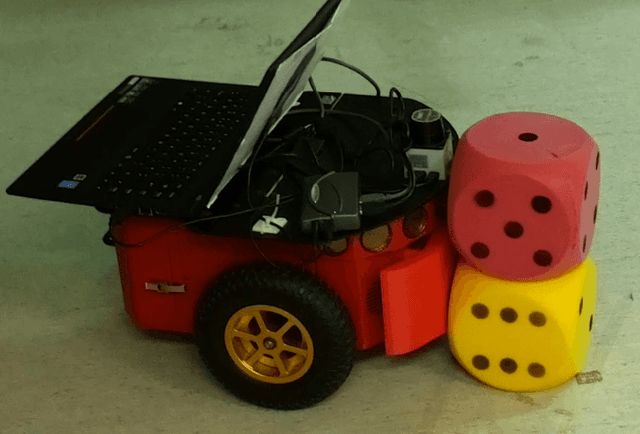

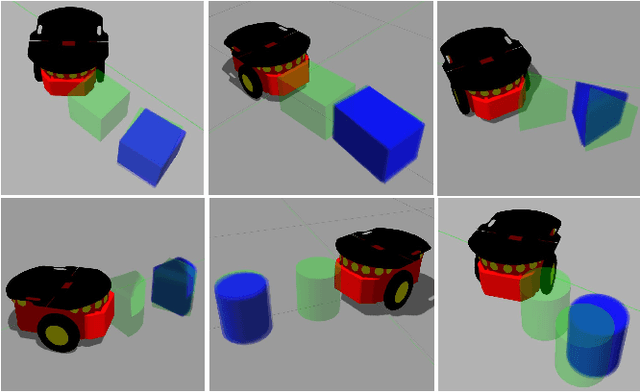

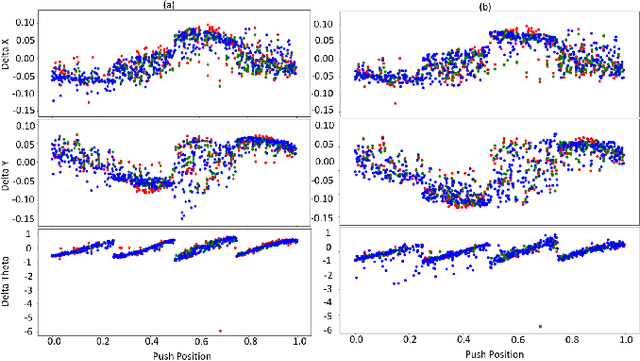

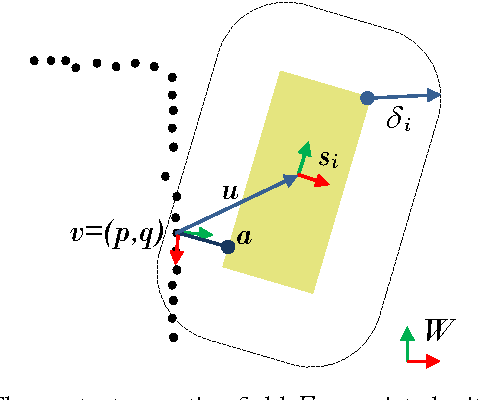

Feature-Based Transfer Learning for Robotic Push Manipulation

May 09, 2019

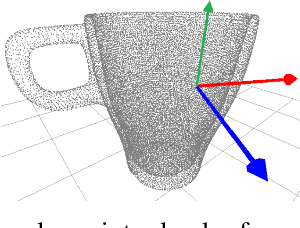

Abstract: This paper presents a data-efficient approach to learning transferable forward models for robotic push manipulation. Our approach extends our previous work on contact-based predictors by leveraging information on the pushed object's local surface features. We test the hypothesis that, by conditioning predictions on local surface features, we can achieve generalisation across objects of different shapes. In doing so, we do not require a CAD model of the object but rather rely on a point cloud object model (PCOM). Our approach involves learning motion models that are specific to contact models. Contact models encode the contacts seen during training time and allow generating similar contacts at prediction time. Predicting on familiar ground reduces the motion models' sample complexity, while using local contact information for prediction increases their transferability. In extensive experiments in simulation, our approach is capable of transfer learning for various test objects, outperforming a baseline predictor. We support those results with a proof of concept on a real robot.

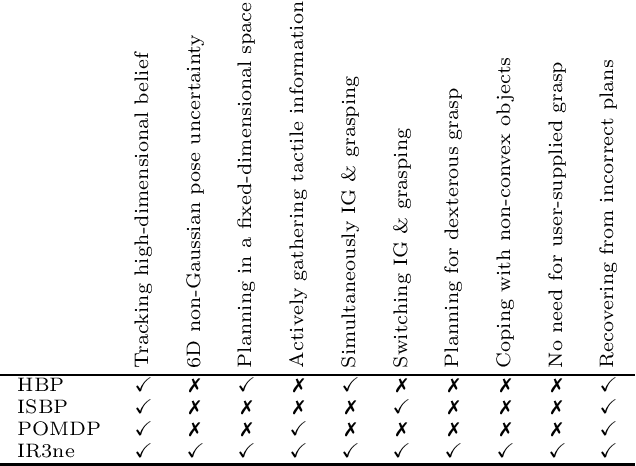

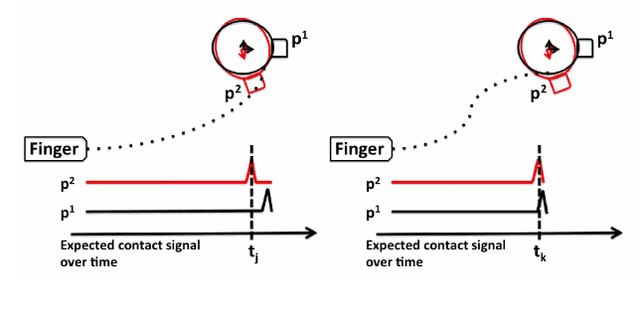

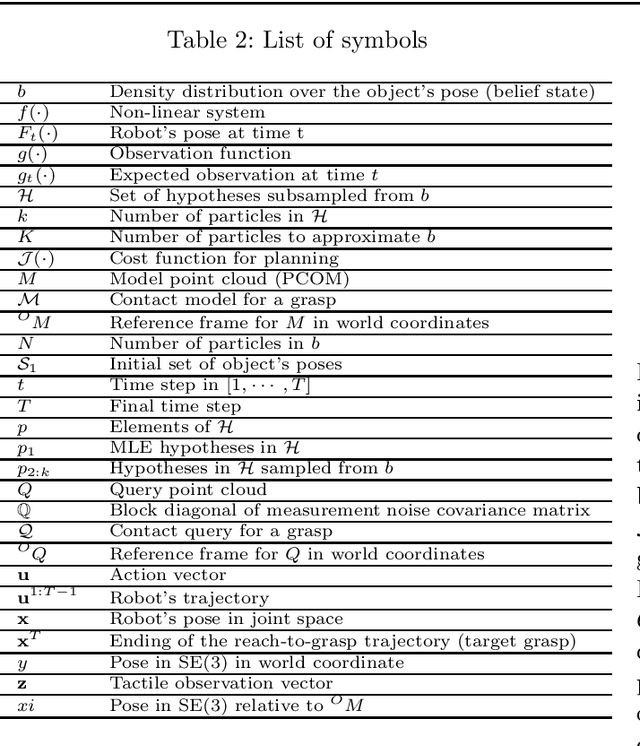

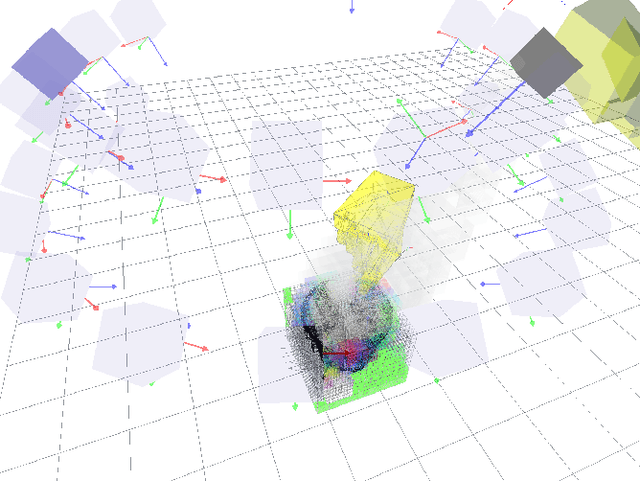

Hypothesis-based Belief Planning for Dexterous Grasping

Mar 13, 2019

Abstract: Belief space planning is a viable alternative to formalise partially observable control problems and, in recent years, its application to robot manipulation problems has grown. However, this planning approach has been tried successfully only on simplified control problems. In this paper, we apply belief space planning to the problem of planning dexterous reach-to-grasp trajectories under object pose uncertainty. In our framework, the robot perceives the object to be grasped on-the-fly as a point cloud and computes a full 6D, non-Gaussian distribution over the object's pose (our belief space). The system places no limitations on the geometry of the object, i.e., non-convex objects can be represented, and does not assume that the point cloud is a complete representation of the object. A plan in the belief space is then created to reach and grasp the object, such that the information value of expected contacts along the trajectory is maximised to compensate for the pose uncertainty. If an unexpected contact occurs when performing the action, this information is used to refine the pose distribution and triggers re-planning. Experimental results show that our planner (IR3ne) improves grasp reliability and compensates for the pose uncertainty such that it doubles the proportion of grasps that succeed on a first attempt.

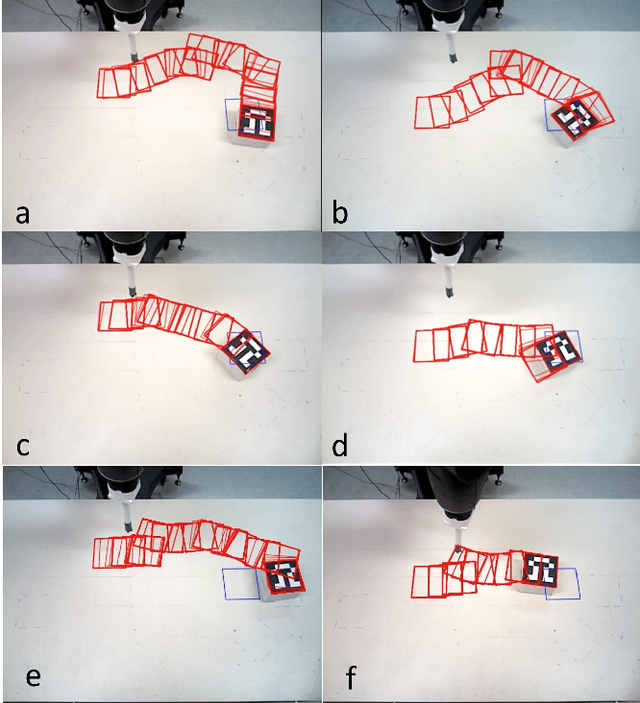

Uncertainty Averse Pushing with Model Predictive Path Integral Control

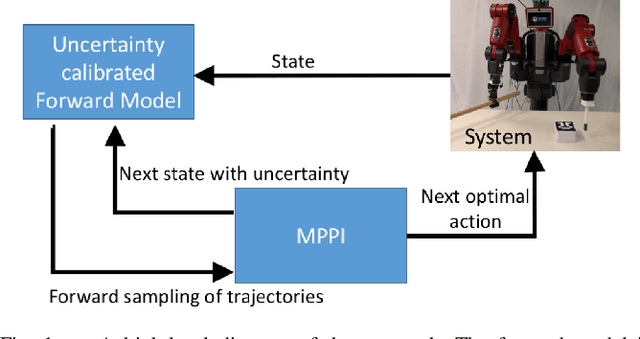

Oct 15, 2017

Abstract: Planning robust robot manipulation requires good forward models that enable robust plans to be found. This work shows how to achieve this using a forward model learned from robot data to plan push manipulations. We explore learning methods (Gaussian Process Regression, and an Ensemble of Mixture Density Networks) that give estimates of the uncertainty in their predictions. These learned models are utilised by a model predictive path integral (MPPI) controller to plan how to push the box to a goal location. The planner avoids regions of high predictive uncertainty in the forward model. This includes both inherent uncertainty in dynamics and meta uncertainty due to limited data. Thus, pushing tasks are completed in a robust fashion with respect to estimated uncertainty in the forward model and without the need for differentiable cost functions. We demonstrate the method on a real robot, and show that learning can outperform physics simulation. Using simulation, we also show the ability to plan uncertainty-averse paths.
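The following is a minimal sketch of the general MPPI idea with an uncertainty penalty, not the authors' implementation. It assumes a one-dimensional state and a hypothetical `model(x, u)` callable that returns a predicted next state and a predictive variance; the names `rollout_cost`, `mppi_update`, `beta` and `lam` are introduced here for illustration. Because the update only weights sampled rollouts by their cost, no gradient of the cost is needed.

```python
import math


def rollout_cost(x0, controls, goal, model, beta=1.0):
    """Cost of one sampled control sequence under a learned forward model.

    `model(x, u)` is assumed to return (predicted next state, predictive variance).
    The variance term penalises passing through uncertain regions of the model.
    """
    x, cost = x0, 0.0
    for u in controls:
        x, var = model(x, u)
        cost += beta * var
    return cost + (x - goal) ** 2  # terminal distance-to-goal cost


def mppi_update(nominal, noises, costs, lam=1.0):
    """Path-integral update: average the sampled perturbations, weighted by
    exp(-cost / lam), and add the result to the nominal control sequence."""
    s_min = min(costs)  # subtract the minimum for numerical stability
    weights = [math.exp(-(s - s_min) / lam) for s in costs]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [
        u + sum(w * eps[t] for w, eps in zip(weights, noises))
        for t, u in enumerate(nominal)
    ]


if __name__ == "__main__":
    # Toy model (an assumption): state moves by u, variance grows with |x|.
    toy_model = lambda x, u: (x + u, 0.1 * abs(x + u))
    nominal = [0.0, 0.0]
    noises = [[0.5, 0.5], [-0.5, -0.5], [1.0, 1.0]]
    costs = [
        rollout_cost(0.0, [u + e for u, e in zip(nominal, eps)], 2.0, toy_model)
        for eps in noises
    ]
    print(mppi_update(nominal, noises, costs, lam=0.5))
```

Raising `beta` makes high-variance rollouts more expensive, so they receive smaller weights and the updated plan drifts away from regions where the forward model is unsure.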

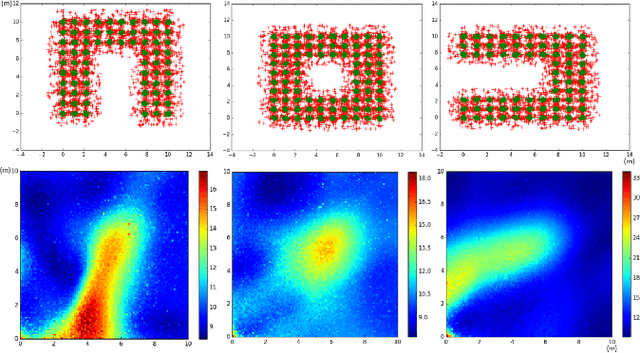

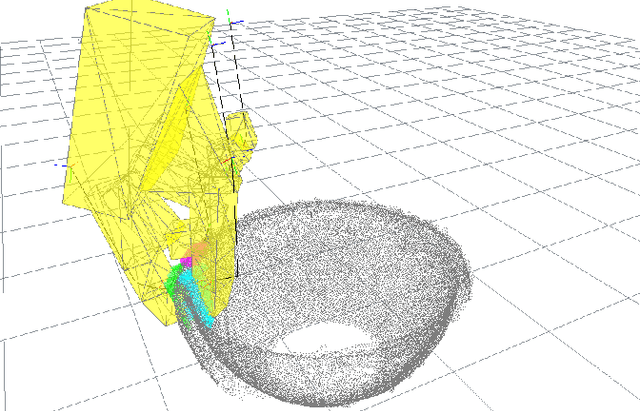

Active vision for dexterous grasping of novel objects

Aug 14, 2017

Abstract: How should a robot direct active vision so as to ensure reliable grasping? We answer this question for the case of dexterous grasping of unfamiliar objects. By dexterous grasping we simply mean grasping by any hand with more than two fingers, such that the robot has some choice about where to place each finger. Such grasps typically fail in one of two ways: either unmodeled objects in the scene cause collisions, or object reconstruction is insufficient to ensure that the grasp points provide a stable force closure. These problems can be solved more easily if active sensing is guided by the anticipated actions. Our approach has three stages. First, we take a single view and generate candidate grasps from the resulting partial object reconstruction. Second, we drive the active vision approach to maximise surface reconstruction quality around the planned contact points. During this phase, the anticipated grasp is continually refined. Third, we direct gaze to improve the safety of the planned reach-to-grasp trajectory. We show, on a dexterous manipulator with a camera on the wrist, that our approach (80.4% success rate) outperforms a randomised algorithm (64.3% success rate).

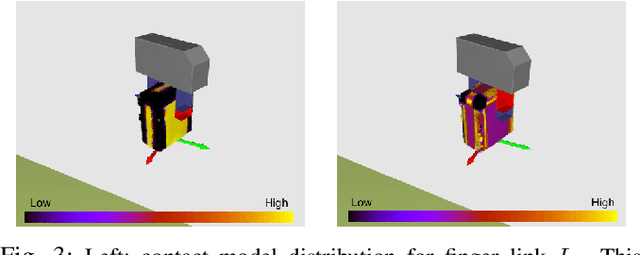

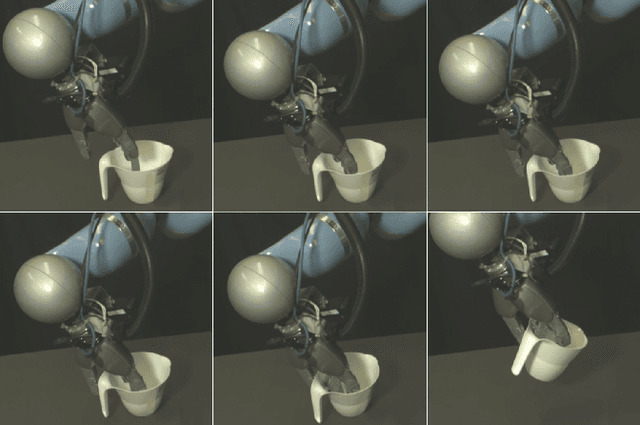

Learning and Inference of Dexterous Grasps for Novel Objects with Underactuated Hands

Sep 24, 2016

Abstract: Recent advances have been made in learning of grasps for fully actuated hands. A typical approach learns the target locations of finger links on the object. When a new object must be grasped, new finger locations are generated, and a collision-free reach-to-grasp trajectory is planned. This assumes that a collision-free trajectory to the final grasp exists. This is not possible with underactuated hands, which cannot be guaranteed to avoid contact, and in fact exploit contacts with the object during grasping, so as to reach an equilibrium state in which the object is held securely. Unfortunately, these contact interactions are i) not directly controllable, and ii) hard to monitor during a real grasp. We overcome these problems so as to permit learning of transferable grasps for underactuated hands. We make two main technical innovations. First, we model contact interactions during the grasp implicitly. We do this by modelling motor commands that lead reliably to the equilibrium state, rather than modelling contact changes themselves. This alters our reach-to-grasp model. Second, we extend our contact model learning algorithm to work with multiple training examples for each grasp type. This requires the ability to learn which parts of the hand reliably interact with the object during a particular grasp. Our approach learns from a rigid body simulation. This enables us to learn how to approach the object and close the underactuated hand from a variety of poses. From nine training grasps on three objects, the method transferred grasps to previously unseen, novel objects that differ significantly from the training objects, with an 80% success rate.
