Dasharadhan Mahalingam

Synthesizing Grasps and Regrasps for Complex Manipulation Tasks

Jan 30, 2025

Abstract: In complex manipulation tasks, e.g., manipulation by pivoting, the motion of the object being manipulated has to satisfy path constraints that can change during the motion. Therefore, a single grasp may not be sufficient for the entire path, and the object may need to be regrasped. Additionally, geometric data for objects from a sensor are usually available in the form of point clouds. Computing grasps and regrasps from point-cloud representations of objects for complex manipulation tasks is a key problem in endowing robots with manipulation capabilities beyond pick-and-place. In this paper, we formalize the problem of grasping/regrasping for complex manipulation tasks with objects represented by (partial) point clouds and present an algorithm to solve it. We represent a complex manipulation task as a sequence of constant screw motions. Given a manipulation plan skeleton expressed as a sequence of constant screw motions, we use a grasp metric to find graspable regions on the object for every constant screw segment. The overlap of the graspable regions for contiguous screws is then used to determine when and how many times the object needs to be regrasped. We present experimental results on point cloud data collected from RGB-D sensors to illustrate our approach.
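The overlap idea in the abstract can be illustrated with a small sketch. This is not the paper's algorithm: it assumes, purely for illustration, that each screw segment's graspable region is given as a set of candidate grasp identifiers, and the function name `plan_grasps` is hypothetical. A running intersection is kept over contiguous segments, and a regrasp is scheduled whenever that intersection becomes empty.

```python
def plan_grasps(regions):
    """Group contiguous screw segments that share at least one common grasp.

    regions: list of sets; regions[i] holds the (hypothetical) grasp IDs that
    are feasible for screw segment i.
    Returns a list of (first_segment, last_segment, shared_grasps) tuples.
    The number of regrasps is len(result) - 1.
    """
    if not regions:
        return []
    if not regions[0]:
        raise ValueError("segment 0 has no graspable region")

    groups = []
    start = 0
    current = set(regions[0])
    for i in range(1, len(regions)):
        if not regions[i]:
            raise ValueError(f"segment {i} has no graspable region")
        overlap = current & regions[i]
        if overlap:
            # The current grasp candidates still cover segment i.
            current = overlap
        else:
            # No common grasp: close the group and regrasp before segment i.
            groups.append((start, i - 1, current))
            start, current = i, set(regions[i])
    groups.append((start, len(regions) - 1, current))
    return groups


if __name__ == "__main__":
    regions = [{1, 2, 3}, {2, 3}, {3, 4}, {5}, {5, 6}]
    plan = plan_grasps(regions)
    print(plan)
    print("regrasps needed:", len(plan) - 1)
```

Because a non-empty intersection over a run of segments implies a non-empty intersection over any sub-run, extending each group as far as possible yields the fewest groups under this set-based simplification.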

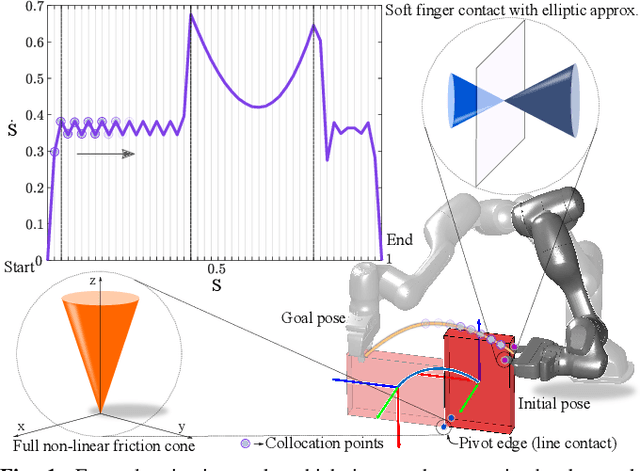

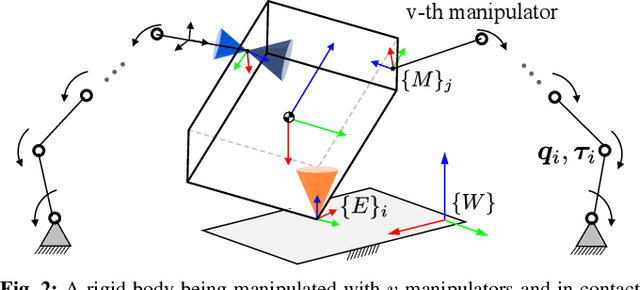

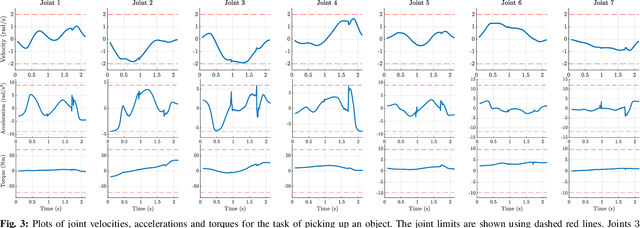

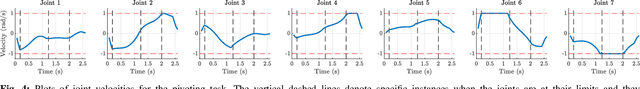

A General Formulation for Path Constrained Time-Optimized Trajectory Planning with Environmental and Object Contacts

Oct 08, 2024

Abstract: A typical manipulation task consists of a manipulator equipped with a gripper to grasp and move an object with constraints on the motion of the hand-held object, which may be due to the nature of the task itself or from object-environment contacts. In this paper, we study the problem of computing joint torques and grasping forces for time-optimal motion of an object, while ensuring that the grasp is not lost and any constraints on the motion of the object, either due to dynamics, environment contact, or no-slip requirements, are also satisfied. We present a second-order cone program (SOCP) formulation of the time-optimal trajectory planning problem that considers nonlinear friction cone constraints at the hand-object and object-environment contacts. Since SOCPs are convex optimization problems that can be solved optimally in polynomial time using interior point methods, we can solve the trajectory optimization problem efficiently. We present simulation results on three examples, including a non-prehensile manipulation task, which show the generality and effectiveness of our approach.
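As a reminder of why friction cones fit an SOCP, the Coulomb friction condition at a single point contact can be written as a second-order cone constraint. The symbols below are assumptions introduced for illustration and are not taken from the paper: $f_n$ is the normal contact force, $f_{t_1}, f_{t_2}$ are the two tangential components, and $\mu$ is the friction coefficient.

```latex
\sqrt{f_{t_1}^{2} + f_{t_2}^{2}} \;\le\; \mu f_n
\quad\Longleftrightarrow\quad
\left\lVert \begin{pmatrix} f_{t_1} \\ f_{t_2} \end{pmatrix} \right\rVert_2 \;\le\; \mu f_n ,
\qquad f_n \ge 0 .
```

This matches the generic second-order cone form $\lVert A x + b \rVert_2 \le c^{\top} x + d$, so the nonlinear cone can be kept exactly rather than replaced by a polyhedral approximation.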

Containerized Vertical Farming Using Cobots

Oct 23, 2023

Abstract: Containerized vertical farming is a type of vertical farming practice using hydroponics in which plants are grown in vertical layers within a mobile shipping container. Space limitations within shipping containers make the automation of different farming operations challenging. In this paper, we explore the use of cobots (i.e., collaborative robots) to automate two key farming operations, namely, the transplantation of saplings and the harvesting of grown plants. Our method uses a single demonstration from a farmer to extract the motion constraints associated with the tasks, namely, transplanting and harvesting, and can then generalize to different instances of the same task. For transplantation, the motion constraint arises during insertion of the sapling within the growing tube, whereas for harvesting, it arises during extraction from the growing tube. We present experimental results to show that using RGBD camera images (obtained from an eye-in-hand configuration) and one demonstration for each task, it is feasible to perform transplantation of saplings and harvesting of leafy greens using a cobot, without task-specific programming.

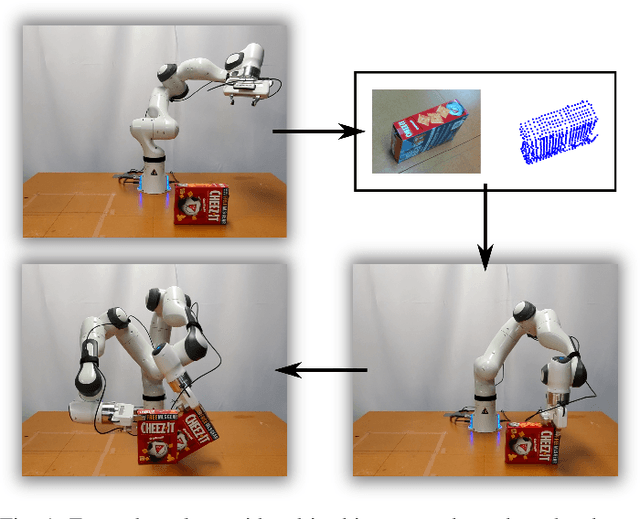

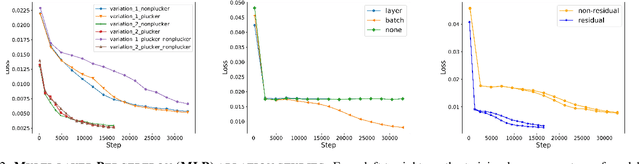

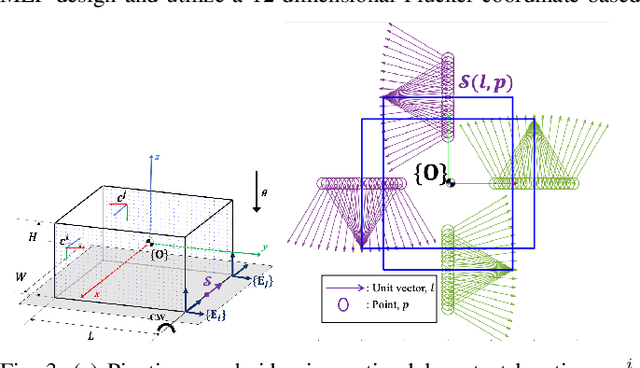

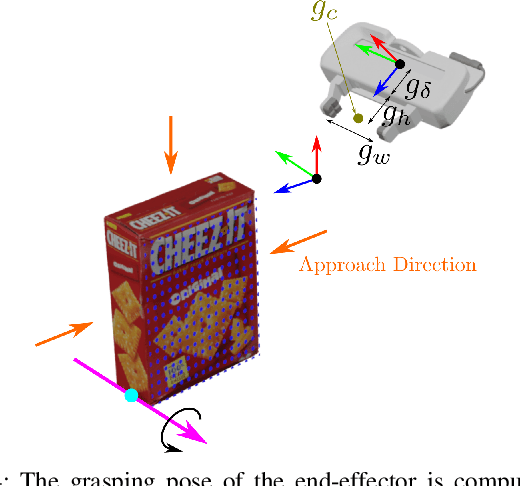

Task-Oriented Grasping with Point Cloud Representation of Objects

Sep 20, 2023

Abstract: In this paper, we study the problem of task-oriented grasp synthesis from partial point cloud data using an eye-in-hand camera configuration. In task-oriented grasp synthesis, a grasp has to be selected so that the object is not lost during manipulation and so that adequate force/moment can be applied to perform the task. We formalize the notion of a gross manipulation task as a constant screw motion (or a sequence of constant screw motions) to be applied to the object after grasping. Using this notion of task, and a corresponding grasp quality metric developed in our prior work, we use a neural network to approximate a function for predicting the grasp quality metric on a cuboid shape. We show that by using a bounding box obtained from the partial point cloud of an object, and the grasp quality metric mentioned above, we can generate a good grasping region on the bounding box that can be used to compute an antipodal grasp on the actual object. Our algorithm does not use any manually labeled data or grasping simulator, thus making it very efficient to implement and integrate with screw linear interpolation-based motion planners. We present simulation as well as experimental results that show the effectiveness of our approach.
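For readers unfamiliar with the term, the sketch below shows the textbook antipodal test for a two-finger grasp: the line joining the two contacts must lie inside both friction cones. It is not the grasp metric or the neural network from the paper. The function name `is_antipodal`, the use of outward unit surface normals, and the friction coefficient value are all assumptions made for illustration.

```python
import math


def is_antipodal(p1, n1, p2, n2, mu=0.5):
    """Check the standard antipodal condition for two point contacts.

    p1, p2: contact positions (3-tuples).
    n1, n2: outward unit surface normals at the contacts (3-tuples).
    mu:     assumed Coulomb friction coefficient.
    """
    line = [b - a for a, b in zip(p1, p2)]
    length = math.sqrt(sum(c * c for c in line))
    if length == 0.0:
        return False  # coincident contacts cannot form a two-finger grasp
    u = [c / length for c in line]  # unit vector from contact 1 to contact 2

    cos_limit = math.cos(math.atan(mu))  # cosine of the friction cone half-angle

    # At contact 1 the finger pushes along -n1; the grasp line is u.
    in_cone_1 = -sum(a * b for a, b in zip(u, n1)) >= cos_limit
    # At contact 2 the finger pushes along -n2; the grasp line is -u.
    in_cone_2 = sum(a * b for a, b in zip(u, n2)) >= cos_limit
    return in_cone_1 and in_cone_2


if __name__ == "__main__":
    # Opposite faces of a box: antipodal.
    print(is_antipodal((-1, 0, 0), (-1, 0, 0), (1, 0, 0), (1, 0, 0)))
    # Adjacent faces of a box: not antipodal for mu = 0.5.
    print(is_antipodal((-1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, 1, 0)))
```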

Human-Guided Planning for Complex Manipulation Tasks Using the Screw Geometry of Motion

Sep 24, 2022

Abstract: In this paper, we present a novel method of motion planning for performing complex manipulation tasks by using human demonstration and exploiting the screw geometry of motion. We consider complex manipulation tasks where there are constraints on the motion of the end effector of the robot. Examples of such tasks include opening a door, opening a drawer, transferring granular material from one container to another with a spoon, and loading dishes into a dishwasher. Our approach consists of two steps: First, using the fact that a motion in the task space of the robot can be approximated by a sequence of constant screw motions, we segment a human demonstration into a sequence of constant screw motions. Second, we use the segmented screws to generate motion plans via screw-linear interpolation for other instances of the same task. The use of screw segmentation allows us to capture the invariants of the demonstrations in a coordinate-free fashion, thus allowing us to plan for different task instances from just one example. We present extensive experimental results on a variety of manipulation scenarios showing that our method can be used across a wide range of manipulation tasks.
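Screw-linear interpolation between two poses can be sketched with unit dual quaternions. The code below is a generic, minimal illustration of the technique and not the authors' planner; all function names (`pose_to_dq`, `sclerp`, and so on) are hypothetical, quaternions are stored as `(w, x, y, z)` tuples, and a pose is assumed to mean "rotate, then translate".

```python
import math


def qmul(a, b):
    """Hamilton product of two quaternions (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw*bw - ax*bx - ay*by - az*bz,
            aw*bx + ax*bw + ay*bz - az*by,
            aw*by - ax*bz + ay*bw + az*bx,
            aw*bz + ax*by - ay*bx + az*bw)


def qconj(q):
    return (q[0], -q[1], -q[2], -q[3])


def pose_to_dq(axis, angle, t):
    """Unit dual quaternion (real, dual) for rotation about a unit axis, then translation t."""
    s = math.sin(angle / 2)
    r = (math.cos(angle / 2), axis[0]*s, axis[1]*s, axis[2]*s)
    d = tuple(0.5 * c for c in qmul((0.0, *t), r))
    return r, d


def dq_mul(a, b):
    real = qmul(a[0], b[0])
    dual = tuple(x + y for x, y in zip(qmul(a[0], b[1]), qmul(a[1], b[0])))
    return real, dual


def dq_translation(dq):
    """Translation vector encoded by a unit dual quaternion."""
    r, d = dq
    return tuple(2 * c for c in qmul(d, qconj(r))[1:])


def sclerp(dq0, dq1, tau):
    """Pose at fraction tau in [0, 1] along the constant screw motion from dq0 to dq1."""
    # Relative motion expressed in the frame of the start pose.
    r, d = dq_mul((qconj(dq0[0]), qconj(dq0[1])), dq1)
    if r[0] < 0:  # pick the shorter rotation
        r, d = tuple(-c for c in r), tuple(-c for c in d)
    s = math.sqrt(r[1]**2 + r[2]**2 + r[3]**2)  # sin(theta / 2)
    if s < 1e-9:
        # Pure translation: scale the dual part linearly.
        power = ((1.0, 0.0, 0.0, 0.0), (0.0, tau*d[1], tau*d[2], tau*d[3]))
    else:
        half = math.atan2(s, r[0])              # theta / 2
        l = [c / s for c in r[1:]]              # screw axis direction
        dist = -2.0 * d[0] / s                  # translation along the axis
        m = [(d[i+1] - 0.5 * dist * r[0] * l[i]) / s for i in range(3)]  # axis moment
        sh, ch = math.sin(tau * half), math.cos(tau * half)
        real = (ch, *(sh * c for c in l))
        dual = (-0.5 * tau * dist * sh,
                *(0.5 * tau * dist * ch * l[i] + sh * m[i] for i in range(3)))
        power = (real, dual)
    return dq_mul(dq0, power)


if __name__ == "__main__":
    start = pose_to_dq((0, 0, 1), 0.0, (0, 0, 0))
    goal = pose_to_dq((0, 0, 1), math.pi, (2, 0, 0))
    print(dq_translation(sclerp(start, goal, 0.5)))
```

In the usage example the goal pose is a half-turn about a vertical axis through the point (1, 0, 0), so the interpolated origin travels along a circular arc around that axis rather than along a straight line, which is the property that lets a single screw segment respect a hinge-like constraint.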

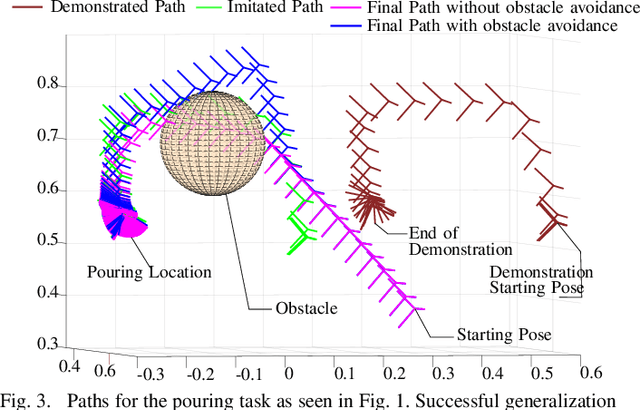

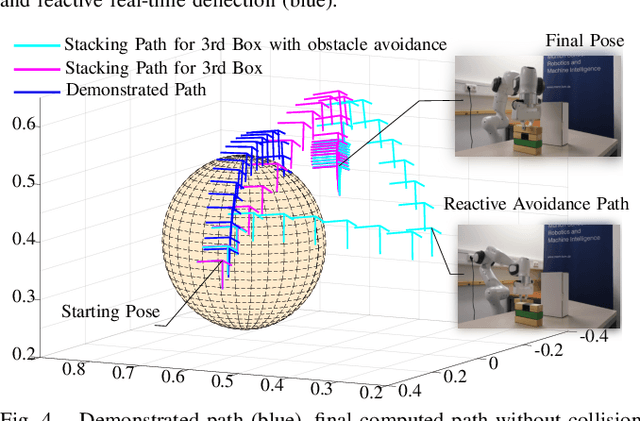

Coordinate Invariant User-Guided Constrained Path Planning with Reactive Rapidly Expanding Plane-Oriented Escaping Trees

Mar 20, 2022

Abstract: As collaborative robots move closer to human environments, motion generation and reactive planning strategies that allow for elaborate task execution with minimal, easy-to-implement guidance whilst coping with changes in the environment are of paramount importance. In this paper, we present a novel approach for generating real-time motion plans for point-to-point tasks using a single successful human demonstration. Our approach is based on screw linear interpolation, which allows us to respect the underlying geometric constraints that characterize the task and are implicitly present in the demonstration. We also integrate an original reactive collision avoidance approach with our planner. We present extensive experimental results to demonstrate that with our approach, by using a single demonstration of moving one block, we can generate motion plans for complex tasks like stacking multiple blocks (in a dynamic environment). Analogous generalization abilities are also shown for tasks like pouring and loading shelves. For the pouring task, we also show that a demonstration given for one-armed pouring can be used for planning pouring with a dual-armed manipulator of different kinematic structure.
