Aditya Patankar

Transferring Kinesthetic Demonstrations across Diverse Objects for Manipulation Planning

Mar 13, 2025

Abstract: Given a demonstration of a complex manipulation task, such as pouring liquid from one container to another, we seek to generate a motion plan for a new task instance involving objects with different geometries. This is non-trivial since we need to simultaneously ensure that the implicit motion constraints are satisfied (e.g., the glass is held upright while moving), the motion is collision-free, and the task is successful (e.g., the liquid is poured into the target container). We solve this problem by identifying the positions of critical locations and associating reference frames (called motion transfer frames) with the manipulated object and the target, selected based on their geometries and the task at hand. By tracking and transferring the path of the motion transfer frames, we generate motion plans for arbitrary task instances with objects of different geometries and poses. We show results from simulation as well as robot experiments on physical objects to evaluate the effectiveness of our solution.
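The idea of transferring the path of a frame from a demonstration to a new task instance can be illustrated with a small sketch. This is a planar (SE(2)) simplification and not the method from the paper: it assumes the demonstrated path is stored relative to a frame on the target object and is simply re-expressed relative to the new target frame. The function names `compose`, `inverse`, and `transfer_path` are hypothetical.

```python
import math

def compose(a, b):
    """Compose two planar poses (x, y, theta): apply b in the frame of a."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            at + bt)

def inverse(a):
    """Inverse of a planar pose: (R, t) -> (R^T, -R^T t)."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), s * ax - c * ay, -at)

def transfer_path(demo_path, demo_target, new_target):
    """Re-express a demonstrated path relative to a new target frame.

    Assumption (illustrative only): each demonstrated pose is first written
    relative to the demonstration's target frame, then mapped into the world
    using the new target frame.
    """
    inv_demo = inverse(demo_target)
    return [compose(new_target, compose(inv_demo, pose)) for pose in demo_path]

# Hypothetical example: the target moves and is rotated by 90 degrees.
demo_path = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
new_path = transfer_path(demo_path, (1.0, 0.0, 0.0), (2.0, 0.0, math.pi / 2))
for pose in new_path:
    print(pose)
```

A real system would work with 3D poses and would also have to check collisions and the implicit motion constraints mentioned in the abstract; the sketch covers only the change of reference frame.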

Synthesizing Grasps and Regrasps for Complex Manipulation Tasks

Jan 30, 2025

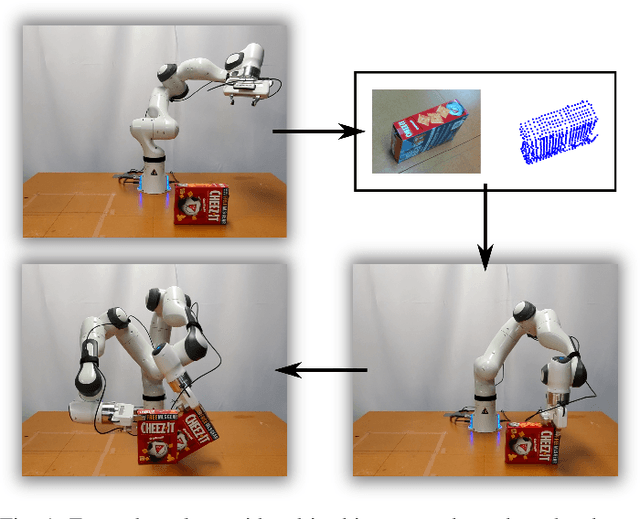

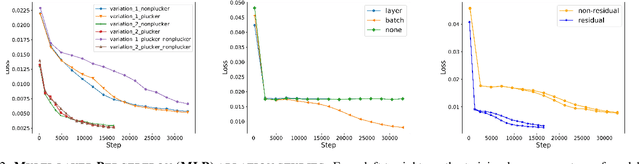

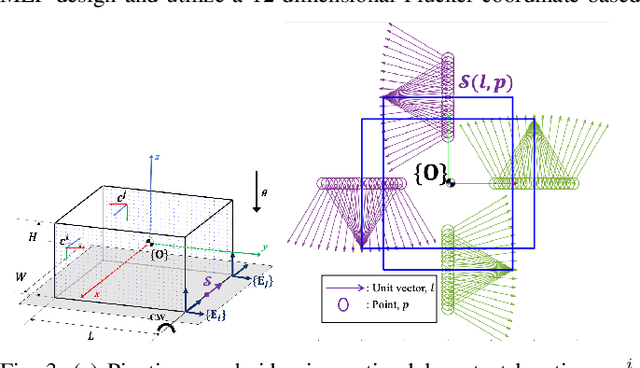

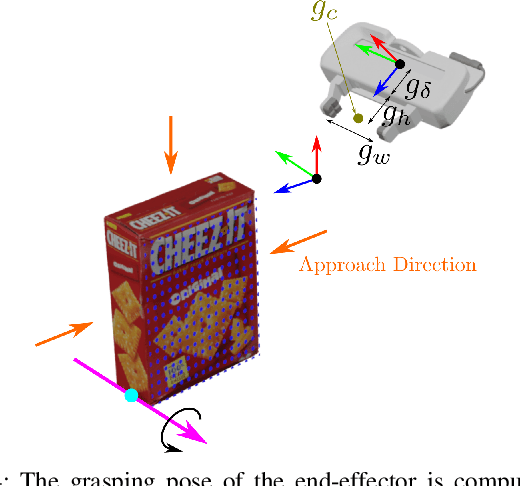

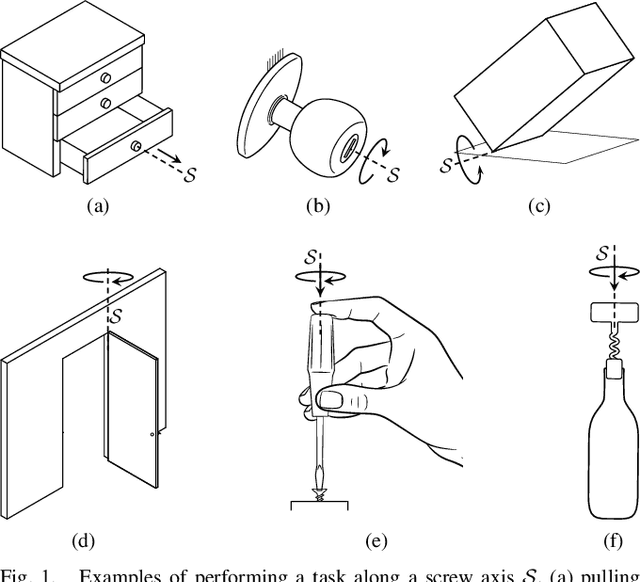

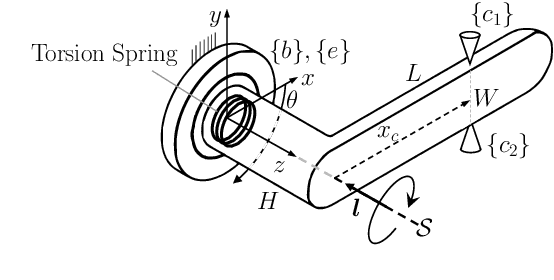

Abstract: In complex manipulation tasks, e.g., manipulation by pivoting, the motion of the object being manipulated has to satisfy path constraints that can change during the motion. Therefore, a single grasp may not be sufficient for the entire path, and the object may need to be regrasped. Additionally, geometric data for objects from a sensor are usually available in the form of point clouds. Computing grasps and regrasps from point-cloud representations of objects for complex manipulation tasks is a key problem in endowing robots with manipulation capabilities beyond pick-and-place. In this paper, we formalize the problem of grasping/regrasping for complex manipulation tasks with objects represented by (partial) point clouds and present an algorithm to solve it. We represent a complex manipulation task as a sequence of constant screw motions. Given a manipulation plan skeleton in the form of a sequence of constant screw motions, we use a grasp metric to find graspable regions on the object for every constant screw segment. The overlap of the graspable regions for contiguous screws is then used to determine when and how many times the object needs to be regrasped. We present experimental results on point cloud data collected from RGB-D sensors to illustrate our approach.
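The overlap test described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' algorithm: each graspable region is assumed to be a set of candidate grasp identifiers (for example, point indices), and a greedy pass keeps the current grasp as long as the running intersection stays non-empty. The function name `plan_regrasps` is hypothetical.

```python
def plan_regrasps(regions):
    """Group contiguous screw segments that share at least one grasp.

    regions: list of non-empty sets, one per constant screw segment, holding
             the ids of grasps that are acceptable for that segment.
    Returns a list of (first_segment, last_segment, shared_grasps) tuples;
    a regrasp is needed between consecutive tuples.
    """
    if not regions or any(len(r) == 0 for r in regions):
        raise ValueError("every segment needs at least one graspable candidate")

    plan = []
    start = 0
    shared = set(regions[0])
    for i in range(1, len(regions)):
        overlap = shared & regions[i]
        if overlap:
            # The current grasp set still works for segment i.
            shared = overlap
        else:
            # No common grasp: close the group and regrasp before segment i.
            plan.append((start, i - 1, shared))
            start, shared = i, set(regions[i])
    plan.append((start, len(regions) - 1, shared))
    return plan

# Hypothetical graspable regions for five screw segments.
regions = [{1, 2, 3}, {2, 3}, {3, 4}, {5, 6}, {6}]
plan = plan_regrasps(regions)
print(plan)
print("regrasps needed:", len(plan) - 1)
```

In this example the first three segments share grasp 3 and the last two share grasp 6, so one regrasp is needed between segments 2 and 3 (zero-indexed).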

Screw Geometry Meets Bandits: Incremental Acquisition of Demonstrations to Generate Manipulation Plans

Oct 23, 2024

Abstract: In this paper, we study the problem of methodically obtaining a sufficient set of kinesthetic demonstrations, one at a time, such that a robot can be confident of its ability to perform a complex manipulation task in a given region of its workspace. Although Learning from Demonstrations has been an active area of research, the problems of checking whether a set of demonstrations is sufficient and systematically seeking additional demonstrations have remained open. We present a novel approach to address these open problems using (i) a screw geometric representation to generate manipulation plans from demonstrations, which makes the sufficiency of a set of demonstrations measurable; (ii) a sampling strategy based on PAC-learning from multi-armed bandit optimization to evaluate the robot's ability to generate manipulation plans in a subregion of its task space; and (iii) a heuristic to seek additional demonstrations from areas of weakness. Thus, we present an approach for the robot to incrementally and actively ask for new demonstration examples until the robot can assess with high confidence that it can perform the task successfully. We present experimental results on two example manipulation tasks, namely, pouring and scooping, to illustrate our approach. A short video on the method: https://youtu.be/R-qICICdEos
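To give a feel for what a PAC-style guarantee on a subregion means, the sketch below uses the standard two-sided Hoeffding bound to compute how many sampled task instances are enough to estimate a success rate within `eps` with probability at least `1 - delta`. This is a generic textbook bound chosen for illustration; the abstract does not state which bound or bandit algorithm the authors use, and the names `hoeffding_samples` and `region_is_covered` are hypothetical.

```python
import math

def hoeffding_samples(eps, delta):
    """Samples needed so that |empirical rate - true rate| <= eps
    holds with probability at least 1 - delta (two-sided Hoeffding bound)."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * eps ** 2))

def region_is_covered(outcomes, required_rate, eps):
    """Conservative check: the empirical success rate, lowered by eps,
    must still meet the required rate.

    outcomes: list of booleans, one per sampled task instance in the region
              (True if a plan could be generated from the demonstrations).
    """
    rate = sum(outcomes) / len(outcomes)
    return rate - eps >= required_rate

n = hoeffding_samples(eps=0.1, delta=0.05)
print(n)  # 185

# Hypothetical outcomes for two subregions of the task space.
strong_region = [True] * 180 + [False] * 5
weak_region = [True] * 150 + [False] * 35
print(region_is_covered(strong_region, required_rate=0.85, eps=0.1))
print(region_is_covered(weak_region, required_rate=0.85, eps=0.1))
```

Under this reading, a subregion that fails the check would be a candidate "area of weakness" from which to request another demonstration.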

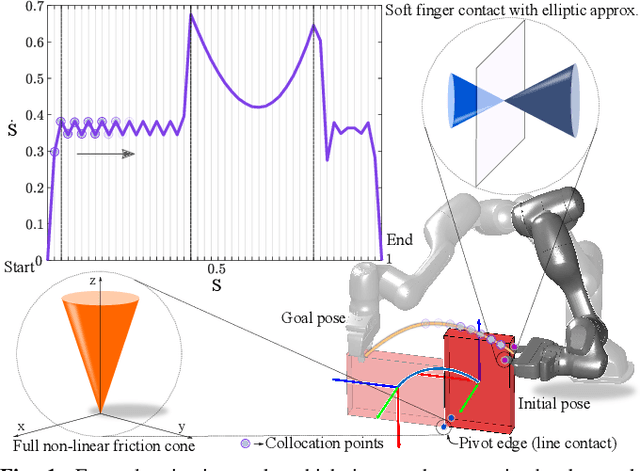

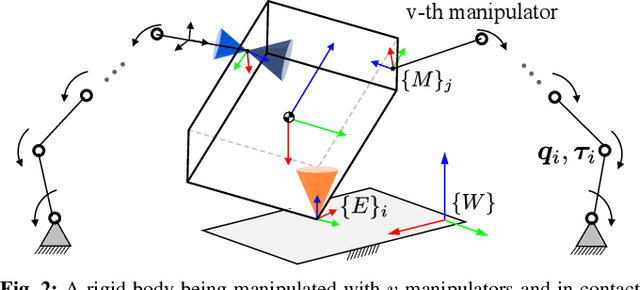

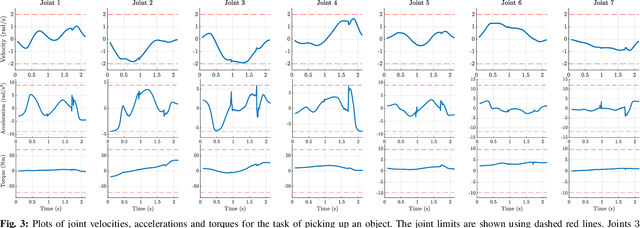

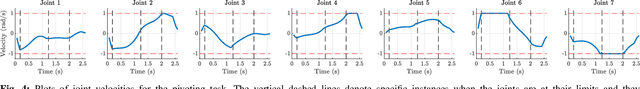

A General Formulation for Path Constrained Time-Optimized Trajectory Planning with Environmental and Object Contacts

Oct 08, 2024

Abstract: A typical manipulation task consists of a manipulator equipped with a gripper grasping and moving an object, with constraints on the motion of the hand-held object that may arise from the nature of the task itself or from object-environment contacts. In this paper, we study the problem of computing joint torques and grasping forces for time-optimal motion of an object, while ensuring that the grasp is not lost and that any constraints on the motion of the object, whether due to dynamics, environment contact, or no-slip requirements, are also satisfied. We present a second-order cone program (SOCP) formulation of the time-optimal trajectory planning problem that considers nonlinear friction cone constraints at the hand-object and object-environment contacts. Since SOCPs are convex optimization problems that can be solved optimally in polynomial time using interior point methods, we can solve the trajectory optimization problem efficiently. We present simulation results on three examples, including a non-prehensile manipulation task, that show the generality and effectiveness of our approach.
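The friction cone constraint mentioned in the abstract is a second-order cone constraint: under the Coulomb model, the tangential component of a contact force may not exceed the friction coefficient times the normal component. The sketch below only checks this membership condition for a single contact; it does not solve an SOCP. The function name `in_friction_cone` and the convention that `normal` is a unit vector pointing into the object are assumptions made for the example.

```python
import math

def in_friction_cone(force, normal, mu, tol=1e-9):
    """Return True if `force` satisfies ||f_t|| <= mu * f_n.

    force:  contact force as a 3-vector
    normal: unit contact normal (assumed to point into the object)
    mu:     Coulomb friction coefficient
    """
    # Normal component of the force.
    f_n = sum(f * n for f, n in zip(force, normal))
    # Tangential component: what is left after removing the normal part.
    tangential = [f - f_n * n for f, n in zip(force, normal)]
    f_t = math.sqrt(sum(t * t for t in tangential))
    return f_t <= mu * f_n + tol

normal = (0.0, 0.0, 1.0)
print(in_friction_cone((0.3, 0.0, 1.0), normal, mu=0.5))   # inside the cone
print(in_friction_cone((0.6, 0.0, 1.0), normal, mu=0.5))   # would slip
print(in_friction_cone((0.0, 0.0, -1.0), normal, mu=0.5))  # pulling, not pushing
```

Because the set of forces satisfying this inequality is convex, constraints of this form can be handed directly to an SOCP solver instead of being linearized.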

Containerized Vertical Farming Using Cobots

Oct 23, 2023

Abstract: Containerized vertical farming is a type of vertical farming practice using hydroponics in which plants are grown in vertical layers within a mobile shipping container. Space limitations within shipping containers make the automation of different farming operations challenging. In this paper, we explore the use of cobots (i.e., collaborative robots) to automate two key farming operations, namely, the transplantation of saplings and the harvesting of grown plants. Our method uses a single demonstration from a farmer to extract the motion constraints associated with the tasks, namely, transplanting and harvesting, and can then generalize to different instances of the same task. For transplantation, the motion constraint arises during insertion of the sapling within the growing tube, whereas for harvesting, it arises during extraction from the growing tube. We present experimental results to show that using RGBD camera images (obtained from an eye-in-hand configuration) and one demonstration for each task, it is feasible to perform transplantation of saplings and harvesting of leafy greens using a cobot, without task-specific programming.

Task-Oriented Grasping with Point Cloud Representation of Objects

Sep 20, 2023

Abstract: In this paper, we study the problem of task-oriented grasp synthesis from partial point cloud data using an eye-in-hand camera configuration. In task-oriented grasp synthesis, a grasp has to be selected so that the object is not lost during manipulation and adequate force/moment can be applied to perform the task. We formalize the notion of a gross manipulation task as a constant screw motion (or a sequence of constant screw motions) to be applied to the object after grasping. Using this notion of task, and a corresponding grasp quality metric developed in our prior work, we use a neural network to approximate a function for predicting the grasp quality metric on a cuboid shape. We show that by using a bounding box obtained from the partial point cloud of an object, and the grasp quality metric mentioned above, we can generate a good grasping region on the bounding box that can be used to compute an antipodal grasp on the actual object. Our algorithm does not use any manually labeled data or grasping simulator, thus making it very efficient to implement and integrate with screw linear interpolation-based motion planners. We present simulation as well as experimental results that show the effectiveness of our approach.
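A constant screw motion, as used in the abstract, is a rotation about a fixed axis combined with a translation along that axis proportional to the rotation angle. The sketch below computes the rigid displacement for a given angle using Rodrigues' formula. It is a generic kinematics illustration and not code from the paper; the names `screw_displacement` and `apply_displacement` are hypothetical, and `axis` is assumed to be a unit vector.

```python
import math

def screw_displacement(axis, point, pitch, theta):
    """Rigid displacement (R, p) of a screw motion.

    axis:  unit direction of the screw axis
    point: any point on the screw axis
    pitch: translation along the axis per radian of rotation
    theta: rotation angle in radians
    """
    ux, uy, uz = axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # Rodrigues' rotation formula written out element by element.
    R = [
        [c + ux * ux * v,      ux * uy * v - uz * s, ux * uz * v + uy * s],
        [uy * ux * v + uz * s, c + uy * uy * v,      uy * uz * v - ux * s],
        [uz * ux * v - uy * s, uz * uy * v + ux * s, c + uz * uz * v],
    ]
    # Translation: p = (I - R) q + pitch * theta * u, with q on the axis.
    Rq = [sum(R[i][j] * point[j] for j in range(3)) for i in range(3)]
    p = [point[i] - Rq[i] + pitch * theta * axis[i] for i in range(3)]
    return R, p

def apply_displacement(R, p, x):
    """Apply the displacement (R, p) to a 3D point x."""
    return [sum(R[i][j] * x[j] for j in range(3)) + p[i] for i in range(3)]

# Quarter turn about the z-axis through the origin, with a small pitch.
R, p = screw_displacement((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), 0.1, math.pi / 2)
print(apply_displacement(R, p, [1.0, 0.0, 0.0]))
```

Sampling `theta` from zero up to the total rotation angle, with the axis and pitch held fixed, traces out one constant screw segment; a sequence of such segments with different axes would represent a multi-step task.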

Computing a Task-Dependent Grasp Metric Using Second Order Cone Programs

Apr 25, 2021

Abstract: Evaluating a grasp generated by a set of hand-object contact locations is a key component of many grasp planning algorithms. In this paper, we present a novel second order cone program (SOCP) based optimization formulation for evaluating a grasp's ability to apply wrenches to generate a linear motion along a given direction and/or an angular motion about the given direction. Our quality measure can be computed efficiently, since the SOCP is a convex optimization problem, which can be solved optimally with interior point methods. A key feature of our approach is that we can consider the effect of contact wrenches from any contact of the object with the environment. This is different from the extant literature, where only the effect of finger-object contacts is considered. Exploiting the environmental contact is useful in many manipulation scenarios, either to enhance the dexterity of simple hands or to improve the payload capability of the manipulator. In contrast to most existing approaches, our approach also takes into account the practical constraint that the maximum contact force that can be applied at a finger-object contact can be different for each contact. We can also include the effect of external forces like gravity, as well as the joint torque constraints of the fingers/manipulators. Furthermore, for a given motion path as a constant screw motion or a sequence of constant screw motions, we can discretize the path and compute a global grasp metric to accomplish the whole task with a chosen set of finger-object contact locations.

Motion and Force Planning for Manipulating Heavy Objects by Pivoting

Dec 10, 2020

Abstract: Manipulation of objects by exploiting their contact with the environment can enhance both the dexterity and payload capability of robotic manipulators. A common way to manipulate heavy objects beyond the payload capability of a robot is to use a sequence of pivoting motions, wherein an object is moved while some contact points between the object and a support surface are kept fixed. The goal of this paper is to develop an algorithmic approach for automated plan generation for object manipulation with a sequence of pivoting motions. A plan for manipulating a heavy object consists of a sequence of joint angles of the manipulator, the corresponding object poses, as well as the joint torques required to move the object. The constraint of maintaining object contact with the ground during manipulation results in nonlinear constraints in the configuration space of the robot, which are challenging for motion planning algorithms. Exploiting the fact that pivoting motion corresponds to movements in a subgroup of the group of rigid body motions, SE(3), we present a novel task-space based planning approach for computing a motion plan for both the manipulator and the object while satisfying contact constraints. We also combine our motion planning algorithm with a grasping force synthesis algorithm to ensure that friction constraints at the contacts and actuator torque constraints are satisfied. We present simulation results with a dual-armed Baxter robot to demonstrate our approach.
