I. V. Ramakrishnan

Transferring Kinesthetic Demonstrations across Diverse Objects for Manipulation Planning

Mar 13, 2025

Abstract:Given a demonstration of a complex manipulation task such as pouring liquid from one container to another, we seek to generate a motion plan for a new task instance involving objects with different geometries. This is non-trivial since we need to simultaneously ensure that the implicit motion constraints are satisfied (e.g., the glass is held upright while moving), the motion is collision-free, and the task is successful (e.g., liquid is poured into the target container). We solve this problem by identifying the positions of critical locations and associating reference frames (called motion transfer frames) with the manipulated object and the target, selected based on their geometries and the task at hand. By tracking and transferring the path of the motion transfer frames, we generate motion plans for arbitrary task instances with objects of different geometries and poses. We show results from simulation as well as robot experiments on physical objects to evaluate the effectiveness of our solution.
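The idea of transferring the path of a frame from a demonstration to a new task instance can be illustrated with a minimal planar sketch. It assumes that each pose is a tuple `(x, y, theta)` and that the demonstrated path is simply re-expressed relative to the target frame; the function names `compose`, `inverse`, and `transfer_path` are hypothetical, and the geometry-based selection of motion transfer frames described in the abstract is not modeled here.

```python
import math

def compose(a, b):
    """Compose two planar poses (x, y, theta): the result is a followed by b."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)

def inverse(a):
    """Invert a planar pose so that compose(a, inverse(a)) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), s * ax - c * ay, -at)

def transfer_path(demo_path, demo_target, new_target):
    """Re-express a demonstrated path relative to a new target frame.

    Assumption: the path is stored in world coordinates and should keep the
    same relative motion with respect to the target frame.
    """
    inv_target = inverse(demo_target)
    return [compose(new_target, compose(inv_target, pose)) for pose in demo_path]

# Hypothetical demonstration: the object frame moves toward a target at (1, 0).
demo_path = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
new_path = transfer_path(demo_path, (1.0, 0.0, 0.0), (3.0, 2.0, 0.0))
```

With the new target at `(3, 2)`, the transferred path ends at the new target with the same approach direction as in the demonstration.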

Data Augmentation for Automated Adaptive Rodent Training

Oct 23, 2024

Abstract:Fully optimized automation of behavioral training protocols for lab animals like rodents has long been a coveted goal for researchers. It is an otherwise labor-intensive and time-consuming process that demands close interaction between the animal and the researcher. In this work, we used a data-driven approach to optimize the way rodents are trained in labs. In pursuit of our goal, we looked at data augmentation, a technique that scales well in data-poor environments. Using data augmentation, we built several artificial rodent models, which in turn would be used to build an efficient and automatic trainer. Then we developed a novel similarity metric based on the action probability distribution to measure the behavioral resemblance of our models to that of real rodents.

Screw Geometry Meets Bandits: Incremental Acquisition of Demonstrations to Generate Manipulation Plans

Oct 23, 2024

Abstract:In this paper, we study the problem of methodically obtaining a sufficient set of kinesthetic demonstrations, one at a time, such that a robot can be confident of its ability to perform a complex manipulation task in a given region of its workspace. Although Learning from Demonstrations has been an active area of research, the problems of checking whether a set of demonstrations is sufficient, and systematically seeking additional demonstrations have remained open. We present a novel approach to address these open problems using (i) a screw geometric representation to generate manipulation plans from demonstrations, which makes the sufficiency of a set of demonstrations measurable; (ii) a sampling strategy based on PAC-learning from multi-armed bandit optimization to evaluate the robot's ability to generate manipulation plans in a subregion of its task space; and (iii) a heuristic to seek additional demonstration from areas of weakness. Thus, we present an approach for the robot to incrementally and actively ask for new demonstration examples until the robot can assess with high confidence that it can perform the task successfully. We present experimental results on two example manipulation tasks, namely, pouring and scooping, to illustrate our approach. A short video on the method: https://youtu.be/R-qICICdEos
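To give a feel for what a PAC-style evaluation of a subregion involves, the sketch below uses the generic Hoeffding bound to decide how many task instances to sample before trusting an empirical success rate. This is a textbook bound, not necessarily the sampling strategy used in the paper; `can_plan` and `sample_task` are hypothetical callbacks standing in for the planner and the subregion sampler.

```python
import math
import random

def hoeffding_sample_count(epsilon, delta):
    """Samples needed so the empirical success rate is within epsilon of the
    true rate with probability at least 1 - delta (two-sided Hoeffding bound)."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))

def estimate_success_rate(can_plan, sample_task, epsilon, delta, rng):
    """Estimate how often a plan can be generated in a subregion.

    can_plan(task) -> bool and sample_task(rng) -> task are assumed callbacks.
    Returns the empirical success rate and the number of samples used.
    """
    n = hoeffding_sample_count(epsilon, delta)
    successes = sum(1 for _ in range(n) if can_plan(sample_task(rng)))
    return successes / n, n

# Hypothetical subregion: tasks are points in [0, 1); planning succeeds below 0.5.
rng = random.Random(0)
rate, n = estimate_success_rate(lambda t: t < 0.5, lambda r: r.random(), 0.1, 0.05, rng)
```

A subregion whose estimated rate falls below a chosen threshold would be a natural candidate for requesting another demonstration, which is the role the abstract assigns to its heuristic.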

Enhancing Image-Text Matching with Adaptive Feature Aggregation

Jan 18, 2024

Abstract:Image-text matching aims to find matched cross-modal pairs accurately. While current methods often rely on projecting cross-modal features into a common embedding space, they frequently suffer from imbalanced feature representations across different modalities, leading to unreliable retrieval results. To address these limitations, we introduce a novel Feature Enhancement Module that adaptively aggregates single-modal features for more balanced and robust image-text retrieval. Additionally, we propose a new loss function that overcomes the shortcomings of original triplet ranking loss, thereby significantly improving retrieval performance. The proposed model has been evaluated on two public datasets and achieves competitive retrieval performance when compared with several state-of-the-art models. Implementation code is available.
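For context on the baseline that the new loss is compared against, the following sketch computes a hinge-based triplet ranking loss over a similarity matrix. It assumes the common sum-over-negatives formulation with a fixed margin, where `sim[i][j]` is the similarity between image `i` and caption `j` and matched pairs lie on the diagonal; the loss proposed in the paper is not reproduced here.

```python
def triplet_ranking_loss(sim, margin=0.2):
    """Bidirectional hinge-based triplet ranking loss (sum over all negatives).

    sim is a square list of lists; sim[i][i] is the score of the matched pair.
    The margin value is an illustrative default, not taken from the paper.
    """
    n = len(sim)
    total = 0.0
    for i in range(n):
        positive = sim[i][i]
        for j in range(n):
            if j == i:
                continue
            # Image i ranked against a non-matching caption j.
            total += max(0.0, margin - positive + sim[i][j])
            # Caption i ranked against a non-matching image j.
            total += max(0.0, margin - positive + sim[j][i])
    return total

# Hypothetical batches of two image-caption pairs.
well_separated = triplet_ranking_loss([[0.9, 0.1], [0.2, 0.8]])
one_violation = triplet_ranking_loss([[0.5, 0.6], [0.1, 0.9]])
```

The first batch incurs no loss because every matched pair beats its negatives by more than the margin; the second is penalized only for the caption that scores higher than the true match for the first image.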

Surgical Phase Recognition in Laparoscopic Cholecystectomy

Jun 14, 2022

Abstract:Automatic recognition of surgical phases in surgical videos is a fundamental task in surgical workflow analysis. In this report, we propose a Transformer-based method that utilizes calibrated confidence scores for a 2-stage inference pipeline, which dynamically switches between a baseline model and a separately trained transition model depending on the calibrated confidence level. Our method outperforms the baseline model on the Cholec80 dataset, and can be applied to a variety of action segmentation methods.
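The switching step of such a two-stage pipeline can be sketched as follows. The abstract does not state the switching rule, so this example assumes that a frame is handed to the transition model whenever the baseline's calibrated confidence falls below a threshold; the function name, the threshold value, and the per-frame probability lists are all hypothetical.

```python
def two_stage_predict(baseline_probs, transition_probs, threshold=0.8):
    """Pick a phase label per frame from one of two models.

    baseline_probs and transition_probs are lists of per-frame probability
    lists (assumed already calibrated). When the baseline's top probability
    is below the threshold, the transition model's prediction is used.
    """
    labels = []
    for base, trans in zip(baseline_probs, transition_probs):
        confidence = max(base)
        chosen = base if confidence >= threshold else trans
        labels.append(max(range(len(chosen)), key=chosen.__getitem__))
    return labels

# Hypothetical two-frame clip with two phases.
baseline = [[0.9, 0.1], [0.55, 0.45]]
transition = [[0.2, 0.8], [0.3, 0.7]]
phases = two_stage_predict(baseline, transition)
```

In this example the first frame keeps the baseline label, while the second, low-confidence frame takes the transition model's label.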

Inference in Probabilistic Logic Programs with Continuous Random Variables

Oct 08, 2012

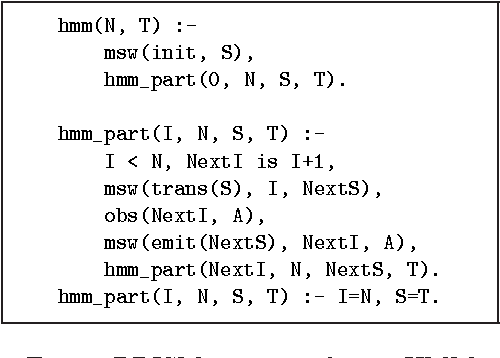

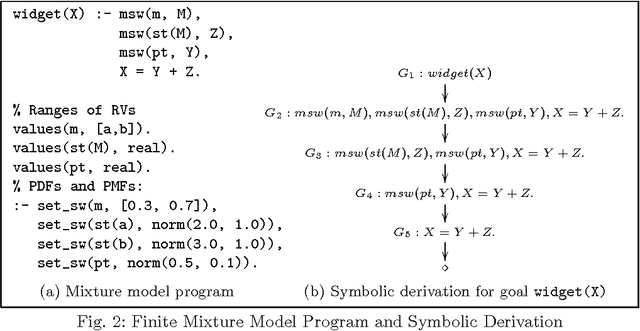

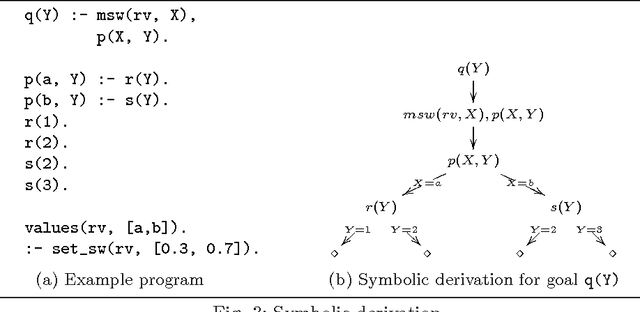

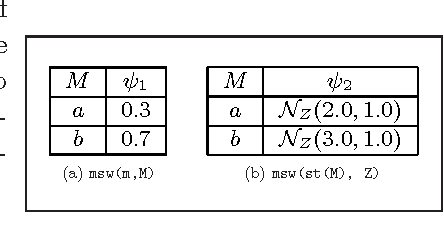

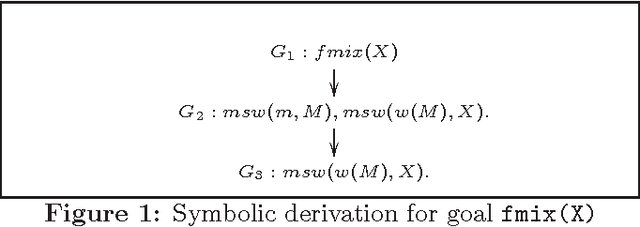

Abstract:Probabilistic Logic Programming (PLP), exemplified by Sato and Kameya's PRISM, Poole's ICL, De Raedt et al's ProbLog and Vennekens et al's LPAD, is aimed at combining statistical and logical knowledge representation and inference. A key characteristic of PLP frameworks is that they are conservative extensions to non-probabilistic logic programs which have been widely used for knowledge representation. PLP frameworks extend traditional logic programming semantics to a distribution semantics, where the semantics of a probabilistic logic program is given in terms of a distribution over possible models of the program. However, the inference techniques used in these works rely on enumerating sets of explanations for a query answer. Consequently, these languages permit very limited use of random variables with continuous distributions. In this paper, we present a symbolic inference procedure that uses constraints and represents sets of explanations without enumeration. This permits us to reason over PLPs with Gaussian or Gamma-distributed random variables (in addition to discrete-valued random variables) and linear equality constraints over reals. We develop the inference procedure in the context of PRISM; however, the procedure's core ideas can be easily applied to other PLP languages as well. An interesting aspect of our inference procedure is that PRISM's query evaluation process becomes a special case in the absence of any continuous random variables in the program. The symbolic inference procedure enables us to reason over complex probabilistic models such as Kalman filters and a large subclass of Hybrid Bayesian networks that were hitherto not possible in PLP frameworks. (To appear in Theory and Practice of Logic Programming).

* 12 pages. arXiv admin note: substantial text overlap with arXiv:1203.4287

Parameter Learning in PRISM Programs with Continuous Random Variables

Mar 19, 2012

Abstract:Probabilistic Logic Programming (PLP), exemplified by Sato and Kameya's PRISM, Poole's ICL, De Raedt et al's ProbLog and Vennekens et al's LPAD, combines statistical and logical knowledge representation and inference. Inference in these languages is based on enumerative construction of proofs over logic programs. Consequently, these languages permit very limited use of random variables with continuous distributions. In this paper, we extend PRISM with Gaussian random variables and linear equality constraints, and consider the problem of parameter learning in the extended language. Many statistical models such as finite mixture models and Kalman filters can be encoded in extended PRISM. Our EM-based learning algorithm uses a symbolic inference procedure that represents sets of derivations without enumeration. This permits us to learn the distribution parameters of extended PRISM programs with discrete as well as Gaussian variables. The learning algorithm naturally generalizes the ones used for PRISM and Hybrid Bayesian Networks.
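As background on why Gaussian variables combine well with linear equality constraints, the standard closure properties of the Gaussian family are stated below. These are textbook facts given for orientation, not the paper's derivation; the symbols $X$, $Y$, $Z$, $a$, and $b$ are introduced here only for illustration.

```latex
% Affine transformation of a Gaussian variable (a \neq 0):
X \sim \mathcal{N}(\mu, \sigma^2), \quad Z = aX + b
\;\Longrightarrow\;
Z \sim \mathcal{N}(a\mu + b,\; a^2\sigma^2)

% Sum of independent Gaussian variables:
X \sim \mathcal{N}(\mu_X, \sigma_X^2), \quad
Y \sim \mathcal{N}(\mu_Y, \sigma_Y^2), \quad
Z = X + Y
\;\Longrightarrow\;
Z \sim \mathcal{N}(\mu_X + \mu_Y,\; \sigma_X^2 + \sigma_Y^2)
```

Because a variable defined by a linear equality over Gaussian variables is again Gaussian, its distribution can be tracked through its mean and variance alone, which suggests why a symbolic representation can avoid enumeration in this setting.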
