Khiem Phi

Paying Attention to Deflections: Mining Pragmatic Nuances for Whataboutism Detection in Online Discourse

Feb 15, 2024

Abstract: Whataboutism, a potent tool for disrupting narratives and sowing distrust, remains under-explored in quantitative NLP research. Moreover, past work has not distinguished its use as a strategy for misinformation and propaganda from its use as a tool for pragmatic and semantic framing. We introduce new datasets from Twitter and YouTube, revealing overlaps as well as distinctions between whataboutism, propaganda, and the tu quoque fallacy. Furthermore, drawing on recent work in linguistic semantics, we differentiate the "what about" lexical construct from whataboutism. Our experiments bring to light unique challenges in its accurate detection, prompting the introduction of a novel method using attention weights for negative sample mining. We report significant improvements of 4% and 10% over previous state-of-the-art methods in our Twitter and YouTube collections, respectively.
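The abstract does not say how attention weights drive negative sample mining, so the following is only a generic illustration of the idea, not the authors' method. It assumes each comment is already embedded as a vector, scores candidates against an anchor with dot-product attention, and picks the differently labeled candidates that receive the most attention as "hard" negatives. The function name `mine_hard_negatives`, the toy embeddings, and the 0/1 labels are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mine_hard_negatives(anchor, candidates, labels, anchor_label, k=1):
    """Return indices of the k highest-attention candidates whose label
    differs from the anchor's label (hypothetical helper, for illustration)."""
    # Dot-product attention scores between the anchor and every candidate.
    scores = [sum(a * c for a, c in zip(anchor, cand)) for cand in candidates]
    weights = softmax(scores)
    # Keep only candidates with a different label: these are the negatives.
    negatives = [(w, i) for i, (w, y) in enumerate(zip(weights, labels))
                 if y != anchor_label]
    # Negatives the anchor attends to most strongly are the hardest ones.
    negatives.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in negatives[:k]]


# Toy data (assumed): label 1 = whataboutism, label 0 = not whataboutism.
anchor = (1.0, 0.0)
candidates = [(0.9, 0.1), (0.8, 0.2), (0.0, 1.0)]
labels = [1, 0, 0]
hard = mine_hard_negatives(anchor, candidates, labels, anchor_label=1, k=1)
```

In this toy setup the second candidate is selected, because it is the non-whataboutism example most similar to the anchor, which is the kind of confusable example a classifier benefits from seeing during training.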

Containerized Vertical Farming Using Cobots

Oct 23, 2023

Abstract: Containerized vertical farming is a type of vertical farming practice using hydroponics in which plants are grown in vertical layers within a mobile shipping container. Space limitations within shipping containers make the automation of different farming operations challenging. In this paper, we explore the use of cobots (i.e., collaborative robots) to automate two key farming operations, namely, the transplantation of saplings and the harvesting of grown plants. Our method uses a single demonstration from a farmer to extract the motion constraints associated with the tasks, namely, transplanting and harvesting, and can then generalize to different instances of the same task. For transplantation, the motion constraint arises during insertion of the sapling within the growing tube, whereas for harvesting, it arises during extraction from the growing tube. We present experimental results to show that using RGBD camera images (obtained from an eye-in-hand configuration) and one demonstration for each task, it is feasible to perform transplantation of saplings and harvesting of leafy greens using a cobot, without task-specific programming.

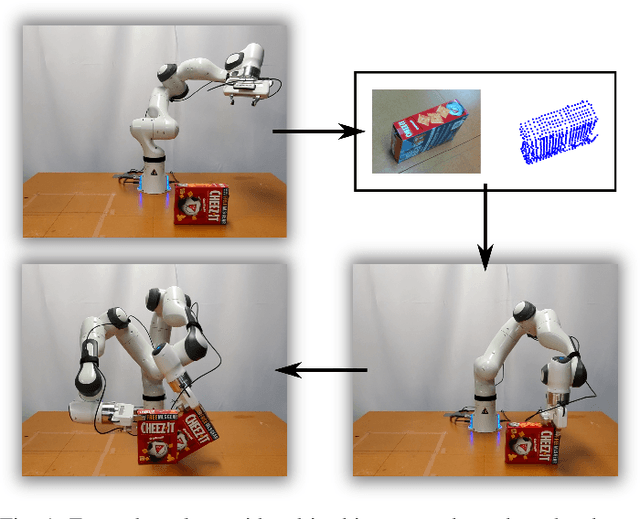

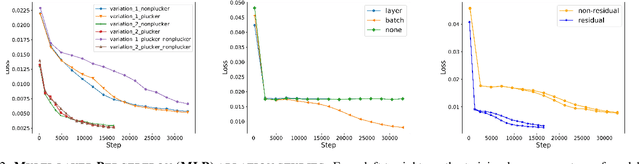

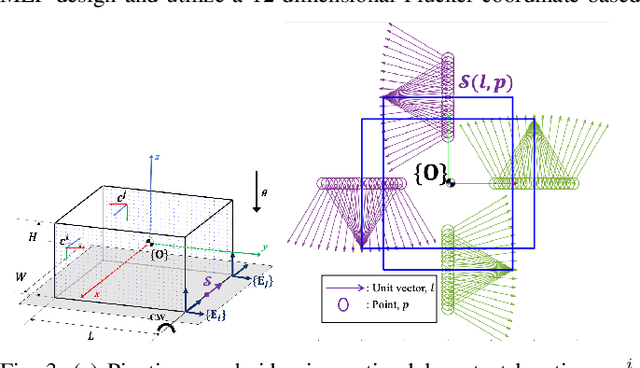

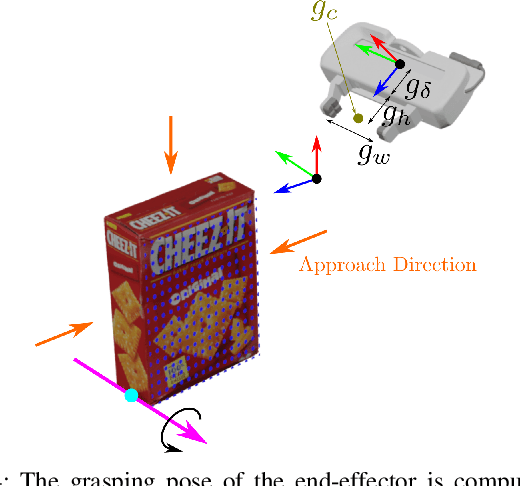

Task-Oriented Grasping with Point Cloud Representation of Objects

Sep 20, 2023

Abstract: In this paper, we study the problem of task-oriented grasp synthesis from partial point cloud data using an eye-in-hand camera configuration. In task-oriented grasp synthesis, a grasp has to be selected so that the object is not lost during manipulation and so that adequate force/moment can be applied to perform the task. We formalize the notion of a gross manipulation task as a constant screw motion (or a sequence of constant screw motions) to be applied to the object after grasping. Using this notion of task, and a corresponding grasp quality metric developed in our prior work, we use a neural network to approximate a function for predicting the grasp quality metric on a cuboid shape. We show that by using a bounding box obtained from the partial point cloud of an object, and the grasp quality metric mentioned above, we can generate a good grasping region on the bounding box that can be used to compute an antipodal grasp on the actual object. Our algorithm does not use any manually labeled data or grasping simulator, thus making it very efficient to implement and integrate with screw linear interpolation-based motion planners. We present simulation as well as experimental results that show the effectiveness of our approach.
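To make the notion of a constant screw motion concrete, here is a minimal sketch of the standard textbook definition: a rotation by an angle about a fixed axis combined with a translation along that axis proportional to the angle. It is not taken from the paper; the function name `screw_displace` and the parameterization by axis direction, a point on the axis, and a pitch are assumptions made for illustration.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def screw_displace(p, axis_dir, axis_point, pitch, theta):
    """Move point p by a screw motion: rotate by theta (radians) about the
    axis through axis_point along axis_dir, then translate pitch * theta
    along the axis. Hypothetical helper, for illustration only."""
    norm = math.sqrt(dot(axis_dir, axis_dir))
    u = tuple(c / norm for c in axis_dir)              # unit axis direction
    v = tuple(pi - qi for pi, qi in zip(p, axis_point))  # p relative to axis
    c, s = math.cos(theta), math.sin(theta)
    u_cross_v = cross(u, v)
    u_dot_v = dot(u, v)
    # Rodrigues' rotation formula applied to v.
    rotated = tuple(v[i] * c + u_cross_v[i] * s + u[i] * u_dot_v * (1.0 - c)
                    for i in range(3))
    # Shift back to world coordinates and add the translation along the axis.
    return tuple(axis_point[i] + rotated[i] + pitch * theta * u[i]
                 for i in range(3))


# Pure rotation (pitch 0): a quarter turn about the z-axis through the origin.
turned = screw_displace((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0),
                        pitch=0.0, theta=math.pi / 2)
# Same rotation with a nonzero pitch: the point also advances along z.
screwed = screw_displace((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0),
                         pitch=0.1, theta=math.pi / 2)
```

With zero pitch the motion reduces to a pure rotation, such as turning a handle about its hinge; a nonzero pitch adds the advance along the axis that is typical of driving a screw. A pure translation is the limiting case of infinite pitch and is not covered by this parameterization.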
