Lanqing Guo

Improving Flexible Image Tokenizers for Autoregressive Image Generation

Jan 04, 2026

Abstract:Flexible image tokenizers aim to represent an image using an ordered 1D variable-length token sequence. This flexible tokenization is typically achieved through nested dropout, where a portion of trailing tokens is randomly truncated during training, and the image is reconstructed using the remaining preceding sequence. However, this tail-truncation strategy inherently concentrates the image information in the early tokens, limiting the effectiveness of downstream AutoRegressive (AR) image generation as the token length increases. To overcome these limitations, we propose ReTok, a flexible tokenizer with Redundant Token Padding and Hierarchical Semantic Regularization, designed to fully exploit all tokens for enhanced latent modeling. Specifically, we introduce Redundant Token Padding to activate tail tokens more frequently, thereby alleviating information over-concentration in the early tokens. In addition, we apply Hierarchical Semantic Regularization to align the decoding features of earlier tokens with those from a pre-trained vision foundation model, while progressively reducing the regularization strength toward the tail to allow finer low-level detail reconstruction. Extensive experiments demonstrate the effectiveness of ReTok: on ImageNet 256×256, our method achieves superior generation performance compared with both flexible and fixed-length tokenizers. Code will be available at: https://github.com/zfu006/ReTok
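The tail-truncation step described in this abstract can be illustrated with a minimal sketch. It assumes the kept prefix length is drawn uniformly at random, which the abstract does not specify; the function names `nested_dropout_truncate` and `keep_frequency` are hypothetical and are not taken from the ReTok code. The sketch covers only plain nested dropout, not Redundant Token Padding.

```python
import random


def nested_dropout_truncate(tokens, min_keep=1, rng=random):
    """Keep a random-length prefix of an ordered token sequence.

    Assumption: the prefix length is uniform over [min_keep, len(tokens)].
    """
    if not 1 <= min_keep <= len(tokens):
        raise ValueError("min_keep must be between 1 and len(tokens)")
    keep = rng.randint(min_keep, len(tokens))  # inclusive on both ends
    return tokens[:keep]


def keep_frequency(num_tokens, trials=10_000, seed=0):
    """Estimate how often each token position survives truncation."""
    rng = random.Random(seed)
    counts = [0] * num_tokens
    tokens = list(range(num_tokens))
    for _ in range(trials):
        for position in nested_dropout_truncate(tokens, rng=rng):
            counts[position] += 1
    return [count / trials for count in counts]


if __name__ == "__main__":
    # The first token is always kept; later positions survive less often.
    for position, freq in enumerate(keep_frequency(8)):
        print(position, round(freq, 3))
```

Under this uniform assumption the first position is kept in every training step while the last is kept only rarely, which is one way to picture why information concentrates in the early tokens.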

Demystifying the Visual Quality Paradox in Multimodal Large Language Models

Jun 18, 2025

Abstract:Recent Multimodal Large Language Models (MLLMs) excel on benchmark vision-language tasks, yet little is known about how input visual quality shapes their responses. Does higher perceptual quality of images already translate to better MLLM understanding? We conduct the first systematic study spanning leading MLLMs and a suite of vision-language benchmarks, applying controlled degradations and stylistic shifts to each image. Surprisingly, we uncover a visual-quality paradox: model, task, and even individual-instance performance can improve when images deviate from human-perceived fidelity. Off-the-shelf restoration pipelines fail to reconcile these idiosyncratic preferences. To close the gap, we introduce Visual-Quality Test-Time Tuning (VQ-TTT), a lightweight adaptation module that: (1) inserts a learnable, low-rank kernel before the frozen vision encoder to modulate frequency content; and (2) fine-tunes only shallow vision-encoder layers via LoRA. VQ-TTT dynamically adjusts each input image in a single forward pass, aligning it with task-specific model preferences. Across the evaluated MLLMs and all datasets, VQ-TTT significantly improves average accuracy, with no external models, cached features, or extra training data. These findings redefine "better" visual inputs for MLLMs and highlight the need for adaptive, rather than universally "clean", imagery, in the new era of AI being the main data customer.

Person Recognition at Altitude and Range: Fusion of Face, Body Shape and Gait

May 07, 2025

Abstract:We address the problem of whole-body person recognition in unconstrained environments. This problem arises in surveillance scenarios such as those in the IARPA Biometric Recognition and Identification at Altitude and Range (BRIAR) program, where biometric data is captured at long standoff distances, elevated viewing angles, and under adverse atmospheric conditions (e.g., turbulence and high wind velocity). To this end, we propose FarSight, a unified end-to-end system for person recognition that integrates complementary biometric cues across face, gait, and body shape modalities. FarSight incorporates novel algorithms across four core modules: multi-subject detection and tracking, recognition-aware video restoration, modality-specific biometric feature encoding, and quality-guided multi-modal fusion. These components are designed to work cohesively under degraded image conditions, large pose and scale variations, and cross-domain gaps. Extensive experiments on the BRIAR dataset, one of the most comprehensive benchmarks for long-range, multi-modal biometric recognition, demonstrate the effectiveness of FarSight. Compared to our preliminary system, this system achieves a 34.1% absolute gain in 1:1 verification accuracy (TAR@0.1% FAR), a 17.8% increase in closed-set identification (Rank-20), and a 34.3% reduction in open-set identification errors (FNIR@1% FPIR). Furthermore, FarSight was evaluated in the 2025 NIST RTE Face in Video Evaluation (FIVE), which conducts standardized face recognition testing on the BRIAR dataset. These results establish FarSight as a state-of-the-art solution for operational biometric recognition in challenging real-world conditions.
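The verification metric quoted in this abstract, TAR at a fixed FAR, can be sketched as follows. This is a generic illustration, not the BRIAR evaluation protocol: it assumes higher scores mean greater similarity and that a pair is accepted when its score is strictly above the threshold. The function name `tar_at_far` and the example scores are hypothetical.

```python
def tar_at_far(genuine_scores, impostor_scores, target_far):
    """True accept rate at a threshold whose false accept rate is <= target_far.

    Assumptions: higher score = more similar; accept when score > threshold.
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")
    if not 0.0 <= target_far <= 1.0:
        raise ValueError("target_far must lie in [0, 1]")

    impostors = sorted(impostor_scores, reverse=True)
    # Number of impostor pairs that may be (wrongly) accepted.
    allowed = int(target_far * len(impostors))
    if allowed >= len(impostors):
        threshold = float("-inf")  # every pair is accepted
    else:
        # At most `allowed` impostor scores are strictly above this value.
        threshold = impostors[allowed]

    accepted = sum(1 for score in genuine_scores if score > threshold)
    return accepted / len(genuine_scores)


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.55, 0.4]
    impostor = [0.1, 0.2, 0.3, 0.35, 0.5, 0.05, 0.15, 0.25, 0.6, 0.45]
    print(tar_at_far(genuine, impostor, target_far=0.1))
```

A stricter FAR target raises the threshold, so fewer genuine pairs are accepted and the TAR can only stay the same or drop.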

NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images: Methods and Results

Apr 19, 2025

Abstract:This paper reviews the NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images. This challenge received a wide range of impressive solutions, which were developed and evaluated using our collected real-world Raindrop Clarity dataset. Unlike existing deraining datasets, our Raindrop Clarity dataset is more diverse and challenging in degradation types and contents, and includes day raindrop-focused, day background-focused, night raindrop-focused, and night background-focused degradations. This dataset is divided into three subsets for competition: 14,139 images for training, 240 images for validation, and 731 images for testing. The primary objective of this challenge is to establish a new and powerful benchmark for the task of removing raindrops under varying lighting and focus conditions. A total of 361 participants registered for the competition, with 32 teams submitting valid solutions and fact sheets for the final testing phase. These submissions achieved state-of-the-art (SOTA) performance on the Raindrop Clarity dataset. The project can be found at https://lixinustc.github.io/CVPR-NTIRE2025-RainDrop-Competition.github.io/.

Digital Staining with Knowledge Distillation: A Unified Framework for Unpaired and Paired-But-Misaligned Data

Apr 14, 2025

Abstract:Staining is essential in cell imaging and medical diagnostics but poses significant challenges, including high cost, time consumption, labor intensity, and irreversible tissue alterations. Recent advances in deep learning have enabled digital staining through supervised model training. However, collecting large-scale, perfectly aligned pairs of stained and unstained images remains difficult. In this work, we propose a novel unsupervised deep learning framework for digital cell staining that reduces the need for extensive paired data using knowledge distillation. We explore two training schemes: (1) unpaired and (2) paired-but-misaligned settings. For the unpaired case, we introduce a two-stage pipeline, comprising light enhancement followed by colorization, as a teacher model. Subsequently, we obtain a student staining generator through knowledge distillation with hybrid non-reference losses. To leverage the pixel-wise information between adjacent sections, we further extend to the paired-but-misaligned setting, adding the Learning to Align module to utilize pixel-level information. Experimental results on our dataset demonstrate that our proposed unsupervised deep staining method can generate stained images with more accurate positions and shapes of the cell targets in both settings. Compared with competing methods, our method achieves improved results both qualitatively and quantitatively (e.g., NIQE and PSNR). We applied our digital staining method to the White Blood Cell (WBC) dataset, investigating its potential for medical applications.

Training-Free Text-Guided Image Editing with Visual Autoregressive Model

Mar 31, 2025

Abstract:Text-guided image editing is an essential task that enables users to modify images through natural language descriptions. Recent advances in diffusion models and rectified flows have significantly improved editing quality, primarily relying on inversion techniques to extract structured noise from input images. However, inaccuracies in inversion can propagate errors, leading to unintended modifications and compromising fidelity. Moreover, even with perfect inversion, the entanglement between textual prompts and image features often results in global changes when only local edits are intended. To address these challenges, we propose a novel text-guided image editing framework based on VAR (Visual AutoRegressive modeling), which eliminates the need for explicit inversion while ensuring precise and controlled modifications. Our method introduces a caching mechanism that stores token indices and probability distributions from the original image, capturing the relationship between the source prompt and the image. Using this cache, we design an adaptive fine-grained masking strategy that dynamically identifies and constrains modifications to relevant regions, preventing unintended changes. A token reassembling approach further refines the editing process, enhancing diversity, fidelity, and control. Our framework operates in a training-free manner and achieves high-fidelity editing with faster inference speeds, processing a 1K resolution image in as little as 1.2 seconds. Extensive experiments demonstrate that our method achieves performance comparable to, or even surpassing, existing diffusion- and rectified flow-based approaches in both quantitative metrics and visual quality. The code will be released.

Reconciling Stochastic and Deterministic Strategies for Zero-shot Image Restoration using Diffusion Model in Dual

Mar 03, 2025

Abstract:Plug-and-play (PnP) methods offer an iterative strategy for solving image restoration (IR) problems in a zero-shot manner, using a learned discriminative denoiser as the implicit prior. More recently, a sampling-based variant of this approach, which utilizes a pre-trained generative diffusion model, has gained great popularity for solving IR problems through stochastic sampling. The IR results using PnP with a pre-trained diffusion model demonstrate distinct advantages compared to those using discriminative denoisers, i.e., improved perceptual quality while sacrificing the data fidelity. The unsatisfactory results are due to the lack of integration of these strategies in the IR tasks. In this work, we propose a novel zero-shot IR scheme, dubbed Reconciling Diffusion Model in Dual (RDMD), which leverages only a single pre-trained diffusion model to construct two complementary regularizers. Specifically, the diffusion model in RDMD will iteratively perform deterministic denoising and stochastic sampling, aiming to achieve high-fidelity image restoration with appealing perceptual quality. RDMD also allows users to customize the distortion-perception tradeoff with a single hyperparameter, enhancing the adaptability of the restoration process in different practical scenarios. Extensive experiments on several IR tasks demonstrate that our proposed method could achieve superior results compared to existing approaches on both the FFHQ and ImageNet datasets.

Robust and Transferable Backdoor Attacks Against Deep Image Compression With Selective Frequency Prior

Dec 02, 2024

Abstract:Recent advancements in deep learning-based compression techniques have surpassed traditional methods. However, deep neural networks remain vulnerable to backdoor attacks, where pre-defined triggers induce malicious behaviors. This paper introduces a novel frequency-based trigger injection model for launching backdoor attacks with multiple triggers on learned image compression models. Inspired by the widely used DCT in compression codecs, triggers are embedded in the DCT domain. We design attack objectives tailored to diverse scenarios, including: 1) degrading compression quality in terms of bit-rate and reconstruction accuracy; 2) targeting task-driven measures like face recognition and semantic segmentation. To improve training efficiency, we propose a dynamic loss function that balances loss terms with fewer hyper-parameters, optimizing attack objectives effectively. For advanced scenarios, we evaluate the attack's resistance to defensive preprocessing and propose a two-stage training schedule with robust frequency selection to enhance resilience. To improve cross-model and cross-domain transferability for downstream tasks, we adjust the classification boundary in the attack loss during training. Experiments show that our trigger injection models, combined with minor modifications to encoder parameters, successfully inject multiple backdoors and their triggers into a single compression model, demonstrating strong performance and versatility. (*Due to the notification of arXiv "The Abstract field cannot be longer than 1,920 characters", the appeared Abstract is shortened. For the full Abstract, please download the Article.)

Temporal As a Plugin: Unsupervised Video Denoising with Pre-Trained Image Denoisers

Sep 17, 2024

Abstract:Recent advancements in deep learning have shown impressive results in image and video denoising, leveraging extensive pairs of noisy and noise-free data for supervision. However, the challenge of acquiring paired videos for dynamic scenes hampers the practical deployment of deep video denoising techniques. In contrast, this obstacle is less pronounced in image denoising, where paired data is more readily available. Thus, a well-trained image denoiser could serve as a reliable spatial prior for video denoising. In this paper, we propose a novel unsupervised video denoising framework, named "Temporal As a Plugin" (TAP), which integrates tunable temporal modules into a pre-trained image denoiser. By incorporating temporal modules, our method can harness temporal information across noisy frames, complementing its power of spatial denoising. Furthermore, we introduce a progressive fine-tuning strategy that refines each temporal module using the generated pseudo clean video frames, progressively enhancing the network's denoising performance. Compared to other unsupervised video denoising methods, our framework demonstrates superior performance on both sRGB and raw video denoising datasets.

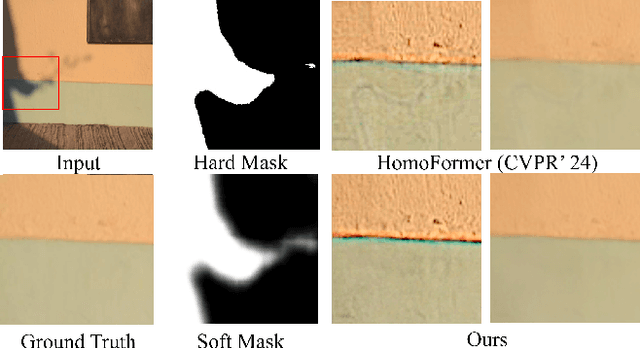

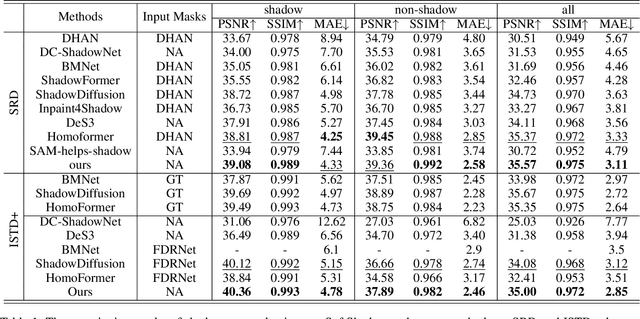

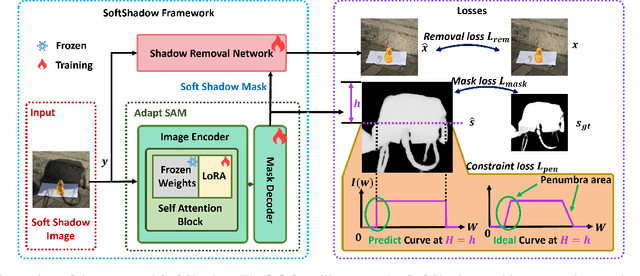

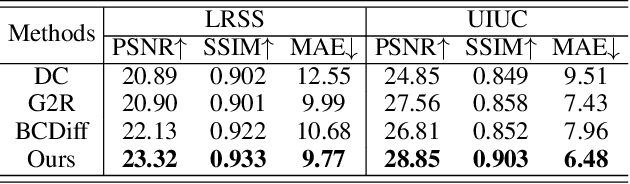

SoftShadow: Leveraging Penumbra-Aware Soft Masks for Shadow Removal

Sep 11, 2024

Abstract:Recent advancements in deep learning have yielded promising results for the image shadow removal task. However, most existing methods rely on binary pre-generated shadow masks. The binary nature of such masks could potentially lead to artifacts near the boundary between shadow and non-shadow areas. In view of this, inspired by the physical model of shadow formation, we introduce novel soft shadow masks specifically designed for shadow removal. To achieve such soft masks, we propose a SoftShadow framework by leveraging the prior knowledge of pretrained SAM and integrating physical constraints. Specifically, we jointly tune the SAM and the subsequent shadow removal network using penumbra formation constraint loss and shadow removal loss. This framework enables accurate predictions of penumbra (partially shaded regions) and umbra (fully shaded regions) areas while simultaneously facilitating end-to-end shadow removal. Through extensive experiments on popular datasets, we found that our SoftShadow framework, which generates soft masks, can better reduce boundary artifacts, achieve state-of-the-art performance, and demonstrate superior generalizability.
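The boundary problem with binary masks mentioned in this abstract can be pictured with a toy example. The sketch below is not the SoftShadow method and uses no SAM or learned network: it assumes a simple multiplicative shadow model in which a mask value m in [0, 1] scales brightness by 1 - m(1 - g), with g a hypothetical umbra gain. All function names are made up for illustration.

```python
def apply_shadow(clean, mask, umbra_gain=0.4):
    """Darken pixels: mask 0 = lit, 1 = umbra, in between = penumbra (toy model)."""
    return [c * (1.0 - m * (1.0 - umbra_gain)) for c, m in zip(clean, mask)]


def remove_shadow(observed, mask, umbra_gain=0.4):
    """Invert the toy shadow model using the given mask."""
    return [o / (1.0 - m * (1.0 - umbra_gain)) for o, m in zip(observed, mask)]


def binarize(mask, threshold=0.5):
    """Collapse a soft mask to a hard 0/1 mask."""
    return [1.0 if m >= threshold else 0.0 for m in mask]


def max_abs_error(restored, reference):
    """Largest per-pixel deviation between two equally long rows."""
    return max(abs(r - c) for r, c in zip(restored, reference))


if __name__ == "__main__":
    clean = [0.8] * 7                                 # one row of a flat surface
    soft = [0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0]      # lit -> penumbra -> umbra
    observed = apply_shadow(clean, soft)

    print(max_abs_error(remove_shadow(observed, soft), clean))
    print(max_abs_error(remove_shadow(observed, binarize(soft)), clean))
```

In this toy setting the soft mask recovers the row almost exactly, while the binarized mask leaves large errors on the penumbra pixels, which is the kind of boundary artifact the abstract attributes to binary masks.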
