Nicholas Chimitt

Person Recognition at Altitude and Range: Fusion of Face, Body Shape and Gait

May 07, 2025

Abstract: We address the problem of whole-body person recognition in unconstrained environments. This problem arises in surveillance scenarios such as those in the IARPA Biometric Recognition and Identification at Altitude and Range (BRIAR) program, where biometric data is captured at long standoff distances, elevated viewing angles, and under adverse atmospheric conditions (e.g., turbulence and high wind velocity). To this end, we propose FarSight, a unified end-to-end system for person recognition that integrates complementary biometric cues across face, gait, and body shape modalities. FarSight incorporates novel algorithms across four core modules: multi-subject detection and tracking, recognition-aware video restoration, modality-specific biometric feature encoding, and quality-guided multi-modal fusion. These components are designed to work cohesively under degraded image conditions, large pose and scale variations, and cross-domain gaps. Extensive experiments on the BRIAR dataset, one of the most comprehensive benchmarks for long-range, multi-modal biometric recognition, demonstrate the effectiveness of FarSight. Compared to our preliminary system, this system achieves a 34.1% absolute gain in 1:1 verification accuracy (TAR@0.1% FAR), a 17.8% increase in closed-set identification (Rank-20), and a 34.3% reduction in open-set identification errors (FNIR@1% FPIR). Furthermore, FarSight was evaluated in the 2025 NIST RTE Face in Video Evaluation (FIVE), which conducts standardized face recognition testing on the BRIAR dataset. These results establish FarSight as a state-of-the-art solution for operational biometric recognition in challenging real-world conditions.
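For readers unfamiliar with the verification metric quoted in this abstract, TAR@0.1% FAR is the true accept rate measured at the score threshold where at most 0.1% of impostor comparisons are falsely accepted. The following is a minimal Python sketch of that computation, assuming higher scores mean better matches. The function name `tar_at_far` and the toy scores are hypothetical and are not taken from the BRIAR evaluation protocol.

```python
def tar_at_far(genuine, impostor, far):
    """True accept rate at a threshold admitting at most `far` of impostors."""
    ranked = sorted(impostor, reverse=True)
    allowed = int(far * len(ranked))  # impostor comparisons allowed to pass
    # A comparison is accepted when score > threshold. Using the
    # (allowed + 1)-th highest impostor score as the threshold lets
    # at most `allowed` impostor comparisons through.
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    accepted = sum(score > threshold for score in genuine)
    return accepted / len(genuine)


# Toy scores, for illustration only (assumption: similarity in [0, 1]).
impostor_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
genuine_scores = [0.95, 0.85, 0.99, 0.50]
tar = tar_at_far(genuine_scores, impostor_scores, far=0.1)
```

With only ten impostor scores the smallest meaningful operating point is 10% FAR; an operating point such as 0.1% FAR needs at least a thousand impostor comparisons to be resolved at all.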

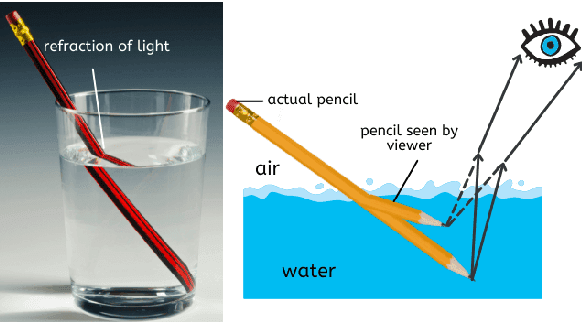

Wavefront Estimation From a Single Measurement: Uniqueness and Algorithms

Apr 13, 2025

Abstract: Wavefront estimation is an essential component of adaptive optics where the goal is to recover the underlying phase from its Fourier magnitude. While this may sound identical to classical phase retrieval, wavefront estimation faces stricter uniqueness requirements, because adaptive optics systems need a unique phase to compensate for the distorted wavefront. Existing real-time wavefront estimation methodologies are dominated by sensing via specialized optical hardware due to their high speed, but they often have a low spatial resolution. A computational method that can perform both fast and accurate wavefront estimation with a single measurement can improve resolution and bring new applications such as real-time passive wavefront estimation, opening the door to a new generation of medical and defense applications. In this paper, we tackle the wavefront estimation problem by observing that the non-uniqueness is related to the geometry of the pupil shape. By analyzing the source of ambiguities and breaking the symmetry, we present a joint optics-algorithm approach by co-designing the shape of the pupil and the reconstruction neural network. Using our proposed lightweight neural network, we demonstrate wavefront estimation of a phase of size $128\times 128$ at $5,200$ frames per second on a CPU, achieving an average Strehl ratio up to $0.98$ in the noiseless case. We additionally test our method on real measurements using a spatial light modulator. Code is available at https://pages.github.itap.purdue.edu/StanleyChanGroup/wavefront-estimation/.
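The link between pupil geometry and non-uniqueness can be illustrated with a standard Fourier-optics identity: if the pupil is unchanged by a 180-degree rotation, the phases $\phi(\mathbf{x})$ and $-\phi(-\mathbf{x})$ produce the same point spread function. The NumPy sketch below checks this numerically and shows that an asymmetric pupil removes that particular tie. It is only an illustration under stated assumptions, not the paper's method: the grid size, the test phase, and the cut-out pupil `wedge` are all hypothetical choices, and the paper may analyze other ambiguities as well.

```python
import numpy as np


def psf(pupil, phase):
    """Normalized intensity of the Fourier transform of the pupil field."""
    field = pupil * np.exp(1j * phase)
    intensity = np.abs(np.fft.fft2(field)) ** 2
    return intensity / intensity.sum()


n = 32
# Grid centered between samples, so reversing an array maps x to -x exactly.
c = (np.arange(n) - (n - 1) / 2) / (n / 2)
X, Y = np.meshgrid(c, c)
R2 = X**2 + Y**2

disc = (R2 <= 0.8**2).astype(float)      # symmetric under 180-degree rotation
wedge = disc * ~((X > 0) & (Y > 0))      # one quadrant blocked: asymmetric

phase = 3.0 * R2 + 2.0 * X**3            # arbitrary test phase (even + odd part)
twin = -phase[::-1, ::-1]                # the candidate ambiguity -phi(-x)

same_on_disc = np.allclose(psf(disc, phase), psf(disc, twin))
same_on_wedge = np.allclose(psf(wedge, phase), psf(wedge, twin))
```

Here `same_on_disc` reflects the ambiguity for the symmetric pupil, while `same_on_wedge` shows that the two phases become distinguishable once the symmetry is broken.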

Learning Phase Distortion with Selective State Space Models for Video Turbulence Mitigation

Apr 03, 2025

Abstract: Atmospheric turbulence is a major source of image degradation in long-range imaging systems. Although numerous deep learning-based turbulence mitigation (TM) methods have been proposed, many are slow, memory-hungry, and do not generalize well. In the spatial domain, methods based on convolutional operators have a limited receptive field, so they cannot handle a large spatial dependency required by turbulence. In the temporal domain, methods relying on self-attention can, in theory, leverage the lucky effects of turbulence, but their quadratic complexity makes it difficult to scale to many frames. Traditional recurrent aggregation methods face parallelization challenges. In this paper, we present a new TM method based on two concepts: (1) A turbulence mitigation network based on the Selective State Space Model (MambaTM). MambaTM provides a global receptive field in each layer across spatial and temporal dimensions while maintaining linear computational complexity. (2) Learned Latent Phase Distortion (LPD). LPD guides the state space model. Unlike classical Zernike-based representations of phase distortion, the new LPD map uniquely captures the actual effects of turbulence, significantly improving the model's capability to estimate degradation by reducing the ill-posedness. Our proposed method exceeds current state-of-the-art networks on various synthetic and real-world TM benchmarks with significantly faster inference speed. The code is available at http://github.com/xg416/MambaTM.

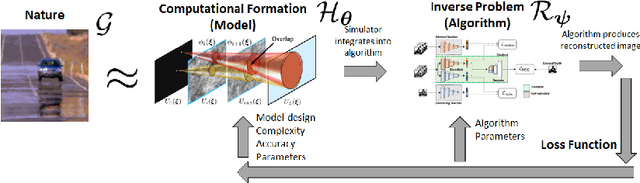

Computational Imaging Through Atmospheric Turbulence

Nov 01, 2024

Abstract: Since the seminal work of Andrey Kolmogorov in the early 1940s, imaging through atmospheric turbulence has grown from a pure scientific pursuit to an important subject across a multitude of civilian, space-mission, and national security applications. Fueled by the recent advancement of deep learning, the field is further experiencing a new wave of momentum. However, for these deep learning methods to perform well, new efforts are needed to build faster and more accurate computational models while at the same time maximizing the performance of image reconstruction. The book is written primarily for image processing engineers, computer vision scientists, and engineering students who are interested in the field of atmospheric turbulence, statistical optics, and image processing. The book can be used as a graduate text or in advanced-topics classes for undergraduates.

Spatio-Temporal Turbulence Mitigation: A Translational Perspective

Jan 08, 2024

Abstract: Recovering images distorted by atmospheric turbulence is a challenging inverse problem due to the stochastic nature of turbulence. Although numerous turbulence mitigation (TM) algorithms have been proposed, their efficiency and generalization to real-world dynamic scenarios remain severely limited. Building upon the intuitions of classical TM algorithms, we present the Deep Atmospheric TUrbulence Mitigation network (DATUM). DATUM aims to overcome major challenges when transitioning from classical to deep learning approaches. By carefully integrating the merits of classical multi-frame TM methods into a deep network structure, we demonstrate that DATUM can efficiently perform long-range temporal aggregation in a recurrent fashion, while deformable attention and temporal-channel attention seamlessly facilitate pixel registration and lucky imaging. With additional supervision, tilt and blur degradation can be jointly mitigated. These inductive biases empower DATUM to significantly outperform existing methods while delivering a tenfold increase in processing speed. A large-scale training dataset, ATSyn, is presented as a co-invention to enable generalization in real turbulence. Our code and datasets will be available at https://xg416.github.io/DATUM

FarSight: A Physics-Driven Whole-Body Biometric System at Large Distance and Altitude

Jun 29, 2023

Abstract: Whole-body biometric recognition is an important area of research due to its vast applications in law enforcement, border security, and surveillance. This paper presents the end-to-end design, development and evaluation of FarSight, an innovative software system designed for whole-body (fusion of face, gait and body shape) biometric recognition. FarSight accepts videos from elevated platforms and drones as input and outputs a candidate list of identities from a gallery. The system is designed to address several challenges, including (i) low-quality imagery, (ii) large yaw and pitch angles, (iii) robust feature extraction to accommodate large intra-person variabilities and large inter-person similarities, and (iv) the large domain gap between training and test sets. FarSight combines the physics of imaging and deep learning models to enhance image restoration and biometric feature encoding. We test FarSight's effectiveness using the newly acquired IARPA Biometric Recognition and Identification at Altitude and Range (BRIAR) dataset. Notably, FarSight demonstrated a substantial performance increase on the BRIAR dataset, with gains of +11.82% Rank-20 identification and +11.3% TAR@1% FAR.

Scattering and Gathering for Spatially Varying Blurs

Mar 10, 2023

Abstract: A spatially varying blur kernel $h(\mathbf{x},\mathbf{u})$ is specified by an input coordinate $\mathbf{u} \in \mathbb{R}^2$ and an output coordinate $\mathbf{x} \in \mathbb{R}^2$. For computational efficiency, we sometimes write $h(\mathbf{x},\mathbf{u})$ as a linear combination of spatially invariant basis functions. The associated pixelwise coefficients, however, can be indexed by either the input coordinate or the output coordinate. Although the difference appears subtle, the two indexing schemes lead to two different forms of convolution, known as scattering and gathering, respectively. We discuss the origin of the two operations and the conditions under which they are identical. We show that scattering is more suitable for simulating how light propagates and gathering is more suitable for image filtering such as denoising.
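The two indexing schemes can be sketched in one dimension with NumPy. In this illustration, gathering weights the outputs of invariant filters by coefficients indexed at the output pixel, while scattering weights the input pixels first and then filters. The function names `gather` and `scatter`, the two basis filters, and the linear coefficient ramp are all assumptions chosen for the example, not the paper's notation or code.

```python
import numpy as np


def gather(f, coeffs, bases):
    """y(x) = sum_m a_m(x) * (phi_m conv f)(x): filter, then weight outputs."""
    return sum(a * np.convolve(f, phi, mode="same")
               for a, phi in zip(coeffs, bases))


def scatter(f, coeffs, bases):
    """y(x) = sum_m (phi_m conv (a_m * f))(x): weight inputs, then filter."""
    return sum(np.convolve(a * f, phi, mode="same")
               for a, phi in zip(coeffs, bases))


n = 16
f = np.zeros(n)
f[5:11] = [1, 2, 3, 4, 5, 6]           # signal kept away from the borders

# Two unit-sum invariant basis filters (hypothetical: narrow and wide blur).
bases = [np.array([0.25, 0.5, 0.25]), np.ones(5) / 5]

# Pixelwise coefficients that sum to one at every pixel.
a0 = np.linspace(0.0, 1.0, n)
coeffs = [a0, 1.0 - a0]

y_gather = gather(f, coeffs, bases)
y_scatter = scatter(f, coeffs, bases)
```

In this setup the two outputs differ because the coefficients vary across pixels, and they coincide when every coefficient is constant. Scattering preserves the total signal energy (each input pixel spreads a unit-sum kernel), which is consistent with its use for light propagation, while gathering maps a constant signal to a constant away from the borders, which is the behavior expected of a smoothing filter.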

Real-Time Dense Field Phase-to-Space Simulation of Imaging through Atmospheric Turbulence

Oct 13, 2022

Abstract: Numerical simulation of atmospheric turbulence is one of the biggest bottlenecks in developing computational techniques for solving the inverse problem in long-range imaging. The classical split-step method is based upon numerical wave propagation which splits the propagation path into many segments and propagates every pixel in each segment individually via the Fresnel integral. This repeated evaluation becomes increasingly time-consuming for larger images. As a result, the split-step simulation is often done only on a sparse grid of points followed by an interpolation to the other pixels. Even so, the computation is expensive for real-time applications. In this paper, we present a new simulation method that enables real-time processing over a dense grid of points. Building upon the recently developed multi-aperture model and the phase-to-space transform, we overcome the memory bottleneck in drawing random samples from the Zernike correlation tensor. We show that the cross-correlation of the Zernike modes has an insignificant contribution to the statistics of the random samples. By approximating these cross-correlation blocks in the Zernike tensor, we restore the homogeneity of the tensor which then enables Fourier-based random sampling. On a $512\times512$ image, the new simulator achieves 0.025 seconds per frame over a dense field. On a $3840 \times 2160$ image which would have taken 13 hours to simulate using the split-step method, the new simulator can run at approximately 60 seconds per frame.

Imaging through the Atmosphere using Turbulence Mitigation Transformer

Jul 13, 2022

Abstract: Restoring images distorted by atmospheric turbulence is a long-standing problem due to the spatially varying nature of the distortion, nonlinearity of the image formation process, and scarcity of training and testing data. Existing methods often make strong statistical assumptions about the distortion model, which in many cases limits their performance in real-world scenarios because they do not generalize. To overcome the challenge, this paper presents an end-to-end physics-driven approach that is efficient and can generalize to real-world turbulence. On the data synthesis front, we significantly increase the image resolution that can be handled by the SOTA turbulence simulator by approximating the random field via wide-sense stationarity. The new data synthesis process enables the generation of large-scale multi-level turbulence and ground truth pairs for training. On the network design front, we propose the turbulence mitigation transformer (TMT), a two-stage U-Net-shaped multi-frame restoration network which has a novel efficient self-attention mechanism named temporal channel joint attention (TCJA). We also introduce a new training scheme that is enabled by the new simulator, and we design new transformer units to reduce the memory consumption. Experimental results on both static and dynamic scenes are promising, including various real turbulence scenarios.

Accelerating Atmospheric Turbulence Simulation via Learned Phase-to-Space Transform

Aug 20, 2021

Abstract: Fast and accurate simulation of imaging through atmospheric turbulence is essential for developing turbulence mitigation algorithms. Recognizing the limitations of previous approaches, we introduce a new concept known as the phase-to-space (P2S) transform to significantly speed up the simulation. P2S is built upon three ideas: (1) reformulating the spatially varying convolution as a set of invariant convolutions with basis functions, (2) learning the basis functions via the known turbulence statistics models, (3) implementing the P2S transform via a lightweight network that directly converts the phase representation to a spatial representation. The new simulator offers a 300x to 1000x speedup compared to the mainstream split-step simulators while preserving the essential turbulence statistics.
