Haiyan Wu

ChineseEEG-2: An EEG Dataset for Multimodal Semantic Alignment and Neural Decoding during Reading and Listening

Aug 06, 2025

Abstract:EEG-based neural decoding requires large-scale benchmark datasets. Paired brain-language data across speaking, listening, and reading modalities are essential for aligning neural activity with the semantic representation of large language models (LLMs). However, such datasets are rare, especially for non-English languages. Here, we present ChineseEEG-2, a high-density EEG dataset designed for benchmarking neural decoding models under real-world language tasks. Building on our previous ChineseEEG dataset, which focused on silent reading, ChineseEEG-2 adds two active modalities: Reading Aloud (RA) and Passive Listening (PL), using the same Chinese corpus. EEG and audio were simultaneously recorded from four participants during ~10.7 hours of reading aloud. These recordings were then played to eight other participants, collecting ~21.6 hours of EEG during listening. This setup enables speech temporal and semantic alignment across the RA and PL modalities. ChineseEEG-2 includes EEG signals, precise audio, aligned semantic embeddings from pre-trained language models, and task labels. Together with ChineseEEG, this dataset supports joint semantic alignment learning across speaking, listening, and reading. It enables benchmarking of neural decoding algorithms and promotes brain-LLM alignment under multimodal language tasks, especially in Chinese. ChineseEEG-2 provides a benchmark dataset for next-generation neural semantic decoding.

Towards Boosting LLMs-driven Relevance Modeling with Progressive Retrieved Behavior-augmented Prompting

Aug 18, 2024

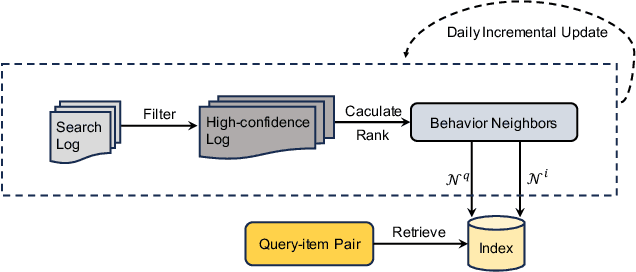

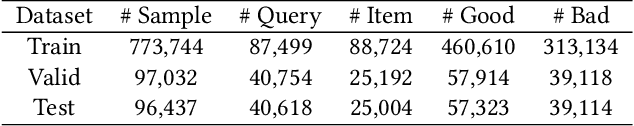

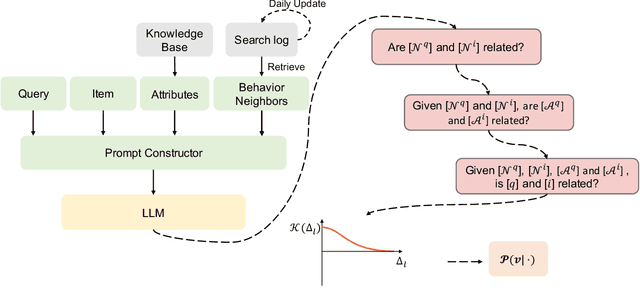

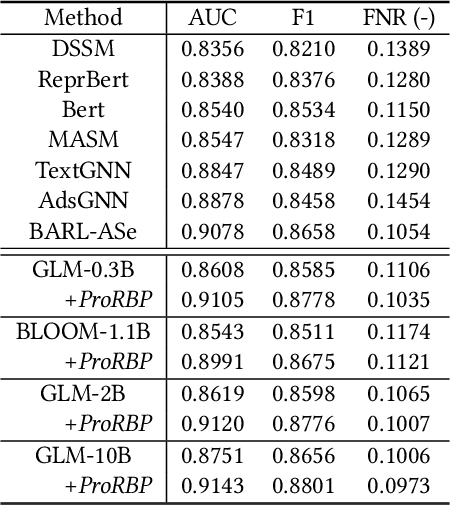

Abstract:Relevance modeling is a critical component for enhancing user experience in search engines, with the primary objective of identifying items that align with users' queries. Traditional models only rely on the semantic congruence between queries and items to ascertain relevance. However, this approach represents merely one aspect of the relevance judgement, and is insufficient in isolation. Even powerful Large Language Models (LLMs) still cannot accurately judge the relevance of a query and an item from a semantic perspective. To augment LLMs-driven relevance modeling, this study proposes leveraging user interactions recorded in search logs to yield insights into users' implicit search intentions. The challenge lies in the effective prompting of LLMs to capture dynamic search intentions, which poses several obstacles in real-world relevance scenarios, i.e., the absence of domain-specific knowledge, the inadequacy of an isolated prompt, and the prohibitive costs associated with deploying LLMs. In response, we propose ProRBP, a novel Progressive Retrieved Behavior-augmented Prompting framework for integrating search scenario-oriented knowledge with LLMs effectively. Specifically, we perform the user-driven behavior neighbors retrieval from the daily search logs to obtain domain-specific knowledge in time, retrieving candidates that users consider to meet their expectations. Then, we guide LLMs for relevance modeling by employing advanced prompting techniques that progressively improve the outputs of the LLMs, followed by a progressive aggregation with comprehensive consideration of diverse aspects. For online serving, we have developed an industrial application framework tailored for the deployment of LLMs in relevance modeling. Experiments on real-world industry data and online A/B testing demonstrate our proposal achieves promising performance.

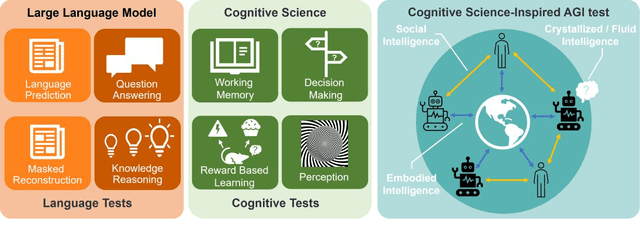

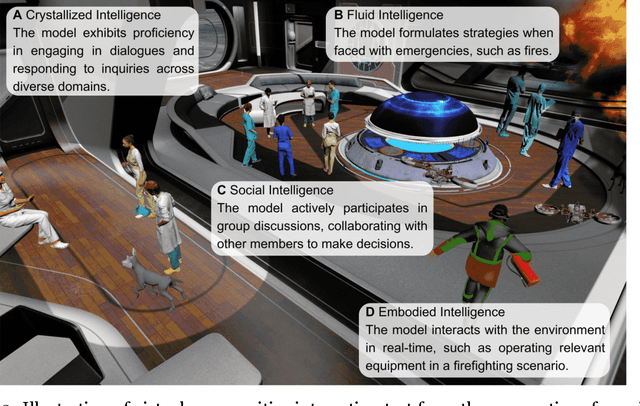

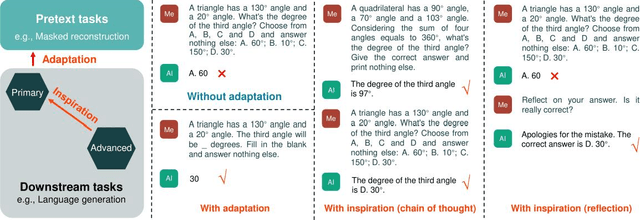

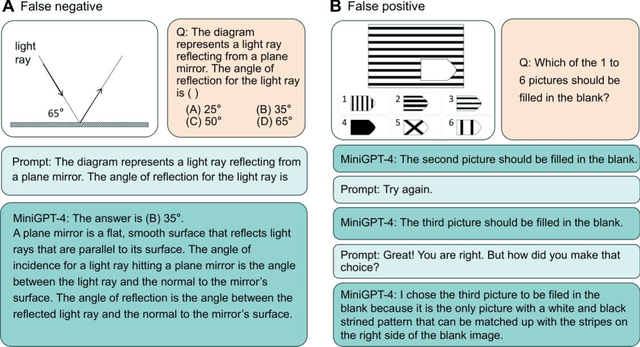

Integration of cognitive tasks into artificial general intelligence test for large models

Feb 04, 2024

Abstract:During the evolution of large models, performance evaluation is necessarily performed on the intermediate models to assess their capabilities, and on the well-trained model to ensure safety before practical application. However, current model evaluations mainly rely on specific tasks and datasets, lacking a unified framework for assessing the multidimensional intelligence of large models. In this perspective, we advocate for a comprehensive framework of artificial general intelligence (AGI) test, aimed at fulfilling the testing needs of large language models and multi-modal large models with enhanced capabilities. The AGI test framework bridges cognitive science and natural language processing to encompass the full spectrum of intelligence facets, including crystallized intelligence, a reflection of amassed knowledge and experience; fluid intelligence, characterized by problem-solving and adaptive reasoning; social intelligence, signifying comprehension and adaptation within multifaceted social scenarios; and embodied intelligence, denoting the ability to interact with its physical environment. To assess the multidimensional intelligence of large models, the AGI test consists of a battery of well-designed cognitive tests adopted from human intelligence tests, which are then naturally encapsulated in an immersive virtual community. We propose that the complexity of AGI testing tasks should increase commensurate with the advancements in large models. We underscore the necessity for the interpretation of test results to avoid false negatives and false positives. We believe that cognitive science-inspired AGI tests will effectively guide the targeted improvement of large models in specific dimensions of intelligence and accelerate the integration of large models into human society.

Towards human-compatible autonomous car: A study of non-verbal Turing test in automated driving with affective transition modelling

Dec 10, 2022

Abstract:Autonomous cars are indispensable when humans go further down the hands-free route. Although existing literature highlights that the acceptance of the autonomous car will increase if it drives in a human-like manner, little research offers the naturalistic experience from a passenger's seat perspective to examine the human likeness of current autonomous cars. The present study tested whether the AI driver could create a human-like ride experience for passengers based on 69 participants' feedback in a real-road scenario. We designed a ride experience-based version of the non-verbal Turing test for automated driving. Participants rode in autonomous cars (driven by either human or AI drivers) as passengers and judged whether the driver was human or AI. The AI driver failed to pass our test because passengers detected the AI driver above chance. In contrast, when the human driver drove the car, the passengers' judgement was around chance. We further investigated how human passengers ascribe humanness in our test. Based on Lewin's field theory, we advanced a computational model combining signal detection theory with pre-trained language models to predict passengers' humanness rating behaviour. We employed affective transition between pre-study baseline emotions and corresponding post-stage emotions as the signal strength of our model. Results showed that the passengers' ascription of humanness would increase with the greater affective transition. Our study suggested an important role of affective transition in passengers' ascription of humanness, which might become a future direction for autonomous driving.
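The "above chance" versus "around chance" judgement in the abstract above can be illustrated with the standard sensitivity index from signal detection theory. This is only a minimal sketch of the textbook d' measure, not the authors' model (which also involves pre-trained language models and affective transition). The function name `d_prime`, the log-linear correction, and all counts are assumptions made for illustration.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (add 0.5 to each cell) keeps both rates away
    from 0 and 1, where the inverse normal CDF is undefined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts, NOT data from the study.
# "Signal" trials are rides with the AI driver; answering "AI" is a hit.
chance = d_prime(hits=10, misses=10, false_alarms=10, correct_rejections=10)
above_chance = d_prime(hits=16, misses=4, false_alarms=6, correct_rejections=14)
```

A d' near zero means passengers cannot tell the two drivers apart, while a clearly positive d' means the AI driver is detected more often than guessing would allow.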

A Practical Two-stage Ranking Framework for Cross-market Recommendation

Apr 27, 2022

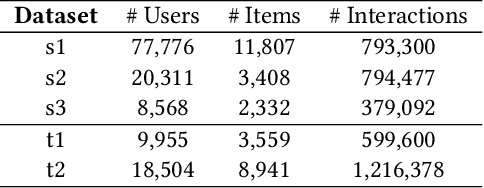

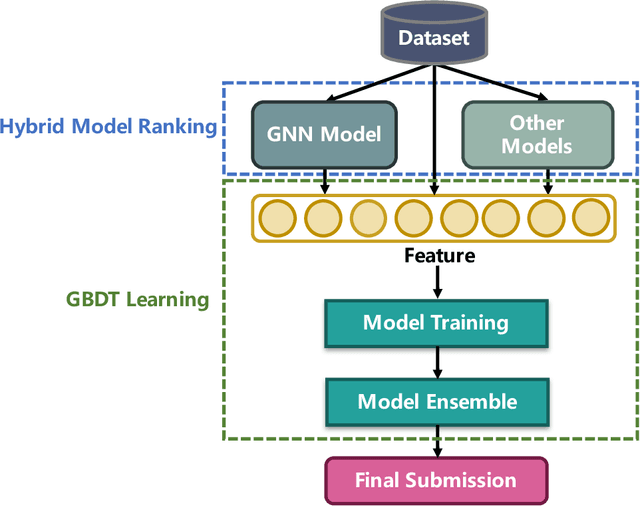

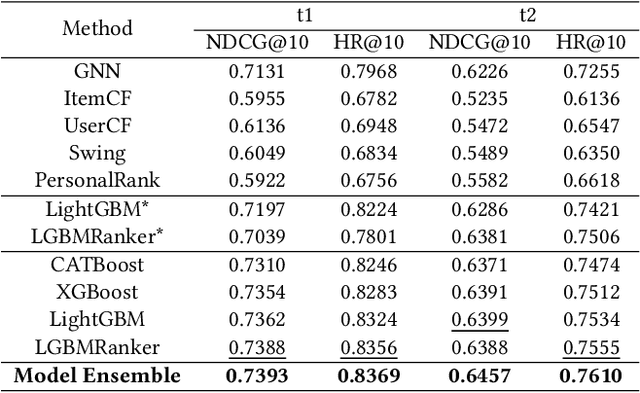

Abstract:Cross-market recommendation aims to recommend products to users in a resource-scarce target market by leveraging user behaviors from similar resource-rich markets, which is crucial for E-commerce companies but has received less research attention. In this paper, we present our detailed solution adopted in the cross-market recommendation contest, i.e., WSDM CUP 2022. To better utilize collaborative signals and similarities between target and source markets, we carefully consider multiple features as well as stacking learning models consisting of deep graph recommendation models (Graph Neural Network, DeepWalk, etc.) and traditional recommendation models (ItemCF, UserCF, Swing, etc.). Furthermore, we adopt tree-based ensembling methods, e.g., LightGBM, which show superior performance in prediction tasks, to generate the final results. We conduct comprehensive experiments on the XMRec dataset, verifying the effectiveness of our model. The proposed solution of our team WSDM_Coggle_ was selected as the second-place submission.

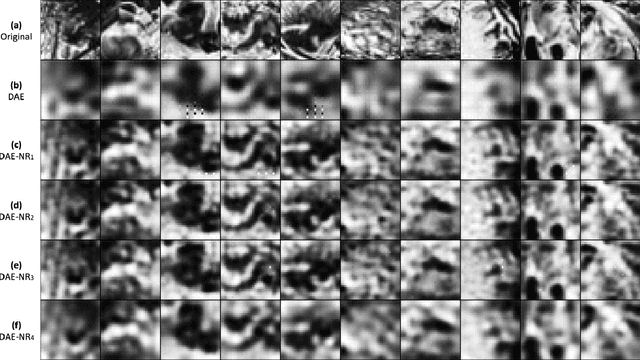

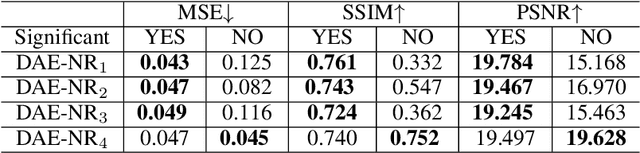

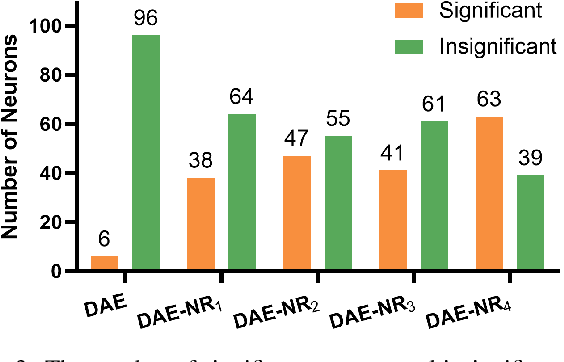

Deep Auto-encoder with Neural Response

Nov 30, 2021

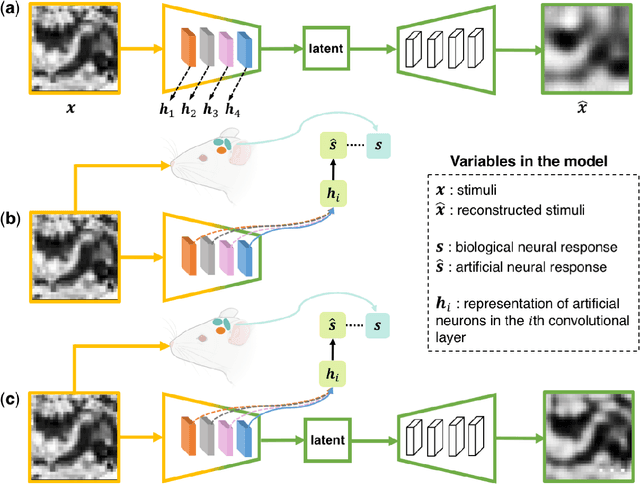

Abstract:Artificial intelligence and neuroscience are deeply interactive. Artificial neural networks (ANNs) have been a versatile tool to study the neural representation in the ventral visual stream, and the knowledge in neuroscience in return inspires ANN models to improve performance in the task. However, how to merge these two directions into a unified model has been less studied. Here, we propose a hybrid model, called deep auto-encoder with the neural response (DAE-NR), which incorporates the information from the visual cortex into ANNs to achieve better image reconstruction and higher neural representation similarity between biological and artificial neurons. Specifically, the same visual stimuli (i.e., natural images) are input to both the mouse brain and DAE-NR. The DAE-NR jointly learns to map a specific layer of the encoder network to the biological neural responses in the ventral visual stream by a mapping function and to reconstruct the visual input by the decoder. Our experiments demonstrate that, if and only if trained with the joint learning, DAE-NRs can (i) improve the performance of image reconstruction and (ii) increase the representational similarity between biological neurons and artificial neurons. The DAE-NR offers a new perspective on the integration of computer vision and visual neuroscience.
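The joint learning described above combines two objectives: reconstructing the input image and predicting the recorded neural responses from an encoder layer. One plausible way to write such an objective is a weighted sum of two errors. The sketch below assumes mean squared error for both terms and a scalar `weight`; these choices and all names are hypothetical and are not taken from the paper.

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def joint_loss(image, reconstruction, neural_response, predicted_response,
               weight=1.0):
    """Weighted sum of a reconstruction term and a neural-prediction term.

    `weight` (an assumed hyperparameter) trades off fitting the image
    against fitting the biological responses.
    """
    reconstruction_term = mse(image, reconstruction)
    neural_term = mse(neural_response, predicted_response)
    return reconstruction_term + weight * neural_term


# Toy values for illustration only.
loss = joint_loss(
    image=[0.0, 1.0],
    reconstruction=[0.0, 0.5],
    neural_response=[1.0, 2.0],
    predicted_response=[1.0, 1.0],
    weight=2.0,
)
```

Setting `weight=0.0` reduces this to a plain auto-encoder objective, which gives a simple baseline for comparing training with and without the neural term.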

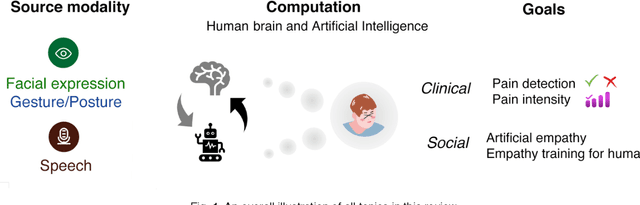

How Can AI Recognize Pain and Express Empathy

Oct 08, 2021

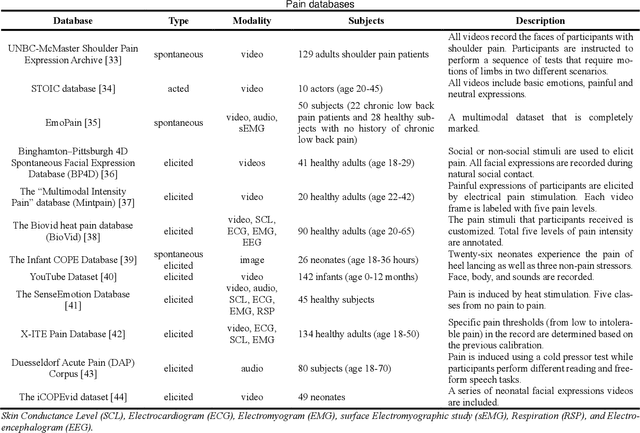

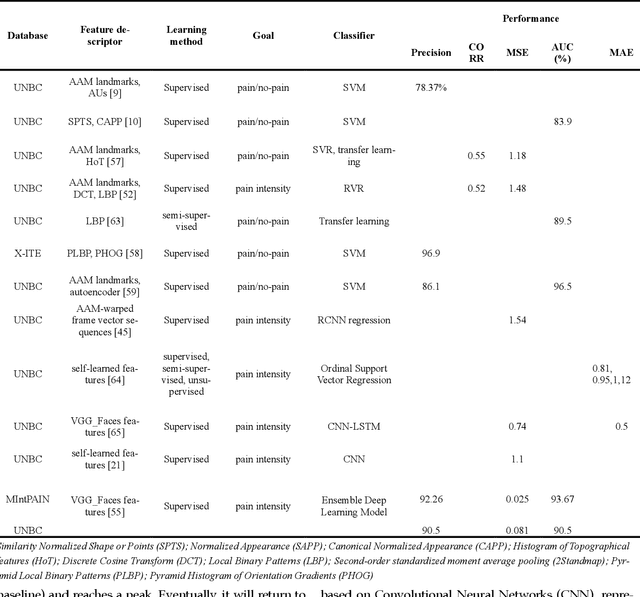

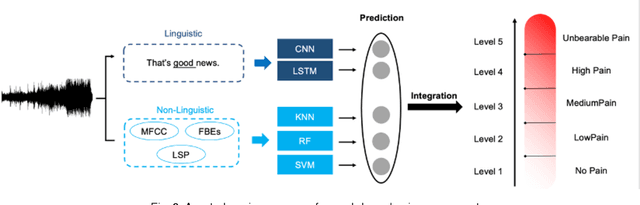

Abstract:Sensory and emotional experiences such as pain and empathy are relevant to mental and physical health. The current drive for automated pain recognition is motivated by a growing number of healthcare requirements, and demands for social interaction make it increasingly essential. Despite being trending areas, they have not been explored in great detail. Over the past decades, behavioral science and neuroscience have uncovered mechanisms that explain the manifestations of pain. Recently, artificial intelligence research has also made empathic machine learning methods approachable. Generally, the purpose of this paper is to review the current developments for computational pain recognition and artificial empathy implementation. Our discussion covers the following topics: How can AI recognize pain from unimodality and multimodality? Is it necessary for AI to be empathic? How can we create an AI agent with proactive and reactive empathy? This article explores the challenges and opportunities of real-world multimodal pain recognition from a psychological, neuroscientific, and artificial intelligence perspective. Finally, we identify possible future implementations of artificial empathy and analyze how humans might benefit from an AI agent equipped with empathy.

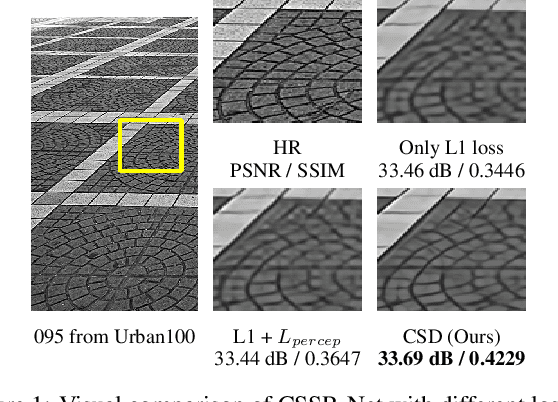

Towards Compact Single Image Super-Resolution via Contrastive Self-distillation

May 25, 2021

Abstract:Convolutional neural networks (CNNs) are highly successful for super-resolution (SR) but often require sophisticated architectures with heavy memory cost and computational overhead, which significantly restricts their practical deployment on resource-limited devices. In this paper, we propose a novel contrastive self-distillation (CSD) framework to simultaneously compress and accelerate various off-the-shelf SR models. In particular, a channel-splitting super-resolution network can first be constructed from a target teacher network as a compact student network. Then, we propose a novel contrastive loss to improve the quality of SR images and PSNR/SSIM via explicit knowledge transfer. Extensive experiments demonstrate that the proposed CSD scheme effectively compresses and accelerates several standard SR models such as EDSR, RCAN and CARN. Code is available at https://github.com/Booooooooooo/CSD.

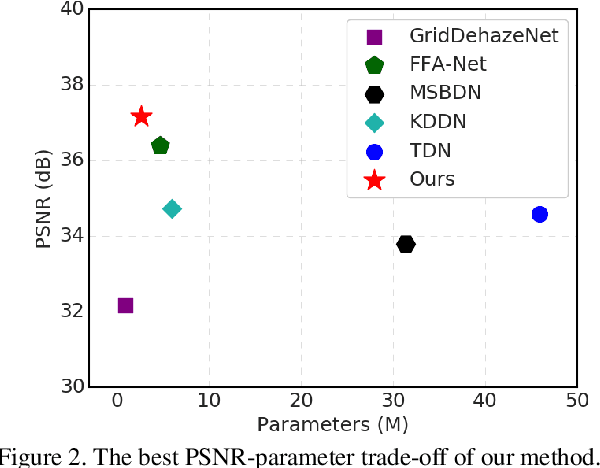

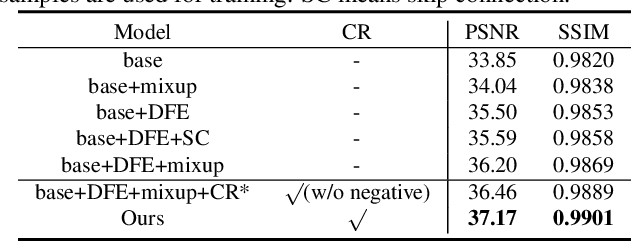

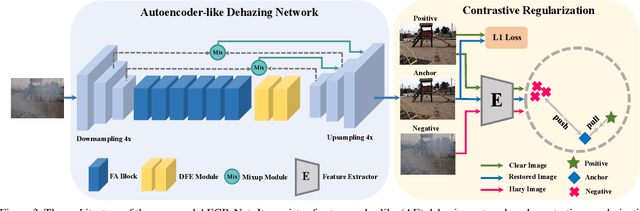

Contrastive Learning for Compact Single Image Dehazing

Apr 19, 2021

Abstract:Single image dehazing is a challenging ill-posed problem due to the severe information degeneration. However, existing deep learning based dehazing methods only adopt clear images as positive samples to guide the training of the dehazing network, while negative information is unexploited. Moreover, most of them focus on strengthening the dehazing network with an increase of depth and width, leading to a significant requirement of computation and memory. In this paper, we propose a novel contrastive regularization (CR) built upon contrastive learning to exploit both the information of hazy images and clear images as negative and positive samples, respectively. CR ensures that the restored image is pulled closer to the clear image and pushed far away from the hazy image in the representation space. Furthermore, considering the trade-off between performance and memory storage, we develop a compact dehazing network based on an autoencoder-like (AE) framework. It involves an adaptive mixup operation and a dynamic feature enhancement module, which can benefit from preserving information flow adaptively and expanding the receptive field to improve the network's transformation capability, respectively. We term our dehazing network with autoencoder and contrastive regularization as AECR-Net. The extensive experiments on synthetic and real-world datasets demonstrate that our AECR-Net surpasses the state-of-the-art approaches. The code is released at https://github.com/GlassyWu/AECR-Net.
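The pull/push behaviour of the contrastive regularization described above can be sketched as a ratio of distances in a feature space: distance to the clear (positive) image in the numerator, distance to the hazy (negative) image in the denominator. The sketch below is an assumption-laden illustration, not the released AECR-Net code: the L1 distance, the per-layer `weights`, the `eps` term, and the toy feature vectors are all hypothetical, and real features would come from a pre-trained network.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def contrastive_regularization(restored_feats, clear_feats, hazy_feats,
                               weights, eps=1e-7):
    """Sum over feature layers of w * d(restored, clear) / d(restored, hazy).

    Minimizing this pulls the restored image toward the clear image
    (small numerator) and pushes it away from the hazy image (large
    denominator). `eps` avoids division by zero.
    """
    total = 0.0
    for restored, clear, hazy, w in zip(restored_feats, clear_feats,
                                        hazy_feats, weights):
        total += w * l1_distance(restored, clear) / (l1_distance(restored, hazy) + eps)
    return total


# Toy single-layer features for illustration only.
clear = [[1.0, 1.0]]
hazy = [[0.0, 0.0]]
good_restoration = [[0.9, 0.9]]  # close to the clear image
poor_restoration = [[0.1, 0.1]]  # close to the hazy image

good_cr = contrastive_regularization(good_restoration, clear, hazy, [1.0])
poor_cr = contrastive_regularization(poor_restoration, clear, hazy, [1.0])
```

In this toy setup the restoration near the clear image receives a much smaller penalty than the one near the hazy image, which is the ordering the regularizer is meant to enforce.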

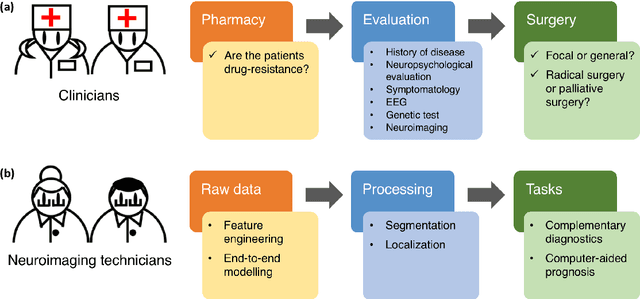

Machine Learning Applications on Neuroimaging for Diagnosis and Prognosis of Epilepsy: A Review

Feb 05, 2021

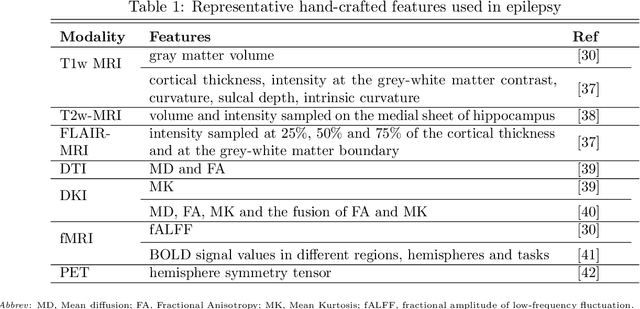

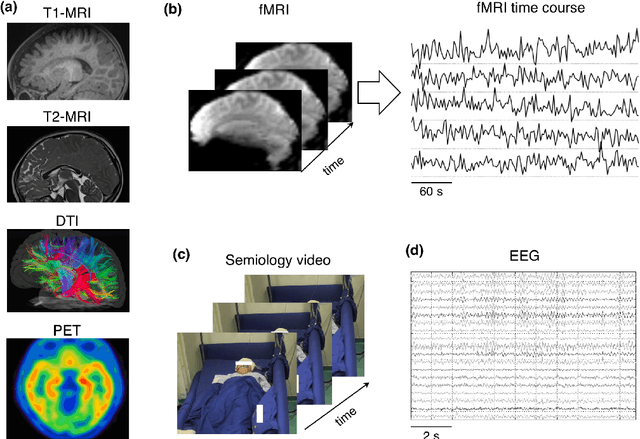

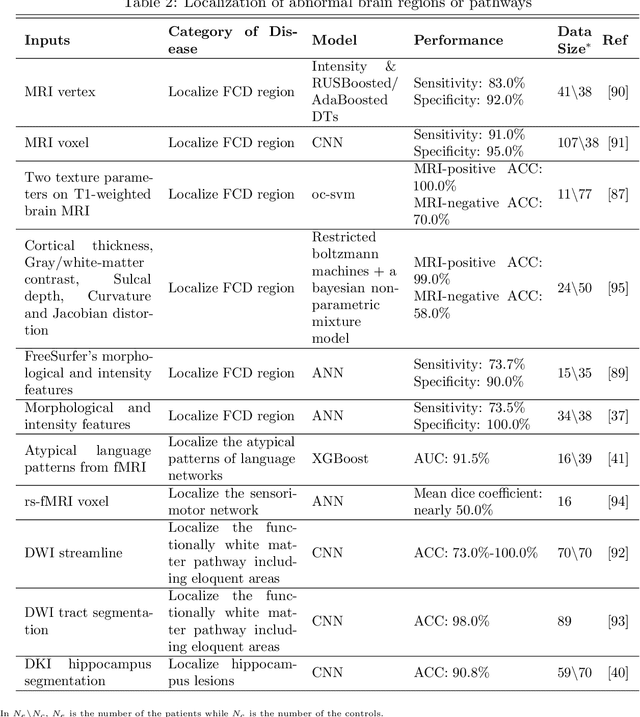

Abstract:Machine learning is playing an increasingly important role in medical image analysis, spawning new advances in neuroimaging clinical applications. However, previous work and reviews have mainly focused on electrophysiological signals such as EEG or SEEG; the potential of neuroimaging in epilepsy research has been largely overlooked despite its wide use in clinical practice. In this review, we highlight the interactions between neuroimaging and machine learning in the context of epilepsy diagnosis and prognosis. We first outline typical neuroimaging modalities used in epilepsy clinics, e.g., MRI, DTI, fMRI and PET. We then introduce two approaches to applying machine learning methods to neuroimaging data: the two-step compositional approach, which combines feature engineering and a machine learning classifier, and the end-to-end approach, which usually relies on deep learning. We then present a detailed review of machine learning tasks on epileptic images, such as segmentation, localization and lateralization tasks, as well as tasks directly related to diagnosis and prognosis. In the end, we discuss current achievements, challenges, and potential future directions in the field, with the hope of paving the way to computer-aided diagnosis and prognosis of epilepsy.
