Zhaoning Li

2SFGL: A Simple And Robust Protocol For Graph-Based Fraud Detection

Oct 12, 2023

Abstract: Financial crime detection using graph learning improves financial safety and efficiency. However, criminals may commit financial crimes across different institutions to avoid detection, which makes detection harder for financial institutions that use only local data for graph learning. As most financial institutions are subject to strict data privacy regulations, the training data is often isolated and conventional learning technology cannot handle the problem. Federated learning (FL) allows multiple institutions to train a model without revealing their datasets to each other, hence ensuring data privacy protection. In this paper, we propose a novel two-stage approach to federated graph learning (2SFGL): the first stage of 2SFGL involves the virtual fusion of multiparty graphs, and the second involves model training and inference on the virtual graph. We evaluate our framework on a conventional fraud detection task based on the FraudAmazonDataset and FraudYelpDataset. Experimental results show that integrating a GCN (Graph Convolutional Network) with our 2SFGL framework results in a 17.6%-30.2% increase in performance on several typical metrics compared to using FedAvg alone, while integrating GraphSAGE with 2SFGL results in a 6%-16.2% increase in performance compared to using FedAvg alone. We conclude that our proposed framework is a robust and simple protocol that can be easily integrated into pre-existing graph-based fraud detection methods.
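For readers unfamiliar with the FedAvg baseline mentioned in this abstract, the following is a minimal sketch of its aggregation step only: a server averages client parameters, weighting each client by its local sample count. It is not the 2SFGL protocol and does not cover graph fusion; the function name `fedavg` and the dictionary-of-lists parameter layout are assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical layout: each client's model is a dict of parameter name -> flat list of floats.
Weights = Dict[str, List[float]]


def fedavg(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Average client parameters, weighting each client by its local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Weights = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        # Weighted mean of each parameter entry across all clients.
        averaged[name] = [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


if __name__ == "__main__":
    clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
    print(fedavg(clients, [1, 3]))
```

In this toy call the second client holds three times as many samples as the first, so its parameters dominate the average. Only parameters leave each client; the raw data stays local.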

Towards human-compatible autonomous car: A study of non-verbal Turing test in automated driving with affective transition modelling

Dec 10, 2022

Abstract: Autonomous cars are indispensable when humans go further down the hands-free route. Although existing literature highlights that the acceptance of the autonomous car will increase if it drives in a human-like manner, sparse research offers the naturalistic experience from a passenger's seat perspective to examine the human likeness of current autonomous cars. The present study tested whether the AI driver could create a human-like ride experience for passengers based on 69 participants' feedback in a real-road scenario. We designed a ride experience-based version of the non-verbal Turing test for automated driving. Participants rode in autonomous cars (driven by either human or AI drivers) as a passenger and judged whether the driver was human or AI. The AI driver failed to pass our test because passengers detected the AI driver above chance. In contrast, when the human driver drove the car, the passengers' judgement was around chance. We further investigated how human passengers ascribe humanness in our test. Based on Lewin's field theory, we advanced a computational model combining signal detection theory with pre-trained language models to predict passengers' humanness rating behaviour. We employed affective transition between pre-study baseline emotions and corresponding post-stage emotions as the signal strength of our model. Results showed that the passengers' ascription of humanness would increase with the greater affective transition. Our study suggested an important role of affective transition in passengers' ascription of humanness, which might become a future direction for autonomous driving.
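As background for the signal detection theory mentioned in this abstract, the sketch below computes the classical sensitivity index d' from judgement counts. It is not the authors' computational model, which also involves pre-trained language models and affective transition. The mapping of "hit" to judging "AI" when the AI drove and "false alarm" to judging "AI" when a human drove is an assumption made for illustration, as is the log-linear correction.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate)."""
    # Log-linear correction (an assumption here): add 0.5 to each cell so that
    # rates of exactly 0 or 1 do not produce infinite z-scores.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(fa_rate)


if __name__ == "__main__":
    # Hypothetical counts, not data from the study.
    print(d_prime(hits=18, misses=2, false_alarms=2, correct_rejections=18))
```

A d' near zero corresponds to judgements at chance, while a clearly positive d' corresponds to detecting the AI driver above chance.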

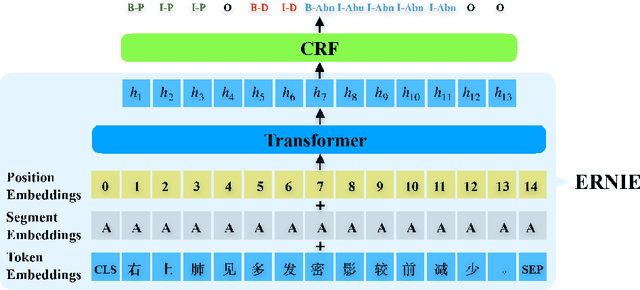

Fine-tuning ERNIE for chest abnormal imaging signs extraction

Nov 08, 2020

Abstract: Chest imaging reports describe the results of chest radiography procedures. Automatic extraction of abnormal imaging signs from chest imaging reports has a pivotal role in clinical research and a wide range of downstream medical tasks. However, there are few studies on information extraction from Chinese chest imaging reports. In this paper, we formulate chest abnormal imaging sign extraction as a sequence tagging and matching problem. On this basis, we propose a transferred abnormal imaging signs extractor with pretrained ERNIE as the backbone, named EASON (fine-tuning ERNIE with CRF for Abnormal Signs ExtractiON), which can address the problem of data insufficiency. In addition, to assign the attributes (the body part and degree) to corresponding abnormal imaging signs from the results of the sequence tagging model, we design a simple but effective tag2relation algorithm based on the nature of chest imaging report text. We evaluate our method on the corpus provided by a medical big data company, and the experimental results demonstrate that our method achieves significant and consistent improvement compared to other baselines.

* 30 pages, 5 figures, 8 tables

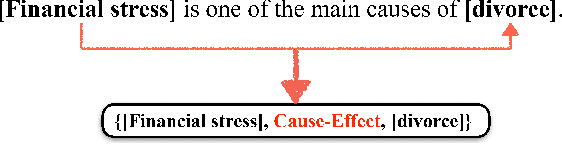

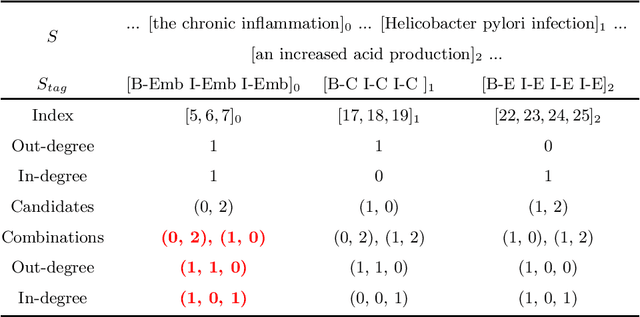

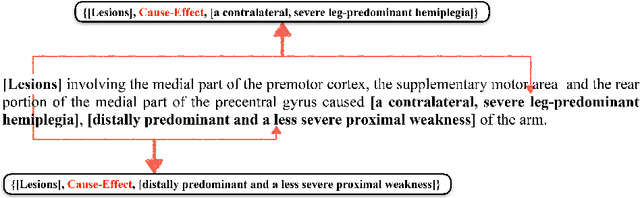

Causality Extraction based on Self-Attentive BiLSTM-CRF with Transferred Embeddings

Apr 29, 2019

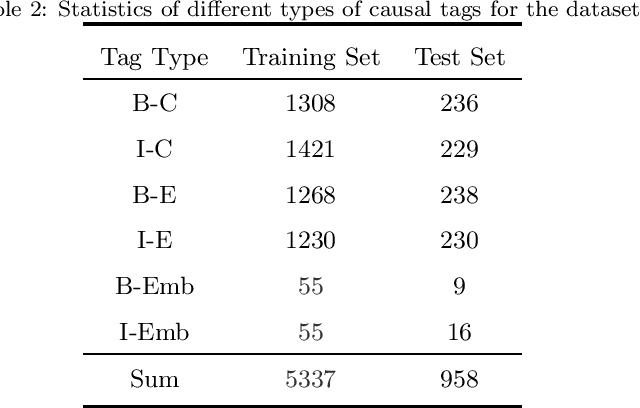

Abstract: Causality extraction from natural language texts is a challenging open problem in artificial intelligence. Existing methods utilize patterns, constraints, and machine learning techniques to extract causality, but they depend heavily on domain knowledge and require considerable human effort and time for feature engineering. In this paper, we formulate causality extraction as a sequence tagging problem based on a novel causality tagging scheme. On this basis, we propose a neural causality extractor with a BiLSTM-CRF model as the backbone, named SCIFI (Self-Attentive BiLSTM-CRF with Flair Embeddings), which can directly extract Cause and Effect without extracting candidate causal pairs and identifying their relations separately. To tackle the problem of data insufficiency, we transfer contextual string embeddings, also known as Flair embeddings, which are trained on a large corpus, to our task. In addition, to improve the performance of causality extraction, we introduce the multi-head self-attention mechanism into SCIFI to learn the dependencies between causal words. We evaluate our method on a public dataset, and experimental results demonstrate that our method achieves significant and consistent improvement compared to other baselines.
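To illustrate what treating extraction as sequence tagging means in practice, the sketch below decodes per-token tags into labelled text spans. The abstract does not specify the paper's tagging scheme, so the BIO-style tags used here (`B-C`/`I-C` for Cause, `B-E`/`I-E` for Effect, `O` for everything else) and the function name `decode_spans` are assumptions for illustration only.

```python
from typing import List, Optional, Tuple


def decode_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group tokens into (label, text) spans from hypothetical BIO-style tags."""
    spans: List[Tuple[str, str]] = []
    label: Optional[str] = None
    buffer: List[str] = []

    def flush() -> None:
        # Emit the span collected so far, if any.
        if label is not None and buffer:
            spans.append((label, " ".join(buffer)))

    for token, tag in zip(tokens, tags):
        prefix, _, tag_label = tag.partition("-")
        if prefix == "B":
            # A B- tag always starts a new span.
            flush()
            label, buffer = tag_label, [token]
        elif prefix == "I" and tag_label == label:
            # An I- tag continues the current span of the same label.
            buffer.append(token)
        else:
            # "O" or an inconsistent I- tag ends the current span.
            flush()
            label, buffer = None, []
    flush()
    return spans


if __name__ == "__main__":
    tokens = ["Heavy", "rain", "caused", "severe", "flooding"]
    tags = ["B-C", "I-C", "O", "B-E", "I-E"]
    print(decode_spans(tokens, tags))
```

In a full system the tags would come from the tagging model's predictions; here they are written by hand to show only the decoding step.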
