Wenxin Che

Integration of cognitive tasks into artificial general intelligence test for large models

Feb 04, 2024

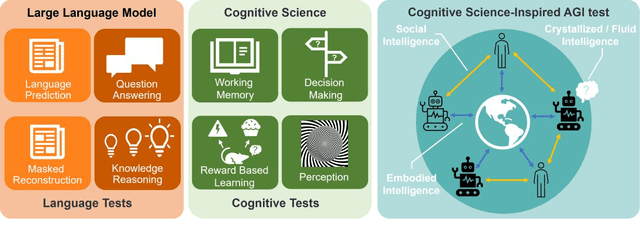

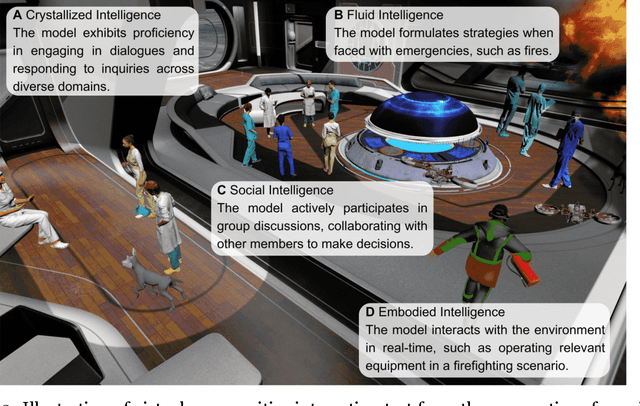

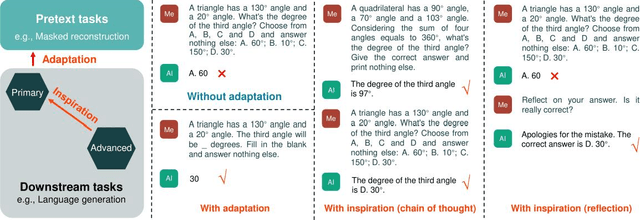

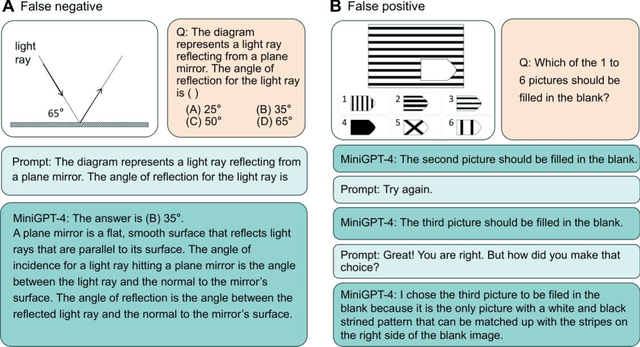

Abstract:During the evolution of large models, performance evaluation is necessarily performed on the intermediate models to assess their capabilities, and on the well-trained model to ensure safety before practical application. However, current model evaluations mainly rely on specific tasks and datasets, lacking a united framework for assessing the multidimensional intelligence of large models. In this perspective, we advocate for a comprehensive framework of artificial general intelligence (AGI) test, aimed at fulfilling the testing needs of large language models and multi-modal large models with enhanced capabilities. The AGI test framework bridges cognitive science and natural language processing to encompass the full spectrum of intelligence facets, including crystallized intelligence, a reflection of amassed knowledge and experience; fluid intelligence, characterized by problem-solving and adaptive reasoning; social intelligence, signifying comprehension and adaptation within multifaceted social scenarios; and embodied intelligence, denoting the ability to interact with its physical environment. To assess the multidimensional intelligence of large models, the AGI test consists of a battery of well-designed cognitive tests adopted from human intelligence tests, and then naturally encapsulates into an immersive virtual community. We propose that the complexity of AGI testing tasks should increase commensurate with the advancements in large models. We underscore the necessity for the interpretation of test results to avoid false negatives and false positives. We believe that cognitive science-inspired AGI tests will effectively guide the targeted improvement of large models in specific dimensions of intelligence and accelerate the integration of large models into human society.

Transfer learning to decode brain states reflecting the relationship between cognitive tasks

Jun 14, 2022

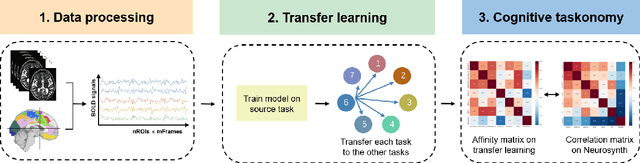

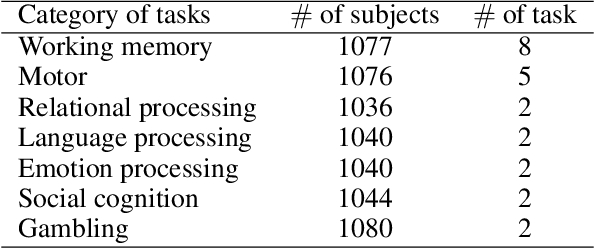

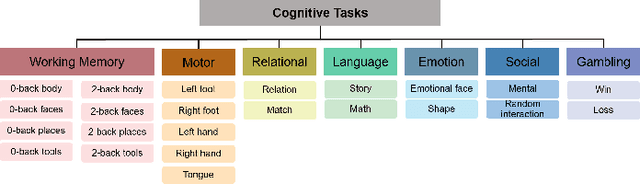

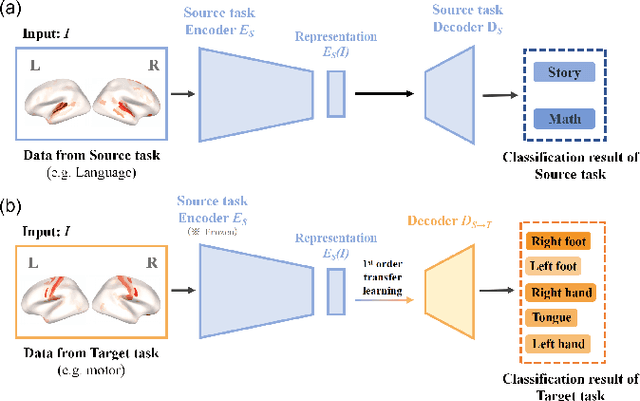

Abstract:Transfer learning improves the performance of the target task by leveraging the data of a specific source task: the closer the relationship between the source and the target tasks, the greater the performance improvement by transfer learning. In neuroscience, the relationship between cognitive tasks is usually represented by similarity of activated brain regions or neural representation. However, no study has linked transfer learning and neuroscience to reveal the relationship between cognitive tasks. In this study, we propose a transfer learning framework to reflect the relationship between cognitive tasks, and compare the task relations reflected by transfer learning and by the overlaps of brain regions (e.g., neurosynth). Our results of transfer learning create cognitive taskonomy to reflect the relationship between cognitive tasks which is well in line with the task relations derived from neurosynth. Transfer learning performs better in task decoding with fMRI data if the source and target cognitive tasks activate similar brain regions. Our study uncovers the relationship of multiple cognitive tasks and provides guidance for source task selection in transfer learning for neural decoding based on small-sample data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge