Zi Wang

DMP-3DAD: Cross-Category 3D Anomaly Detection via Realistic Depth Map Projection with Few Normal Samples

Feb 11, 2026

Abstract:Cross-category anomaly detection for 3D point clouds aims to determine whether an unseen object belongs to a target category using only a few normal examples. Most existing methods rely on category-specific training, which limits their flexibility in few-shot scenarios. In this paper, we propose DMP-3DAD, a training-free framework for cross-category 3D anomaly detection based on multi-view realistic depth map projection. Specifically, by converting point clouds into a fixed set of realistic depth images, our method leverages a frozen CLIP visual encoder to extract multi-view representations and performs anomaly detection via weighted feature similarity, which does not require any fine-tuning or category-dependent adaptation. Extensive experiments on the ShapeNetPart dataset demonstrate that DMP-3DAD achieves state-of-the-art performance in the few-shot setting. The results show that the proposed approach provides a simple yet effective solution for practical cross-category 3D anomaly detection.
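The scoring step described above (multi-view features compared by weighted similarity against a few normal samples) can be sketched roughly as follows. This is only an illustration under stated assumptions: the abstract does not specify the weighting scheme or how similarities are aggregated, so the per-view weights, the max-over-references rule, and the function names here are hypothetical, and plain lists stand in for the frozen CLIP features.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0

def weighted_view_similarity(test_views, ref_views, weights):
    """Weighted average of per-view cosine similarities.

    test_views and ref_views hold one feature vector per depth-map view,
    in the same view order. The weights are an assumption of this sketch.
    """
    total = sum(weights)
    return sum(w * cosine(t, r)
               for w, t, r in zip(weights, test_views, ref_views)) / total

def anomaly_score(test_views, normal_samples, weights):
    """Assumed rule: 1 minus the best similarity to any normal reference."""
    best = max(weighted_view_similarity(test_views, ref, weights)
               for ref in normal_samples)
    return 1.0 - best
```

With this rule, a test object whose view features match one of the normal references scores near 0, and an object dissimilar to all of them scores closer to 1.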

Interpreting and Controlling Model Behavior via Constitutions for Atomic Concept Edits

Jan 23, 2026

Abstract:We introduce a black-box interpretability framework that learns a verifiable constitution: a natural language summary of how changes to a prompt affect a model's specific behavior, such as its alignment, correctness, or adherence to constraints. Our method leverages atomic concept edits (ACEs), which are targeted operations that add, remove, or replace an interpretable concept in the input prompt. By systematically applying ACEs and observing the resulting effects on model behavior across various tasks, our framework learns a causal mapping from edits to predictable outcomes. This learned constitution provides deep, generalizable insights into the model. Empirically, we validate our approach across diverse tasks, including mathematical reasoning and text-to-image alignment, for controlling and understanding model behavior. We find that for text-to-image generation, GPT-Image tends to focus on grammatical adherence, while Imagen 4 prioritizes atmospheric coherence. In mathematical reasoning, distractor variables confuse GPT-5 but leave Gemini 2.5 models and o4-mini largely unaffected. Moreover, our results show that the learned constitutions are highly effective for controlling model behavior, achieving an average 1.86x boost in success rate over methods that do not use constitutions.
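The three ACE operations (add, remove, replace) and the idea of measuring an edit's effect on a behavior can be illustrated with a toy sketch. Representing a prompt as a list of concept strings, and the `behavior` scoring function, are assumptions made for illustration only; the abstract does not describe the actual prompt representation or how the constitution is learned.

```python
def add_concept(concepts, concept):
    """Return a new concept list with `concept` appended (if not already present)."""
    return list(concepts) if concept in concepts else list(concepts) + [concept]

def remove_concept(concepts, concept):
    """Return a new concept list without `concept`."""
    return [c for c in concepts if c != concept]

def replace_concept(concepts, old, new):
    """Return a new concept list with every `old` swapped for `new`."""
    return [new if c == old else c for c in concepts]

def effect_of_edit(behavior, concepts, edited):
    """Change in a (hypothetical) behavior score caused by one edit."""
    return behavior(edited) - behavior(concepts)
```

For example, with `behavior = lambda cs: float("at night" in cs)`, adding the concept `"at night"` to a prompt that lacks it yields an effect of 1.0 under this toy score.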

Enabling Ultra-Fast Cardiovascular Imaging Across Heterogeneous Clinical Environments with a Generalist Foundation Model and Multimodal Database

Dec 25, 2025

Abstract:Multimodal cardiovascular magnetic resonance (CMR) imaging provides comprehensive and non-invasive insights into cardiovascular disease (CVD) diagnosis and underlying mechanisms. Despite decades of advancements, its widespread clinical adoption remains constrained by prolonged scan times and heterogeneity across medical environments. This underscores the urgent need for a generalist reconstruction foundation model for ultra-fast CMR imaging, one capable of adapting across diverse imaging scenarios and serving as the essential substrate for all downstream analyses. To enable this goal, we curate MMCMR-427K, the largest and most comprehensive multimodal CMR k-space database to date, comprising 427,465 multi-coil k-space data paired with structured metadata across 13 international centers, 12 CMR modalities, 15 scanners, and 17 CVD categories in populations across three continents. Building on this unprecedented resource, we introduce CardioMM, a generalist reconstruction foundation model capable of dynamically adapting to heterogeneous fast CMR imaging scenarios. CardioMM unifies semantic contextual understanding with physics-informed data consistency to deliver robust reconstructions across varied scanners, protocols, and patient presentations. Comprehensive evaluations demonstrate that CardioMM achieves state-of-the-art performance in the internal centers and exhibits strong zero-shot generalization to unseen external settings. Even at imaging acceleration up to 24x, CardioMM reliably preserves key cardiac phenotypes, quantitative myocardial biomarkers, and diagnostic image quality, enabling a substantial increase in CMR examination throughput without compromising clinical integrity. Together, our open-access MMCMR-427K database and CardioMM framework establish a scalable pathway toward high-throughput, high-quality, and clinically accessible cardiovascular imaging.

VIRAL: Visual Sim-to-Real at Scale for Humanoid Loco-Manipulation

Nov 19, 2025

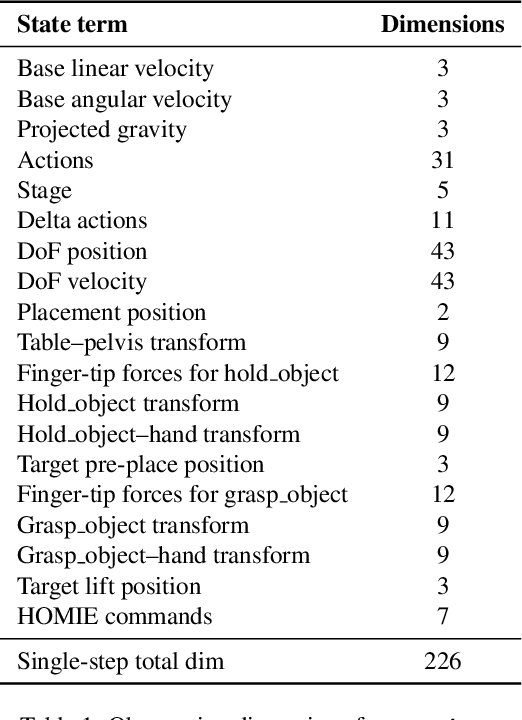

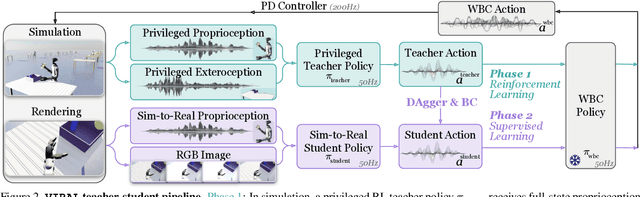

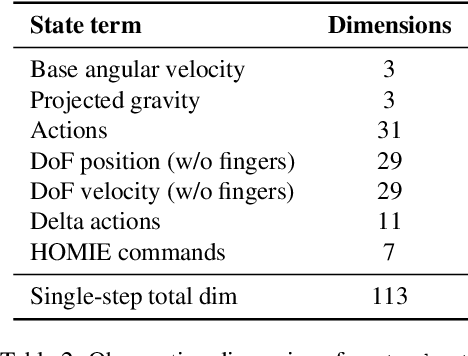

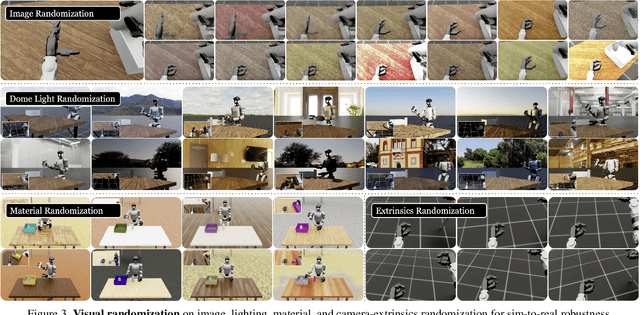

Abstract:A key barrier to the real-world deployment of humanoid robots is the lack of autonomous loco-manipulation skills. We introduce VIRAL, a visual sim-to-real framework that learns humanoid loco-manipulation entirely in simulation and deploys it zero-shot to real hardware. VIRAL follows a teacher-student design: a privileged RL teacher, operating on full state, learns long-horizon loco-manipulation using a delta action space and reference state initialization. A vision-based student policy is then distilled from the teacher via large-scale simulation with tiled rendering, trained with a mixture of online DAgger and behavior cloning. We find that compute scale is critical: scaling simulation to tens of GPUs (up to 64) makes both teacher and student training reliable, while low-compute regimes often fail. To bridge the sim-to-real gap, VIRAL combines large-scale visual domain randomization (over lighting, materials, camera parameters, image quality, and sensor delays) with real-to-sim alignment of the dexterous hands and cameras. Deployed on a Unitree G1 humanoid, the resulting RGB-based policy performs continuous loco-manipulation for up to 54 cycles, generalizing to diverse spatial and appearance variations without any real-world fine-tuning, and approaching expert-level teleoperation performance. Extensive ablations dissect the key design choices required to make RGB-based humanoid loco-manipulation work in practice.

SONIC: Supersizing Motion Tracking for Natural Humanoid Whole-Body Control

Nov 11, 2025

Abstract:Despite the rise of billion-parameter foundation models trained across thousands of GPUs, similar scaling gains have not been shown for humanoid control. Current neural controllers for humanoids remain modest in size, target a limited behavior set, and are trained on a handful of GPUs over several days. We show that scaling up model capacity, data, and compute yields a generalist humanoid controller capable of creating natural and robust whole-body movements. Specifically, we posit motion tracking as a natural and scalable task for humanoid control, leveraging dense supervision from diverse motion-capture data to acquire human motion priors without manual reward engineering. We build a foundation model for motion tracking by scaling along three axes: network size (from 1.2M to 42M parameters), dataset volume (over 100M frames, 700 hours of high-quality motion data), and compute (9k GPU hours). Beyond demonstrating the benefits of scale, we show the practical utility of our model through two mechanisms: (1) a real-time universal kinematic planner that bridges motion tracking to downstream task execution, enabling natural and interactive control, and (2) a unified token space that supports various motion input interfaces, such as VR teleoperation devices, human videos, and vision-language-action (VLA) models, all using the same policy. Scaling motion tracking exhibits favorable properties: performance improves steadily with increased compute and data diversity, and learned representations generalize to unseen motions, establishing motion tracking at scale as a practical foundation for humanoid control.

GenesisGeo: Technical Report

Sep 26, 2025

Abstract:We present GenesisGeo, an automated theorem prover in Euclidean geometry. We have open-sourced a large-scale geometry dataset of 21.8 million geometric problems, over 3 million of which contain auxiliary constructions. Specifically, we significantly accelerate the symbolic deduction engine DDARN by 120x through theorem matching, combined with a C++ implementation of its core components. Furthermore, we build our neuro-symbolic prover, GenesisGeo, upon Qwen3-0.6B-Base, which solves 24 of 30 problems (IMO silver medal level) in the IMO-AG-30 benchmark using a single model, and solves 26 problems (IMO gold medal level) with a dual-model ensemble.

Label-Consistent Dataset Distillation with Detector-Guided Refinement

Jul 17, 2025

Abstract:Dataset distillation (DD) aims to generate a compact yet informative dataset that achieves performance comparable to the original dataset, thereby reducing demands on storage and computational resources. Although diffusion models have made significant progress in dataset distillation, the generated surrogate datasets often contain samples with label inconsistencies or insufficient structural detail, leading to suboptimal downstream performance. To address these issues, we propose a detector-guided dataset distillation framework that explicitly leverages a pre-trained detector to identify and refine anomalous synthetic samples, thereby ensuring label consistency and improving image quality. Specifically, a detector model trained on the original dataset is employed to identify anomalous images exhibiting label mismatches or low classification confidence. For each defective image, multiple candidates are generated using a pre-trained diffusion model conditioned on the corresponding image prototype and label. The optimal candidate is then selected by jointly considering the detector's confidence score and dissimilarity to existing qualified synthetic samples, thereby ensuring both label accuracy and intra-class diversity. Experimental results demonstrate that our method can synthesize high-quality representative images with richer details, achieving state-of-the-art performance on the validation set.
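The candidate-selection step (jointly weighing detector confidence and dissimilarity to already-accepted samples) might look something like the sketch below. The additive scoring rule, the `diversity_weight` parameter, and the use of cosine similarity on feature vectors are assumptions made for illustration; the abstract does not give the actual combination rule.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0

def select_candidate(candidates, accepted_features, diversity_weight=0.5):
    """Pick the index of the best regenerated candidate.

    candidates: list of (feature_vector, detector_confidence) pairs.
    accepted_features: feature vectors of samples already judged acceptable.
    Assumed score: confidence + diversity_weight * dissimilarity, where
    dissimilarity is 1 minus the highest similarity to any accepted sample.
    """
    best_index, best_score = -1, float("-inf")
    for i, (feat, conf) in enumerate(candidates):
        if accepted_features:
            dissim = 1.0 - max(cosine(feat, f) for f in accepted_features)
        else:
            dissim = 1.0  # nothing to compare against yet
        score = conf + diversity_weight * dissim
        if score > best_score:
            best_index, best_score = i, score
    return best_index
```

Setting `diversity_weight=0` reduces this to picking the most confident candidate, which shows how the dissimilarity term trades a little confidence for intra-class diversity.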

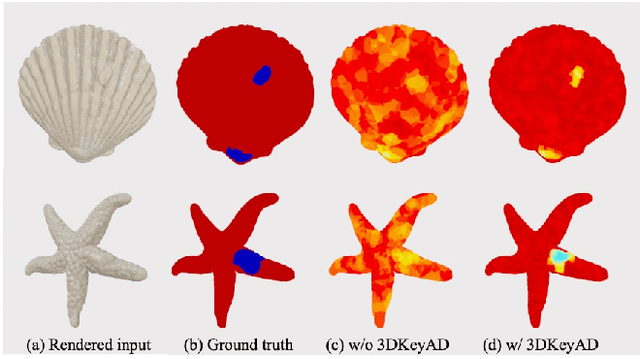

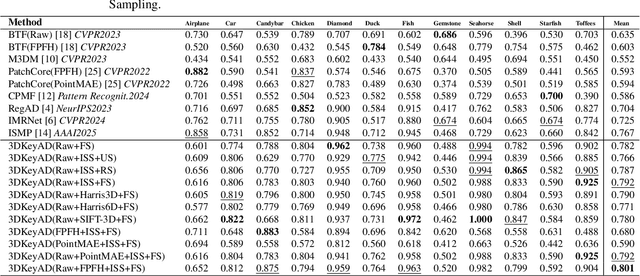

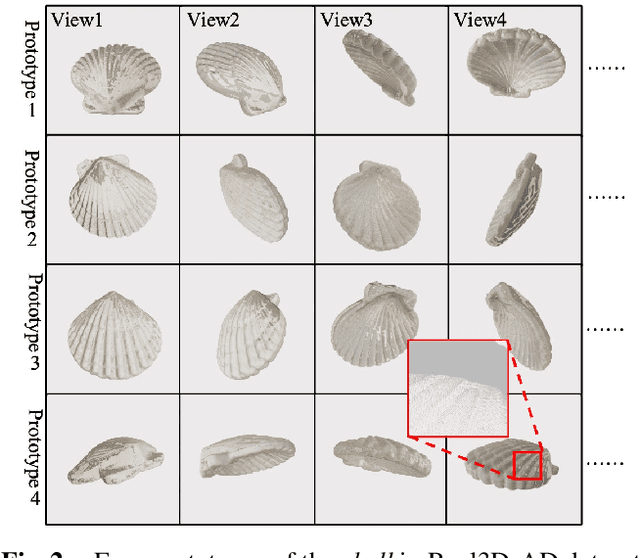

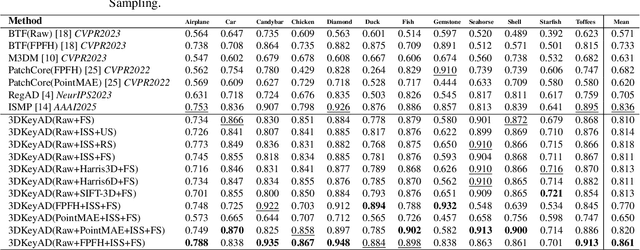

3DKeyAD: High-Resolution 3D Point Cloud Anomaly Detection via Keypoint-Guided Point Clustering

Jul 17, 2025

Abstract:High-resolution 3D point clouds are highly effective for detecting subtle structural anomalies in industrial inspection. However, their dense and irregular nature imposes significant challenges, including high computational cost, sensitivity to spatial misalignment, and difficulty in capturing localized structural differences. This paper introduces a registration-based anomaly detection framework that combines multi-prototype alignment with cluster-wise discrepancy analysis to enable precise 3D anomaly localization. Specifically, each test sample is first registered to multiple normal prototypes to enable direct structural comparison. To evaluate anomalies at a local level, clustering is performed over the point cloud, and similarity is computed between features from the test sample and the prototypes within each cluster. Rather than selecting cluster centroids randomly, a keypoint-guided strategy is employed, where geometrically informative points are chosen as centroids. This ensures that clusters are centered on feature-rich regions, enabling more meaningful and stable distance-based comparisons. Extensive experiments on the Real3D-AD benchmark demonstrate that the proposed method achieves state-of-the-art performance in both object-level and point-level anomaly detection, even using only raw features.
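A rough sketch of the cluster-wise comparison: points are grouped around keypoints used as centroids, and each cluster of the test sample is compared with the matching cluster of a prototype. Several things here are assumptions for illustration: the test sample is taken to be already registered to the prototype, keypoints are supplied by the caller (the keypoint detector is not shown), raw coordinates serve as the features, and the mean nearest-neighbor distance is a stand-in for whatever similarity measure the paper uses.

```python
import math

def assign_to_keypoints(points, keypoints):
    """Group points by their nearest keypoint (keypoints act as cluster centroids)."""
    clusters = [[] for _ in keypoints]
    for p in points:
        nearest = min(range(len(keypoints)),
                      key=lambda k: math.dist(p, keypoints[k]))
        clusters[nearest].append(p)
    return clusters

def cluster_discrepancy(test_cluster, proto_cluster):
    """Mean distance from each test point to its nearest prototype point."""
    if not test_cluster:
        return 0.0           # nothing to score in this region
    if not proto_cluster:
        return float("inf")  # test has structure where the prototype has none
    return sum(min(math.dist(p, q) for q in proto_cluster)
               for p in test_cluster) / len(test_cluster)

def clusterwise_scores(test_points, prototype_points, keypoints):
    """One discrepancy value per keypoint-centered cluster."""
    test_clusters = assign_to_keypoints(test_points, keypoints)
    proto_clusters = assign_to_keypoints(prototype_points, keypoints)
    return [cluster_discrepancy(t, r)
            for t, r in zip(test_clusters, proto_clusters)]
```

A cluster with a high value points to a local region where the test sample deviates from the prototype; with several prototypes, one plausible choice is to keep the minimum discrepancy per cluster.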

Harmonizing and Merging Source Models for CLIP-based Domain Generalization

Jun 11, 2025

Abstract:CLIP-based domain generalization aims to improve model generalization to unseen domains by leveraging the powerful zero-shot classification capabilities of CLIP and multiple source datasets. Existing methods typically train a single model across multiple source domains to capture domain-shared information. However, this paradigm inherently suffers from two types of conflicts: 1) sample conflicts, arising from noisy samples and extreme domain shifts among sources; and 2) optimization conflicts, stemming from competition and trade-offs during multi-source training. Both hinder generalization and lead to suboptimal solutions. Recent studies have shown that model merging can effectively mitigate the competition of multi-objective optimization and improve generalization performance. Inspired by these findings, we propose Harmonizing and Merging (HAM), a novel source model merging framework for CLIP-based domain generalization. During the training process of the source models, HAM enriches the source samples without conflicting samples, and harmonizes the update directions of all models. Then, a redundancy-aware historical model merging method is introduced to effectively integrate knowledge across all source models. HAM comprehensively consolidates source domain information while enabling mutual enhancement among source models, ultimately yielding a final model with optimal generalization capabilities. Extensive experiments on five widely used benchmark datasets demonstrate the effectiveness of our approach, achieving state-of-the-art performance.

DriveSuprim: Towards Precise Trajectory Selection for End-to-End Planning

Jun 07, 2025

Abstract:In complex driving environments, autonomous vehicles must navigate safely. Relying on a single predicted path, as in regression-based approaches, usually does not explicitly assess the safety of the predicted trajectory. Selection-based methods address this by generating and scoring multiple trajectory candidates and predicting the safety score for each, but face optimization challenges in precisely selecting the best option from thousands of possibilities and distinguishing subtle but safety-critical differences, especially in rare or underrepresented scenarios. We propose DriveSuprim to overcome these challenges and advance the selection-based paradigm through a coarse-to-fine strategy for progressive candidate filtering, a rotation-based augmentation method to improve robustness in out-of-distribution scenarios, and a self-distillation framework to stabilize training. DriveSuprim achieves state-of-the-art performance, reaching 93.5% PDMS in NAVSIM v1 and 87.1% EPDMS in NAVSIM v2 without extra data, demonstrating superior safety-critical capabilities, including collision avoidance and compliance with rules, while maintaining high trajectory quality in various driving scenarios.
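The coarse-to-fine filtering idea can be sketched in a few lines: a cheap scorer prunes the full candidate set to a shortlist, and a more precise scorer picks the winner from that shortlist. The function names, the two-stage structure with a single `keep` cutoff, and the callable scorers are assumptions for illustration; in DriveSuprim the scorers are learned models whose details are not given in the abstract.

```python
def coarse_to_fine_select(candidates, coarse_score, fine_score, keep=3):
    """Two-stage selection over trajectory candidates.

    coarse_score and fine_score are hypothetical callables returning a
    higher-is-better score for one candidate.
    """
    # Stage 1: keep only the top `keep` candidates under the cheap scorer.
    shortlist = sorted(candidates, key=coarse_score, reverse=True)[:keep]
    # Stage 2: spend the expensive scorer only on the shortlist.
    return max(shortlist, key=fine_score)
```

The point of the split is that the fine scorer only has to separate a handful of similar, high-quality candidates instead of ranking thousands at once.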
