Xiao Tan

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.

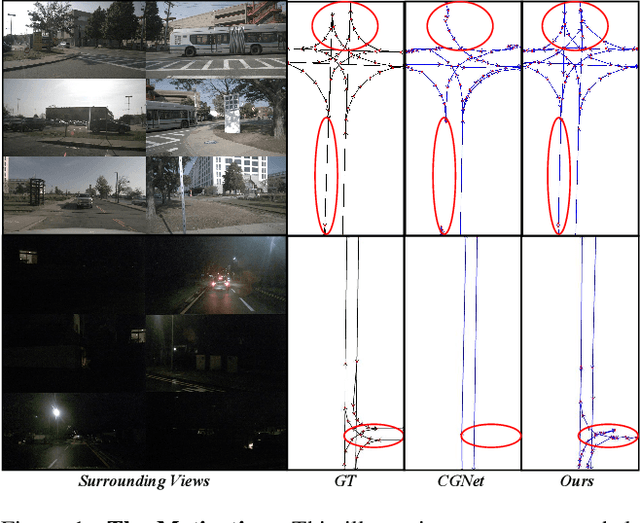

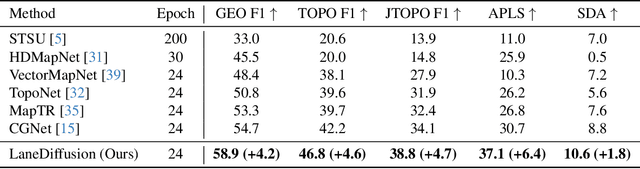

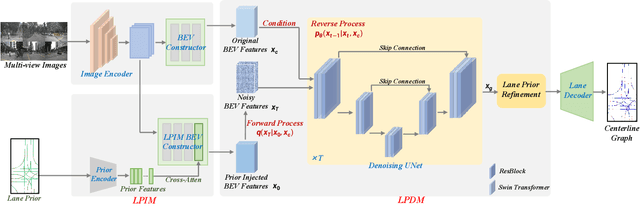

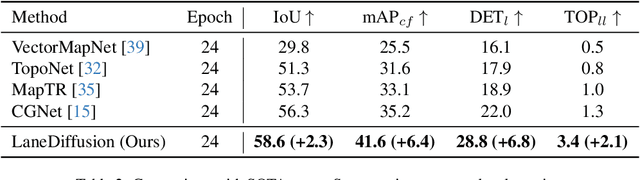

LaneDiffusion: Improving Centerline Graph Learning via Prior Injected BEV Feature Generation

Nov 09, 2025

Abstract:Centerline graphs, crucial for path planning in autonomous driving, are traditionally learned using deterministic methods. However, these methods often lack spatial reasoning and struggle with occluded or invisible centerlines. Generative approaches, despite their potential, remain underexplored in this domain. We introduce LaneDiffusion, a novel generative paradigm for centerline graph learning. LaneDiffusion innovatively employs diffusion models to generate lane centerline priors at the Bird's Eye View (BEV) feature level, instead of directly predicting vectorized centerlines. Our method integrates a Lane Prior Injection Module (LPIM) and a Lane Prior Diffusion Module (LPDM) to effectively construct diffusion targets and manage the diffusion process. Furthermore, vectorized centerlines and topologies are then decoded from these prior-injected BEV features. Extensive evaluations on the nuScenes and Argoverse2 datasets demonstrate that LaneDiffusion significantly outperforms existing methods, achieving improvements of 4.2%, 4.6%, 4.7%, 6.4% and 1.8% on fine-grained point-level metrics (GEO F1, TOPO F1, JTOPO F1, APLS and SDA) and 2.3%, 6.4%, 6.8% and 2.1% on segment-level metrics (IoU, mAP_cf, DET_l and TOP_ll). These results establish state-of-the-art performance in centerline graph learning, offering new insights into generative models for this task.

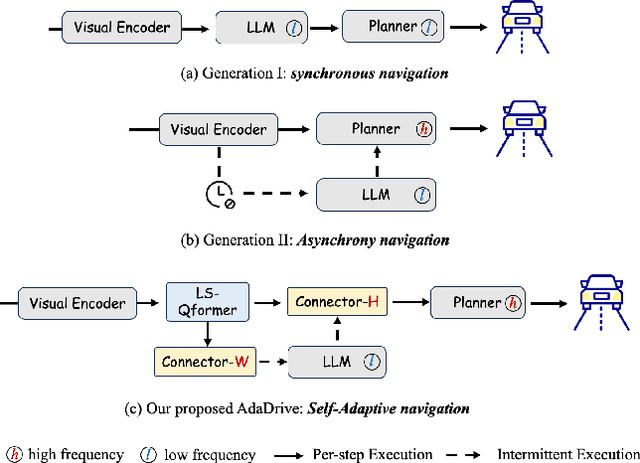

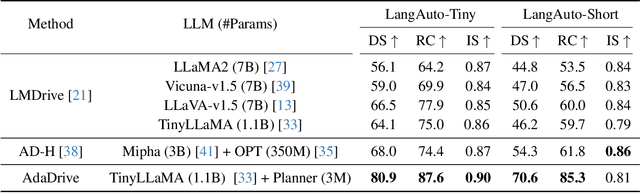

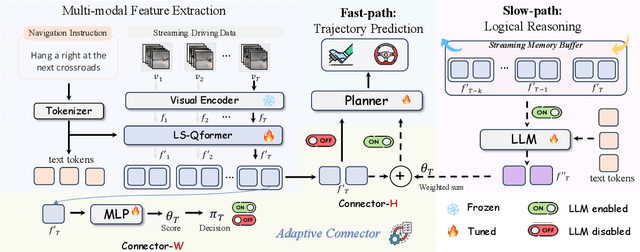

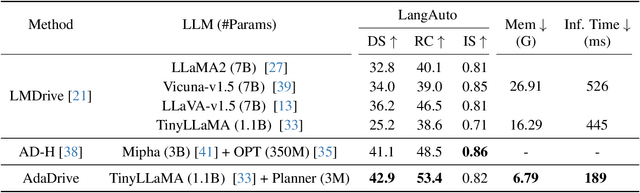

AdaDrive: Self-Adaptive Slow-Fast System for Language-Grounded Autonomous Driving

Nov 09, 2025

Abstract:Effectively integrating Large Language Models (LLMs) into autonomous driving requires a balance between leveraging high-level reasoning and maintaining real-time efficiency. Existing approaches either activate LLMs too frequently, causing excessive computational overhead, or use fixed schedules, failing to adapt to dynamic driving conditions. To address these challenges, we propose AdaDrive, an adaptively collaborative slow-fast framework that optimally determines when and how LLMs contribute to decision-making. (1) When to activate the LLM: AdaDrive employs a novel adaptive activation loss that dynamically determines LLM invocation based on a comparative learning mechanism, ensuring activation only in complex or critical scenarios. (2) How to integrate LLM assistance: Instead of rigid binary activation, AdaDrive introduces an adaptive fusion strategy that modulates a continuous, scaled LLM influence based on scene complexity and prediction confidence, ensuring seamless collaboration with conventional planners. Through these strategies, AdaDrive provides a flexible, context-aware framework that maximizes decision accuracy without compromising real-time performance. Extensive experiments on language-grounded autonomous driving benchmarks demonstrate that AdaDrive achieves state-of-the-art performance in terms of both driving accuracy and computational efficiency. Code is available at https://github.com/ReaFly/AdaDrive.

VLDrive: Vision-Augmented Lightweight MLLMs for Efficient Language-grounded Autonomous Driving

Nov 09, 2025

Abstract:Recent advancements in language-grounded autonomous driving have been significantly promoted by the sophisticated cognition and reasoning capabilities of large language models (LLMs). However, current LLM-based approaches encounter critical challenges: (1) Failure analysis reveals that frequent collisions and obstructions, stemming from limitations in visual representations, remain primary obstacles to robust driving performance. (2) The substantial parameters of LLMs pose considerable deployment hurdles. To address these limitations, we introduce VLDrive, a novel approach featuring a lightweight MLLM architecture with enhanced vision components. VLDrive achieves compact visual tokens through innovative strategies, including cycle-consistent dynamic visual pruning and memory-enhanced feature aggregation. Furthermore, we propose a distance-decoupled instruction attention mechanism to improve joint visual-linguistic feature learning, particularly for long-range visual tokens. Extensive experiments conducted in the CARLA simulator demonstrate VLDrive's effectiveness. Notably, VLDrive achieves state-of-the-art driving performance while reducing parameters by 81% (from 7B to 1.3B), yielding substantial driving score improvements of 15.4%, 16.8%, and 7.6% at tiny, short, and long distances, respectively, in closed-loop evaluations. Code is available at https://github.com/ReaFly/VLDrive.
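As a quick check of the stated parameter reduction, the figure follows from the two quoted model sizes. This assumes 7B and 1.3B are the relevant totals; the abstract gives only these rounded values.

$$\frac{7\,\mathrm{B} - 1.3\,\mathrm{B}}{7\,\mathrm{B}} = \frac{5.7}{7} \approx 0.814 \approx 81\%$$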

Safe Navigation under State Uncertainty: Online Adaptation for Robust Control Barrier Functions

Aug 26, 2025

Abstract:Measurements and state estimates are often imperfect in control practice, posing challenges for safety-critical applications, where safety guarantees rely on accurate state information. In the presence of estimation errors, several prior robust control barrier function (R-CBF) formulations have imposed strict conditions on the input. These methods can be overly conservative and can introduce issues such as infeasibility and high control effort. This work proposes a systematic method to improve R-CBFs, and demonstrates its advantages on a tracked vehicle that navigates among multiple obstacles. A primary contribution is a new optimization-based online parameter adaptation scheme that reduces the conservativeness of existing R-CBFs. In order to reduce the complexity of the parameter optimization, we merge several safety constraints into one unified numerical CBF via Poisson's equation. We further address the dual relative degree issue that typically causes difficulty in vehicle tracking. Experimental trials demonstrate the overall performance improvement of our approach over existing formulations.

Secure Safety Filter: Towards Safe Flight Control under Sensor Attacks

May 11, 2025

Abstract:Modern autopilot systems are prone to sensor attacks that can jeopardize flight safety. To mitigate this risk, we propose a modular solution: the secure safety filter, which extends the well-established control barrier function (CBF)-based safety filter to account for, and mitigate, sensor attacks. This module consists of a secure state reconstructor (which generates plausible states) and a safety filter (which computes the safe control input that is closest to the nominal one). Differing from existing work focusing on linear, noise-free systems, the proposed secure safety filter handles bounded measurement noise and, by leveraging reduced-order model techniques, is applicable to the nonlinear dynamics of drones. Software-in-the-loop simulations and drone hardware experiments demonstrate the effectiveness of the secure safety filter in rendering the system safe in the presence of sensor attacks.
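The "safe control input that is closest to the nominal one" can be illustrated with a minimal sketch of a standard CBF safety filter. This is not the paper's secure safety filter: it omits the secure state reconstructor, noise handling, and drone dynamics. It assumes a single affine constraint `a·u + b >= 0`, for which the closest safe input has a closed form (a Euclidean projection onto a half-space). The single-integrator model, the circular obstacle, and all function names are hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple


def safety_filter(u_nom: Sequence[float], a: Sequence[float], b: float) -> List[float]:
    """Return the input closest to u_nom (Euclidean norm) with a.u + b >= 0."""
    slack = sum(ai * ui for ai, ui in zip(a, u_nom)) + b
    if slack >= 0.0:
        # The nominal input already satisfies the constraint.
        return list(u_nom)
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("infeasible constraint: a = 0 and b < 0")
    # Project u_nom onto the boundary of the half-space {u : a.u + b >= 0}.
    scale = slack / norm_sq
    return [ui - scale * ai for ui, ai in zip(u_nom, a)]


def obstacle_constraint(
    x: Sequence[float], center: Sequence[float], radius: float, alpha: float
) -> Tuple[List[float], float]:
    """CBF constraint for the assumed model x_dot = u and h(x) = |x - c|^2 - r^2.

    Here h_dot = 2 (x - c).u, so h_dot + alpha * h >= 0 reads a.u + b >= 0
    with a = 2 (x - c) and b = alpha * h(x).
    """
    diff = [xi - ci for xi, ci in zip(x, center)]
    h = sum(d * d for d in diff) - radius ** 2
    return [2.0 * d for d in diff], alpha * h


# Hypothetical scenario: vehicle at (-2, 0) driving toward a unit obstacle at the origin.
a, b = obstacle_constraint([-2.0, 0.0], [0.0, 0.0], radius=1.0, alpha=1.0)
u_safe = safety_filter([2.0, 0.0], a, b)
```

In this scenario the nominal input drives straight at the obstacle, so the filter scales back the approach speed until the constraint holds with equality; a nominal input pointing away from the obstacle is returned unchanged.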

Seeing the Future, Perceiving the Future: A Unified Driving World Model for Future Generation and Perception

Mar 17, 2025

Abstract:We present UniFuture, a simple yet effective driving world model that seamlessly integrates future scene generation and perception within a single framework. Unlike existing models focusing solely on pixel-level future prediction or geometric reasoning, our approach jointly models future appearance (i.e., RGB image) and geometry (i.e., depth), ensuring coherent predictions. Specifically, during training, we first introduce a Dual-Latent Sharing scheme, which transfers image and depth sequences into a shared latent space, allowing both modalities to benefit from shared feature learning. Additionally, we propose a Multi-scale Latent Interaction mechanism, which facilitates bidirectional refinement between image and depth features at multiple spatial scales, effectively enhancing geometry consistency and perceptual alignment. During testing, UniFuture can easily predict high-consistency future image-depth pairs using only the current image as input. Extensive experiments on the nuScenes dataset demonstrate that UniFuture outperforms specialized models on future generation and perception tasks, highlighting the advantages of a unified, structurally-aware world model. The project page is at https://github.com/dk-liang/UniFuture.

HisTrackMap: Global Vectorized High-Definition Map Construction via History Map Tracking

Mar 10, 2025

Abstract:As an essential component of autonomous driving systems, high-definition (HD) maps provide rich and precise environmental information for auto-driving scenarios; however, existing methods, which primarily rely on query-based detection frameworks to directly model map elements or implicitly propagate queries over time, often struggle to maintain consistent temporal perception outcomes. These inconsistencies pose significant challenges to the stability and reliability of real-world autonomous driving and map data collection systems. To address this limitation, we propose a novel end-to-end tracking framework for global map construction by temporally tracking map elements' historical trajectories. Firstly, an instance-level historical rasterization map representation is designed to explicitly store previous perception results, which can control and maintain different global instances' history information in a fine-grained way. Secondly, we introduce a Map-Trajectory Prior Fusion module within this tracking framework, leveraging historical priors for tracked instances to improve temporal smoothness and continuity. Thirdly, we propose a global perspective metric to evaluate the quality of temporal geometry construction in HD maps, filling the gap in current metrics for assessing global geometric perception results. Substantial experiments on the nuScenes and Argoverse2 datasets demonstrate that the proposed method outperforms state-of-the-art (SOTA) methods in both single-frame and temporal metrics. Our project page: https://yj772881654.github.io/HisTrackMap/

LDMapNet-U: An End-to-End System for City-Scale Lane-Level Map Updating

Jan 06, 2025

Abstract:An up-to-date city-scale lane-level map is an indispensable infrastructure and a key enabling technology for ensuring the safety and user experience of autonomous driving systems. In industrial scenarios, reliance on manual annotation for map updates creates a critical bottleneck. Lane-level updates require precise change information and must ensure consistency with adjacent data while adhering to strict standards. Traditional methods utilize a three-stage approach (construction, change detection, and updating), which often necessitates manual verification due to accuracy limitations. This results in labor-intensive processes and hampers timely updates. To address these challenges, we propose LDMapNet-U, which implements a new end-to-end paradigm for city-scale lane-level map updating. By reconceptualizing the update task as an end-to-end map generation process grounded in historical map data, we introduce a paradigm shift in map updating that simultaneously generates vectorized maps and change information. To achieve this, a Prior-Map Encoding (PME) module is introduced to effectively encode historical maps, serving as a critical reference for detecting changes. Additionally, we incorporate a novel Instance Change Prediction (ICP) module that learns to predict associations with historical maps. Consequently, LDMapNet-U simultaneously achieves vectorized map element generation and change detection. To demonstrate the superiority and effectiveness of LDMapNet-U, extensive experiments are conducted using large-scale real-world datasets. In addition, LDMapNet-U has been successfully deployed in production at Baidu Maps since April 2024, supporting map updating for over 360 cities and significantly shortening the update cycle from quarterly to weekly. The updated maps serve hundreds of millions of users and are integrated into the autonomous driving systems of several leading vehicle companies.

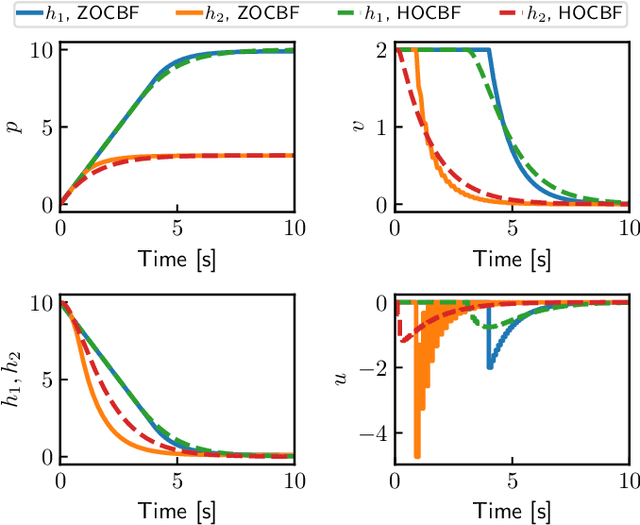

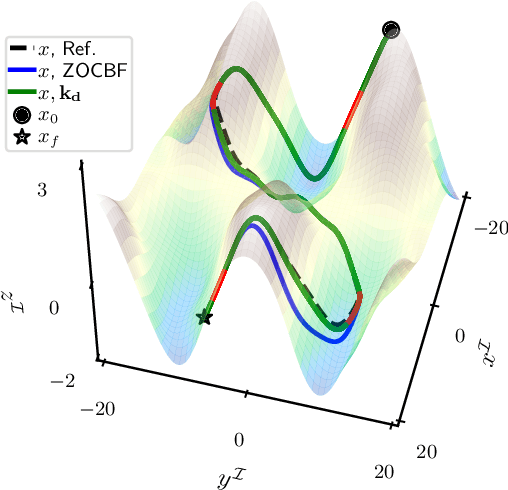

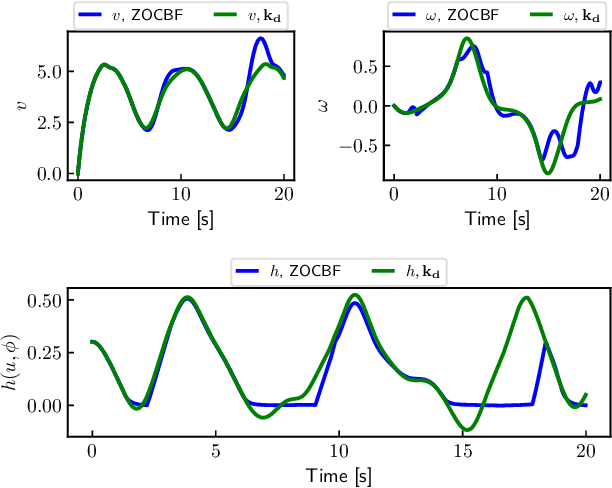

Zero-order Control Barrier Functions for Sampled-Data Systems with State and Input Dependent Safety Constraints

Nov 26, 2024

Abstract:We propose a novel zero-order control barrier function (ZOCBF) for sampled-data systems to ensure system safety. Our formulation generalizes conventional control barrier functions and straightforwardly handles safety constraints with high-relative degrees or those that explicitly depend on both system states and inputs. The proposed ZOCBF condition does not require any differentiation operation. Instead, it involves computing the difference of the ZOCBF values at two consecutive sampling instants. We propose three numerical approaches to enforce the ZOCBF condition, tailored to different problem settings and available computational resources. We demonstrate the effectiveness of our approach through a collision avoidance example and a rollover prevention example on uneven terrains.
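The idea of checking a difference of barrier values at consecutive sampling instants, rather than a derivative, can be sketched as follows. The abstract does not state the exact ZOCBF condition, so this sketch assumes a common discrete-time form, `h(x_next) - h(x_now) >= -gamma * h(x_now)` with `0 < gamma <= 1`. The double-integrator model, the position limit `p_max`, the candidate-enumeration strategy, and all names are hypothetical and are not taken from the paper's three numerical approaches.

```python
from typing import Optional, Sequence, Tuple

State = Tuple[float, float]  # (position, velocity)


def step(state: State, u: float, dt: float) -> State:
    """Exact zero-order-hold update of a double integrator over one sampling period."""
    p, v = state
    return (p + v * dt + 0.5 * u * dt * dt, v + u * dt)


def h(state: State, p_max: float = 10.0) -> float:
    """Barrier value: non-negative while the position stays below p_max."""
    return p_max - state[0]


def difference_filter(
    state: State, u_nom: float, dt: float, gamma: float, candidates: Sequence[float]
) -> Optional[float]:
    """Pick the candidate input closest to u_nom that satisfies the assumed
    difference condition; return None if no candidate satisfies it."""
    h_now = h(state)
    feasible = [
        u for u in candidates
        if h(step(state, u, dt)) - h_now >= -gamma * h_now
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda u: abs(u - u_nom))


# Hypothetical scenario: 1 m from the limit, moving toward it at 1 m/s.
u_safe = difference_filter(
    state=(9.0, 1.0), u_nom=2.0, dt=0.5, gamma=0.5,
    candidates=[-2.0, -1.0, 0.0, 1.0, 2.0],
)
```

The check uses only evaluations of `h` at the current and predicted states, so no derivative of `h` is computed. Enumerating a small candidate set is the simplest way to enforce such a condition; an optimization-based solver would be a natural alternative when the input space is large.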
