Mengmeng Yang

Tsinghua University

COME: Adding Scene-Centric Forecasting Control to Occupancy World Model

Jun 16, 2025

Abstract:World models are critical for autonomous driving to simulate environmental dynamics and generate synthetic data. Existing methods struggle to disentangle ego-vehicle motion (perspective shifts) from scene evolvement (agent interactions), leading to suboptimal predictions. Instead, we propose to separate environmental changes from ego-motion by leveraging the scene-centric coordinate systems. In this paper, we introduce COME: a framework that integrates scene-centric forecasting Control into the Occupancy world ModEl. Specifically, COME first generates ego-irrelevant, spatially consistent future features through a scene-centric prediction branch, which are then converted into scene condition using a tailored ControlNet. These condition features are subsequently injected into the occupancy world model, enabling more accurate and controllable future occupancy predictions. Experimental results on the nuScenes-Occ3D dataset show that COME achieves consistent and significant improvements over state-of-the-art (SOTA) methods across diverse configurations, including different input sources (ground-truth, camera-based, fusion-based occupancy) and prediction horizons (3s and 8s). For example, under the same settings, COME achieves 26.3% better mIoU metric than DOME and 23.7% better mIoU metric than UniScene. These results highlight the efficacy of disentangled representation learning in enhancing spatio-temporal prediction fidelity for world models. Code and videos will be available at https://github.com/synsin0/COME.

AgentThink: A Unified Framework for Tool-Augmented Chain-of-Thought Reasoning in Vision-Language Models for Autonomous Driving

May 21, 2025

Abstract:Vision-Language Models (VLMs) show promise for autonomous driving, yet their struggle with hallucinations, inefficient reasoning, and limited real-world validation hinders accurate perception and robust step-by-step reasoning. To overcome this, we introduce AgentThink, a pioneering unified framework that, for the first time, integrates Chain-of-Thought (CoT) reasoning with dynamic, agent-style tool invocation for autonomous driving tasks. AgentThink's core innovations include: (i) Structured Data Generation, by establishing an autonomous driving tool library to automatically construct structured, self-verified reasoning data explicitly incorporating tool usage for diverse driving scenarios; (ii) A Two-stage Training Pipeline, employing Supervised Fine-Tuning (SFT) with Group Relative Policy Optimization (GRPO) to equip VLMs with the capability for autonomous tool invocation; and (iii) Agent-style Tool-Usage Evaluation, introducing a novel multi-tool assessment protocol to rigorously evaluate the model's tool invocation and utilization. Experiments on the DriveLMM-o1 benchmark demonstrate AgentThink significantly boosts overall reasoning scores by 53.91% and enhances answer accuracy by 33.54%, while markedly improving reasoning quality and consistency. Furthermore, ablation studies and robust zero-shot/few-shot generalization experiments across various benchmarks underscore its powerful capabilities. These findings highlight a promising trajectory for developing trustworthy and tool-aware autonomous driving models.

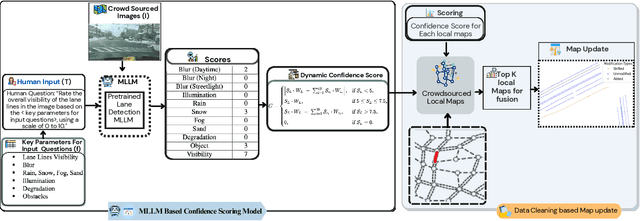

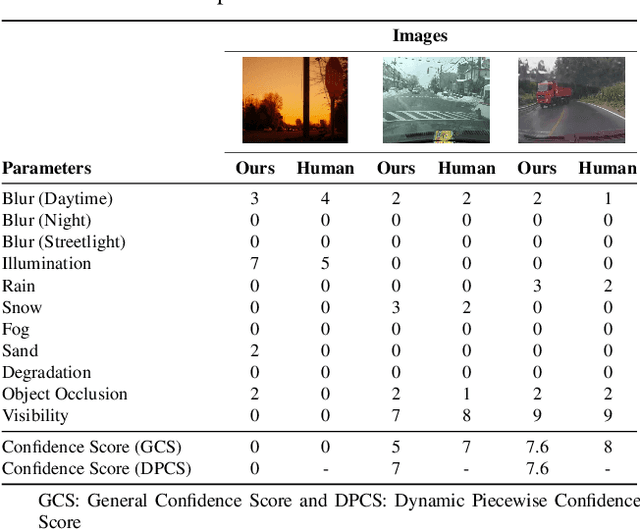

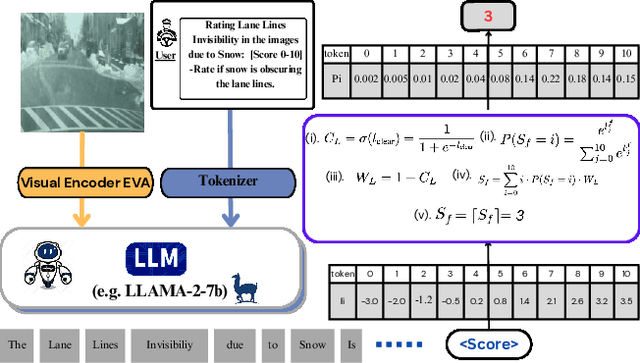

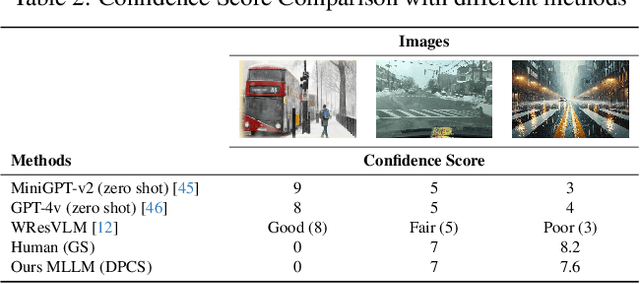

CleanMAP: Distilling Multimodal LLMs for Confidence-Driven Crowdsourced HD Map Updates

Apr 14, 2025

Abstract:The rapid growth of intelligent connected vehicles (ICVs) and integrated vehicle-road-cloud systems has increased the demand for accurate, real-time HD map updates. However, ensuring map reliability remains challenging due to inconsistencies in crowdsourced data, which suffer from motion blur, lighting variations, adverse weather, and lane marking degradation. This paper introduces CleanMAP, a Multimodal Large Language Model (MLLM)-based distillation framework designed to filter and refine crowdsourced data for high-confidence HD map updates. CleanMAP leverages an MLLM-driven lane visibility scoring model that systematically quantifies key visual parameters, assigning confidence scores (0-10) based on their impact on lane detection. A novel dynamic piecewise confidence-scoring function adapts scores based on lane visibility, ensuring strong alignment with human evaluations while effectively filtering unreliable data. To further optimize map accuracy, a confidence-driven local map fusion strategy ranks and selects the top-k highest-scoring local maps within an optimal confidence range (best score minus 10%), striking a balance between data quality and quantity. Experimental evaluations on a real-world autonomous vehicle dataset validate CleanMAP's effectiveness, demonstrating that fusing the top three local maps achieves the lowest mean map update error of 0.28m, outperforming the baseline (0.37m) and meeting stringent accuracy thresholds (<= 0.32m). Further validation with real-vehicle data confirms 84.88% alignment with human evaluators, reinforcing the model's robustness and reliability. This work establishes CleanMAP as a scalable and deployable solution for crowdsourced HD map updates, ensuring more precise and reliable autonomous navigation. The code will be available at https://Ankit-Zefan.github.io/CleanMap/
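The confidence-driven selection step described in the CleanMAP abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function name `select_local_maps`, the `(map_id, score)` tuple layout, and the reading of "best score minus 10%" as a relative margin are all assumptions, and the fusion of the selected maps is not shown.

```python
def select_local_maps(scored_maps, k=3, margin=0.10):
    """Pick up to k highest-scoring local maps within `margin` of the best score.

    scored_maps: list of (map_id, confidence) pairs, confidence on a 0-10 scale.
    margin: assumed to be relative, i.e. a map qualifies if its score is at
            least best * (1 - margin). The abstract does not spell this out.
    """
    if not scored_maps:
        return []
    best = max(score for _, score in scored_maps)
    floor = best * (1.0 - margin)
    # Keep only maps inside the confidence range, then rank by score.
    eligible = [(m, s) for m, s in scored_maps if s >= floor]
    eligible.sort(key=lambda pair: pair[1], reverse=True)
    return eligible[:k]


# Hypothetical scores for five crowdsourced local maps.
candidates = [("map_a", 9.2), ("map_b", 8.9), ("map_c", 8.5),
              ("map_d", 7.0), ("map_e", 8.3)]
selected = select_local_maps(candidates)
print([map_id for map_id, _ in selected])
```

With `k=3`, the default here, the sketch mirrors the "top three local maps" configuration that the abstract reports as giving the lowest mean update error.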

POD: Predictive Object Detection with Single-Frame FMCW LiDAR Point Cloud

Apr 08, 2025

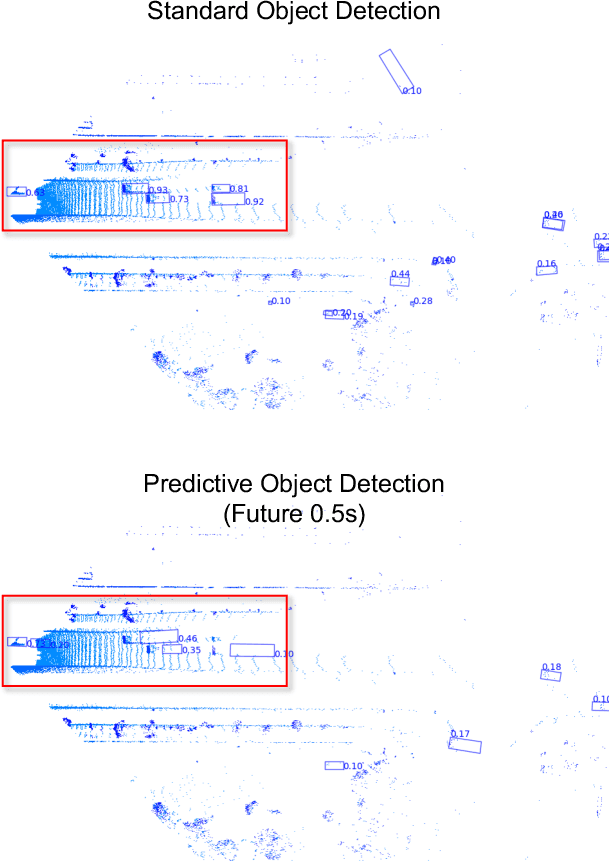

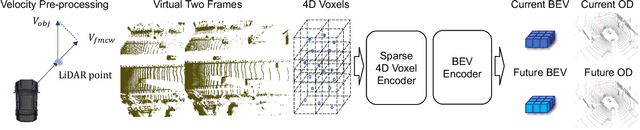

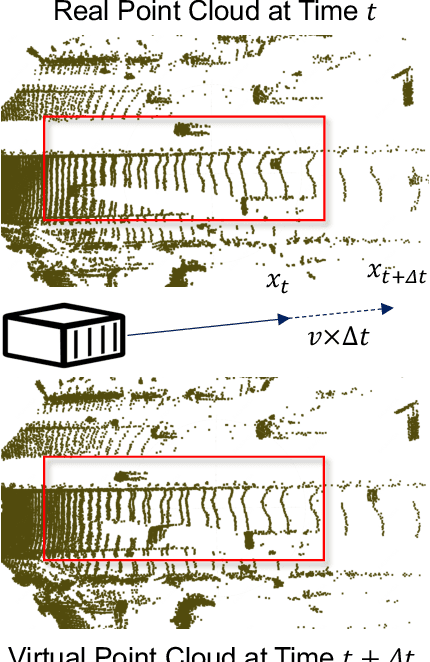

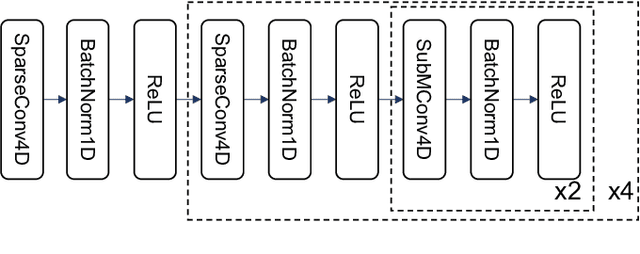

Abstract:LiDAR-based 3D object detection is a fundamental task in the field of autonomous driving. This paper explores the unique advantage of Frequency Modulated Continuous Wave (FMCW) LiDAR in autonomous perception. Given a single frame FMCW point cloud with radial velocity measurements, we expect that our object detector can detect the short-term future locations of objects using only the current frame sensor data and demonstrate a fast ability to respond to intermediate danger. To achieve this, we extend the standard object detection task to a novel task named predictive object detection (POD), which aims to predict the short-term future location and dimensions of objects based solely on current observations. Typically, a motion prediction task requires historical sensor information to process the temporal contexts of each object, while our detector's avoidance of multi-frame historical information enables a much faster response time to potential dangers. The core advantage of FMCW LiDAR lies in the radial velocity associated with every reflected point. We propose a novel POD framework, the core idea of which is to generate a virtual future point using a ray casting mechanism, create virtual two-frame point clouds with the current and virtual future frames, and encode these two-frame voxel features with a sparse 4D encoder. Subsequently, the 4D voxel features are separated by temporal indices and remapped into two Bird's Eye View (BEV) features: one decoded for standard current frame object detection and the other for future predictive object detection. Extensive experiments on our in-house dataset demonstrate the state-of-the-art standard and predictive detection performance of the proposed POD framework.
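The idea of building a virtual two-frame point cloud from a single FMCW frame can be sketched as below. This is a simplified illustration under stated assumptions, not the paper's ray casting mechanism: each point is simply moved along its own sensor ray by `radial_velocity * dt`, positive radial velocity is assumed to mean motion away from the sensor, and the helper names and the `(x, y, z, t)` layout are hypothetical.

```python
import math


def virtual_future_point(point, radial_velocity, dt):
    """Shift a point along its sensor ray by radial_velocity * dt.

    point: (x, y, z) in the sensor frame, sensor at the origin.
    radial_velocity: metres per second, assumed positive when moving away.
    """
    x, y, z = point
    rng = math.sqrt(x * x + y * y + z * z)
    if rng == 0.0:
        return point  # direction undefined at the sensor origin
    scale = (rng + radial_velocity * dt) / rng
    return (x * scale, y * scale, z * scale)


def two_frame_cloud(points, radial_velocities, dt):
    """Stack current points (t=0) and virtual future points (t=1)."""
    current = [(x, y, z, 0) for x, y, z in points]
    future = [virtual_future_point(p, v, dt) + (1,)
              for p, v in zip(points, radial_velocities)]
    return current + future


cloud = two_frame_cloud([(3.0, 4.0, 0.0)], [2.0], dt=0.5)
print(cloud)
```

The temporal index in the fourth coordinate is what would let a 4D encoder separate the two frames again afterwards, as the abstract describes for the two BEV features.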

FASIONAD++ : Integrating High-Level Instruction and Information Bottleneck in FAt-Slow fusION Systems for Enhanced Safety in Autonomous Driving with Adaptive Feedback

Mar 11, 2025

Abstract:Ensuring safe, comfortable, and efficient planning is crucial for autonomous driving systems. While end-to-end models trained on large datasets perform well in standard driving scenarios, they struggle with complex low-frequency events. Recent advancements in Large Language Models (LLMs) and Vision Language Models (VLMs) offer enhanced reasoning but suffer from computational inefficiency. Inspired by the dual-process cognitive model "Thinking, Fast and Slow", we propose FASIONAD, a novel dual-system framework that synergizes a fast end-to-end planner with a VLM-based reasoning module. The fast system leverages end-to-end learning to achieve real-time trajectory generation in common scenarios, while the slow system activates through uncertainty estimation to perform contextual analysis and complex scenario resolution. Our architecture introduces three key innovations: (1) A dynamic switching mechanism enabling slow system intervention based on real-time uncertainty assessment; (2) An information bottleneck with high-level plan feedback that optimizes the slow system's guidance capability; (3) A bidirectional knowledge exchange where visual prompts enhance the slow system's reasoning while its feedback refines the fast planner's decision-making. To strengthen VLM reasoning, we develop a question-answering mechanism coupled with a reward-instruct training strategy. In open-loop experiments, FASIONAD achieves a 6.7% reduction in average L2 trajectory error and a 28.1% lower collision rate.
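The dynamic switching idea in the FASIONAD abstract, where the slow system steps in only when the fast planner is uncertain, might look roughly like this. Everything here is a hypothetical sketch: the `plan` function, the fixed `threshold`, and the toy planner and reasoner are stand-ins, and the abstract does not specify how uncertainty is estimated or how guidance is fed back.

```python
def plan(observation, fast_planner, slow_reasoner, threshold=0.5):
    """Use the fast planner alone unless its uncertainty exceeds the threshold."""
    trajectory, uncertainty = fast_planner(observation)
    if uncertainty <= threshold:
        return trajectory, "fast"
    # Slow path: ask the reasoning module for high-level guidance,
    # then let the fast planner replan with that guidance.
    guidance = slow_reasoner(observation)
    refined, _ = fast_planner(observation, guidance)
    return refined, "slow"


# Toy stand-ins (assumptions, not real models).
def toy_fast_planner(observation, guidance=None):
    speed = 10.0 if guidance is None else guidance["target_speed"]
    trajectory = [speed * t for t in (1, 2, 3)]  # 1-D positions over time
    return trajectory, observation["clutter"]    # clutter doubles as uncertainty


def toy_slow_reasoner(observation):
    return {"target_speed": 4.0}  # e.g. "slow down in this scene"


print(plan({"clutter": 0.2}, toy_fast_planner, toy_slow_reasoner))
print(plan({"clutter": 0.9}, toy_fast_planner, toy_slow_reasoner))
```

Gating the expensive reasoning call behind an uncertainty check is what keeps the common case real-time in a design like this.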

LEGO-Motion: Learning-Enhanced Grids with Occupancy Instance Modeling for Class-Agnostic Motion Prediction

Mar 10, 2025

Abstract:Accurate and reliable spatial and motion information plays a pivotal role in autonomous driving systems. However, object-level perception models struggle with handling open scenario categories and lack precise intrinsic geometry. On the other hand, occupancy-based class-agnostic methods excel in representing scenes but fail to ensure physics consistency and ignore the importance of interactions between traffic participants, hindering the model's ability to learn accurate and reliable motion. In this paper, we introduce a novel occupancy-instance modeling framework for class-agnostic motion prediction tasks, named LEGO-Motion, which incorporates instance features into Bird's Eye View (BEV) space. Our model comprises (1) a BEV encoder, (2) an Interaction-Augmented Instance Encoder, and (3) an Instance-Enhanced BEV Encoder, improving both interaction relationships and physics consistency within the model, thereby ensuring a more accurate and robust understanding of the environment. Extensive experiments on the nuScenes dataset demonstrate that our method achieves state-of-the-art performance, outperforming existing approaches. Furthermore, the effectiveness of our framework is validated on the advanced FMCW LiDAR benchmark, showcasing its practical applicability and generalization capabilities. The code will be made publicly available to facilitate further research.

Advancing Autonomous Vehicle Intelligence: Deep Learning and Multimodal LLM for Traffic Sign Recognition and Robust Lane Detection

Mar 08, 2025

Abstract:Autonomous vehicles (AVs) require reliable traffic sign recognition and robust lane detection capabilities to ensure safe navigation in complex and dynamic environments. This paper introduces an integrated approach combining advanced deep learning techniques and Multimodal Large Language Models (MLLMs) for comprehensive road perception. For traffic sign recognition, we systematically evaluate ResNet-50, YOLOv8, and RT-DETR, achieving state-of-the-art accuracy of 99.8% with ResNet-50, 98.0% with YOLOv8, and 96.6% with RT-DETR despite the latter's higher computational complexity. For lane detection, we propose a CNN-based segmentation method enhanced by polynomial curve fitting, which delivers high accuracy under favorable conditions. Furthermore, we introduce a lightweight, multimodal, LLM-based framework that directly undergoes instruction tuning using small yet diverse datasets, eliminating the need for initial pretraining. This framework effectively handles various lane types, complex intersections, and merging zones, significantly enhancing lane detection reliability by reasoning under adverse conditions. Despite constraints in available training resources, our multimodal approach demonstrates advanced reasoning capabilities, achieving a Frame Overall Accuracy (FRM) of 53.87%, a Question Overall Accuracy (QNS) of 82.83%, lane detection accuracies of 99.6% in clear conditions and 93.0% at night, and robust performance in reasoning about lane invisibility due to rain (88.4%) or road degradation (95.6%). The proposed comprehensive framework markedly enhances AV perception reliability, thus contributing significantly to safer autonomous driving across diverse and challenging road scenarios.

Residual Learning towards High-fidelity Vehicle Dynamics Modeling with Transformer

Feb 17, 2025

Abstract:The vehicle dynamics model serves as a vital component of autonomous driving systems, as it describes the temporal changes in vehicle state. For a long time, researchers have made significant efforts to model vehicle dynamics accurately. Traditional physics-based methods employ mathematical formulae to model vehicle dynamics, but they are unable to adequately describe complex vehicle systems due to the simplifications they entail. Recent advancements in deep learning-based methods have addressed this limitation by directly regressing vehicle dynamics. However, their performance and generalization capabilities still require further enhancement. In this letter, we address these problems by proposing a vehicle dynamics correction system that leverages deep neural networks to correct the state residuals of a physical model instead of directly estimating the states. This system greatly reduces the difficulty of network learning and thus improves the estimation accuracy of vehicle dynamics. Furthermore, we have developed a novel Transformer-based dynamics residual correction network, DyTR. This network implicitly represents state residuals as high-dimensional queries, and iteratively updates the estimated residuals by interacting with dynamics state features. Simulation experiments demonstrate that the proposed system works much better than the physics model, and our proposed DyTR model achieves the best performance on the dynamics state residual correction task, reducing the state prediction errors of a simple 3 DoF vehicle model by an average of 92.3% and 59.9% on two datasets, respectively.
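The residual-correction idea, where a learned model predicts only the error of a physics model rather than the full next state, can be illustrated with a small sketch. The kinematic bicycle step below is an assumed stand-in for the physical model (it is not the 3 DoF model from the paper), and `residual_model` is a hypothetical callable standing in for a trained network such as DyTR.

```python
import math


def physics_step(state, control, dt=0.1, wheelbase=2.7):
    """One step of a kinematic bicycle model (illustrative physics prior)."""
    x, y, yaw, v = state
    accel, steer = control
    return (
        x + v * math.cos(yaw) * dt,
        y + v * math.sin(yaw) * dt,
        yaw + v / wheelbase * math.tan(steer) * dt,
        v + accel * dt,
    )


def corrected_step(state, control, residual_model, dt=0.1):
    """Physics prediction plus a learned residual, instead of direct regression."""
    nominal = physics_step(state, control, dt)
    residual = residual_model(state, control)  # same shape as the state
    return tuple(n + r for n, r in zip(nominal, residual))


# Hypothetical constant residual standing in for a trained network's output.
def toy_residual_model(state, control):
    return (0.01, 0.0, 0.0, -0.05)


next_state = corrected_step((0.0, 0.0, 0.0, 10.0), (1.0, 0.0), toy_residual_model)
print(next_state)
```

Because the physics step already captures most of the motion, the learned part only has to fit a small correction, which is the reason the abstract gives for the reduced learning difficulty.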

LDMapNet-U: An End-to-End System for City-Scale Lane-Level Map Updating

Jan 06, 2025

Abstract:An up-to-date city-scale lane-level map is an indispensable infrastructure and a key enabling technology for ensuring the safety and user experience of autonomous driving systems. In industrial scenarios, reliance on manual annotation for map updates creates a critical bottleneck. Lane-level updates require precise change information and must ensure consistency with adjacent data while adhering to strict standards. Traditional methods utilize a three-stage approach (construction, change detection, and updating), which often necessitates manual verification due to accuracy limitations. This results in labor-intensive processes and hampers timely updates. To address these challenges, we propose LDMapNet-U, which implements a new end-to-end paradigm for city-scale lane-level map updating. By reconceptualizing the update task as an end-to-end map generation process grounded in historical map data, we introduce a paradigm shift in map updating that simultaneously generates vectorized maps and change information. To achieve this, a Prior-Map Encoding (PME) module is introduced to effectively encode historical maps, serving as a critical reference for detecting changes. Additionally, we incorporate a novel Instance Change Prediction (ICP) module that learns to predict associations with historical maps. Consequently, LDMapNet-U simultaneously achieves vectorized map element generation and change detection. To demonstrate the superiority and effectiveness of LDMapNet-U, extensive experiments are conducted using large-scale real-world datasets. In addition, LDMapNet-U has been successfully deployed in production at Baidu Maps since April 2024, supporting map updating for over 360 cities and significantly shortening the update cycle from quarterly to weekly. The updated maps serve hundreds of millions of users and are integrated into the autonomous driving systems of several leading vehicle companies.

Enhancing Lane Segment Perception and Topology Reasoning with Crowdsourcing Trajectory Priors

Nov 26, 2024

Abstract:In autonomous driving, recent advances in lane segment perception provide autonomous vehicles with a comprehensive understanding of driving scenarios. Moreover, incorporating prior information into such perception models is an effective way to ensure robustness and accuracy. However, utilizing diverse sources of prior information still faces three key challenges: the acquisition of high-quality prior information, alignment between prior and online perception, and efficient integration. To address these issues, we investigate prior augmentation from a novel perspective of trajectory priors. In this paper, we first extract crowdsourcing trajectory data from the Argoverse2 motion forecasting dataset and encode the trajectory data into rasterized heatmaps and vectorized instance tokens; we then incorporate this prior information into the online mapping model in different ways. In addition, to mitigate the misalignment between prior and online perception, we design a confidence-based fusion module that takes alignment into account during the fusion process. We conduct extensive experiments on the OpenLane-V2 dataset. The results indicate that our method significantly outperforms the current state-of-the-art methods.
