Sicong Jiang

AgentThink: A Unified Framework for Tool-Augmented Chain-of-Thought Reasoning in Vision-Language Models for Autonomous Driving

May 21, 2025

Abstract:Vision-Language Models (VLMs) show promise for autonomous driving, yet their struggle with hallucinations, inefficient reasoning, and limited real-world validation hinders accurate perception and robust step-by-step reasoning. To overcome this, we introduce \textbf{AgentThink}, a pioneering unified framework that, for the first time, integrates Chain-of-Thought (CoT) reasoning with dynamic, agent-style tool invocation for autonomous driving tasks. AgentThink's core innovations include: \textbf{(i) Structured Data Generation}, by establishing an autonomous driving tool library to automatically construct structured, self-verified reasoning data explicitly incorporating tool usage for diverse driving scenarios; \textbf{(ii) A Two-stage Training Pipeline}, employing Supervised Fine-Tuning (SFT) with Group Relative Policy Optimization (GRPO) to equip VLMs with the capability for autonomous tool invocation; and \textbf{(iii) Agent-style Tool-Usage Evaluation}, introducing a novel multi-tool assessment protocol to rigorously evaluate the model's tool invocation and utilization. Experiments on the DriveLMM-o1 benchmark demonstrate AgentThink significantly boosts overall reasoning scores by \textbf{53.91\%} and enhances answer accuracy by \textbf{33.54\%}, while markedly improving reasoning quality and consistency. Furthermore, ablation studies and robust zero-shot/few-shot generalization experiments across various benchmarks underscore its powerful capabilities. These findings highlight a promising trajectory for developing trustworthy and tool-aware autonomous driving models.

FASIONAD++ : Integrating High-Level Instruction and Information Bottleneck in FAt-Slow fusION Systems for Enhanced Safety in Autonomous Driving with Adaptive Feedback

Mar 11, 2025

Abstract:Ensuring safe, comfortable, and efficient planning is crucial for autonomous driving systems. While end-to-end models trained on large datasets perform well in standard driving scenarios, they struggle with complex low-frequency events. Recent Large Language Models (LLMs) and Vision Language Models (VLMs) advancements offer enhanced reasoning but suffer from computational inefficiency. Inspired by the dual-process cognitive model "Thinking, Fast and Slow", we propose $\textbf{FASIONAD}$ -- a novel dual-system framework that synergizes a fast end-to-end planner with a VLM-based reasoning module. The fast system leverages end-to-end learning to achieve real-time trajectory generation in common scenarios, while the slow system activates through uncertainty estimation to perform contextual analysis and complex scenario resolution. Our architecture introduces three key innovations: (1) A dynamic switching mechanism enabling slow system intervention based on real-time uncertainty assessment; (2) An information bottleneck with high-level plan feedback that optimizes the slow system's guidance capability; (3) A bidirectional knowledge exchange where visual prompts enhance the slow system's reasoning while its feedback refines the fast planner's decision-making. To strengthen VLM reasoning, we develop a question-answering mechanism coupled with reward-instruct training strategy. In open-loop experiments, FASIONAD achieves a $6.7\%$ reduction in average $L2$ trajectory error and $28.1\%$ lower collision rate.

Adversarial Vulnerabilities in Large Language Models for Time Series Forecasting

Dec 11, 2024

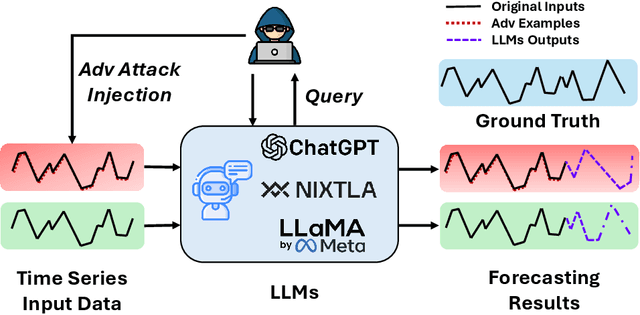

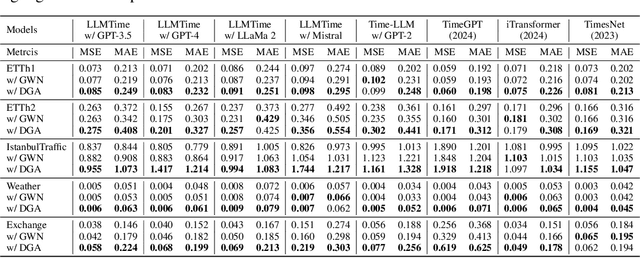

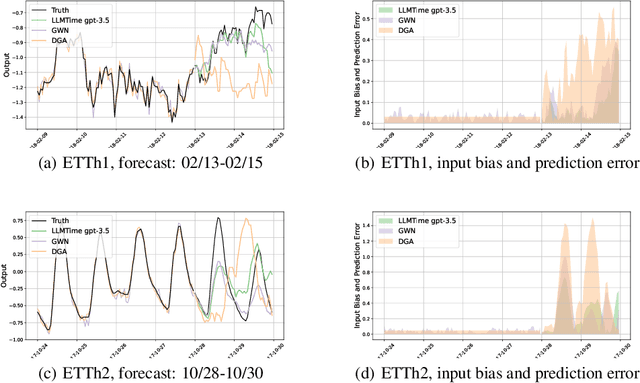

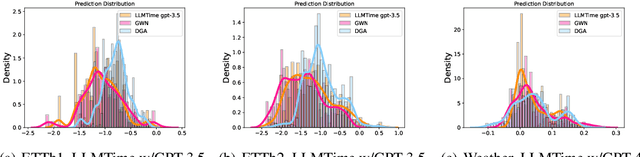

Abstract:Large Language Models (LLMs) have recently demonstrated significant potential in the field of time series forecasting, offering impressive capabilities in handling complex temporal data. However, their robustness and reliability in real-world applications remain under-explored, particularly concerning their susceptibility to adversarial attacks. In this paper, we introduce a targeted adversarial attack framework for LLM-based time series forecasting. By employing both gradient-free and black-box optimization methods, we generate minimal yet highly effective perturbations that significantly degrade the forecasting accuracy across multiple datasets and LLM architectures. Our experiments, which include models like TimeGPT and LLM-Time with GPT-3.5, GPT-4, LLaMa, and Mistral, show that adversarial attacks lead to much more severe performance degradation than random noise, and demonstrate the broad effectiveness of our attacks across different LLMs. The results underscore the critical vulnerabilities of LLMs in time series forecasting, highlighting the need for robust defense mechanisms to ensure their reliable deployment in practical applications.

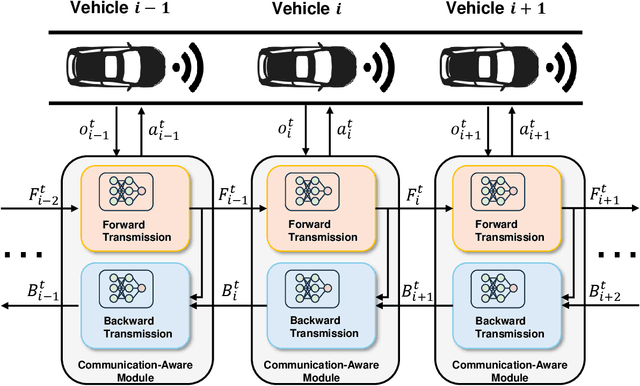

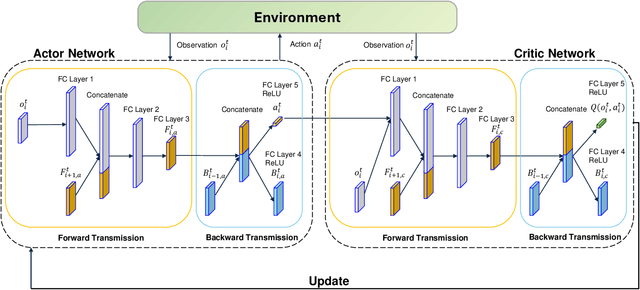

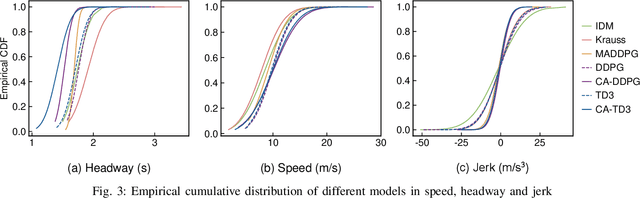

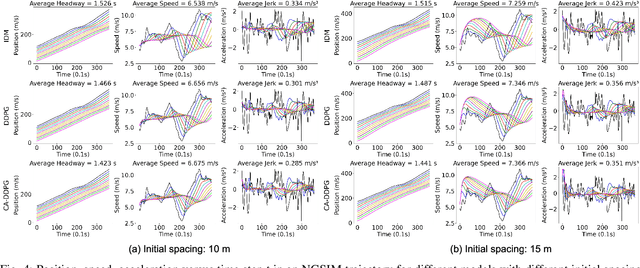

Communication-Aware Reinforcement Learning for Cooperative Adaptive Cruise Control

Jul 12, 2024

Abstract:Cooperative Adaptive Cruise Control (CACC) plays a pivotal role in enhancing traffic efficiency and safety in Connected and Autonomous Vehicles (CAVs). Reinforcement Learning (RL) has proven effective in optimizing complex decision-making processes in CACC, leading to improved system performance and adaptability. Among RL approaches, Multi-Agent Reinforcement Learning (MARL) has shown remarkable potential by enabling coordinated actions among multiple CAVs through Centralized Training with Decentralized Execution (CTDE). However, MARL often faces scalability issues, particularly when CACC vehicles suddenly join or leave the platoon, resulting in performance degradation. To address these challenges, we propose Communication-Aware Reinforcement Learning (CA-RL). CA-RL includes a communication-aware module that extracts and compresses vehicle communication information through forward and backward information transmission modules. This enables efficient cyclic information propagation within the CACC traffic flow, ensuring policy consistency and mitigating the scalability problems of MARL in CACC. Experimental results demonstrate that CA-RL significantly outperforms baseline methods in various traffic scenarios, achieving superior scalability, robustness, and overall system performance while maintaining reliable performance despite changes in the number of participating vehicles.

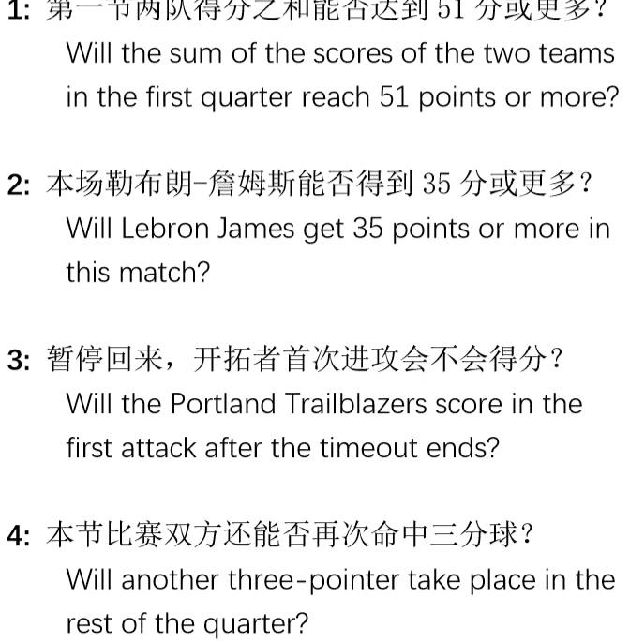

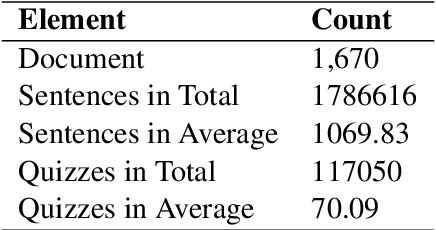

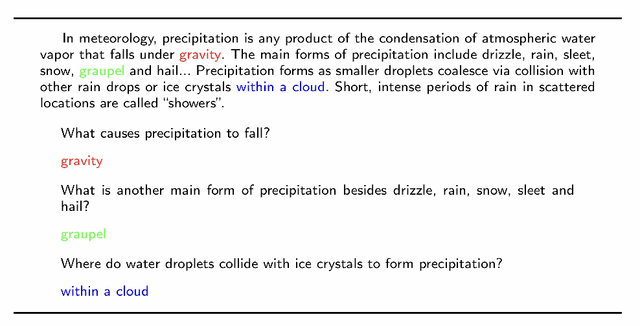

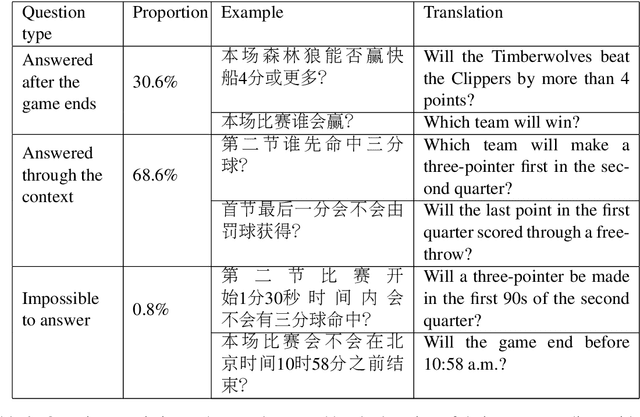

LiveQA: A Question Answering Dataset over Sports Live

Oct 01, 2020

Abstract:In this paper, we introduce LiveQA, a new question answering dataset constructed from play-by-play live broadcast. It contains 117k multiple-choice questions written by human commentators for over 1,670 NBA games, which are collected from the Chinese Hupu (https://nba.hupu.com/games) website. Derived from the characteristics of sports games, LiveQA can potentially test the reasoning ability across timeline-based live broadcasts, which is challenging compared to the existing datasets. In LiveQA, the questions require understanding the timeline, tracking events or doing mathematical computations. Our preliminary experiments show that the dataset introduces a challenging problem for question answering models, and a strong baseline model only achieves the accuracy of 53.1\% and cannot beat the dominant option rule. We release the code and data of this paper for future research.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge