Qianying Liu

Memorization, Emergence, and Explaining Reversal Failures: A Controlled Study of Relational Semantics in LLMs

Jan 06, 2026

Abstract:Autoregressive LLMs perform well on relational tasks that require linking entities via relational words (e.g., father/son, friend), but it is unclear whether they learn the logical semantics of such relations (e.g., symmetry and inversion logic) and, if so, whether reversal-type failures arise from missing relational semantics or left-to-right order bias. We propose a controlled Knowledge Graph-based synthetic framework that generates text from symmetric/inverse triples, train GPT-style autoregressive models from scratch, and evaluate memorization, logical inference, and in-context generalization to unseen entities to address these questions. We find a sharp phase transition in which relational semantics emerge with sufficient logic-bearing supervision, even in shallow (2-3 layer) models, and that successful generalization aligns with stable intermediate-layer signals. Finally, order-matched forward/reverse tests and a diffusion baseline indicate that reversal failures are primarily driven by autoregressive order bias rather than deficient inversion semantics.
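As a rough illustration of what generating text from symmetric/inverse triples can look like, the sketch below derives the logically entailed reverse triple and renders both directions as sentences. The relation names, the `INVERSE` and `SYMMETRIC` tables, and the sentence template are hypothetical and are not taken from the paper.

```python
# Hypothetical relation inventory; the paper's actual relations and templates may differ.
INVERSE = {"father_of": "son_of", "son_of": "father_of"}
SYMMETRIC = {"friend_of"}


def entailed_triple(head, relation, tail):
    """Return the triple entailed by reading (head, relation, tail) in reverse."""
    if relation in SYMMETRIC:
        # Symmetric relation: the same relation holds with the arguments swapped.
        return (tail, relation, head)
    if relation in INVERSE:
        # Inverse pair: swap the arguments and switch to the paired relation.
        return (tail, INVERSE[relation], head)
    raise ValueError(f"no symmetry or inverse rule for {relation!r}")


def verbalize(triple):
    """Render a triple with a single fixed template (an assumption of this sketch)."""
    head, relation, tail = triple
    return f"{head} is the {relation.replace('_', ' ')} {tail}."


forward = ("Adam", "father_of", "Ben")
reverse = entailed_triple(*forward)
print(verbalize(forward))
print(verbalize(reverse))
```

In a setup like this, a model trained only on the forward sentences can be probed on the reverse ones to check whether it has picked up the inversion rule rather than memorized surface strings.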

A Convolutional Neural Deferred Shader for Physics Based Rendering

Dec 22, 2025

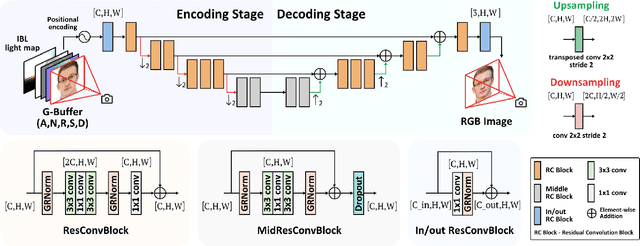

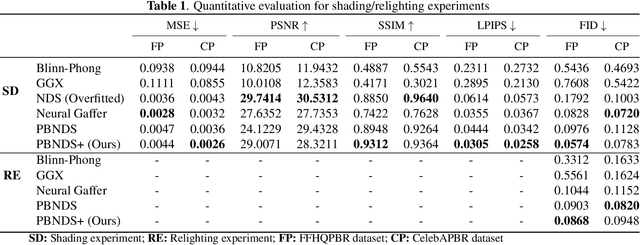

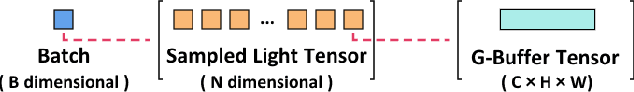

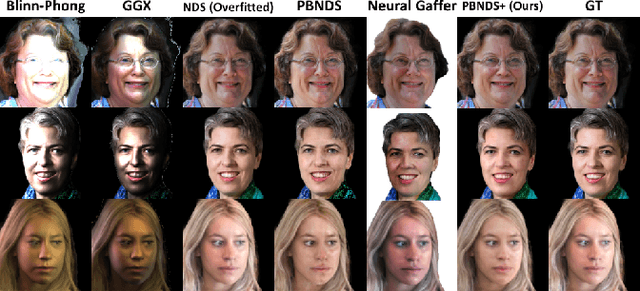

Abstract:Recent advances in neural rendering have achieved impressive results on photorealistic shading and relighting by using a multilayer perceptron (MLP) as a regression model to learn the rendering equation from a real-world dataset. Such methods show promise for photorealistically relighting real-world objects, which is difficult for classical rendering because material ground truth is not easily obtained. However, significant challenges remain: the dense connections in MLPs result in a large number of parameters, which demands substantial computational resources, complicates training, and reduces rendering performance. Data-driven approaches also require large amounts of training data to generalize, and unbalanced data might bias the model to ignore unusual illumination conditions, e.g., dark scenes. This paper introduces pbnds+, a novel physics-based neural deferred shading pipeline that uses convolutional neural networks to reduce the number of parameters and improve performance in shading and relighting tasks. Energy regularization is also proposed to restrict the model's reflection under dark illumination. Extensive experiments demonstrate that our approach outperforms classical baselines, a state-of-the-art neural shading model, and a diffusion-based method.

Language Lives in Sparse Dimensions: Toward Interpretable and Efficient Multilingual Control for Large Language Models

Oct 08, 2025

Abstract:Large language models exhibit strong multilingual capabilities despite limited exposure to non-English data. Prior studies show that English-centric large language models map multilingual content into English-aligned representations at intermediate layers and then project them back into target-language token spaces in the final layer. From this observation, we hypothesize that this cross-lingual transition is governed by a small and sparse set of dimensions, which occur at consistent indices across the intermediate to final layers. Building on this insight, we introduce a simple, training-free method to identify and manipulate these dimensions, requiring as few as 50 sentences of either parallel or monolingual data. Experiments on a multilingual generation control task reveal the interpretability of these dimensions, demonstrating that interventions in these dimensions can switch the output language while preserving semantic content, and that the method surpasses the performance of prior neuron-based approaches at a substantially lower cost.

Credit Risk Analysis for SMEs Using Graph Neural Networks in Supply Chain

Jul 10, 2025

Abstract:Small and Medium-sized Enterprises (SMEs) are vital to the modern economy, yet their credit risk analysis often struggles with scarce data, especially for online lenders lacking direct credit records. This paper introduces a Graph Neural Network (GNN)-based framework, leveraging SME interactions from transaction and social data to map spatial dependencies and predict loan default risks. Tests on real-world datasets from Discover and Ant Credit (23.4M nodes for supply chain analysis, 8.6M for default prediction) show the GNN surpasses traditional and other GNN baselines, with AUCs of 0.995 and 0.701 for supply chain mining and default prediction, respectively. It also helps regulators model supply chain disruption impacts on banks, accurately forecasting loan defaults from material shortages, and offers Federal Reserve stress testers key data for CCAR risk buffers. This approach provides a scalable, effective tool for assessing SME credit risk.

BIS Reasoning 1.0: The First Large-Scale Japanese Benchmark for Belief-Inconsistent Syllogistic Reasoning

Jun 08, 2025

Abstract:We present BIS Reasoning 1.0, the first large-scale Japanese dataset of syllogistic reasoning problems explicitly designed to evaluate belief-inconsistent reasoning in large language models (LLMs). Unlike prior datasets such as NeuBAROCO and JFLD, which focus on general or belief-aligned reasoning, BIS Reasoning 1.0 introduces logically valid yet belief-inconsistent syllogisms to uncover reasoning biases in LLMs trained on human-aligned corpora. We benchmark state-of-the-art models - including GPT models, Claude models, and leading Japanese LLMs - revealing significant variance in performance, with GPT-4o achieving 79.54% accuracy. Our analysis identifies critical weaknesses in current LLMs when handling logically valid but belief-conflicting inputs. These findings have important implications for deploying LLMs in high-stakes domains such as law, healthcare, and scientific literature, where truth must override intuitive belief to ensure integrity and safety.

Beyond Chains: Bridging Large Language Models and Knowledge Bases in Complex Question Answering

May 20, 2025

Abstract:Knowledge Base Question Answering (KBQA) aims to answer natural language questions using structured knowledge from KBs. While LLM-only approaches offer generalization, they suffer from outdated knowledge, hallucinations, and lack of transparency. Chain-based KG-RAG methods address these issues by incorporating external KBs, but are limited to simple chain-structured questions due to the absence of planning and logical structuring. Inspired by semantic parsing methods, we propose PDRR: a four-stage framework consisting of Predict, Decompose, Retrieve, and Reason. Our method first predicts the question type and decomposes the question into structured triples. It then retrieves relevant information from KBs and guides the LLM as an agent to reason over and complete the decomposed triples. Experimental results demonstrate that PDRR consistently outperforms existing methods across various LLM backbones and achieves superior performance on both chain-structured and non-chain complex questions.

Assessing Large Language Models in Agentic Multilingual National Bias

Feb 25, 2025

Abstract:Large Language Models have garnered significant attention for their capabilities in multilingual natural language processing, while studies on risks associated with cross biases are limited to immediate context preferences. Cross-language disparities in reasoning-based recommendations remain largely unexplored, with a lack of even descriptive analysis. This study is the first to address this gap. We test LLMs' applicability and capability in providing personalized advice across three key scenarios: university applications, travel, and relocation. We investigate multilingual bias in state-of-the-art LLMs by analyzing their responses to decision-making tasks across multiple languages. We quantify bias in model-generated scores and assess the impact of demographic factors and reasoning strategies (e.g., Chain-of-Thought prompting) on bias patterns. Our findings reveal that local language bias is prevalent across different tasks, with GPT-4 and Sonnet reducing bias for English-speaking countries compared to GPT-3.5 but failing to achieve robust multilingual alignment, highlighting broader implications for multilingual AI agents and applications such as education.

Cross-lingual Embedding Clustering for Hierarchical Softmax in Low-Resource Multilingual Speech Recognition

Jan 29, 2025

Abstract:We present a novel approach centered on the decoding stage of Automatic Speech Recognition (ASR) that enhances multilingual performance, especially for low-resource languages. It utilizes a cross-lingual embedding clustering method to construct a hierarchical Softmax (H-Softmax) decoder, which enables similar tokens across different languages to share similar decoder representations. It addresses the limitations of the previous Huffman-based H-Softmax method, which relied on shallow features in token similarity assessments. Through experiments on a downsampled dataset of 15 languages, we demonstrate the effectiveness of our approach in improving low-resource multilingual ASR accuracy.
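To make the decoder idea concrete, here is a minimal sketch of a two-level hierarchical softmax, in which a token's probability is the product of its cluster's probability and its probability within that cluster. The two-level depth, the example clusters, and all scores are assumptions for illustration; the paper builds its clusters from cross-lingual embeddings, and its tree may be structured differently.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def h_softmax_probs(cluster_scores, token_scores, clusters):
    """Two-level H-Softmax: P(token) = P(cluster) * P(token | cluster).

    clusters[i] lists the tokens in cluster i; token_scores[i] holds the
    within-cluster scores for those tokens, in the same order.
    """
    cluster_probs = softmax(cluster_scores)
    probs = {}
    for p_cluster, tokens, scores in zip(cluster_probs, clusters, token_scores):
        for token, p_token in zip(tokens, softmax(scores)):
            probs[token] = p_cluster * p_token
    return probs


# Hypothetical clusters that group similar tokens from different languages.
clusters = [["cat", "gato"], ["dog", "perro"]]
probs = h_softmax_probs([0.0, 0.0], [[0.0, 0.0], [0.0, 0.0]], clusters)
print(probs)
```

Because each level is normalized separately, the token probabilities still sum to one, while tokens in the same cluster share the cluster-level score.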

Credit Risk Identification in Supply Chains Using Generative Adversarial Networks

Jan 17, 2025

Abstract:Credit risk management within supply chains has emerged as a critical research area due to its significant implications for operational stability and financial sustainability. The intricate interdependencies among supply chain participants mean that credit risks can propagate across networks, with impacts varying by industry. This study explores the application of Generative Adversarial Networks (GANs) to enhance credit risk identification in supply chains. GANs enable the generation of synthetic credit risk scenarios, addressing challenges related to data scarcity and imbalanced datasets. By leveraging GAN-generated data, the model improves predictive accuracy while effectively capturing dynamic and temporal dependencies in supply chain data. The research focuses on three representative industries, namely manufacturing (steel), distribution (pharmaceuticals), and services (e-commerce), to assess industry-specific credit risk contagion. Experimental results demonstrate that the GAN-based model outperforms traditional methods, including logistic regression, decision trees, and neural networks, achieving superior accuracy, recall, and F1 scores. The findings underscore the potential of GANs in proactive risk management, offering robust tools for mitigating financial disruptions in supply chains. Future research could expand the model by incorporating external market factors and supplier relationships to further enhance predictive capabilities.

Keywords: Generative Adversarial Networks (GANs); Supply Chain Risk; Credit Risk Identification; Machine Learning; Data Augmentation

Developing Cryptocurrency Trading Strategy Based on Autoencoder-CNN-GANs Algorithms

Dec 24, 2024

Abstract:This paper leverages machine learning algorithms to forecast and analyze financial time series. The process begins with a denoising autoencoder to filter out random noise fluctuations from the main contract price data. Then, one-dimensional convolution reduces the dimensionality of the filtered data and extracts key information. The filtered and dimensionality-reduced price data is fed into a GAN, and its output serves as input to a fully connected network. Through cross-validation, a model is trained to capture features that precede large price fluctuations. The model predicts the likelihood and direction of significant price changes in real-time price sequences, placing trades at moments of high prediction accuracy. Empirical results demonstrate that using autoencoders and convolution to filter and denoise financial data, combined with GANs, achieves a certain level of predictive performance, validating the capabilities of machine learning algorithms to discover underlying patterns in financial sequences.

Keywords: CNN; GANs; Cryptocurrency; Prediction
