Shaojie Tang

Learning to Optimize Job Shop Scheduling Under Structural Uncertainty

Jan 29, 2026

Abstract:The Job-Shop Scheduling Problem (JSSP), under various forms of manufacturing uncertainty, has recently attracted considerable research attention. Most existing studies focus on parameter uncertainty, such as variable processing times, and typically adopt the actor-critic framework. In this paper, we explore a different but prevalent form of uncertainty in JSSP: structural uncertainty. Structural uncertainty arises when a job may follow one of several routing paths, and the selection is determined not by policy, but by situational factors (e.g., the quality of intermediate products) that cannot be known in advance. Existing methods struggle to address this challenge due to incorrect credit assignment: a high-quality action may be unfairly penalized if it is followed by a time-consuming path. To address this problem, we propose a novel method named UP-AAC. In contrast to conventional actor-critic methods, UP-AAC employs an asymmetric architecture. While its actor receives a standard stochastic state, the critic is crucially provided with a deterministic state reconstructed in hindsight. This design allows the critic to learn a more accurate value function, which in turn provides a lower-variance policy gradient to the actor, leading to more stable learning. In addition, we design an attention-based Uncertainty Perception Model (UPM) to enhance the actor's scheduling decisions. Extensive experiments demonstrate that our method outperforms existing approaches in reducing makespan on benchmark instances.

Scalable Mixed-Integer Optimization with Neural Constraints via Dual Decomposition

Nov 12, 2025

Abstract:Embedding deep neural networks (NNs) into mixed-integer programs (MIPs) is attractive for decision making with learned constraints, yet state-of-the-art monolithic linearisations blow up in size and quickly become intractable. In this paper, we introduce a novel dual-decomposition framework that relaxes the single coupling equality u=x with an augmented Lagrange multiplier and splits the problem into a vanilla MIP and a constrained NN block. Each part is tackled by the solver that suits it best (branch and cut for the MIP subproblem, first-order optimisation for the NN subproblem), so the model remains modular, the number of integer variables never grows with network depth, and the per-iteration cost scales only linearly with the NN size. On the public SurrogateLIB benchmark, our method proves scalable, modular, and adaptable: it runs 120× faster than an exact Big-M formulation on the largest test case; the NN sub-solver can be swapped from a log-barrier interior step to a projected-gradient routine with no code changes and identical objective value; and swapping the MLP for an LSTM backbone still completes the full optimisation in 47s without any bespoke adaptation.

MVP-Shapley: Feature-based Modeling for Evaluating the Most Valuable Player in Basketball

Jun 05, 2025

Abstract:The burgeoning growth of the esports and multiplayer online gaming community has highlighted the critical importance of evaluating the Most Valuable Player (MVP). Establishing an explainable and practical MVP evaluation method is very challenging. In our study, we specifically focus on play-by-play data, which records related events during the game, such as assists and points. We aim to address these challenges by introducing a new MVP evaluation framework, denoted as MVP-Shapley, which leverages Shapley values. This approach encompasses feature processing, win-loss model training, Shapley value allocation, and MVP ranking determination based on players' contributions. Additionally, we optimize our algorithm to align with expert voting results from the perspective of causality. Finally, we substantiate the efficacy of our method through validation on the NBA dataset and the Dunk City Dynasty dataset, and we deploy it online in industry.
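The Shapley value allocation and ranking steps named in the abstract above can be illustrated with a minimal sketch. It is not the paper's implementation: the players `A`, `B`, `C` and the function `toy_win_score` are hypothetical stand-ins for a trained win-loss model, and the exact enumeration over orderings is only practical for a handful of players.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average each player's marginal
    contribution over every possible joining order."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}


def toy_win_score(coalition):
    """Hypothetical stand-in for a win-loss model's output.
    Assumption: additive base scores plus a small A-B synergy."""
    base = {"A": 0.5, "B": 0.3, "C": 0.1}
    score = sum(base[p] for p in coalition)
    if "A" in coalition and "B" in coalition:
        score += 0.1  # synergy is split equally by the Shapley value
    return score


values = shapley_values(["A", "B", "C"], toy_win_score)
# Rank players by their attributed contribution, highest first.
ranking = sorted(values, key=values.get, reverse=True)
print(values, ranking)
```

The attributed values sum to the score of the full team, which is the efficiency property that makes Shapley values a natural basis for a contribution ranking.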

Automated Privacy Information Annotation in Large Language Model Interactions

May 27, 2025

Abstract:Users interacting with large language models (LLMs) under their real identifiers often unknowingly risk disclosing private information. Automatically notifying users whether their queries leak privacy and which phrases leak what private information has therefore become a practical need. Existing privacy detection methods, however, were designed for different objectives and application scenarios, typically tagging personally identifiable information (PII) in anonymous content. In this work, to support the development and evaluation of privacy detection models for LLM interactions that are deployable on local user devices, we construct a large-scale multilingual dataset with 249K user queries and 154K annotated privacy phrases. In particular, we build an automated privacy annotation pipeline with cloud-based strong LLMs to automatically extract privacy phrases from dialogue datasets and annotate leaked information. We also design evaluation metrics at the levels of privacy leakage, extracted privacy phrase, and privacy information. We further establish baseline methods using light-weight LLMs with both tuning-free and tuning-based methods, and report a comprehensive evaluation of their performance. Evaluation results reveal a gap between current performance and the requirements of real-world LLM applications, motivating future research into more effective local privacy detection methods grounded in our dataset.

Enhancing Visual Representation with Textual Semantics: Textual Semantics-Powered Prototypes for Heterogeneous Federated Learning

Mar 16, 2025

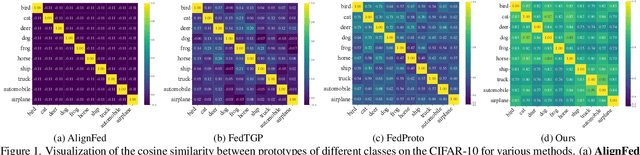

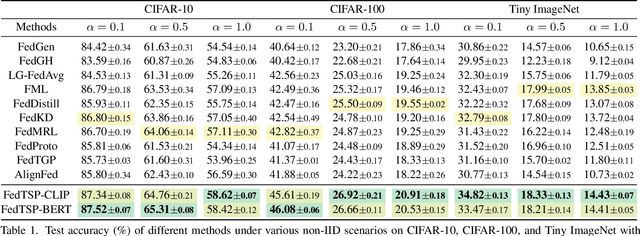

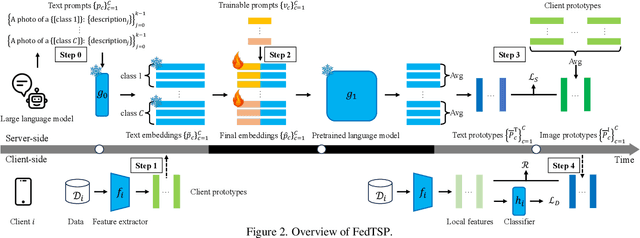

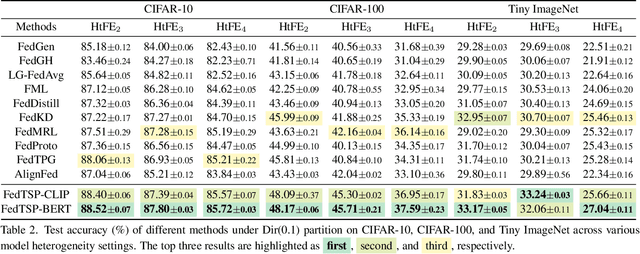

Abstract:Federated Prototype Learning (FedPL) has emerged as an effective strategy for handling data heterogeneity in Federated Learning (FL). In FedPL, clients collaboratively construct a set of global feature centers (prototypes), and let local features align with these prototypes to mitigate the effects of data heterogeneity. The performance of FedPL highly depends on the quality of prototypes. Existing methods assume that larger inter-class distances among prototypes yield better performance, and thus design different methods to increase these distances. However, we observe that while these methods increase prototype distances to enhance class discrimination, they inevitably disrupt essential semantic relationships among classes, which are crucial for model generalization. This raises an important question: how to construct prototypes that inherently preserve semantic relationships among classes? Directly learning these relationships from limited and heterogeneous client data can be problematic in FL. Recently, the success of pre-trained language models (PLMs) demonstrates their ability to capture semantic relationships from vast textual corpora. Motivated by this, we propose FedTSP, a novel method that leverages PLMs to construct semantically enriched prototypes from the textual modality, enabling more effective collaboration in heterogeneous data settings. We first use a large language model (LLM) to generate fine-grained textual descriptions for each class, which are then processed by a PLM on the server to form textual prototypes. To address the modality gap between client image models and the PLM, we introduce trainable prompts, allowing prototypes to adapt better to client tasks. Extensive experiments demonstrate that FedTSP mitigates data heterogeneity while significantly accelerating convergence.

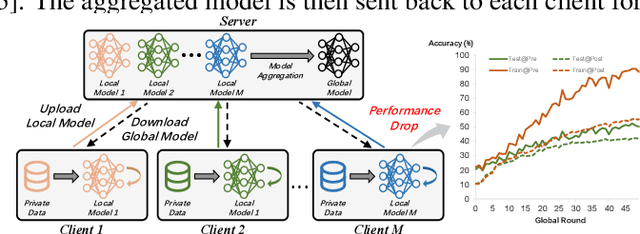

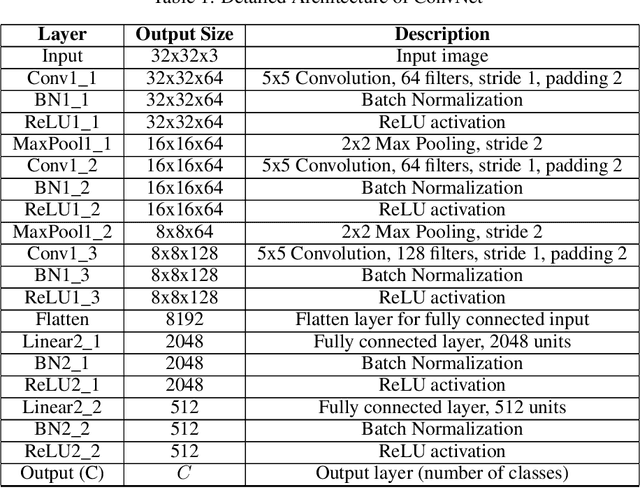

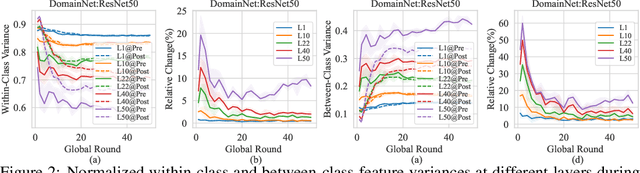

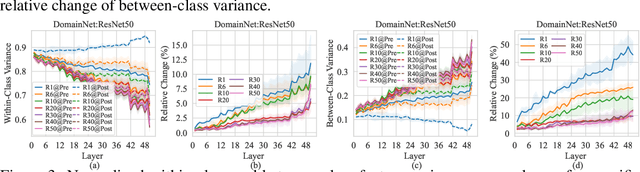

The Other Side of the Coin: Unveiling the Downsides of Model Aggregation in Federated Learning from a Layer-peeled Perspective

Feb 05, 2025

Abstract:In federated learning (FL), model aggregation is a critical step by which multiple clients share their knowledge with one another. However, it is also widely recognized that the aggregated model, when sent back to each client, performs poorly on local data until after several rounds of local training. This temporary performance drop can potentially slow down the convergence of the FL model. Most research in FL regards this performance drop as an inherent cost of knowledge sharing among clients and does not give it special attention. While some studies directly focus on designing techniques to alleviate the issue, an in-depth investigation of the reasons behind this performance drop has yet to be conducted. To address this gap, we conduct a layer-peeled analysis of model aggregation across various datasets and model architectures. Our findings reveal that the performance drop can be attributed to two major consequences of the aggregation process: (1) it disrupts feature variability suppression in deep neural networks (DNNs), and (2) it weakens the coupling between features and subsequent parameters. Based on these findings, we propose several simple yet effective strategies to mitigate the negative impacts of model aggregation while still enjoying the benefit it brings. To the best of our knowledge, our work is the first to conduct a layer-peeled analysis of model aggregation, potentially paving the way for the development of more effective FL algorithms.

A Unified Regularization Approach to High-Dimensional Generalized Tensor Bandits

Jan 18, 2025

Abstract:Modern decision-making scenarios often involve data that is both high-dimensional and rich in higher-order contextual information, where existing bandit algorithms fail to generate effective policies. In response, we propose in this paper a generalized linear tensor bandits algorithm designed to tackle these challenges by incorporating low-dimensional tensor structures, and further derive a unified analytical framework for the proposed algorithm. Specifically, our framework introduces a convex optimization approach with weakly decomposable regularizers, enabling it to not only achieve better results based on the tensor low-rankness structure assumption but also extend to cases involving other low-dimensional structures such as slice sparsity and low-rankness. The theoretical analysis shows that, compared to existing low-rank tensor results, our framework not only provides better bounds but also has broader applicability. Notably, in the special case of degenerating to low-rank matrices, our bounds still offer advantages in certain scenarios.

The Power of Adaptation: Boosting In-Context Learning through Adaptive Prompting

Dec 23, 2024

Abstract:Large Language Models (LLMs) have demonstrated exceptional abilities across a broad range of language-related tasks, including generating solutions to complex reasoning problems. An effective technique to enhance LLM performance is in-context learning, which encourages a step-by-step reasoning process by including explanatory examples to guide the model's responses. However, selecting appropriate exemplars for the model poses a challenge, as each dataset demands a distinct set of exemplars to enable the LLM to learn effectively and perform well on the test set. Current studies often rely on uncertainty- or diversity-based selection strategies to select exemplars for annotation and to improve model learning. However, these studies typically employ a non-adaptive approach, selecting a set of exemplars all at once. We argue that this non-adaptive strategy may result in a set of exemplars with high redundancy in terms of the knowledge covered, ultimately reducing their overall informativeness. To address this limitation, we propose Adaptive-Prompt, a novel method that adaptively selects exemplars by leveraging model feedback from previously chosen exemplars. Experimental results show that Adaptive-Prompt significantly enhances LLM performance across a variety of reasoning tasks.

Why Go Full? Elevating Federated Learning Through Partial Network Updates

Oct 15, 2024

Abstract:Federated learning is a distributed machine learning paradigm designed to protect user data privacy, which has been successfully implemented across various scenarios. In traditional federated learning, the entire parameter set of local models is updated and averaged in each training round. Although this full network update method maximizes knowledge acquisition and sharing for each model layer, it prevents the layers of the global model from cooperating effectively to complete the tasks of each client, a challenge we refer to as layer mismatch. This mismatch problem recurs after every parameter averaging, consequently slowing down model convergence and degrading overall performance. To address the layer mismatch issue, we introduce the FedPart method, which restricts model updates to either a single layer or a few layers during each communication round. Furthermore, to maintain the efficiency of knowledge acquisition and sharing, we develop several strategies to select trainable layers in each round, including sequential updating and multi-round cycle training. Through both theoretical analysis and experiments, our findings demonstrate that the FedPart method significantly surpasses conventional full network update strategies in terms of convergence speed and accuracy, while also reducing communication and computational overheads.
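The partial-update idea in the abstract above, with sequential layer selection, can be sketched as follows. This is a toy illustration, not the FedPart implementation: models are plain dictionaries of float lists, and `partial_update_round`, the layer names, and the "move toward a client target" local step are all hypothetical stand-ins for real local training.

```python
def average_layer(client_models, layer):
    """Element-wise mean of one layer's weights across clients."""
    n = len(client_models)
    length = len(client_models[0][layer])
    return [sum(m[layer][i] for m in client_models) / n for i in range(length)]


def partial_update_round(global_model, client_targets, round_idx, lr=0.5):
    """One communication round in which a single layer is trainable."""
    layers = list(global_model)
    layer = layers[round_idx % len(layers)]  # sequential layer selection
    client_models = []
    for target in client_targets:
        local = {name: list(w) for name, w in global_model.items()}
        # Toy local training (assumption): only the selected layer moves
        # toward the client's target; every other layer stays frozen.
        local[layer] = [w + lr * (t - w) for w, t in zip(local[layer], target[layer])]
        client_models.append(local)
    new_global = {name: list(w) for name, w in global_model.items()}
    # Only the trained layer needs to be communicated and averaged.
    new_global[layer] = average_layer(client_models, layer)
    return new_global, layer


global_model = {"conv": [0.0, 0.0], "fc": [0.0]}
client_targets = [
    {"conv": [1.0, 1.0], "fc": [2.0]},
    {"conv": [3.0, 3.0], "fc": [4.0]},
]
after_r0, layer_r0 = partial_update_round(global_model, client_targets, 0)
after_r1, layer_r1 = partial_update_round(after_r0, client_targets, 1)
print(layer_r0, after_r0)
print(layer_r1, after_r1)
```

Because only one layer changes per round, the frozen layers of the global model stay mutually consistent, and each client uploads a fraction of the full parameter set.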

Learning Submodular Sequencing from Samples

Sep 09, 2024

Abstract:This paper addresses the problem of sequential submodular maximization: selecting and ranking items in a sequence to optimize some composite submodular function. In contrast to most of the previous works, which assume access to the utility function, we assume that we are given only a set of samples. Each sample includes a random sequence of items and its associated utility. We present an algorithm that, given polynomially many samples drawn from a two-stage uniform distribution, achieves an approximation ratio dependent on the curvature of individual submodular functions. Our results apply in a wide variety of real-world scenarios, such as ranking products in online retail platforms, where complete knowledge of the utility function is often impossible to obtain. Our algorithm gives an empirically useful solution in such contexts, thus proving that limited data can be of great use in sequencing tasks. From a technical perspective, our results extend prior work on "optimization from samples" by generalizing from optimizing a set function to a sequence-dependent function.
