Communication-Aware Reinforcement Learning for Cooperative Adaptive Cruise Control

Paper and Code

Jul 12, 2024

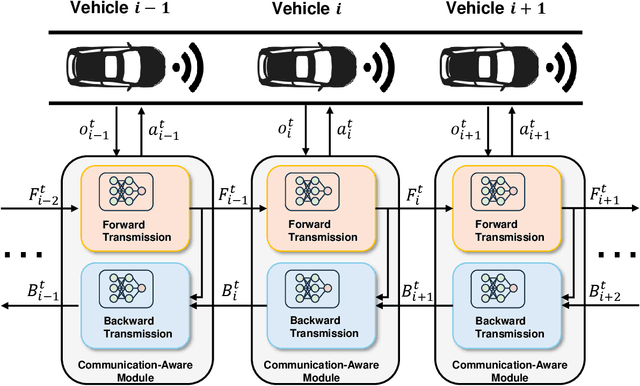

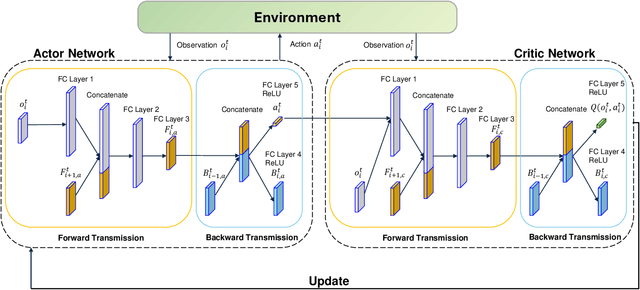

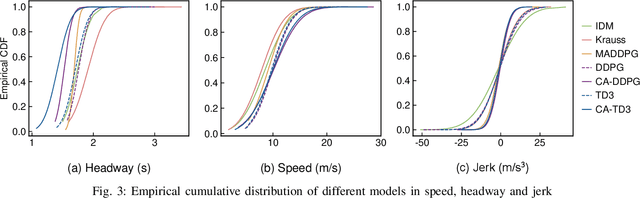

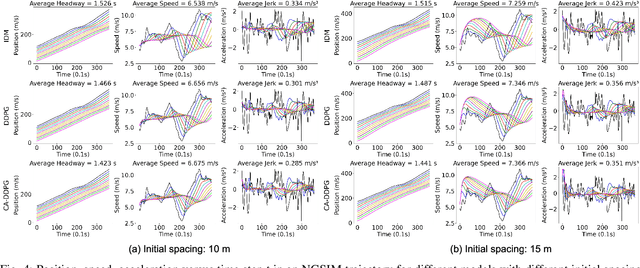

Cooperative Adaptive Cruise Control (CACC) plays a pivotal role in enhancing traffic efficiency and safety in Connected and Autonomous Vehicles (CAVs). Reinforcement Learning (RL) has proven effective in optimizing complex decision-making processes in CACC, leading to improved system performance and adaptability. Among RL approaches, Multi-Agent Reinforcement Learning (MARL) has shown remarkable potential by enabling coordinated actions among multiple CAVs through Centralized Training with Decentralized Execution (CTDE). However, MARL often faces scalability issues, particularly when CACC vehicles suddenly join or leave the platoon, resulting in performance degradation. To address these challenges, we propose Communication-Aware Reinforcement Learning (CA-RL). CA-RL includes a communication-aware module that extracts and compresses vehicle communication information through forward and backward information transmission modules. This enables efficient cyclic information propagation within the CACC traffic flow, ensuring policy consistency and mitigating the scalability problems of MARL in CACC. Experimental results demonstrate that CA-RL significantly outperforms baseline methods in various traffic scenarios, achieving superior scalability, robustness, and overall system performance while maintaining reliable performance despite changes in the number of participating vehicles.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge