Seongjin Choi

Weaver: Kronecker Product Approximations of Spatiotemporal Attention for Traffic Network Forecasting

Nov 12, 2025

Abstract:Spatiotemporal forecasting on transportation networks is a complex task that requires understanding how traffic nodes interact within a dynamic, evolving system dictated by traffic flow dynamics and social behavioral patterns. The importance of transportation networks and ITS for modern mobility and commerce necessitates forecasting models that are not only accurate but also interpretable, efficient, and robust under structural or temporal perturbations. Recent approaches, particularly Transformer-based architectures, have improved predictive performance but often at the cost of high computational overhead and diminished architectural interpretability. In this work, we introduce Weaver, a novel attention-based model that applies Kronecker product approximations (KPA) to decompose the PN X PN spatiotemporal attention of O(P^2N^2) complexity into local P X P temporal and N X N spatial attention maps. This Kronecker attention map enables our Parallel-Kronecker Matrix-Vector product (P2-KMV) for efficient spatiotemporal message passing with O(P^2N + N^2P) complexity. To capture real-world traffic dynamics, we address the importance of negative edges in modeling traffic behavior by introducing Valence Attention using the continuous Tanimoto coefficient (CTC), which provides properties conducive to precise latent graph generation and training stability. To fully utilize the model's learning capacity, we introduce the Traffic Phase Dictionary for self-conditioning. Evaluations on PEMS-BAY and METR-LA show that Weaver achieves competitive performance across model categories while training more efficiently.
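
The complexity reduction stated in the abstract follows from a standard identity: applying a Kronecker product of a P X P matrix and an N X N matrix to a row-major flattened P X N input is the same as two small matrix products. The sketch below illustrates that identity in plain Python; it is not the paper's P2-KMV implementation, and the function names (`kron_matvec`, `kron`, `matmul`, `transpose`) are hypothetical helpers chosen for this example.

```python
def matmul(a, b):
    """Matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]


def kron(a, b):
    """Dense Kronecker product; built here only to check the fast path."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]


def kron_matvec(a_t, a_s, x):
    """Apply (a_t ⊗ a_s) to the row-major flattening of x without forming it.

    a_t: P x P temporal map, a_s: N x N spatial map, x: P x N input.
    Uses (a_t ⊗ a_s) vec(x) = vec(a_t · x · a_s^T), which costs
    O(P^2 N + N^2 P) multiplications instead of O(P^2 N^2).
    """
    return matmul(matmul(a_t, x), transpose(a_s))


if __name__ == "__main__":
    a_t = [[1, 2], [3, 4]]                   # P = 2 time steps
    a_s = [[1, 0, 2], [0, 1, 1], [2, 1, 0]]  # N = 3 nodes
    x = [[1, 2, 3], [4, 5, 6]]               # one scalar feature per (time, node)

    fast = [v for row in kron_matvec(a_t, a_s, x) for v in row]
    flat = [v for row in x for v in row]
    dense = [sum(w * v for w, v in zip(row, flat)) for row in kron(a_t, a_s)]
    print(fast == dense)
```

The dense path materializes a PN X PN matrix, while the factored path only ever touches the P X P and N X N factors, which is where the O(P^2N + N^2P) cost comes from.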

BO4Mob: Bayesian Optimization Benchmarks for High-Dimensional Urban Mobility Problem

Oct 21, 2025

Abstract:We introduce BO4Mob, a new benchmark framework for high-dimensional Bayesian Optimization (BO), driven by the challenge of origin-destination (OD) travel demand estimation in large urban road networks. Estimating OD travel demand from limited traffic sensor data is a difficult inverse optimization problem, particularly in real-world, large-scale transportation networks. This problem involves optimizing over high-dimensional continuous spaces where each objective evaluation is computationally expensive, stochastic, and non-differentiable. BO4Mob comprises five scenarios based on real-world San Jose, CA road networks, with input dimensions scaling up to 10,100. These scenarios utilize high-resolution, open-source traffic simulations that incorporate realistic nonlinear and stochastic dynamics. We demonstrate the benchmark's utility by evaluating five optimization methods: three state-of-the-art BO algorithms and two non-BO baselines. This benchmark is designed to support both the development of scalable optimization algorithms and their application for the design of data-driven urban mobility models, including high-resolution digital twins of metropolitan road networks. Code and documentation are available at https://github.com/UMN-Choi-Lab/BO4Mob.

Federated Continual Recommendation

Aug 06, 2025

Abstract:The increasing emphasis on privacy in recommendation systems has led to the adoption of Federated Learning (FL) as a privacy-preserving solution, enabling collaborative training without sharing user data. While Federated Recommendation (FedRec) effectively protects privacy, existing methods struggle with non-stationary data streams, failing to maintain consistent recommendation quality over time. On the other hand, Continual Learning Recommendation (CLRec) methods address evolving user preferences but typically assume centralized data access, making them incompatible with FL constraints. To bridge this gap, we introduce Federated Continual Recommendation (FCRec), a novel task that integrates FedRec and CLRec, requiring models to learn from streaming data while preserving privacy. As a solution, we propose F3CRec, a framework designed to balance knowledge retention and adaptation under the strict constraints of FCRec. F3CRec introduces two key components: Adaptive Replay Memory on the client side, which selectively retains past preferences based on user-specific shifts, and Item-wise Temporal Mean on the server side, which integrates new knowledge while preserving prior information. Extensive experiments demonstrate that F3CRec outperforms existing approaches in maintaining recommendation quality over time in a federated environment.

Hypergraph Neural Sheaf Diffusion: A Symmetric Simplicial Set Framework for Higher-Order Learning

May 09, 2025

Abstract:The absence of intrinsic adjacency relations and orientation systems in hypergraphs creates fundamental challenges for constructing sheaf Laplacians of arbitrary degrees. We resolve these limitations through symmetric simplicial sets derived directly from hypergraphs, which encode all possible oriented subrelations within each hyperedge as ordered tuples. This construction canonically defines adjacency via facet maps while inherently preserving hyperedge provenance. We establish that the normalized degree zero sheaf Laplacian on our induced symmetric simplicial set reduces exactly to the traditional graph normalized sheaf Laplacian when restricted to graphs, validating its mathematical consistency with prior graph-based sheaf theory. Furthermore, the induced structure preserves all structural information from the original hypergraph, ensuring that every multi-way relational detail is faithfully retained. Leveraging this framework, we introduce Hypergraph Neural Sheaf Diffusion (HNSD), the first principled extension of Neural Sheaf Diffusion (NSD) to hypergraphs. HNSD operates via normalized degree zero sheaf Laplacians over symmetric simplicial sets, resolving orientation ambiguity and adjacency sparsity inherent to hypergraph learning. Experimental evaluations demonstrate HNSD's competitive performance across established benchmarks.

Toward Safe Integration of UAM in Terminal Airspace: UAM Route Feasibility Assessment using Probabilistic Aircraft Trajectory Prediction

Jan 28, 2025

Abstract:Integrating Urban Air Mobility (UAM) into airspace managed by Air Traffic Control (ATC) poses significant challenges, particularly in congested terminal environments. This study proposes a framework to assess the feasibility of UAM route integration using probabilistic aircraft trajectory prediction. By leveraging conditional Normalizing Flows, the framework predicts short-term trajectory distributions of conventional aircraft, enabling UAM vehicles to dynamically adjust speeds and maintain safe separations. The methodology was applied to airspace over Seoul metropolitan area, encompassing interactions between UAM and conventional traffic at multiple altitudes and lanes. The results reveal that different physical locations of lanes and routes experience varying interaction patterns and encounter dynamics. For instance, Lane 1 at lower altitudes (1,500 ft and 2,000 ft) exhibited minimal interactions with conventional aircraft, resulting in the largest separations and the most stable delay proportions. In contrast, Lane 4 near the airport experienced more frequent and complex interactions due to its proximity to departing traffic. The limited trajectory data for departing aircraft in this region occasionally led to tighter separations and increased operational challenges. This study underscores the potential of predictive modeling in facilitating UAM integration while highlighting critical trade-offs between safety and efficiency. The findings contribute to refining airspace management strategies and offer insights for scaling UAM operations in complex urban environments.

TrajFlow: A Generative Framework for Occupancy Density Estimation Using Normalizing Flows

Jan 24, 2025

Abstract:In transportation systems and autonomous vehicles, intelligent agents must understand the future motion of traffic participants to effectively plan motion trajectories. At the same time, the motion of traffic participants is inherently uncertain. In this paper, we propose TrajFlow, a generative framework for estimating the occupancy density of traffic participants. Our framework utilizes a causal encoder to extract semantically meaningful embeddings of the observed trajectory, as well as a normalizing flow to decode these embeddings and determine the most likely future location of traffic participants at some time point in the future. Our formulation differs from existing approaches because we model the marginal distribution of spatial locations instead of the joint distribution of unobserved trajectories. The advantages of a marginal formulation are numerous. First, we demonstrate that the marginal formulation produces higher accuracy on challenging trajectory forecasting benchmarks. Second, the marginal formulation allows for a fully continuous sampling of future locations. Finally, marginal densities are better suited for downstream tasks as they allow for the computation of per-agent motion trajectories and occupancy grids, the two most commonly used representations for motion forecasting. We present a novel architecture based entirely on neural differential equations as an implementation of this framework and provide ablations to demonstrate the advantages of a continuous implementation over a more traditional discrete neural network based approach. The code is available at https://github.com/kosieram21/TrajFlow .
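
The density evaluation that a normalizing flow performs can be illustrated with the simplest possible case. The sketch below is a one-dimensional affine flow with a standard normal base, using the change-of-variables rule log p(x) = log p_Z(f(x)) + log |df/dx|. It is not TrajFlow's architecture (the abstract describes one built on neural differential equations); the names `affine_flow_logpdf`, `shift`, and `log_scale` are hypothetical, and in a conditional flow the shift and scale would be produced from the trajectory embedding.

```python
import math


def standard_normal_logpdf(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))


def affine_flow_logpdf(x, shift, log_scale):
    """Log-density of x under the flow z = (x - shift) * exp(-log_scale).

    Change of variables: log p(x) = log p_Z(z) + log |dz/dx|,
    and for this map log |dz/dx| = -log_scale.
    """
    z = (x - shift) * math.exp(-log_scale)
    return standard_normal_logpdf(z) - log_scale


if __name__ == "__main__":
    # Hypothetical parameters standing in for outputs of a conditioning network.
    shift, log_scale = 1.0, math.log(2.0)
    print(affine_flow_logpdf(1.5, shift, log_scale))
```

Because the density is available in closed form at any point, it can be evaluated on a grid of locations to build an occupancy map, or sampled by drawing z from the base distribution and inverting the map.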

Adversarial Vulnerabilities in Large Language Models for Time Series Forecasting

Dec 11, 2024

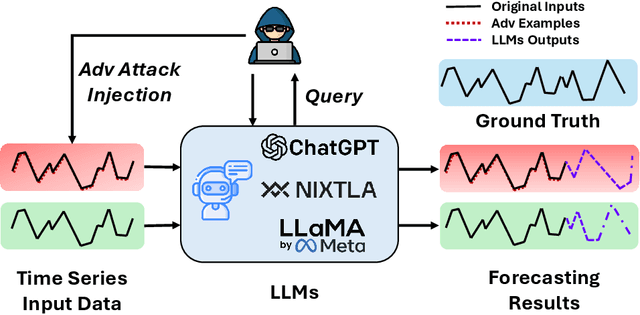

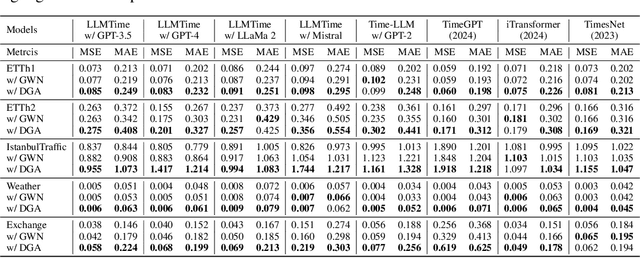

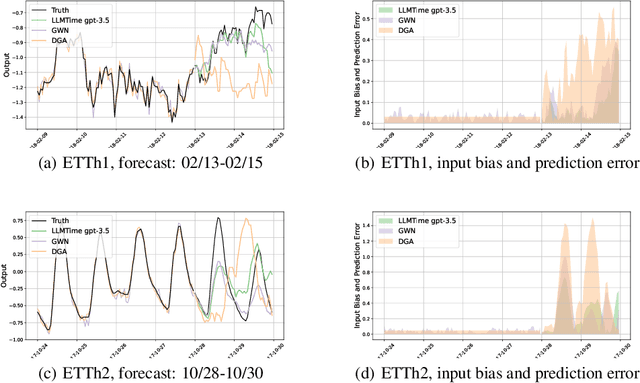

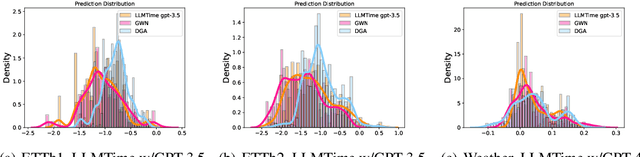

Abstract:Large Language Models (LLMs) have recently demonstrated significant potential in the field of time series forecasting, offering impressive capabilities in handling complex temporal data. However, their robustness and reliability in real-world applications remain under-explored, particularly concerning their susceptibility to adversarial attacks. In this paper, we introduce a targeted adversarial attack framework for LLM-based time series forecasting. By employing both gradient-free and black-box optimization methods, we generate minimal yet highly effective perturbations that significantly degrade the forecasting accuracy across multiple datasets and LLM architectures. Our experiments, which include models like TimeGPT and LLM-Time with GPT-3.5, GPT-4, LLaMa, and Mistral, show that adversarial attacks lead to much more severe performance degradation than random noise, and demonstrate the broad effectiveness of our attacks across different LLMs. The results underscore the critical vulnerabilities of LLMs in time series forecasting, highlighting the need for robust defense mechanisms to ensure their reliable deployment in practical applications.

A Gentle Introduction and Tutorial on Deep Generative Models in Transportation Research

Oct 09, 2024

Abstract:Deep Generative Models (DGMs) have rapidly advanced in recent years, becoming essential tools in various fields due to their ability to learn complex data distributions and generate synthetic data. Their importance in transportation research is increasingly recognized, particularly for applications like traffic data generation, prediction, and feature extraction. This paper offers a comprehensive introduction and tutorial on DGMs, with a focus on their applications in transportation. It begins with an overview of generative models, followed by detailed explanations of fundamental models, a systematic review of the literature, and practical tutorial code to aid implementation. The paper also discusses current challenges and opportunities, highlighting how these models can be effectively utilized and further developed in transportation research. This paper serves as a valuable reference, guiding researchers and practitioners from foundational knowledge to advanced applications of DGMs in transportation research.

Communication-Aware Reinforcement Learning for Cooperative Adaptive Cruise Control

Jul 12, 2024

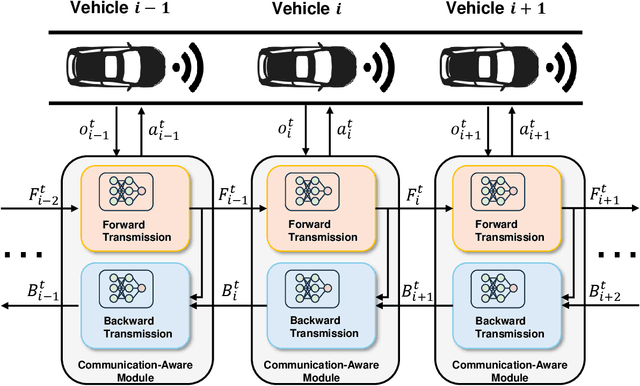

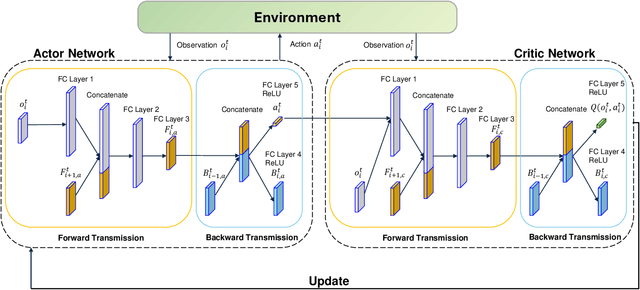

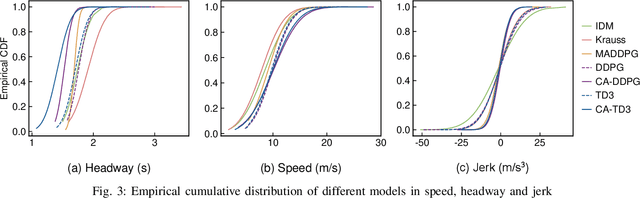

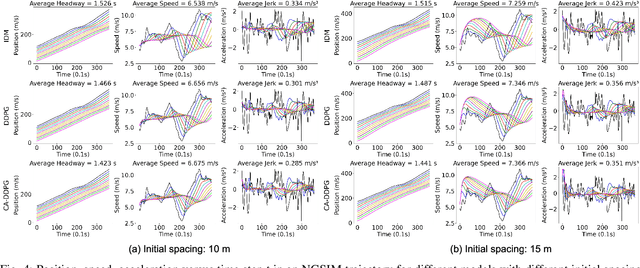

Abstract:Cooperative Adaptive Cruise Control (CACC) plays a pivotal role in enhancing traffic efficiency and safety in Connected and Autonomous Vehicles (CAVs). Reinforcement Learning (RL) has proven effective in optimizing complex decision-making processes in CACC, leading to improved system performance and adaptability. Among RL approaches, Multi-Agent Reinforcement Learning (MARL) has shown remarkable potential by enabling coordinated actions among multiple CAVs through Centralized Training with Decentralized Execution (CTDE). However, MARL often faces scalability issues, particularly when CACC vehicles suddenly join or leave the platoon, resulting in performance degradation. To address these challenges, we propose Communication-Aware Reinforcement Learning (CA-RL). CA-RL includes a communication-aware module that extracts and compresses vehicle communication information through forward and backward information transmission modules. This enables efficient cyclic information propagation within the CACC traffic flow, ensuring policy consistency and mitigating the scalability problems of MARL in CACC. Experimental results demonstrate that CA-RL significantly outperforms baseline methods in various traffic scenarios, achieving superior scalability, robustness, and overall system performance while maintaining reliable performance despite changes in the number of participating vehicles.

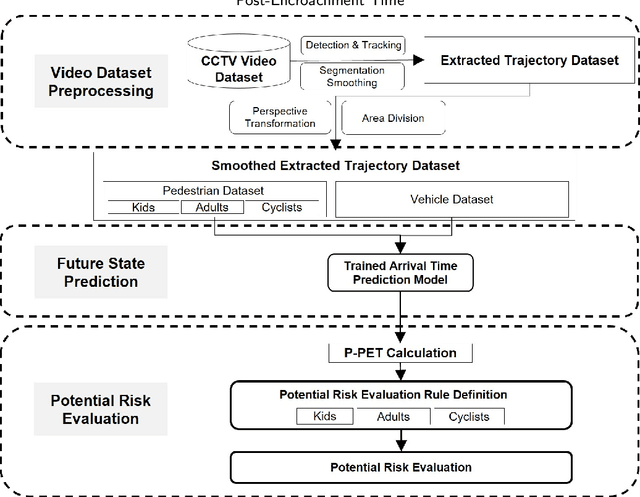

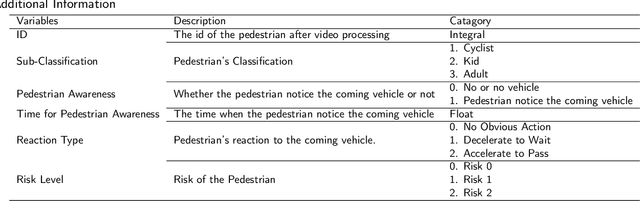

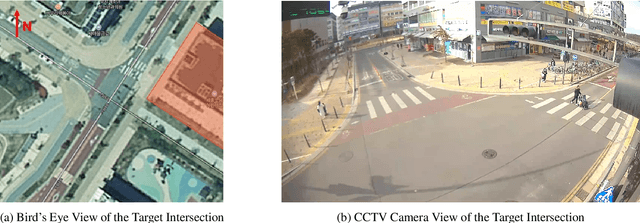

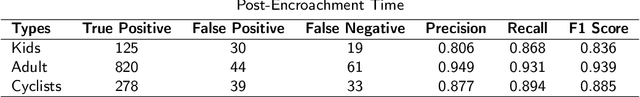

A Real-time Evaluation Framework for Pedestrian's Potential Risk at Non-Signalized Intersections Based on Predicted Post-Encroachment Time

Apr 24, 2024

Abstract:Addressing pedestrian safety at intersections is one of the paramount concerns in the field of transportation research, driven by the urgency of reducing traffic-related injuries and fatalities. With advances in computer vision technologies and predictive models, the pursuit of developing real-time proactive protection systems is increasingly recognized as vital to improving pedestrian safety at intersections. The core of these protection systems lies in the prediction-based evaluation of pedestrians' potential risks, which plays a significant role in preventing the occurrence of accidents. The major challenges in the current prediction-based potential risk evaluation research can be summarized into three aspects: the inadequate progress in creating a real-time framework for the evaluation of pedestrians' potential risks, the absence of accurate and explainable safety indicators that can represent the potential risk, and the lack of tailor-made evaluation criteria specifically for each category of pedestrians. To address these research challenges, in this study, a framework with computer vision technologies and predictive models is developed to evaluate the potential risk of pedestrians in real time. Integral to this framework is a novel surrogate safety measure, the Predicted Post-Encroachment Time (P-PET), derived from deep learning models capable of predicting the arrival time of pedestrians and vehicles at intersections. To further improve the effectiveness and reliability of pedestrian risk evaluation, we classify pedestrians into distinct categories and apply specific evaluation criteria for each group. The results demonstrate the framework's ability to effectively identify potential risks through the use of P-PET, indicating its feasibility for real-time applications and its improved performance in risk evaluation across different categories of pedestrians.
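
A rough sketch of how a predicted post-encroachment time could feed a category-specific risk check is given below. It assumes, for illustration only, that P-PET is the absolute gap between the predicted arrival times of the pedestrian and the vehicle at the conflict area. The abstract does not give the exact formula, and the category names and threshold values in `THRESHOLDS_S` are hypothetical placeholders, not values from the paper.

```python
# Hypothetical per-category thresholds in seconds (not taken from the paper).
THRESHOLDS_S = {"adult": 2.0, "child": 3.0, "elderly": 3.5}


def predicted_pet(ped_arrival_s, veh_arrival_s):
    """Gap in seconds between the two predicted arrival times.

    Assumption: both times are predictions of when each road user
    reaches the same conflict area, measured from the current frame.
    """
    return abs(veh_arrival_s - ped_arrival_s)


def is_risky(ped_arrival_s, veh_arrival_s, category):
    """Flag an encounter when the predicted gap is below the category threshold."""
    return predicted_pet(ped_arrival_s, veh_arrival_s) < THRESHOLDS_S[category]


if __name__ == "__main__":
    # Pedestrian predicted at 4.0 s, vehicle at 6.5 s: a 2.5 s predicted gap.
    print(predicted_pet(4.0, 6.5))
    print(is_risky(4.0, 6.5, "adult"), is_risky(4.0, 6.5, "child"))
```

Keeping the thresholds in a lookup keyed by pedestrian category mirrors the abstract's point that evaluation criteria differ per group: the same predicted gap can be acceptable for one category and flagged for another.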
