Chandan Kumar Sah

Beihang University

Uncertainty and Fairness Awareness in LLM-Based Recommendation Systems

Jan 31, 2026

Abstract:Large language models (LLMs) enable powerful zero-shot recommendations by leveraging broad contextual knowledge, yet predictive uncertainty and embedded biases threaten reliability and fairness. This paper studies how uncertainty and fairness evaluations affect the accuracy, consistency, and trustworthiness of LLM-generated recommendations. We introduce a benchmark of curated metrics and a dataset annotated for eight demographic attributes (31 categorical values) across two domains: movies and music. Through in-depth case studies, we quantify predictive uncertainty (via entropy) and demonstrate that Google DeepMind's Gemini 1.5 Flash exhibits systematic unfairness for certain sensitive attributes; measured similarity-based gaps are SNSR at 0.1363 and SNSV at 0.0507. These disparities persist under prompt perturbations such as typographical errors and multilingual inputs. We further integrate personality-aware fairness into the RecLLM evaluation pipeline to reveal personality-linked bias patterns and expose trade-offs between personalization and group fairness. We propose a novel uncertainty-aware evaluation methodology for RecLLMs, present empirical insights from deep uncertainty case studies, and introduce a personality profile-informed fairness benchmark that advances explainability and equity in LLM recommendations. Together, these contributions establish a foundation for safer, more interpretable RecLLMs and motivate future work on multi-model benchmarks and adaptive calibration for trustworthy deployment.
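
The abstract above mentions quantifying predictive uncertainty via entropy but does not give the formulation. As a minimal sketch, assuming uncertainty is measured as the Shannon entropy of the empirical distribution of top-1 recommendations across repeated runs of the same prompt (the function name and the sample titles are hypothetical, not taken from the paper):

```python
import math
from collections import Counter


def predictive_entropy(samples):
    """Shannon entropy (in bits) of the empirical distribution of sampled items."""
    counts = Counter(samples)
    total = sum(counts.values())
    # Each term is -p * log2(p), where p is the item's observed frequency.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical top-1 recommendations from eight runs of the same prompt.
stable_runs = ["Inception"] * 8
unstable_runs = ["Inception", "Heat", "Alien", "Up"] * 2

stable_entropy = predictive_entropy(stable_runs)      # one outcome only
unstable_entropy = predictive_entropy(unstable_runs)  # four equally likely outcomes
```

Under this assumption, a model that always returns the same item has zero entropy, while one that spreads its answers evenly over four items has an entropy of two bits.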

CleanMAP: Distilling Multimodal LLMs for Confidence-Driven Crowdsourced HD Map Updates

Apr 14, 2025

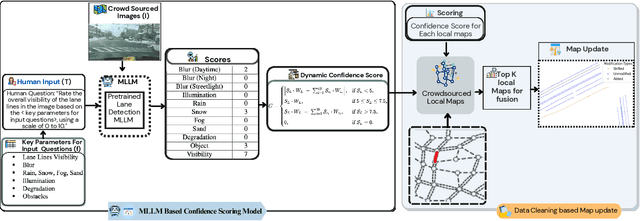

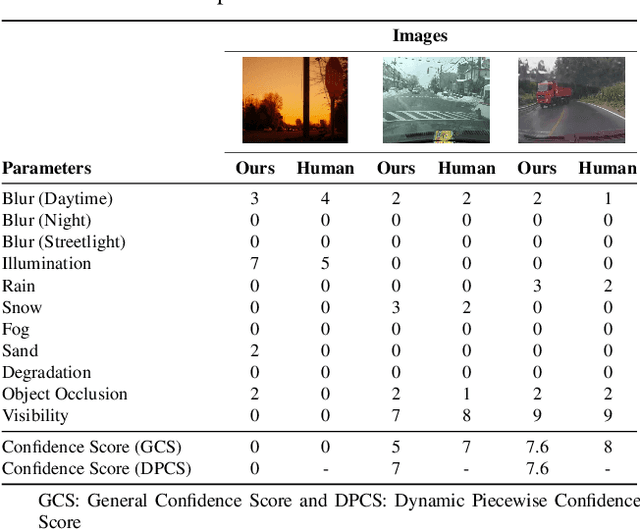

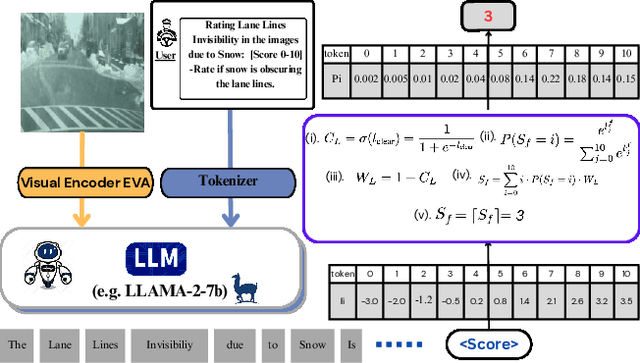

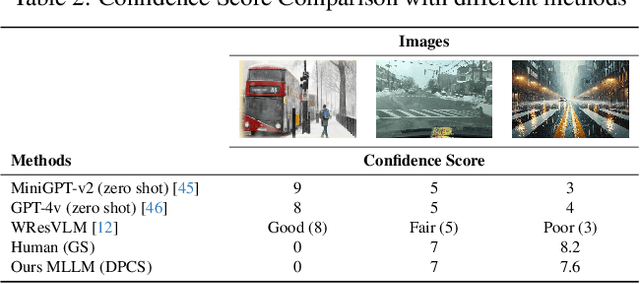

Abstract:The rapid growth of intelligent connected vehicles (ICVs) and integrated vehicle-road-cloud systems has increased the demand for accurate, real-time HD map updates. However, ensuring map reliability remains challenging due to inconsistencies in crowdsourced data, which suffer from motion blur, lighting variations, adverse weather, and lane marking degradation. This paper introduces CleanMAP, a Multimodal Large Language Model (MLLM)-based distillation framework designed to filter and refine crowdsourced data for high-confidence HD map updates. CleanMAP leverages an MLLM-driven lane visibility scoring model that systematically quantifies key visual parameters, assigning confidence scores (0-10) based on their impact on lane detection. A novel dynamic piecewise confidence-scoring function adapts scores based on lane visibility, ensuring strong alignment with human evaluations while effectively filtering unreliable data. To further optimize map accuracy, a confidence-driven local map fusion strategy ranks and selects the top-k highest-scoring local maps within an optimal confidence range (best score minus 10%), striking a balance between data quality and quantity. Experimental evaluations on a real-world autonomous vehicle dataset validate CleanMAP's effectiveness, demonstrating that fusing the top three local maps achieves the lowest mean map update error of 0.28m, outperforming the baseline (0.37m) and meeting stringent accuracy thresholds (<= 0.32m). Further validation with real-vehicle data confirms 84.88% alignment with human evaluators, reinforcing the model's robustness and reliability. This work establishes CleanMAP as a scalable and deployable solution for crowdsourced HD map updates, ensuring more precise and reliable autonomous navigation. The code will be available at https://Ankit-Zefan.github.io/CleanMap/
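
The confidence-driven fusion step described above (rank local maps, keep the top-k within a range of the best score) can be sketched as follows. This is an illustration under stated assumptions, not CleanMAP's implementation: "best score minus 10%" is read here as a threshold of 90% of the best score, and the function name, tuple layout, and sample scores are hypothetical.

```python
def select_local_maps(scored_maps, k=3, margin=0.10):
    """Return up to k (name, score) pairs whose score is within `margin` of the best.

    Assumption: the confidence range is relative, i.e. score >= best * (1 - margin).
    """
    if not scored_maps:
        return []
    best = max(score for _, score in scored_maps)
    threshold = best * (1.0 - margin)
    # Keep only candidates inside the confidence range, highest score first.
    eligible = [m for m in scored_maps if m[1] >= threshold]
    eligible.sort(key=lambda m: m[1], reverse=True)
    return eligible[:k]


# Hypothetical local maps with confidence scores on the 0-10 scale.
candidates = [
    ("map_a", 9.2),
    ("map_b", 8.9),
    ("map_c", 8.5),
    ("map_d", 8.1),
    ("map_e", 6.0),
]
selected = select_local_maps(candidates, k=3)
selected_names = [name for name, _ in selected]
```

With these sample scores the threshold is 8.28, so `map_d` and `map_e` are filtered out before the top-k cut is applied. If the paper intends an absolute margin (for example, one point on the 0-10 scale), only the `threshold` line would change.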

FairEval: Evaluating Fairness in LLM-Based Recommendations with Personality Awareness

Apr 10, 2025

Abstract:Recent advances in Large Language Models (LLMs) have enabled their application to recommender systems (RecLLMs), yet concerns remain regarding fairness across demographic and psychological user dimensions. We introduce FairEval, a novel evaluation framework to systematically assess fairness in LLM-based recommendations. FairEval integrates personality traits with eight sensitive demographic attributes, including gender, race, and age, enabling a comprehensive assessment of user-level bias. We evaluate models, including ChatGPT 4o and Gemini 1.5 Flash, on music and movie recommendations. FairEval's fairness metric, PAFS, achieves scores up to 0.9969 for ChatGPT 4o and 0.9997 for Gemini 1.5 Flash, with disparities reaching 34.79 percent. These results highlight the importance of robustness in prompt sensitivity and support more inclusive recommendation systems.

Advancing Autonomous Vehicle Intelligence: Deep Learning and Multimodal LLM for Traffic Sign Recognition and Robust Lane Detection

Mar 08, 2025

Abstract:Autonomous vehicles (AVs) require reliable traffic sign recognition and robust lane detection capabilities to ensure safe navigation in complex and dynamic environments. This paper introduces an integrated approach combining advanced deep learning techniques and Multimodal Large Language Models (MLLMs) for comprehensive road perception. For traffic sign recognition, we systematically evaluate ResNet-50, YOLOv8, and RT-DETR, achieving state-of-the-art accuracy of 99.8% with ResNet-50, 98.0% with YOLOv8, and 96.6% with RT-DETR despite its higher computational complexity. For lane detection, we propose a CNN-based segmentation method enhanced by polynomial curve fitting, which delivers high accuracy under favorable conditions. Furthermore, we introduce a lightweight, multimodal LLM-based framework that directly undergoes instruction tuning using small yet diverse datasets, eliminating the need for initial pretraining. This framework effectively handles various lane types, complex intersections, and merging zones, significantly enhancing lane detection reliability by reasoning under adverse conditions. Despite constraints in available training resources, our multimodal approach demonstrates advanced reasoning capabilities, achieving a Frame Overall Accuracy (FRM) of 53.87%, a Question Overall Accuracy (QNS) of 82.83%, lane detection accuracies of 99.6% in clear conditions and 93.0% at night, and robust performance in reasoning about lane invisibility due to rain (88.4%) or road degradation (95.6%). The proposed comprehensive framework markedly enhances AV perception reliability, thus contributing significantly to safer autonomous driving across diverse and challenging road scenarios.

Event-Based Adaptive Koopman Framework for Optic Flow-Guided Landing on Moving Platforms

Jan 28, 2025

Abstract:This paper presents an optic flow-guided approach for achieving soft landings by resource-constrained unmanned aerial vehicles (UAVs) on dynamic platforms. An offline data-driven linear model based on Koopman operator theory is developed to describe the underlying (nonlinear) dynamics of optic flow output obtained from a single monocular camera that maps to vehicle acceleration as the control input. Moreover, a novel adaptation scheme within the Koopman framework is introduced online to handle uncertainties such as unknown platform motion and ground effect, which exert a significant influence during the terminal stage of the descent process. Further, to minimize computational overhead, an event-based adaptation trigger is incorporated into an event-driven Model Predictive Control (MPC) strategy to regulate optic flow and track a desired reference. A detailed convergence analysis ensures global convergence of the tracking error to a uniform ultimate bound while ensuring Zeno-free behavior. Simulation results demonstrate the algorithm's robustness and effectiveness in landing on dynamic platforms under ground effect and sensor noise, which compares favorably to non-adaptive event-triggered and time-triggered adaptive schemes.

Adaptive Koopman Embedding for Robust Control of Complex Dynamical Systems

May 15, 2024

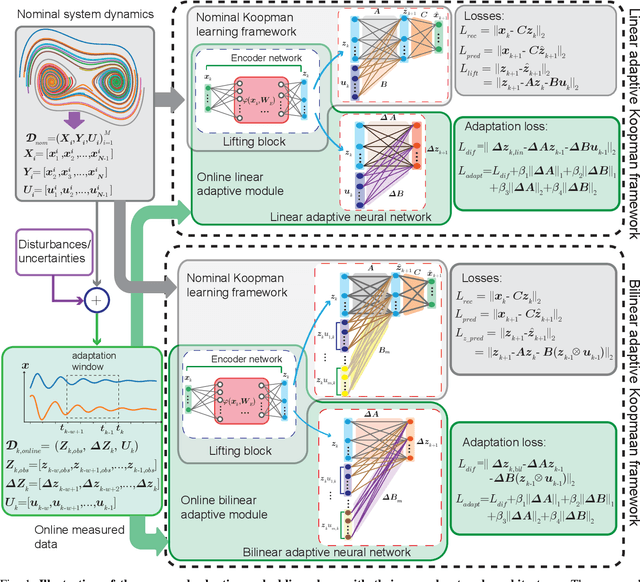

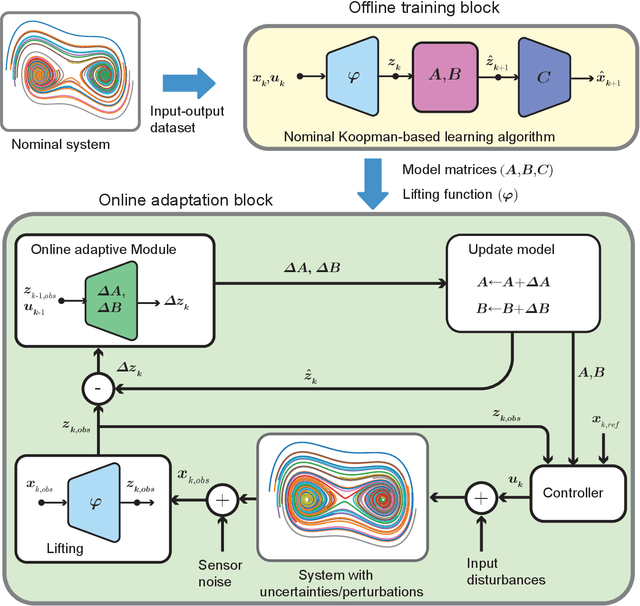

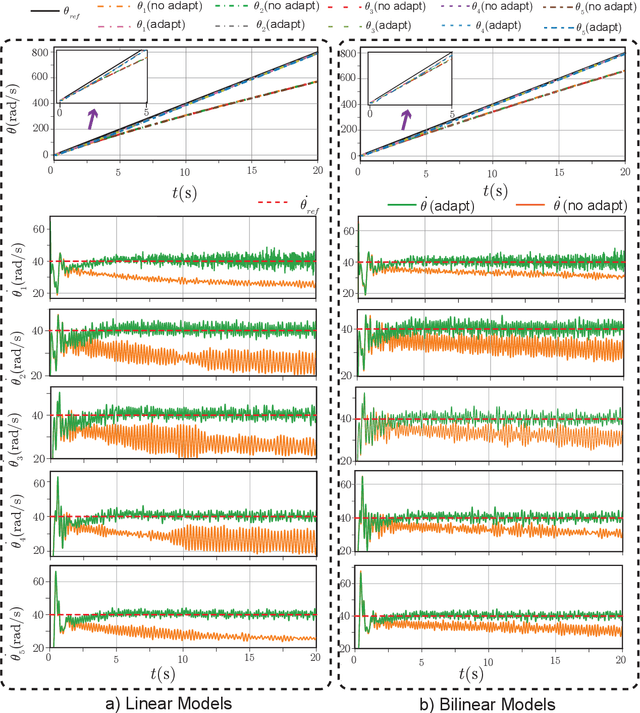

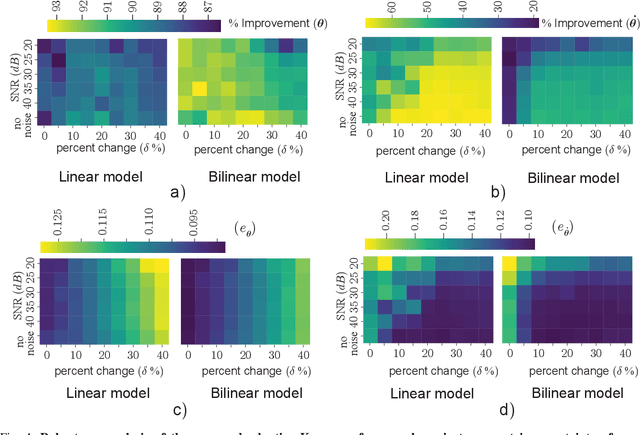

Abstract:The discovery of linear embedding is the key to the synthesis of linear control techniques for nonlinear systems. In recent years, while Koopman operator theory has become a prominent approach for learning these linear embeddings through data-driven methods, these algorithms often exhibit limitations in generalizability beyond the distribution captured by training data and are not robust to changes in the nominal system dynamics induced by intrinsic or environmental factors. To overcome these limitations, this study presents an adaptive Koopman architecture capable of responding to the changes in system dynamics online. The proposed framework initially employs an autoencoder-based neural network that utilizes input-output information from the nominal system to learn the corresponding Koopman embedding offline. Subsequently, we augment this nominal Koopman architecture with a feed-forward neural network that learns to modify the nominal dynamics in response to any deviation between the predicted and observed lifted states, leading to improved generalization and robustness to a wide range of uncertainties and disturbances compared to contemporary methods. Extensive tracking control simulations, which are undertaken by integrating the proposed scheme within a Model Predictive Control framework, are used to highlight its robustness against measurement noise, disturbances, and parametric variations in system dynamics.
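
To illustrate what a linear embedding of a nonlinear system looks like, the sketch below fits a Koopman matrix offline from data. It is not the paper's method: the autoencoder is swapped for a fixed, hand-picked dictionary of observables and ordinary least squares (an EDMD-style fit), and the toy system, its parameters, and all function names are assumptions chosen so that the lifted dynamics are exactly linear.

```python
def lift(x1, x2):
    """Hypothetical dictionary of observables: (x1, x2, x1^2)."""
    return [x1, x2, x1 * x1]


def step(x1, x2, a=0.9, b=0.5, c=0.2):
    """Toy nonlinear system that is exactly linear in the lifted coordinates."""
    return a * x1, b * x2 + c * x1 * x1


def solve(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row_a[:] + row_b[:] for row_a, row_b in zip(A, B)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [v - f * w for v, w in zip(M[r], M[col])]
    return [row[n:] for row in M]


def fit_koopman(pairs):
    """Least-squares K such that lift(next_state) is approximately K @ lift(state)."""
    Z = [lift(*x) for x, _ in pairs]
    Zn = [lift(*y) for _, y in pairs]
    n = len(Z[0])
    # Normal equations: (Z^T Z) K^T = Z^T Zn
    G = [[sum(z[i] * z[j] for z in Z) for j in range(n)] for i in range(n)]
    H = [[sum(z[i] * zn[j] for z, zn in zip(Z, Zn)) for j in range(n)]
         for i in range(n)]
    Kt = solve(G, H)
    return [[Kt[j][i] for j in range(n)] for i in range(n)]


# Snapshot pairs (state, next state) collected from a grid of initial states.
states = [(x1, x2) for x1 in (-1.0, -0.5, 0.5, 1.0) for x2 in (-1.0, 0.0, 1.0)]
pairs = [(s, step(*s)) for s in states]
K = fit_koopman(pairs)
```

For this toy system the recovered `K` should match `[[a, 0, 0], [0, b, c], [0, 0, a*a]]` up to floating-point error. The adaptive scheme described in the abstract would then correct such a nominal model online when the predicted and observed lifted states diverge; that part is not sketched here.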

Unveiling Bias in Fairness Evaluations of Large Language Models: A Critical Literature Review of Music and Movie Recommendation Systems

Jan 08, 2024

Abstract:The rise of generative artificial intelligence, particularly Large Language Models (LLMs), has intensified the imperative to scrutinize fairness alongside accuracy. Recent studies have begun to investigate fairness evaluations for LLMs within domains such as recommendations. Given that personalization is an intrinsic aspect of recommendation systems, its incorporation into fairness assessments is paramount. Yet, the degree to which current fairness evaluation frameworks account for personalization remains unclear. Our comprehensive literature review aims to fill this gap by examining how existing frameworks handle fairness evaluations of LLMs, with a focus on the integration of personalization factors. Despite an exhaustive collection and analysis of relevant works, we discovered that most evaluations overlook personalization, a critical facet of recommendation systems, thereby inadvertently perpetuating unfair practices. Our findings shed light on this oversight and underscore the urgent need for more nuanced fairness evaluations that acknowledge personalization. Such improvements are vital for fostering equitable development within the AI community.
