Qiang Xu

Eric

Position: Universal Time Series Foundation Models Rest on a Category Error

Feb 05, 2026

Abstract: This position paper argues that the pursuit of "Universal Foundation Models for Time Series" rests on a fundamental category error, mistaking a structural Container for a semantic Modality. We contend that because time series hold incompatible generative processes (e.g., finance vs. fluid dynamics), monolithic models degenerate into expensive "Generic Filters" that fail to generalize under distributional drift. To address this, we introduce the "Autoregressive Blindness Bound," a theoretical limit proving that history-only models cannot predict intervention-driven regime shifts. We advocate replacing universality with a Causal Control Agent paradigm, where an agent leverages external context to orchestrate a hierarchy of specialized solvers, from frozen domain experts to lightweight Just-in-Time adaptors. We conclude by calling for a shift in benchmarks from "Zero-Shot Accuracy" to "Drift Adaptation Speed" to prioritize robust, control-theoretic systems.

FocaLogic: Logic-Based Interpretation of Visual Model Decisions

Jan 17, 2026

Abstract: Interpretability of modern visual models is crucial, particularly in high-stakes applications. However, existing interpretability methods typically suffer from either reliance on white-box model access or insufficient quantitative rigor. To address these limitations, we introduce FocaLogic, a novel model-agnostic framework designed to interpret and quantify visual model decision-making through logic-based representations. FocaLogic identifies minimal interpretable subsets of visual regions, termed visual focuses, that decisively influence model predictions. It translates these visual focuses into precise and compact logical expressions, enabling transparent and structured interpretations. Additionally, we propose a suite of quantitative metrics, including focus precision, recall, and divergence, to objectively evaluate model behavior across diverse scenarios. Empirical analyses demonstrate FocaLogic's capability to uncover critical insights such as training-induced concentration, increasing focus accuracy through generalization, and anomalous focuses under biases and adversarial attacks. Overall, FocaLogic provides a systematic, scalable, and quantitative solution for interpreting visual models.

GNN-based Path-aware multi-view Circuit Learning for Technology Mapping

Jan 14, 2026

Abstract: Traditional technology mapping suffers from systemic inaccuracies in delay estimation due to its reliance on abstract, technology-agnostic delay models that fail to capture the nuanced timing behavior of real post-mapping circuits. To address this fundamental limitation, we introduce GPA (graph neural network (GNN)-based Path-Aware multi-view circuit learning), a novel GNN framework that learns precise, data-driven delay predictions by synergistically fusing three complementary views of circuit structure: And-Inverter Graph (AIG)-based functional encoding, a post-mapping technology view, and a path-aware view that emphasizes critical timing paths. Trained exclusively on real cell delays extracted from critical paths of industrial-grade post-mapping netlists, GPA learns to classify cut delays with unprecedented accuracy, directly informing smarter mapping decisions. Evaluated on the 19 EPFL combinational benchmarks, GPA achieves 19.9%, 2.1% and 4.1% average delay reduction over the conventional heuristic methods (techmap, MCH) and the prior state-of-the-art ML-based approach SLAP, respectively, without compromising area efficiency.

Activations as Features: Probing LLMs for Generalizable Essay Scoring Representations

Dec 22, 2025

Abstract: Automated essay scoring (AES) is a challenging task in cross-prompt settings due to the diversity of scoring criteria. While previous studies have focused on the output of large language models (LLMs) to improve scoring accuracy, we believe activations from intermediate layers may also provide valuable information. To explore this possibility, we evaluated the discriminative power of LLMs' activations in the cross-prompt essay scoring task. Specifically, we used activations to fit probes and further analyzed the effects of different models and input content of LLMs on this discriminative power. By computing the directions of essays across various trait dimensions under different prompts, we analyzed the variation in evaluation perspectives of large language models concerning essay types and traits. Results show that the activations possess strong discriminative power in evaluating essay quality and that LLMs can adapt their evaluation perspectives to different traits and essay types, effectively handling the diversity of scoring criteria in cross-prompt settings.

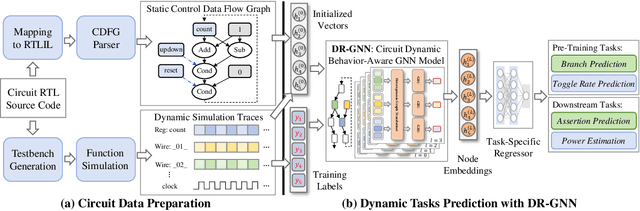

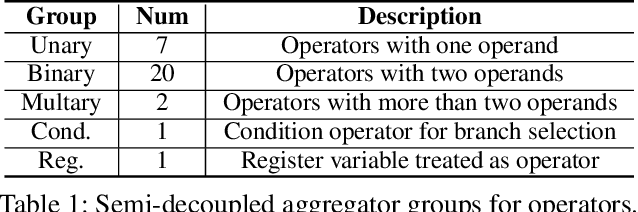

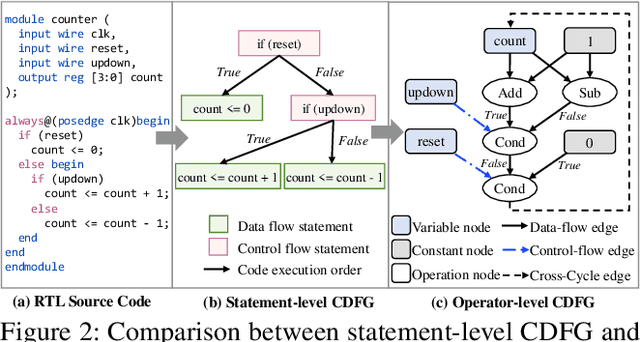

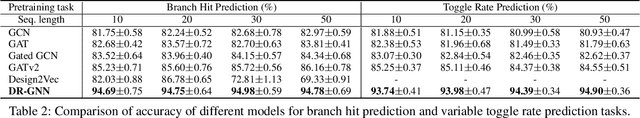

DynamicRTL: RTL Representation Learning for Dynamic Circuit Behavior

Nov 12, 2025

Abstract: There is a growing body of work on using Graph Neural Networks (GNNs) to learn representations of circuits, focusing primarily on their static characteristics. However, these models fail to capture circuit runtime behavior, which is crucial for tasks like circuit verification and optimization. To address this limitation, we introduce DR-GNN (DynamicRTL-GNN), a novel approach that learns RTL circuit representations by incorporating both static structures and multi-cycle execution behaviors. DR-GNN leverages an operator-level Control Data Flow Graph (CDFG) to represent Register Transfer Level (RTL) circuits, enabling the model to capture dynamic dependencies and runtime execution. To train and evaluate DR-GNN, we build the first comprehensive dynamic circuit dataset, comprising over 6,300 Verilog designs and 63,000 simulation traces. Our results demonstrate that DR-GNN outperforms existing models in branch hit prediction and toggle rate prediction. Furthermore, its learned representations transfer effectively to related dynamic circuit tasks, achieving strong performance in power estimation and assertion prediction.

FailureAtlas: Mapping the Failure Landscape of T2I Models via Active Exploration

Sep 26, 2025

Abstract: Static benchmarks have provided a valuable foundation for comparing Text-to-Image (T2I) models. However, their passive design offers limited diagnostic power, struggling to uncover the full landscape of systematic failures or isolate their root causes. We argue for a complementary paradigm: active exploration. We introduce FailureAtlas, the first framework designed to autonomously explore and map the vast failure landscape of T2I models at scale. FailureAtlas frames error discovery as a structured search for minimal, failure-inducing concepts. While it is a computationally explosive problem, we make it tractable with novel acceleration techniques. When applied to Stable Diffusion models, our method uncovers hundreds of thousands of previously unknown error slices (over 247,000 in SD1.5 alone) and provides the first large-scale evidence linking these failures to data scarcity in the training set. By providing a principled and scalable engine for deep model auditing, FailureAtlas establishes a new, diagnostic-first methodology to guide the development of more robust generative AI. The code is available at https://github.com/cure-lab/FailureAtlas

Concept-SAE: Active Causal Probing of Visual Model Behavior

Sep 26, 2025

Abstract: Standard Sparse Autoencoders (SAEs) excel at discovering a dictionary of a model's learned features, offering a powerful observational lens. However, the ambiguous and ungrounded nature of these features makes them unreliable instruments for the active, causal probing of model behavior. To solve this, we introduce Concept-SAE, a framework that forges semantically grounded concept tokens through a novel hybrid disentanglement strategy. We first quantitatively demonstrate that our dual-supervision approach produces tokens that are remarkably faithful and spatially localized, outperforming alternative methods in disentanglement. This validated fidelity enables two critical applications: (1) we probe the causal link between internal concepts and predictions via direct intervention, and (2) we probe the model's failure modes by systematically localizing adversarial vulnerabilities to specific layers. Concept-SAE provides a validated blueprint for moving beyond correlational interpretation to the mechanistic, causal probing of model behavior.

Revolution or Hype? Seeking the Limits of Large Models in Hardware Design

Sep 05, 2025

Abstract: Recent breakthroughs in Large Language Models (LLMs) and Large Circuit Models (LCMs) have sparked excitement across the electronic design automation (EDA) community, promising a revolution in circuit design and optimization. Yet, this excitement is met with significant skepticism: Are these AI models a genuine revolution in circuit design, or a temporary wave of inflated expectations? This paper serves as a foundational text for the corresponding ICCAD 2025 panel, bringing together perspectives from leading experts in academia and industry. It critically examines the practical capabilities, fundamental limitations, and future prospects of large AI models in hardware design. The paper synthesizes the core arguments surrounding reliability, scalability, and interpretability, framing the debate on whether these models can meaningfully outperform or complement traditional EDA methods. The result is an authoritative overview offering fresh insights into one of today's most contentious and impactful technology trends.

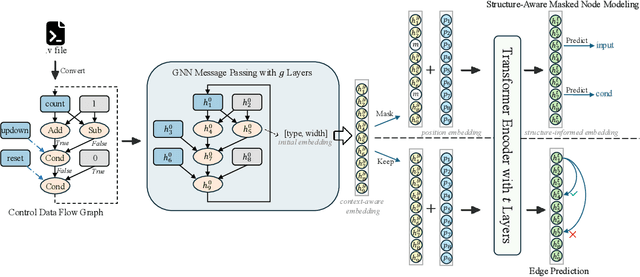

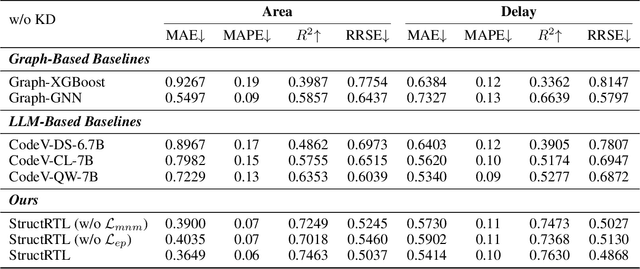

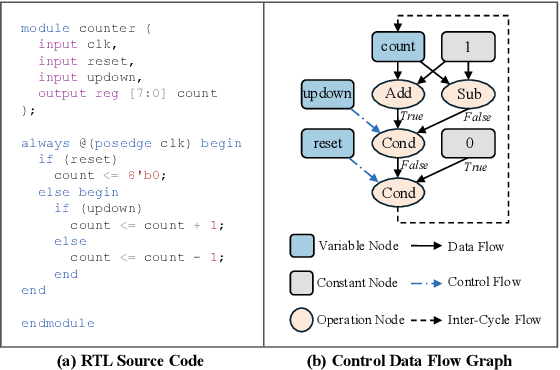

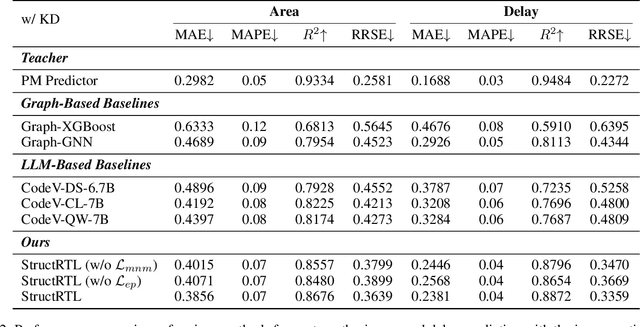

Beyond Tokens: Enhancing RTL Quality Estimation via Structural Graph Learning

Aug 26, 2025

Abstract: Estimating the quality of register transfer level (RTL) designs is crucial in the electronic design automation (EDA) workflow, as it enables instant feedback on key metrics like area and delay without the need for time-consuming logic synthesis. While recent approaches have leveraged large language models (LLMs) to derive embeddings from RTL code and achieved promising results, they overlook the structural semantics essential for accurate quality estimation. In contrast, the control data flow graph (CDFG) view exposes the design's structural characteristics more explicitly, offering richer cues for representation learning. In this work, we introduce a novel structure-aware graph self-supervised learning framework, StructRTL, for improved RTL design quality estimation. By learning structure-informed representations from CDFGs, our method significantly outperforms prior art on various quality estimation tasks. To further boost performance, we incorporate a knowledge distillation strategy that transfers low-level insights from post-mapping netlists into the CDFG predictor. Experiments show that our approach establishes new state-of-the-art results, demonstrating the effectiveness of combining structural learning with cross-stage supervision.

MPCAR: Multi-Perspective Contextual Augmentation for Enhanced Visual Reasoning in Large Vision-Language Models

Aug 17, 2025

Abstract: Despite significant advancements, Large Vision-Language Models (LVLMs) continue to face challenges in complex visual reasoning tasks that demand deep contextual understanding, multi-angle analysis, or meticulous detail recognition. Existing approaches often rely on single-shot image encoding and prompts, limiting their ability to fully capture nuanced visual information. Inspired by the notion that strategically generated "additional" information can serve as beneficial contextual augmentation, we propose Multi-Perspective Contextual Augmentation for Reasoning (MPCAR), a novel inference-time strategy designed to enhance LVLM performance. MPCAR operates in three stages: first, an LVLM generates N diverse and complementary descriptions or preliminary reasoning paths from various angles; second, these descriptions are intelligently integrated with the original question to construct a comprehensive context-augmented prompt; and finally, this enriched prompt guides the ultimate LVLM for deep reasoning and final answer generation. Crucially, MPCAR achieves these enhancements without requiring any fine-tuning of the underlying LVLM's parameters. Extensive experiments on challenging Visual Question Answering (VQA) datasets, including GQA, VQA-CP v2, and ScienceQA (Image-VQA), demonstrate that MPCAR consistently outperforms established baseline methods. Our quantitative results show significant accuracy gains, particularly on tasks requiring robust contextual understanding, while human evaluations confirm improved coherence and completeness of the generated answers. Ablation studies further highlight the importance of diverse prompt templates and the number of generated perspectives. This work underscores the efficacy of leveraging LVLMs' inherent generative capabilities to enrich input contexts, thereby unlocking their latent reasoning potential for complex multimodal tasks.
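The three stages described in the MPCAR abstract can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and is not taken from the paper: the `LVLM` callable interface (any function mapping an image and a prompt to text), the `PERSPECTIVE_TEMPLATES` list, the prompt wording, and the function names. No model parameters are updated anywhere, matching the inference-time framing.

```python
from typing import Callable, List

# Hypothetical interface: any function mapping (image, prompt) to generated text.
LVLM = Callable[[object, str], str]

# Illustrative templates only; the abstract does not list the actual ones.
PERSPECTIVE_TEMPLATES = [
    "Describe the overall scene in this image.",
    "List the objects in this image and their attributes.",
    "Describe the spatial relationships between objects in this image.",
]


def generate_perspectives(lvlm: LVLM, image: object, n: int) -> List[str]:
    """Stage 1: collect n descriptions, cycling through the templates."""
    return [
        lvlm(image, PERSPECTIVE_TEMPLATES[i % len(PERSPECTIVE_TEMPLATES)])
        for i in range(n)
    ]


def build_augmented_prompt(question: str, perspectives: List[str]) -> str:
    """Stage 2: merge the descriptions with the original question."""
    context = "\n".join(
        f"Perspective {i + 1}: {text}" for i, text in enumerate(perspectives)
    )
    return (
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer using the image and the perspectives above."
    )


def mpcar_answer(lvlm: LVLM, image: object, question: str, n: int = 3) -> str:
    """Stage 3: query the same frozen model with the enriched prompt."""
    perspectives = generate_perspectives(lvlm, image, n)
    prompt = build_augmented_prompt(question, perspectives)
    return lvlm(image, prompt)
```

With a real model, `lvlm` would wrap whatever inference call the chosen LVLM exposes; the sketch makes `n + 1` calls per question, which is the inference-time cost such a strategy trades for richer context.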
