Zhengyuan Shi

GNN-based Path-aware multi-view Circuit Learning for Technology Mapping

Jan 14, 2026

Abstract:Traditional technology mapping suffers from systemic inaccuracies in delay estimation due to its reliance on abstract, technology-agnostic delay models that fail to capture the nuanced timing behavior of real post-mapping circuits. To address this fundamental limitation, we introduce GPA (graph neural network (GNN)-based Path-Aware multi-view circuit learning), a novel GNN framework that learns precise, data-driven delay predictions by synergistically fusing three complementary views of circuit structure: And-Inverter Graph (AIG)-based functional encoding, a post-mapping technology view, and a path-aware view that emphasizes critical timing paths. Trained exclusively on real cell delays extracted from critical paths of industrial-grade post-mapping netlists, GPA learns to classify cut delays with unprecedented accuracy, directly informing smarter mapping decisions. Evaluated on the 19 EPFL combinational benchmarks, GPA achieves 19.9%, 2.1% and 4.1% average delay reduction over the conventional heuristic methods (techmap, MCH) and the prior state-of-the-art ML-based approach SLAP, respectively, without compromising area efficiency.

DynamicRTL: RTL Representation Learning for Dynamic Circuit Behavior

Nov 12, 2025

Abstract:There is a growing body of work on using Graph Neural Networks (GNNs) to learn representations of circuits, focusing primarily on their static characteristics. However, these models fail to capture circuit runtime behavior, which is crucial for tasks like circuit verification and optimization. To address this limitation, we introduce DR-GNN (DynamicRTL-GNN), a novel approach that learns RTL circuit representations by incorporating both static structures and multi-cycle execution behaviors. DR-GNN leverages an operator-level Control Data Flow Graph (CDFG) to represent Register Transfer Level (RTL) circuits, enabling the model to capture dynamic dependencies and runtime execution. To train and evaluate DR-GNN, we build the first comprehensive dynamic circuit dataset, comprising over 6,300 Verilog designs and 63,000 simulation traces. Our results demonstrate that DR-GNN outperforms existing models in branch hit prediction and toggle rate prediction. Furthermore, its learned representations transfer effectively to related dynamic circuit tasks, achieving strong performance in power estimation and assertion prediction.
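To make the toggle rate target concrete, here is a minimal sketch that computes per-signal toggle rates from a simulation trace. It assumes single-bit signals and defines toggle rate as the fraction of consecutive cycle pairs on which a signal changes value; that definition, the `toggle_rates` helper, and the sample trace are illustrative assumptions, not taken from the DR-GNN dataset or code.

```python
from typing import Dict, List


def toggle_rates(trace: Dict[str, List[int]]) -> Dict[str, float]:
    """Return, per signal, the fraction of cycle-to-cycle steps where the value flips.

    `trace` maps a (hypothetical) signal name to its value in each simulated cycle.
    """
    rates = {}
    for name, values in trace.items():
        if len(values) < 2:
            # A single sample has no transitions to count.
            rates[name] = 0.0
            continue
        flips = sum(prev != cur for prev, cur in zip(values, values[1:]))
        rates[name] = flips / (len(values) - 1)
    return rates


# Hypothetical five-cycle trace for two one-bit signals.
trace = {"clk_en": [0, 1, 0, 1, 0], "valid": [0, 0, 1, 1, 1]}
rates = toggle_rates(trace)
print(rates)
```

In this sketch `clk_en` flips on every step and `valid` flips once in four steps, which is the kind of per-signal label a toggle rate predictor would be trained to regress.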

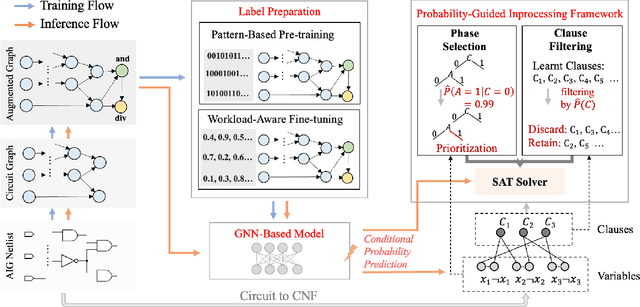

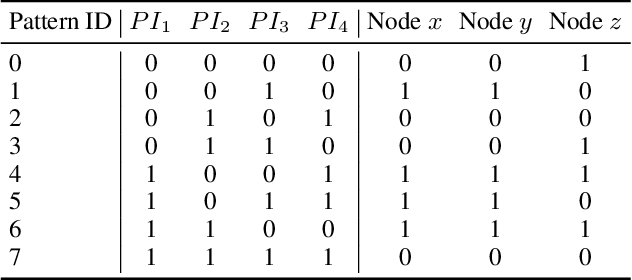

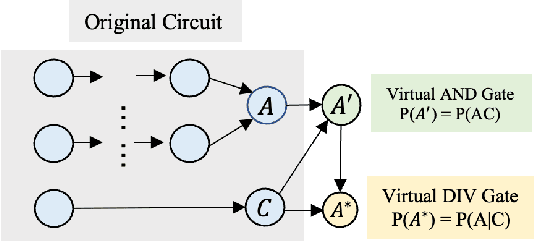

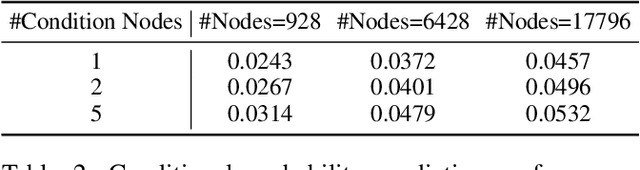

Circuit-Aware SAT Solving: Guiding CDCL via Conditional Probabilities

Aug 06, 2025

Abstract:Circuit Satisfiability (CSAT) plays a pivotal role in Electronic Design Automation. The standard workflow for solving CSAT problems converts circuits into Conjunctive Normal Form (CNF) and employs generic SAT solvers powered by Conflict-Driven Clause Learning (CDCL). However, this process inherently discards rich structural and functional information, leading to suboptimal solver performance. To address this limitation, we introduce CASCAD, a novel circuit-aware SAT solving framework that directly leverages circuit-level conditional probabilities computed via Graph Neural Networks (GNNs). By explicitly modeling gate-level conditional probabilities, CASCAD dynamically guides two critical CDCL heuristics -- variable phase selection and clause management -- to significantly enhance solver efficiency. Extensive evaluations on challenging real-world Logical Equivalence Checking (LEC) benchmarks demonstrate that CASCAD reduces solving times by up to 10x compared to state-of-the-art CNF-based approaches, achieving an additional 23.5% runtime reduction via our probability-guided clause filtering strategy. Our results underscore the importance of preserving circuit-level structural insights within SAT solvers, providing a robust foundation for future improvements in SAT-solving efficiency and EDA tool design.
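As a rough illustration of what a gate-level conditional probability is and how it could inform phase selection, the sketch below computes P(node = 1 | output = 1) for a toy circuit by exhaustive enumeration. CASCAD estimates these probabilities with a GNN; the enumeration here is only a stand-in, and the toy circuit, the `conditional_probs` helper, and the 0.5 phase threshold are all hypothetical.

```python
from itertools import product


def simulate(a: int, b: int, c: int) -> dict:
    """Evaluate a hypothetical three-input circuit and return every node value."""
    g1 = a & b
    g2 = b | c
    out = g1 ^ g2
    return {"a": a, "b": b, "c": c, "g1": g1, "g2": g2, "out": out}


def conditional_probs(condition_node: str = "out", condition_value: int = 1) -> dict:
    """P(node = 1 | condition_node = condition_value) over uniform random inputs."""
    counts, total = {}, 0
    for bits in product((0, 1), repeat=3):
        values = simulate(*bits)
        if values[condition_node] != condition_value:
            continue  # keep only input patterns consistent with the condition
        total += 1
        for name, value in values.items():
            counts[name] = counts.get(name, 0) + value
    return {name: count / total for name, count in counts.items()}


probs = conditional_probs()
# Assumed heuristic: prefer the phase that is more likely under the condition.
phases = {name: int(probs[name] >= 0.5) for name in ("a", "b", "c")}
print(probs, phases)
```

Under the condition that the output is 1, this toy circuit has `g2` always 1 and `g1` always 0, so a solver guided by these numbers would try those phases first instead of a default polarity.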

AC-Refiner: Efficient Arithmetic Circuit Optimization Using Conditional Diffusion Models

Jul 03, 2025

Abstract:Arithmetic circuits, such as adders and multipliers, are fundamental components of digital systems, directly impacting the performance, power efficiency, and area footprint. However, optimizing these circuits remains challenging due to the vast design space and complex physical constraints. While recent deep learning-based approaches have shown promise, they struggle to consistently explore high-potential design variants, limiting their optimization efficiency. To address this challenge, we propose AC-Refiner, a novel arithmetic circuit optimization framework leveraging conditional diffusion models. Our key insight is to reframe arithmetic circuit synthesis as a conditional image generation task. By carefully conditioning the denoising diffusion process on target quality-of-results (QoRs), AC-Refiner consistently produces high-quality circuit designs. Furthermore, the explored designs are used to fine-tune the diffusion model, which focuses the exploration near the Pareto frontier. Experimental results demonstrate that AC-Refiner generates designs with superior Pareto optimality, outperforming state-of-the-art baselines. The performance gain is further validated by integrating AC-Refiner into practical applications.

Functional Matching of Logic Subgraphs: Beyond Structural Isomorphism

May 28, 2025

Abstract:Subgraph matching in logic circuits is foundational for numerous Electronic Design Automation (EDA) applications, including datapath optimization, arithmetic verification, and hardware trojan detection. However, existing techniques rely primarily on structural graph isomorphism and thus fail to identify function-related subgraphs when synthesis transformations substantially alter circuit topology. To overcome this critical limitation, we introduce the concept of functional subgraph matching, a novel approach that identifies whether a given logic function is implicitly present within a larger circuit, irrespective of structural variations induced by synthesis or technology mapping. Specifically, we propose a two-stage multi-modal framework: (1) learning robust functional embeddings across AIG and post-mapping netlists for functional subgraph detection, and (2) identifying fuzzy boundaries using a graph segmentation approach. Evaluations on standard benchmarks (ITC99, OpenABCD, ForgeEDA) demonstrate significant performance improvements over existing structural methods, with an average accuracy of 93.8% in functional subgraph detection and a Dice score of 91.3% in fuzzy boundary identification.
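For readers unfamiliar with the Dice score used for boundary identification, the following sketch applies the standard set-based definition, 2|A ∩ B| / (|A| + |B|), to a predicted and a reference set of gate IDs. Treating the boundary as a set of node IDs is an assumption made for illustration; the paper's exact evaluation protocol may differ, and the IDs are made up.

```python
from typing import Set


def dice_score(predicted: Set[int], actual: Set[int]) -> float:
    """Standard Dice coefficient between two sets of node IDs."""
    if not predicted and not actual:
        # Two empty sets are treated as a perfect match by convention.
        return 1.0
    overlap = len(predicted & actual)
    return 2 * overlap / (len(predicted) + len(actual))


# Hypothetical gate IDs: the prediction recovers two of the four true gates
# and includes two gates that lie outside the true subgraph.
predicted_gates = {1, 2, 3, 4}
actual_gates = {3, 4, 5, 6}
score = dice_score(predicted_gates, actual_gates)
print(score)
```

The score rewards overlap and penalizes both missed and spurious gates, which suits a boundary that is fuzzy rather than exact.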

DeepCircuitX: A Comprehensive Repository-Level Dataset for RTL Code Understanding, Generation, and PPA Analysis

Feb 25, 2025

Abstract:This paper introduces DeepCircuitX, a comprehensive repository-level dataset designed to advance RTL (Register Transfer Level) code understanding, generation, and power-performance-area (PPA) analysis. Unlike existing datasets that are limited to either file-level RTL code or physical layout data, DeepCircuitX provides a holistic, multilevel resource that spans repository, file, module, and block-level RTL code. This structure enables more nuanced training and evaluation of large language models (LLMs) for RTL-specific tasks. DeepCircuitX is enriched with Chain of Thought (CoT) annotations, offering detailed descriptions of functionality and structure at multiple levels. These annotations enhance its utility for a wide range of tasks, including RTL code understanding, generation, and completion. Additionally, the dataset includes synthesized netlists and PPA metrics, facilitating early-stage design exploration and enabling accurate PPA prediction directly from RTL code. We demonstrate the dataset's effectiveness on various LLMs fine-tuned with our dataset and confirm the quality with human evaluations. Our results highlight DeepCircuitX as a critical resource for advancing RTL-focused machine learning applications in hardware design automation. Our data is available at https://zeju.gitbook.io/lcm-team.

DeepSeq2: Enhanced Sequential Circuit Learning with Disentangled Representations

Nov 01, 2024

Abstract:Circuit representation learning is increasingly pivotal in Electronic Design Automation (EDA), serving various downstream tasks with enhanced model efficiency and accuracy. One notable work, DeepSeq, has pioneered sequential circuit learning by encoding temporal correlations. However, it suffers from significant limitations including prolonged execution times and architectural inefficiencies. To address these issues, we introduce DeepSeq2, a novel framework that enhances the learning of sequential circuits by mapping them into three distinct embedding spaces (structure, function, and sequential behavior), allowing for a more nuanced representation that captures the inherent complexities of circuit dynamics. By employing an efficient Directed Acyclic Graph Neural Network (DAG-GNN) that circumvents the recursive propagation used in DeepSeq, DeepSeq2 significantly reduces execution times and improves model scalability. Moreover, DeepSeq2 incorporates a unique supervision mechanism that captures transitioning behaviors within circuits more effectively. DeepSeq2 sets a new benchmark in sequential circuit representation learning, outperforming prior works in power estimation and reliability analysis.

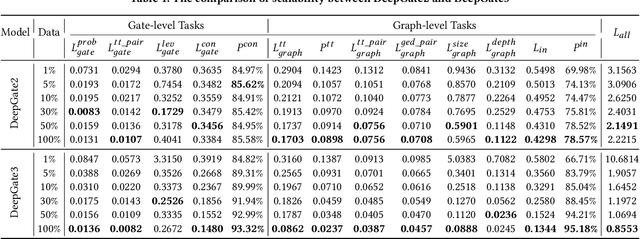

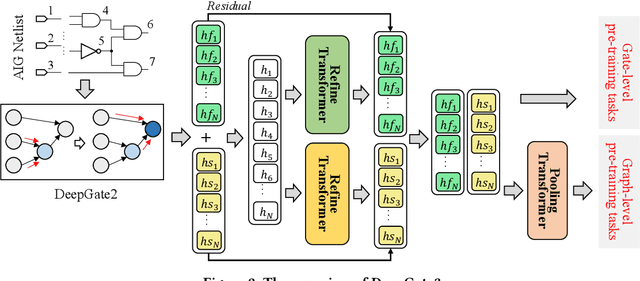

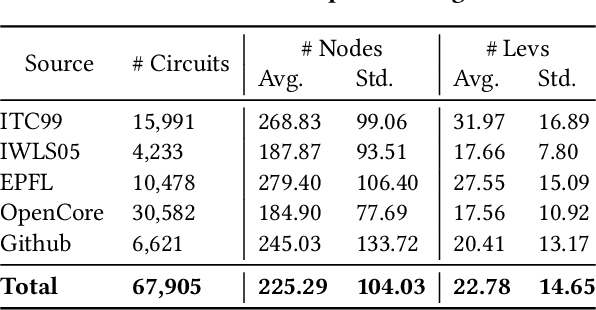

DeepGate3: Towards Scalable Circuit Representation Learning

Jul 15, 2024

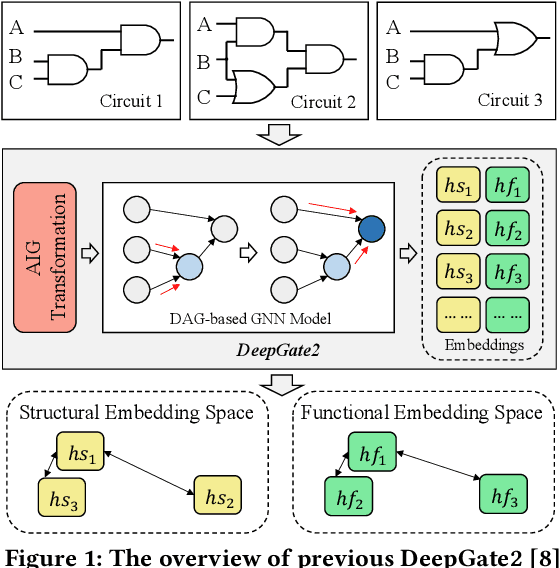

Abstract:Circuit representation learning has shown promising results in advancing the field of Electronic Design Automation (EDA). Existing models, such as the DeepGate family, primarily utilize Graph Neural Networks (GNNs) to encode circuit netlists into gate-level embeddings. However, the scalability of GNN-based models is fundamentally constrained by architectural limitations, impacting their ability to generalize across diverse and complex circuit designs. To address these challenges, we introduce DeepGate3, an enhanced architecture that integrates Transformer modules following the initial GNN processing. This novel architecture not only retains the robust gate-level representation capabilities of its predecessor, DeepGate2, but also enhances them with the ability to model subcircuits through a novel pooling transformer mechanism. DeepGate3 is further refined with multiple innovative supervision tasks, significantly enhancing its learning process and enabling superior representation of both gate-level and subcircuit structures. Our experiments demonstrate marked improvements in scalability and generalizability over traditional GNN-based approaches, establishing a significant step forward in circuit representation learning technology.

DeepGate2: Functionality-Aware Circuit Representation Learning

May 25, 2023

Abstract:Circuit representation learning aims to obtain neural representations of circuit elements and has emerged as a promising research direction that can be applied to various EDA and logic reasoning tasks. Existing solutions, such as DeepGate, have the potential to embed both circuit structural information and functional behavior. However, their capabilities are limited due to weak supervision or flawed model design, resulting in unsatisfactory performance in downstream tasks. In this paper, we introduce DeepGate2, a novel functionality-aware learning framework that significantly improves upon the original DeepGate solution in terms of both learning effectiveness and efficiency. Our approach involves using pairwise truth table differences between sampled logic gates as training supervision, along with a well-designed and scalable loss function that explicitly considers circuit functionality. Additionally, we consider inherent circuit characteristics and design an efficient one-round graph neural network (GNN), resulting in an order of magnitude faster learning speed than the original DeepGate solution. Experimental results demonstrate significant improvements in two practical downstream tasks: logic synthesis and Boolean satisfiability solving. The code is available at https://github.com/cure-lab/DeepGate2
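The pairwise truth table difference used as supervision can be illustrated with a small sketch: simulate each gate over all input patterns, then take the normalized Hamming distance between the resulting truth tables. The four-gate circuit, the exhaustive enumeration, and the normalization by table length are assumptions chosen for clarity; they are not taken from the DeepGate2 code, which samples gates from much larger circuits.

```python
from itertools import combinations, product
from typing import Dict, Tuple


def truth_tables() -> Dict[str, Tuple[int, ...]]:
    """Truth table of each gate in a hypothetical two-input circuit."""
    tables = {"and": [], "or": [], "xor": [], "nand": []}
    for a, b in product((0, 1), repeat=2):  # input order: 00, 01, 10, 11
        tables["and"].append(a & b)
        tables["or"].append(a | b)
        tables["xor"].append(a ^ b)
        tables["nand"].append(1 - (a & b))
    return {name: tuple(bits) for name, bits in tables.items()}


def pairwise_difference(tt_a: Tuple[int, ...], tt_b: Tuple[int, ...]) -> float:
    """Normalized Hamming distance between two equal-length truth tables."""
    return sum(x != y for x, y in zip(tt_a, tt_b)) / len(tt_a)


tables = truth_tables()
differences = {
    (g1, g2): pairwise_difference(tables[g1], tables[g2])
    for g1, g2 in combinations(tables, 2)
}
print(differences)
```

A distance of 0 means two gates compute the same function and 1 means they are complements, so an embedding trained to reproduce these distances places functionally similar gates close together regardless of structure.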

Addressing Variable Dependency in GNN-based SAT Solving

Apr 18, 2023

Abstract:The Boolean satisfiability problem (SAT) is fundamental to many applications. Existing works have used graph neural networks (GNNs) for (approximate) SAT solving. Typical GNN-based end-to-end SAT solvers predict SAT solutions concurrently. We show that for a group of symmetric SAT problems, the concurrent prediction is guaranteed to produce a wrong answer because it neglects the dependency among Boolean variables in SAT problems. We propose AsymSAT, a GNN-based architecture which integrates recurrent neural networks to generate dependent predictions for variable assignments. The experimental results show that dependent variable prediction extends the solving capability of the GNN-based method, as it improves the number of solved SAT instances on large test sets.
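A small example makes the symmetry argument tangible. In the formula (x1 OR x2) AND (NOT x1 OR NOT x2), swapping x1 and x2 leaves the formula unchanged, so a model that scores both variables from identical inputs at the same time must give them the same value, and neither all-true nor all-false satisfies it. The sketch below checks this and contrasts it with a greedy one-variable-at-a-time assignment; the greedy procedure is only a hypothetical stand-in for dependent prediction and is not the recurrent architecture of AsymSAT.

```python
from typing import Dict, List, Optional, Tuple

# DIMACS-style literals: positive means the variable, negative its negation.
CLAUSES: List[Tuple[int, ...]] = [(1, 2), (-1, -2)]


def satisfies(assignment: Dict[int, bool], clauses=CLAUSES) -> bool:
    """True if every clause has at least one literal made true by a full assignment."""
    return all(any(assignment[abs(l)] == (l > 0) for l in clause) for clause in clauses)


def is_falsified(clause: Tuple[int, ...], assignment: Dict[int, bool]) -> bool:
    """True if every literal in the clause is already assigned and false."""
    return all(abs(l) in assignment and assignment[abs(l)] != (l > 0) for l in clause)


def sequential_assign(clauses, num_vars: int) -> Optional[Dict[int, bool]]:
    """Greedy dependent assignment (no backtracking): each choice sees earlier ones."""
    assignment: Dict[int, bool] = {}
    for var in range(1, num_vars + 1):
        for value in (True, False):
            trial = {**assignment, var: value}
            if not any(is_falsified(c, trial) for c in clauses):
                assignment = trial
                break
        else:
            return None  # both values falsify some clause
    return assignment


# Concurrent prediction on symmetric variables forces x1 == x2.
concurrent_ok = [satisfies({1: v, 2: v}) for v in (False, True)]
dependent = sequential_assign(CLAUSES, num_vars=2)
print(concurrent_ok, dependent)
```

Because the second choice is conditioned on the first, the dependent procedure breaks the symmetry and lands on x1 = true, x2 = false, while both symmetric assignments fail.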
