Qi Zhao

SwiftRepertoire: Few-Shot Immune-Signature Synthesis via Dynamic Kernel Codes

Feb 01, 2026

Abstract:Repertoire-level analysis of T cell receptors offers a biologically grounded signal for disease detection and immune monitoring, yet practical deployment is impeded by label sparsity, cohort heterogeneity, and the computational burden of adapting large encoders to new tasks. We introduce a framework that synthesizes compact task-specific parameterizations from a learned dictionary of prototypes conditioned on lightweight task descriptors derived from repertoire probes and pooled embedding statistics. This synthesis produces small adapter modules applied to a frozen pretrained backbone, enabling immediate adaptation to novel tasks with only a handful of support examples and without full model fine-tuning. The architecture preserves interpretability through motif-aware probes and a calibrated motif discovery pipeline that links predictive decisions to sequence-level signals. Together, these components yield a practical, sample-efficient, and interpretable pathway for translating repertoire-informed models into diverse clinical and research settings where labeled data are scarce and computational resources are constrained.

SentGraph: Hierarchical Sentence Graph for Multi-hop Retrieval-Augmented Question Answering

Jan 06, 2026

Abstract:Traditional Retrieval-Augmented Generation (RAG) effectively supports single-hop question answering with large language models but faces significant limitations in multi-hop question answering tasks, which require combining evidence from multiple documents. Existing chunk-based retrieval often provides irrelevant and logically incoherent context, leading to incomplete evidence chains and incorrect reasoning during answer generation. To address these challenges, we propose SentGraph, a sentence-level graph-based RAG framework that explicitly models fine-grained logical relationships between sentences for multi-hop question answering. Specifically, we construct a hierarchical sentence graph offline by first adapting Rhetorical Structure Theory to distinguish nucleus and satellite sentences, and then organizing them into topic-level subgraphs with cross-document entity bridges. During online retrieval, SentGraph performs graph-guided evidence selection and path expansion to retrieve fine-grained sentence-level evidence. Extensive experiments on four multi-hop question answering benchmarks demonstrate the effectiveness of SentGraph, validating the importance of explicitly modeling sentence-level logical dependencies for multi-hop reasoning.
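
To make the idea of path expansion over a sentence graph concrete, here is a minimal sketch. It is not the SentGraph implementation: the adjacency-dict graph, the function name `expand_evidence`, the `max_hops` parameter, and the plain breadth-first rule are all assumptions chosen for illustration, since the abstract does not specify how selection or expansion is scored.

```python
from collections import deque


def expand_evidence(graph, seeds, max_hops=2):
    """Breadth-first expansion from seed sentences along graph edges.

    graph: dict mapping a sentence id to a list of neighbouring sentence ids
           (hypothetical structure; edges could be entity bridges or
           nucleus/satellite links).
    seeds: sentence ids chosen by some initial retriever (assumed step).
    Returns the sentence ids in the order they were reached.
    """
    seen = set(seeds)
    order = list(seeds)
    queue = deque((s, 0) for s in seeds)
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop budget
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append((nxt, hops + 1))
    return order


# Toy graph: s1 links to s3 in another document, which links onward to s4.
toy_graph = {"s1": ["s2", "s3"], "s3": ["s4"], "s4": ["s5"]}
evidence = expand_evidence(toy_graph, ["s1"], max_hops=2)
```

With a hop budget of two, the toy call reaches `s4` through `s3` but stops before `s5`, which is the kind of bounded evidence chain a multi-hop question would need.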

Benchmarking Continuous Dynamic Multi-Objective Optimization: Survey and Generalized Test Suite

Jan 04, 2026

Abstract:Dynamic multi-objective optimization (DMOO) has recently attracted increasing interest from both academic researchers and engineering practitioners, as numerous real-world applications that evolve over time can be naturally formulated as dynamic multi-objective optimization problems (DMOPs). This growing trend necessitates advanced benchmarks for the rigorous evaluation of optimization algorithms under realistic conditions. This paper introduces a comprehensive and principled framework for constructing highly realistic and challenging DMOO benchmarks. The proposed framework features several novel components: a generalized formulation that allows the Pareto-optimal Set (PS) to change on hypersurfaces, a mechanism for creating controlled variable contribution imbalances to generate heterogeneous landscapes, and dynamic rotation matrices for inducing time-varying variable interactions and non-separability. Furthermore, we incorporate a temporal perturbation mechanism to simulate irregular environmental changes and propose a generalized time-linkage mechanism that systematically embeds historical solution quality into future problems, thereby capturing critical real-world phenomena such as error accumulation and time-deception. Extensive experimental results validate the effectiveness of the proposed framework, demonstrating its superiority over conventional benchmarks in terms of realism, complexity, and its capability for discriminating state-of-the-art algorithmic performance. This work establishes a new standard for dynamic multi-objective optimization benchmarking, providing a powerful tool for the development and evaluation of next-generation algorithms capable of addressing the complexities of real-world dynamic systems.
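
The role of a dynamic rotation matrix can be illustrated with a two-variable toy problem. The sketch below is an assumption-laden illustration, not the paper's test suite: the angular speed `omega`, the base function `separable_base`, and the function names are hypothetical. It only shows how rotating the decision variables by a time-dependent angle turns a separable function into one whose variable interactions change over time.

```python
import math


def rotate(x, t, omega=0.5):
    """Rotate a 2-D point by the time-dependent angle theta = omega * t."""
    theta = omega * t
    c, s = math.cos(theta), math.sin(theta)
    return [c * x[0] - s * x[1], s * x[0] + c * x[1]]


def separable_base(z):
    """A separable quadratic with its minimum at z = (1, 0)."""
    return (z[0] - 1.0) ** 2 + 4.0 * z[1] ** 2


def dynamic_objective(x, t, omega=0.5):
    """Evaluate the base function in rotated coordinates.

    For angles that are not multiples of pi/2 the rotated quadratic
    contains an x[0]*x[1] cross term, so the variables interact.
    """
    return separable_base(rotate(x, t, omega))


# At t = 0 the rotation is the identity, so (1, 0) is optimal.
value_at_start = dynamic_objective([1.0, 0.0], t=0.0)
# At t = pi (theta = pi/2) the same point is mapped to about (0, 1).
value_later = dynamic_objective([1.0, 0.0], t=math.pi)
```

The optimum in the original coordinates moves as `t` advances, so a solver that tracked `(1, 0)` at the start has to re-adapt after each change.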

RoleRMBench & RoleRM: Towards Reward Modeling for Profile-Based Role Play in Dialogue Systems

Dec 11, 2025

Abstract:Reward modeling has become a cornerstone of aligning large language models (LLMs) with human preferences. Yet, when extended to subjective and open-ended domains such as role play, existing reward models exhibit severe degradation, struggling to capture nuanced and persona-grounded human judgments. To address this gap, we introduce RoleRMBench, the first systematic benchmark for reward modeling in role-playing dialogue, covering seven fine-grained capabilities from narrative management to role consistency and engagement. Evaluation on RoleRMBench reveals large and consistent gaps between general-purpose reward models and human judgment, particularly in narrative and stylistic dimensions. We further propose RoleRM, a reward model trained with Continuous Implicit Preferences (CIP), which reformulates subjective evaluation as continuous consistent pairwise supervision under multiple structuring strategies. Comprehensive experiments show that RoleRM surpasses strong open- and closed-source reward models by over 24% on average, demonstrating substantial gains in narrative coherence and stylistic fidelity. Our findings highlight the importance of continuous preference representation and annotation consistency, establishing a foundation for subjective alignment in human-centered dialogue systems.
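
For readers unfamiliar with pairwise supervision, the sketch below shows the standard Bradley-Terry style loss commonly used to train reward models from preference pairs. It is a generic illustration and not the CIP objective, whose details the abstract does not give; the function name `pairwise_loss` and the scalar-reward interface are assumptions.

```python
import math


def pairwise_loss(r_chosen, r_rejected):
    """Negative log-likelihood that the chosen response is preferred.

    Equals -log(sigmoid(r_chosen - r_rejected)), written in a
    numerically stable softplus form.
    """
    margin = r_chosen - r_rejected
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss shrinks as the reward model ranks the preferred reply higher.
loss_correct_order = pairwise_loss(2.0, 0.0)
loss_wrong_order = pairwise_loss(0.0, 2.0)
loss_tie = pairwise_loss(1.0, 1.0)
```

A tie gives a loss of log 2, a correctly ordered pair gives less, and a misordered pair gives more, which is what drives the reward model toward the annotated preference.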

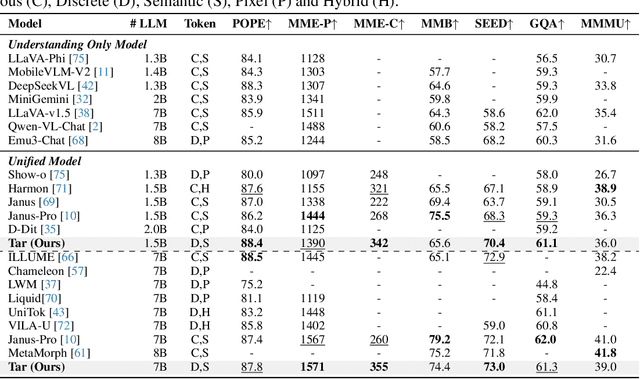

Growing Visual Generative Capacity for Pre-Trained MLLMs

Oct 02, 2025

Abstract:Multimodal large language models (MLLMs) extend the success of language models to visual understanding, and recent efforts have sought to build unified MLLMs that support both understanding and generation. However, constructing such models remains challenging: hybrid approaches combine continuous embeddings with diffusion or flow-based objectives, producing high-quality images but breaking the autoregressive paradigm, while pure autoregressive approaches unify text and image prediction over discrete visual tokens but often face trade-offs between semantic alignment and pixel-level fidelity. In this work, we present Bridge, a pure autoregressive unified MLLM that augments pre-trained visual understanding models with generative ability through a Mixture-of-Transformers architecture, enabling both image understanding and generation within a single next-token prediction framework. To further improve visual generation fidelity, we propose a semantic-to-pixel discrete representation that integrates compact semantic tokens with fine-grained pixel tokens, achieving strong language alignment and precise description of visual details with only a 7.9% increase in sequence length. Extensive experiments across diverse multimodal benchmarks demonstrate that Bridge achieves competitive or superior results in both understanding and generation benchmarks, while requiring less training data and reduced training time compared to prior unified MLLMs.

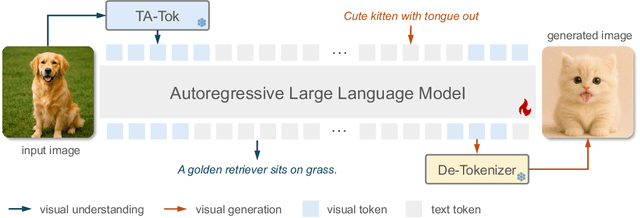

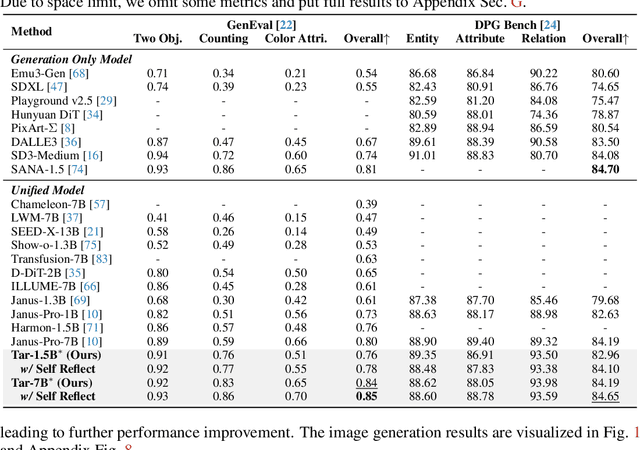

Vision as a Dialect: Unifying Visual Understanding and Generation via Text-Aligned Representations

Jun 23, 2025

Abstract:This paper presents a multimodal framework that attempts to unify visual understanding and generation within a shared discrete semantic representation. At its core is the Text-Aligned Tokenizer (TA-Tok), which converts images into discrete tokens using a text-aligned codebook projected from a large language model's (LLM) vocabulary. By integrating vision and text into a unified space with an expanded vocabulary, our multimodal LLM, Tar, enables cross-modal input and output through a shared interface, without the need for modality-specific designs. Additionally, we propose scale-adaptive encoding and decoding to balance efficiency and visual detail, along with a generative de-tokenizer to produce high-fidelity visual outputs. To address diverse decoding needs, we utilize two complementary de-tokenizers: a fast autoregressive model and a diffusion-based model. To enhance modality fusion, we investigate advanced pre-training tasks, demonstrating improvements in both visual understanding and generation. Experiments across benchmarks show that Tar matches or surpasses existing multimodal LLM methods, achieving faster convergence and greater training efficiency. Code, models, and data are available at https://tar.csuhan.com

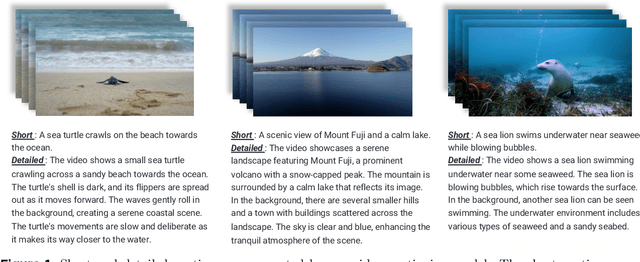

VINCIE: Unlocking In-context Image Editing from Video

Jun 12, 2025

Abstract:In-context image editing aims to modify images based on a contextual sequence comprising text and previously generated images. Existing methods typically depend on task-specific pipelines and expert models (e.g., segmentation and inpainting) to curate training data. In this work, we explore whether an in-context image editing model can be learned directly from videos. We introduce a scalable approach to annotate videos as interleaved multimodal sequences. To effectively learn from this data, we design a block-causal diffusion transformer trained on three proxy tasks: next-image prediction, current segmentation prediction, and next-segmentation prediction. Additionally, we propose a novel multi-turn image editing benchmark to advance research in this area. Extensive experiments demonstrate that our model exhibits strong in-context image editing capabilities and achieves state-of-the-art results on two multi-turn image editing benchmarks. Despite being trained exclusively on videos, our model also shows promising abilities in multi-concept composition, story generation, and chain-of-editing applications.

Mastering Massive Multi-Task Reinforcement Learning via Mixture-of-Expert Decision Transformer

May 30, 2025

Abstract:Although recent advances in offline multi-task reinforcement learning (MTRL) have harnessed the powerful capabilities of the Transformer architecture, most approaches focus on a limited number of tasks, and scaling to a massive number of tasks remains a formidable challenge. In this paper, we first revisit the impact of the number of tasks on current MTRL methods, and further reveal that naively expanding the parameters is insufficient to counteract the performance degradation as the number of tasks grows. Building upon these insights, we propose M3DT, a novel mixture-of-experts (MoE) framework that tackles task scalability by further unlocking the model's parameter scalability. Specifically, we enhance both the architecture and the optimization of the agent: we strengthen the Decision Transformer (DT) backbone with MoE to reduce the task load on parameter subsets, and introduce a three-stage training mechanism to facilitate efficient training with optimal performance. Experimental results show that, by increasing the number of experts, M3DT not only consistently improves its performance as the model expands on a fixed number of tasks, but also exhibits remarkable task scalability, successfully extending to 160 tasks with superior performance.
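
As a rough illustration of how a mixture-of-experts layer spreads load across parameter subsets, the sketch below routes an input to the top-k experts by gate score and mixes their outputs. This is a generic MoE pattern, not M3DT's design: the top-k rule, the names `top_k_route` and `moe_forward`, and the scalar toy experts are assumptions, since the abstract does not describe the routing mechanism or the three training stages.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and renormalise their weights."""
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the selected experts' outputs; others are skipped."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Three toy experts; the gate strongly disfavours the third one.
toy_experts = [lambda x: x, lambda x: 2.0 * x, lambda x: -x]
routes = top_k_route([0.0, 0.0, -5.0], k=2)
output = moe_forward(2.0, toy_experts, [0.0, 0.0, -5.0], k=2)
```

Only the selected experts run for a given input, so adding experts grows total parameters without growing per-input compute at the same rate.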

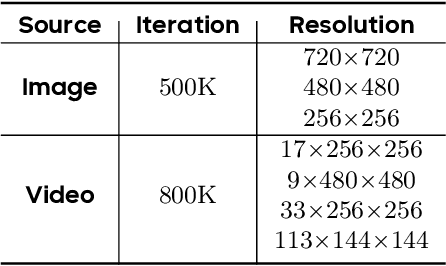

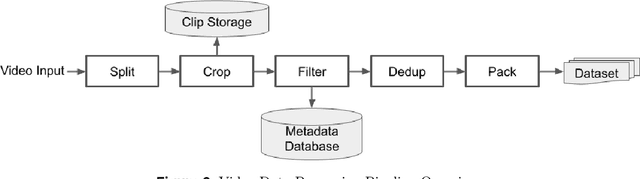

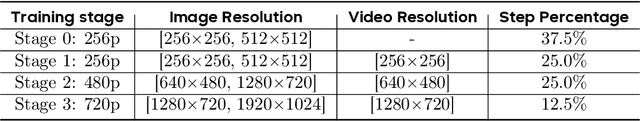

Seaweed-7B: Cost-Effective Training of Video Generation Foundation Model

Apr 11, 2025

Abstract:This technical report presents a cost-efficient strategy for training a video generation foundation model. We present a mid-sized research model with approximately 7 billion parameters (7B) called Seaweed-7B, trained from scratch using 665,000 H100 GPU hours. Despite being trained with moderate computational resources, Seaweed-7B demonstrates highly competitive performance compared to contemporary video generation models of much larger size. Design choices are especially crucial in a resource-constrained setting. This technical report highlights the key design decisions that enhance the performance of the medium-sized diffusion model. Empirically, we make two observations: (1) Seaweed-7B achieves performance comparable to, or even surpassing, that of larger models trained on substantially greater GPU resources, and (2) our model, which exhibits strong generalization ability, can be effectively adapted across a wide range of downstream applications by either lightweight fine-tuning or continued training. See the project page at https://seaweed.video/

An Integrated AI-Enabled System Using One Class Twin Cross Learning (OCT-X) for Early Gastric Cancer Detection

Mar 31, 2025

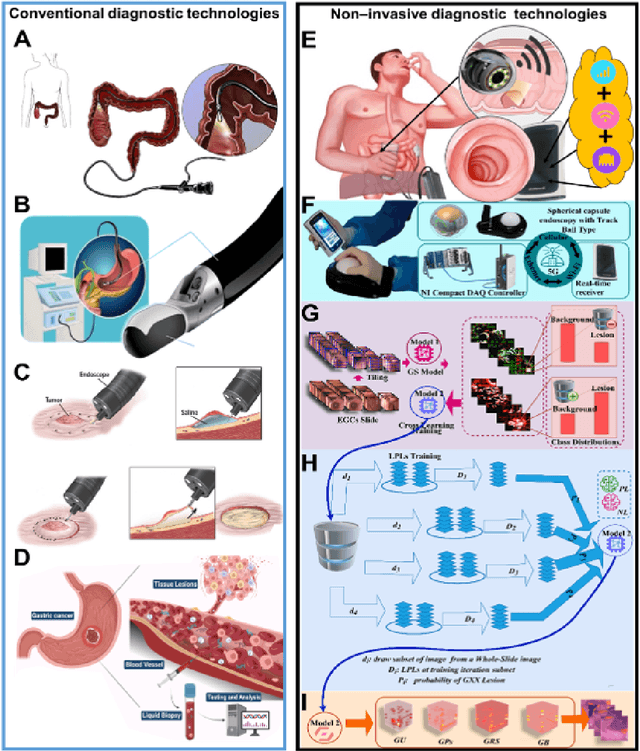

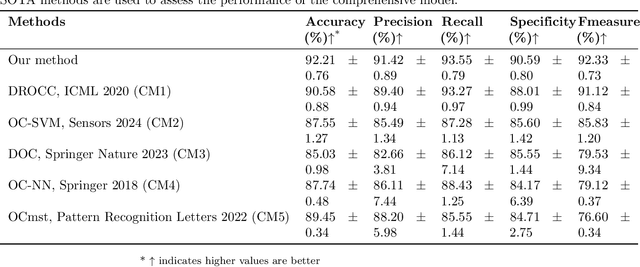

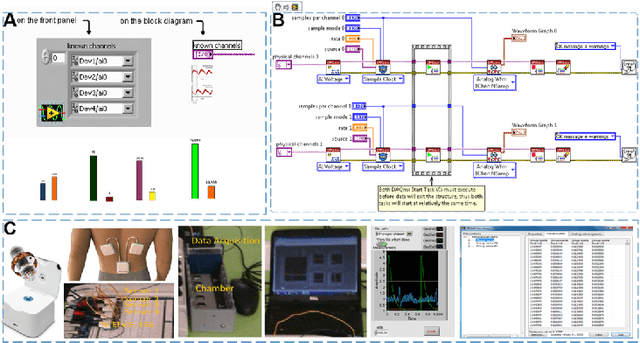

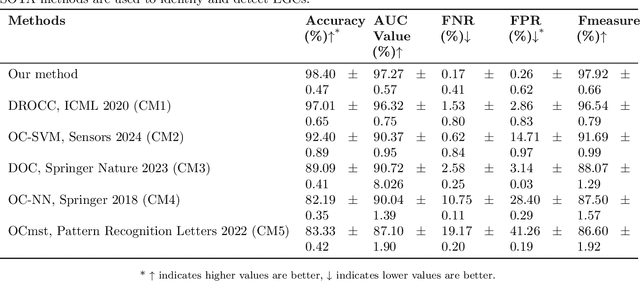

Abstract:Early detection of gastric cancer, a leading cause of cancer-related mortality worldwide, remains hampered by the limitations of current diagnostic technologies, leading to high rates of misdiagnosis and missed diagnoses. To address these challenges, we propose an integrated system that synergizes advanced hardware and software technologies to balance speed and accuracy. Our study introduces the One Class Twin Cross Learning (OCT-X) algorithm. Leveraging a novel fast double-threshold grid search strategy (FDT-GS) and a patch-based deep fully convolutional network, OCT-X maximizes diagnostic accuracy through real-time data processing and seamless lesion surveillance. The hardware component includes an all-in-one point-of-care testing (POCT) device with high-resolution imaging sensors, real-time data processing, and wireless connectivity, facilitated by the NI CompactDAQ and LabVIEW software. Our integrated system achieved an unprecedented diagnostic accuracy of 99.70%, significantly outperforming existing models by up to 4.47%, and demonstrated a 10% improvement in multirate adaptability. These findings underscore the potential of OCT-X as well as the integrated system in clinical diagnostics, offering a path toward more accurate, efficient, and less invasive early gastric cancer detection. Future research will explore broader applications, further advancing oncological diagnostics. Code is available at https://github.com/liu37972/Multirate-Location-on-OCT-X-Learning.git.
