Sen Wang

XIT: Exploration and Exploitation Informed Trees for Active Gas Distribution Mapping in Unknown Environments

Feb 14, 2026

Abstract: Mobile robotic gas distribution mapping (GDM) provides critical situational awareness during emergency responses to hazardous gas releases. However, most systems still rely on teleoperation, limiting scalability and response speed. Autonomous active GDM is challenging in unknown and cluttered environments, because the robot must simultaneously explore traversable space, map the environment, and infer the gas distribution belief from sparse chemical measurements. We address this by formulating active GDM as a next-best-trajectory informative path planning (IPP) problem and propose XIT (Exploration-Exploitation Informed Trees), a sampling-based planner that balances exploration and exploitation by generating concurrent trajectories toward exploration-rich goals while collecting informative gas measurements en route. XIT draws batches of samples from an Upper Confidence Bound (UCB) information field derived from the current gas posterior and expands trees using a cost that trades off travel effort against gas concentration and uncertainty. To enable plume-aware exploration, we introduce the gas frontier concept, defined as unobserved regions adjacent to high gas concentrations, and propose the Wavefront Gas Frontier Detection (WGFD) algorithm for their identification. High-fidelity simulations and real-world experiments demonstrate the benefits of XIT in terms of GDM quality and efficiency. Although developed for active GDM, XIT is readily applicable to other robotic information-gathering tasks in unknown environments that face the exploration and exploitation trade-off.
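The idea of sampling from a UCB information field can be illustrated with a minimal sketch. The abstract does not state the formula XIT uses, so the code below assumes the standard UCB form (posterior mean plus a weighted standard deviation) evaluated on a grid, and draws cells with probability proportional to that score. The function names, the weight `beta`, and the grid representation are all hypothetical and are not taken from the paper.

```python
import numpy as np

def ucb_field(mean, std, beta=2.0):
    """Standard UCB score per grid cell: mean + beta * std.

    `beta` is an assumed exploration weight, not a value from the paper.
    """
    return np.asarray(mean, dtype=float) + beta * np.asarray(std, dtype=float)

def sample_cells(ucb, n_samples, rng):
    """Draw grid-cell indices with probability proportional to the shifted UCB score."""
    flat = ucb.ravel()
    weights = flat - flat.min() + 1e-9  # shift so every weight is positive
    probs = weights / weights.sum()
    idx = rng.choice(flat.size, size=n_samples, p=probs)
    # Convert flat indices back to (row, col) pairs, one pair per sample.
    return np.column_stack(np.unravel_index(idx, ucb.shape))

# Toy posterior on a 2x2 grid (illustrative numbers only).
mean = [[0.0, 1.0], [2.0, 3.0]]
std = [[1.0, 0.0], [0.0, 1.0]]
field = ucb_field(mean, std)
batch = sample_cells(field, n_samples=10, rng=np.random.default_rng(0))
```

Cells with high predicted concentration or high uncertainty receive more samples, which is one simple way to bias a sampling-based planner toward informative regions.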

End-to-End Differentiable Photon Counting CT

Feb 12, 2026

Abstract: Quantitative imaging is an important feature of spectral X-ray and CT systems, especially photon-counting CT (PCCT) imaging systems, which is achieved through material decomposition (MD) using spectral measurements. In this work, we present a novel framework that makes the PCCT imaging chain end-to-end differentiable (differentiable PCCT), with which we can leverage quantitative information in the image domain to enable cross-domain learning and optimization for upstream models. Specifically, the material decomposition from maximum-likelihood estimation (MLE) was made differentiable based on the Implicit Function Theorem and inserted as a layer into the imaging chain for end-to-end optimization. This framework allows for an automatic and adaptive solution of a wide range of imaging tasks, ultimately achieving quantitative imaging through computation rather than manual intervention. The end-to-end training mechanism effectively avoids the need for direct-domain training or supervision from intermediate references as models are trained using quantitative images. We demonstrate its applicability in two representative tasks: correcting detector energy bin drift and training an object scatter correction network using cross-domain reference from quantitative material images.
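As a rough illustration of how the Implicit Function Theorem makes an MLE step differentiable, consider the generic case below. The notation is assumed here and is not taken from the paper: $y$ denotes the spectral measurements, $a$ the material estimate, and $L(a, y)$ the negative log-likelihood, with the Hessian in $a$ assumed invertible at the optimum.

```latex
\hat{a}(y) = \arg\min_{a} L(a, y)
\quad\Longrightarrow\quad
\nabla_{a} L\big(\hat{a}(y),\, y\big) = 0 ,
\qquad
\frac{\partial \hat{a}}{\partial y}
= -\Big[\nabla^{2}_{aa} L\big(\hat{a}, y\big)\Big]^{-1}
   \nabla^{2}_{ay} L\big(\hat{a}, y\big).
```

Differentiating the stationarity condition with respect to $y$ yields the Jacobian on the right, so gradients can pass through the estimator without unrolling the iterative solver.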

CUA-Skill: Develop Skills for Computer Using Agent

Jan 28, 2026

Abstract: Computer-Using Agents (CUAs) aim to autonomously operate computer systems to complete real-world tasks. However, existing agentic systems remain difficult to scale and lag behind human performance. A key limitation is the absence of reusable and structured skill abstractions that capture how humans interact with graphical user interfaces and how to leverage these skills. We introduce CUA-Skill, a computer-using agentic skill base that encodes human computer-use knowledge as skills coupled with parameterized execution and composition graphs. CUA-Skill is a large-scale library of carefully engineered skills spanning common Windows applications, serving as a practical infrastructure and tool substrate for scalable, reliable agent development. Built upon this skill base, we construct CUA-Skill Agent, an end-to-end computer-using agent that supports dynamic skill retrieval, argument instantiation, and memory-aware failure recovery. Our results demonstrate that CUA-Skill substantially improves execution success rates and robustness on challenging end-to-end agent benchmarks, establishing a strong foundation for future computer-using agent development. On WindowsAgentArena, CUA-Skill Agent achieves a state-of-the-art 57.5% (best of three) success rate while being significantly more efficient than prior and concurrent approaches. The project page is available at https://microsoft.github.io/cua_skill/.

Explore with Long-term Memory: A Benchmark and Multimodal LLM-based Reinforcement Learning Framework for Embodied Exploration

Jan 11, 2026

Abstract: An ideal embodied agent should possess lifelong learning capabilities to handle long-horizon and complex tasks, enabling continuous operation in general environments. This not only requires the agent to accurately accomplish given tasks but also to leverage long-term episodic memory to optimize decision-making. However, existing mainstream one-shot embodied tasks primarily focus on task completion results, neglecting the crucial process of exploration and memory utilization. To address this, we propose Long-term Memory Embodied Exploration (LMEE), which aims to unify the agent's exploratory cognition and decision-making behaviors to promote lifelong learning. We further construct a corresponding dataset and benchmark, LMEE-Bench, incorporating multi-goal navigation and memory-based question answering to comprehensively evaluate both the process and outcome of embodied exploration. To enhance the agent's memory recall and proactive exploration capabilities, we propose MemoryExplorer, a novel method that fine-tunes a multimodal large language model through reinforcement learning to encourage active memory querying. By incorporating a multi-task reward function that includes action prediction, frontier selection, and question answering, our model achieves proactive exploration. Extensive experiments against state-of-the-art embodied exploration models demonstrate that our approach achieves significant advantages in long-horizon embodied tasks.

NAS-GS: Noise-Aware Sonar Gaussian Splatting

Jan 09, 2026

Abstract: Underwater sonar imaging plays a crucial role in various applications, including autonomous navigation in murky water, marine archaeology, and environmental monitoring. However, the unique characteristics of sonar images, such as complex noise patterns and the lack of elevation information, pose significant challenges for 3D reconstruction and novel view synthesis. In this paper, we present NAS-GS, a novel Noise-Aware Sonar Gaussian Splatting framework specifically designed to address these challenges. Our approach introduces a Two-Ways Splatting technique that accurately models the dual directions for intensity accumulation and transmittance calculation inherent in sonar imaging, significantly improving rendering speed without sacrificing quality. Moreover, we propose a Gaussian Mixture Model (GMM) based noise model that captures complex sonar noise patterns, including side-lobes, speckle, and multi-path noise. This model enhances the realism of synthesized images while preventing 3D Gaussian overfitting to noise, thereby improving reconstruction accuracy. We demonstrate state-of-the-art performance on both simulated and real-world large-scale offshore sonar scenarios, achieving superior results in novel view synthesis and 3D reconstruction.

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 23, 2025

Abstract: Recent strides in video generation have paved the way for unified audio-visual generation. In this work, we present Seedance 1.5 pro, a foundational model engineered specifically for native, joint audio-video generation. Leveraging a dual-branch Diffusion Transformer architecture, the model integrates a cross-modal joint module with a specialized multi-stage data pipeline, achieving exceptional audio-visual synchronization and superior generation quality. To ensure practical utility, we implement meticulous post-training optimizations, including Supervised Fine-Tuning (SFT) on high-quality datasets and Reinforcement Learning from Human Feedback (RLHF) with multi-dimensional reward models. Furthermore, we introduce an acceleration framework that boosts inference speed by over 10X. Seedance 1.5 pro distinguishes itself through precise multilingual and dialect lip-syncing, dynamic cinematic camera control, and enhanced narrative coherence, positioning it as a robust engine for professional-grade content creation. Seedance 1.5 pro is now accessible on Volcano Engine at https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo.

VitalBench: A Rigorous Multi-Center Benchmark for Long-Term Vital Sign Prediction in Intraoperative Care

Nov 14, 2025

Abstract: Intraoperative monitoring and prediction of vital signs are critical for ensuring patient safety and improving surgical outcomes. Despite recent advances in deep learning models for medical time-series forecasting, several challenges persist, including the lack of standardized benchmarks, incomplete data, and limited cross-center validation. To address these challenges, we introduce VitalBench, a novel benchmark specifically designed for intraoperative vital sign prediction. VitalBench includes data from over 4,000 surgeries across two independent medical centers, offering three evaluation tracks: complete data, incomplete data, and cross-center generalization. This framework reflects the real-world complexities of clinical practice, minimizing reliance on extensive preprocessing and incorporating masked loss techniques for robust and unbiased model evaluation. By providing a standardized and unified platform for model development and comparison, VitalBench enables researchers to focus on architectural innovation while ensuring consistency in data handling. This work lays the foundation for advancing predictive models for intraoperative vital sign forecasting, ensuring that these models are not only accurate but also robust and adaptable across diverse clinical environments. Our code and data are available at https://github.com/XiudingCai/VitalBench.
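A masked loss of the kind mentioned in the VitalBench abstract can be sketched as follows. The abstract does not specify the exact loss, so this assumes a masked mean squared error in which missing targets are marked by a binary mask and excluded from the average. The function name `masked_mse` and the toy values are hypothetical and are not taken from the VitalBench code.

```python
import numpy as np

def masked_mse(pred, target, mask):
    """Mean squared error over observed entries only (mask == 1).

    Entries with mask == 0 are ignored, so missing targets (even NaN)
    do not affect the result.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    observed = np.asarray(mask) > 0
    # np.where keeps NaN targets at masked positions out of the sum.
    sq_err = np.where(observed, (pred - target) ** 2, 0.0)
    n_observed = observed.sum()
    return float(sq_err.sum() / n_observed) if n_observed > 0 else 0.0

# Toy example: the second reading is missing and therefore masked out.
loss = masked_mse(pred=[1.0, 2.0, 3.0],
                  target=[1.0, float("nan"), 5.0],
                  mask=[1, 0, 1])
```

Averaging over observed entries only keeps the score comparable across records with different amounts of missing data, which avoids having to impute values before evaluation.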

Distributed Zero-Shot Learning for Visual Recognition

Nov 11, 2025

Abstract: In this paper, we propose a Distributed Zero-Shot Learning (DistZSL) framework that can fully exploit decentralized data to learn an effective model for unseen classes. Considering the data heterogeneity issues across distributed nodes, we introduce two key components to ensure the effective learning of DistZSL: a cross-node attribute regularizer and a global attribute-to-visual consensus. Our proposed cross-node attribute regularizer enforces the distances between attribute features to be similar across different nodes. In this manner, the overall attribute feature space would be stable during learning, and thus facilitate the establishment of visual-to-attribute (V2A) relationships. Then, we introduce the global attribute-to-visual consensus to mitigate biased V2A mappings learned from individual nodes. Specifically, we enforce the bilateral mapping between the attribute and visual feature distributions to be consistent across different nodes. Thus, the learned consistent V2A mapping can significantly enhance zero-shot learning across different nodes. Extensive experiments demonstrate that DistZSL achieves superior performance to the state-of-the-art in learning from distributed data.

MARCO: A Cooperative Knowledge Transfer Framework for Personalized Cross-domain Recommendations

Oct 06, 2025

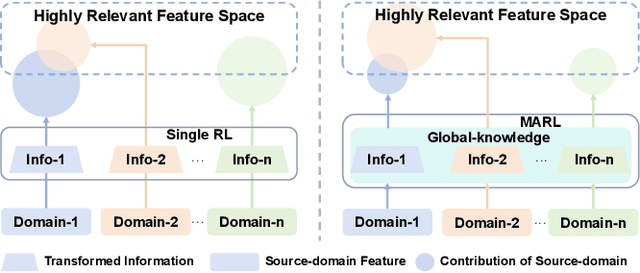

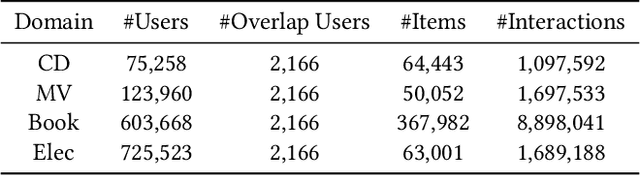

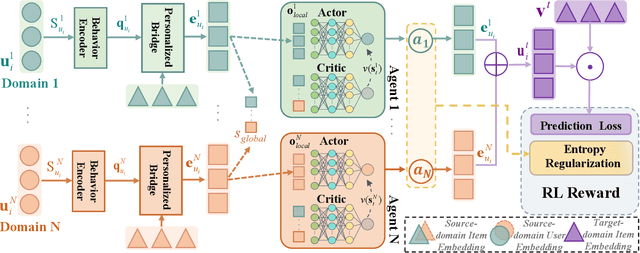

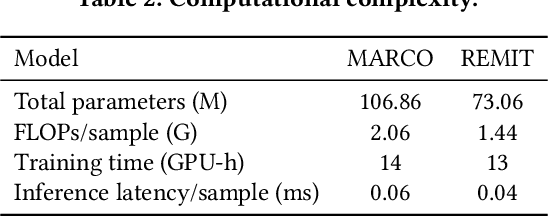

Abstract: Recommender systems frequently encounter data sparsity issues, particularly when addressing cold-start scenarios involving new users or items. Multi-source cross-domain recommendation (CDR) addresses these challenges by transferring valuable knowledge from multiple source domains to enhance recommendations in a target domain. However, existing reinforcement learning (RL)-based CDR methods typically rely on a single-agent framework, leading to negative transfer issues caused by inconsistent domain contributions and inherent distributional discrepancies among source domains. To overcome these limitations, MARCO, a Multi-Agent Reinforcement Learning-based Cross-Domain recommendation framework, is proposed. It leverages cooperative multi-agent reinforcement learning, where each agent is dedicated to estimating the contribution from an individual source domain, effectively managing credit assignment and mitigating negative transfer. In addition, an entropy-based action diversity penalty is introduced to enhance policy expressiveness and stabilize training by encouraging diverse agents' joint actions. Extensive experiments across four benchmark datasets demonstrate MARCO's superior performance over state-of-the-art methods, highlighting its robustness and strong generalization capabilities. The code is at https://github.com/xiewilliams/MARCO.

SAMPO: Scale-wise Autoregression with Motion PrOmpt for generative world models

Sep 19, 2025

Abstract: World models allow agents to simulate the consequences of actions in imagined environments for planning, control, and long-horizon decision-making. However, existing autoregressive world models struggle with visually coherent predictions due to disrupted spatial structure, inefficient decoding, and inadequate motion modeling. In response, we propose Scale-wise Autoregression with Motion PrOmpt (SAMPO), a hybrid framework that combines visual autoregressive modeling for intra-frame generation with causal modeling for next-frame generation. Specifically, SAMPO integrates temporal causal decoding with bidirectional spatial attention, which preserves spatial locality and supports parallel decoding within each scale. This design significantly enhances both temporal consistency and rollout efficiency. To further improve dynamic scene understanding, we devise an asymmetric multi-scale tokenizer that preserves spatial details in observed frames and extracts compact dynamic representations for future frames, optimizing both memory usage and model performance. Additionally, we introduce a trajectory-aware motion prompt module that injects spatiotemporal cues about object and robot trajectories, focusing attention on dynamic regions and improving temporal consistency and physical realism. Extensive experiments show that SAMPO achieves competitive performance in action-conditioned video prediction and model-based control, improving generation quality with 4.4× faster inference. We also evaluate SAMPO's zero-shot generalization and scaling behavior, demonstrating its ability to generalize to unseen tasks and benefit from larger model sizes.
