Lifelong Learning with Task-Specific Adaptation: Addressing the Stability-Plasticity Dilemma

Mar 08, 2025

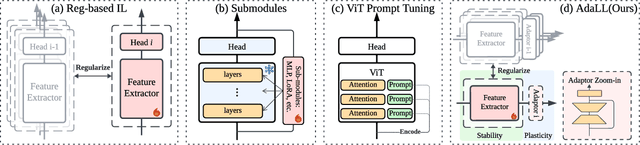

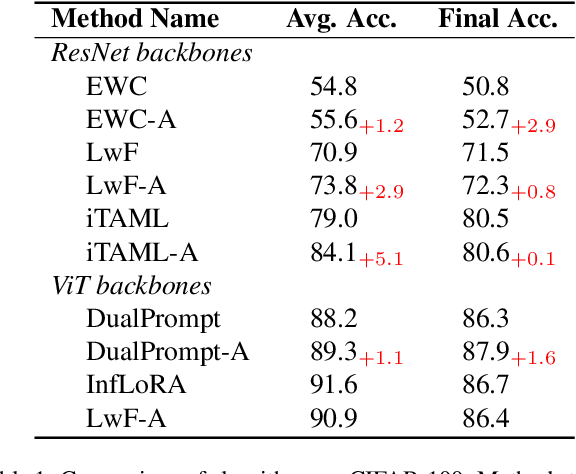

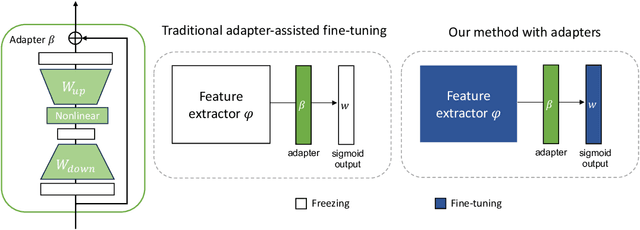

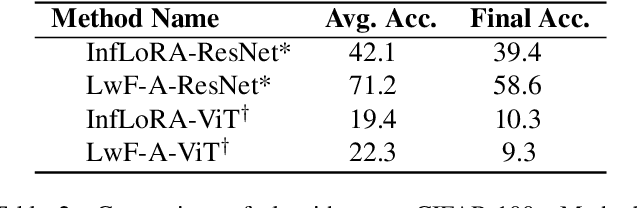

Lifelong learning (LL) aims to continuously acquire new knowledge while retaining previously learned knowledge. A central challenge in LL is the stability-plasticity dilemma, which requires models to balance the preservation of previous knowledge (stability) with the ability to learn new tasks (plasticity). While parameter-efficient fine-tuning (PEFT) has been widely adopted in large language models, its application to lifelong learning remains underexplored. To bridge this gap, this paper proposes AdaLL, an adapter-based framework designed to address the dilemma through a simple, universal, and effective strategy. AdaLL co-trains the backbone network and adapters under regularization constraints, enabling the backbone to capture task-invariant features while allowing the adapters to specialize in task-specific information. Unlike methods that freeze the backbone network, AdaLL incrementally enhances the backbone's capabilities across tasks while minimizing interference through backbone regularization. This architectural design significantly improves both stability and plasticity, effectively eliminating the stability-plasticity dilemma. Extensive experiments demonstrate that AdaLL consistently outperforms existing methods across various configurations, including dataset choices, task sequences, and task scales.
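
The co-training idea described above can be illustrated with a toy sketch. It is not the paper's implementation: the linear model, the additive per-task adapter, the squared-L2 penalty that pulls the backbone toward its weights from the previous task, and every name (`predict`, `train_task`, `drift`, `anchor`, `lam`) are assumptions made purely for illustration. The abstract does not specify which regularizer or adapter design AdaLL actually uses.

```python
def predict(backbone, adapter, x):
    # Shared backbone weights plus a task-specific additive adapter offset
    # (a hypothetical, deliberately simplified adapter).
    return sum((w + a) * xi for w, a, xi in zip(backbone, adapter, x))


def drift(weights, reference):
    # Squared distance between two weight vectors.
    return sum((w - r) ** 2 for w, r in zip(weights, reference))


def train_task(backbone, data, anchor=None, lam=0.0, lr=0.05, steps=200):
    """Co-train the backbone and a fresh adapter on one task with SGD.

    anchor: backbone weights after the previous task (None for the first task).
    lam:    strength of the assumed L2 penalty lam * ||backbone - anchor||^2.
    """
    backbone = list(backbone)
    adapter = [0.0] * len(backbone)
    for _ in range(steps):
        for x, y in data:
            err = predict(backbone, adapter, x) - y
            for i, xi in enumerate(x):
                grad = 2.0 * err * xi  # gradient of the squared error
                reg = 0.0
                if anchor is not None:
                    # Backbone regularization: penalize moving away from anchor.
                    reg = 2.0 * lam * (backbone[i] - anchor[i])
                backbone[i] -= lr * (grad + reg)  # backbone is trained, not frozen
                adapter[i] -= lr * grad           # adapter is unregularized
    return backbone, adapter


if __name__ == "__main__":
    inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, -0.5)]
    task1 = [(x, 1.0 * x[0] + 2.0 * x[1]) for x in inputs]
    task2 = [(x, -1.0 * x[0] + 0.5 * x[1]) for x in inputs]

    b1, a1 = train_task([0.0, 0.0], task1)
    b_free, _ = train_task(b1, task2, anchor=b1, lam=0.0)
    b_reg, _ = train_task(b1, task2, anchor=b1, lam=5.0)

    print("backbone drift without regularization:", drift(b_free, b1))
    print("backbone drift with regularization:   ", drift(b_reg, b1))
```

In this toy setting, the penalty keeps the backbone close to what the first task's adapter expects, so the new adapter absorbs most of the change for the second task. How closely this mirrors AdaLL's actual behavior depends on details the abstract does not give.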
