Ziyan Yang

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 23, 2025

Abstract: Recent strides in video generation have paved the way for unified audio-visual generation. In this work, we present Seedance 1.5 pro, a foundational model engineered specifically for native, joint audio-video generation. Leveraging a dual-branch Diffusion Transformer architecture, the model integrates a cross-modal joint module with a specialized multi-stage data pipeline, achieving exceptional audio-visual synchronization and superior generation quality. To ensure practical utility, we implement meticulous post-training optimizations, including Supervised Fine-Tuning (SFT) on high-quality datasets and Reinforcement Learning from Human Feedback (RLHF) with multi-dimensional reward models. Furthermore, we introduce an acceleration framework that boosts inference speed by over 10X. Seedance 1.5 pro distinguishes itself through precise multilingual and dialect lip-syncing, dynamic cinematic camera control, and enhanced narrative coherence, positioning it as a robust engine for professional-grade content creation. Seedance 1.5 pro is now accessible on Volcano Engine at https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo.

Growing Visual Generative Capacity for Pre-Trained MLLMs

Oct 02, 2025

Abstract: Multimodal large language models (MLLMs) extend the success of language models to visual understanding, and recent efforts have sought to build unified MLLMs that support both understanding and generation. However, constructing such models remains challenging: hybrid approaches combine continuous embeddings with diffusion or flow-based objectives, producing high-quality images but breaking the autoregressive paradigm, while pure autoregressive approaches unify text and image prediction over discrete visual tokens but often face trade-offs between semantic alignment and pixel-level fidelity. In this work, we present Bridge, a pure autoregressive unified MLLM that augments pre-trained visual understanding models with generative ability through a Mixture-of-Transformers architecture, enabling both image understanding and generation within a single next-token prediction framework. To further improve visual generation fidelity, we propose a semantic-to-pixel discrete representation that integrates compact semantic tokens with fine-grained pixel tokens, achieving strong language alignment and precise description of visual details with only a 7.9% increase in sequence length. Extensive experiments across diverse multimodal benchmarks demonstrate that Bridge achieves competitive or superior results in both understanding and generation benchmarks, while requiring less training data and reduced training time compared to prior unified MLLMs.

NoiseShift: Resolution-Aware Noise Recalibration for Better Low-Resolution Image Generation

Oct 02, 2025

Abstract: Text-to-image diffusion models trained on a fixed set of resolutions often fail to generalize, even when asked to generate images at lower resolutions than those seen during training. High-resolution text-to-image generators are currently unable to easily offer an out-of-the-box budget-efficient alternative to their users who might not need high-resolution images. We identify a key technical insight in diffusion models that when addressed can help tackle this limitation: Noise schedulers have unequal perceptual effects across resolutions. The same level of noise removes disproportionately more signal from lower-resolution images than from high-resolution images, leading to a train-test mismatch. We propose NoiseShift, a training-free method that recalibrates the noise level of the denoiser conditioned on resolution size. NoiseShift requires no changes to model architecture or sampling schedule and is compatible with existing models. When applied to Stable Diffusion 3, Stable Diffusion 3.5, and Flux-Dev, quality at low resolutions is significantly improved. On LAION-COCO, NoiseShift improves SD3.5 by 15.89%, SD3 by 8.56%, and Flux-Dev by 2.44% in FID on average. On CelebA, NoiseShift improves SD3.5 by 10.36%, SD3 by 5.19%, and Flux-Dev by 3.02% in FID on average. These results demonstrate the effectiveness of NoiseShift in mitigating resolution-dependent artifacts and enhancing the quality of low-resolution image generation.

Mixture of Contexts for Long Video Generation

Aug 28, 2025

Abstract: Long video generation is fundamentally a long context memory problem: models must retain and retrieve salient events across a long range without collapsing or drifting. However, scaling diffusion transformers to generate long-context videos is fundamentally limited by the quadratic cost of self-attention, which makes memory and computation intractable and difficult to optimize for long sequences. We recast long-context video generation as an internal information retrieval task and propose a simple, learnable sparse attention routing module, Mixture of Contexts (MoC), as an effective long-term memory retrieval engine. In MoC, each query dynamically selects a few informative chunks plus mandatory anchors (caption, local windows) to attend to, with causal routing that prevents loop closures. As we scale the data and gradually sparsify the routing, the model allocates compute to salient history, preserving identities, actions, and scenes over minutes of content. Efficiency follows as a byproduct of retrieval (near-linear scaling), which enables practical training and synthesis, and the emergence of memory and consistency at the scale of minutes.
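
The routing idea in this abstract (each query picks a few informative chunks, always keeps mandatory anchors, and never looks at future chunks) can be illustrated with a small sketch. This is not the authors' implementation: the mean-pooled chunk descriptor, the dot-product score, and the names `route_chunks`, `top_k`, and `anchors` are all assumptions made for illustration.

```python
def mean_vector(vectors):
    """Mean-pool a chunk's key vectors into one descriptor (an assumed choice)."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def route_chunks(query, chunks, query_chunk, top_k, anchors=(0,)):
    """Return the sorted chunk indices that one query attends to.

    query:       query vector
    chunks:      list of chunks, each a list of key vectors
    query_chunk: index of the chunk the query itself belongs to
    top_k:       number of routed (non-mandatory) chunks to keep
    anchors:     hypothetical mandatory chunks, e.g. the caption at index 0
    """
    # Causal routing: only chunks strictly before the query's chunk compete.
    candidates = [i for i in range(query_chunk) if i not in anchors]
    scores = {i: dot(query, mean_vector(chunks[i])) for i in candidates}
    routed = sorted(candidates, key=lambda i: scores[i], reverse=True)[:top_k]
    # Anchors and the query's own chunk (a stand-in for the local window)
    # are always attended to, regardless of score.
    return sorted(set(routed) | set(anchors) | {query_chunk})


chunks = [
    [[1.0, 0.0], [1.0, 0.0]],    # 0: caption anchor
    [[0.0, 1.0], [0.0, 1.0]],    # 1: strongly matches the query below
    [[0.5, 0.5], [0.5, 0.5]],    # 2: weaker match
    [[-1.0, 0.0], [-1.0, 0.0]],  # 3: the query's own chunk
    [[0.0, 5.0], [0.0, 5.0]],    # 4: future chunk, excluded by causality
]
selected = route_chunks([0.0, 1.0], chunks, query_chunk=3, top_k=1)
```

Because each query attends to a bounded number of chunks rather than the whole history, the attention cost per query stays roughly constant as the sequence grows, which is consistent with the near-linear scaling the abstract mentions.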

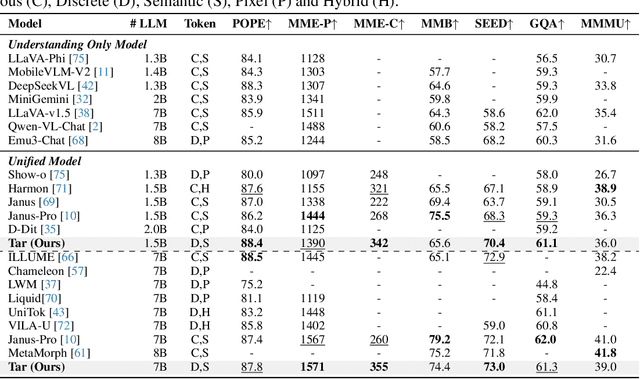

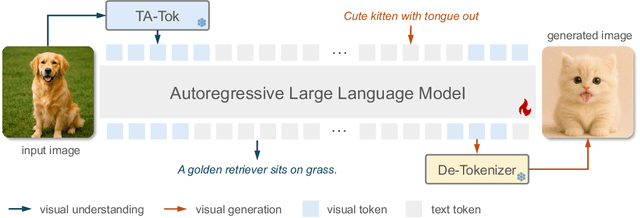

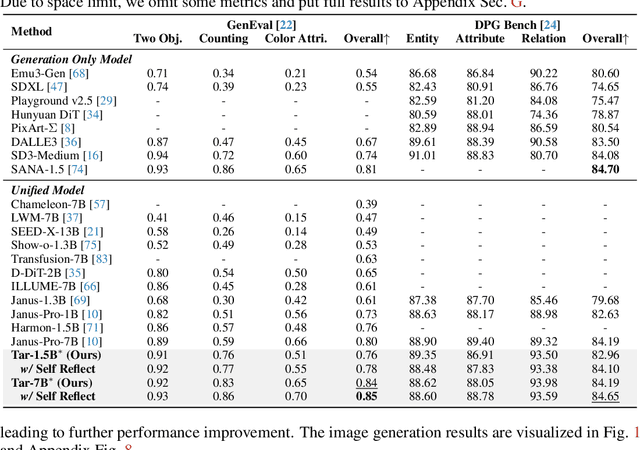

Vision as a Dialect: Unifying Visual Understanding and Generation via Text-Aligned Representations

Jun 23, 2025

Abstract: This paper presents a multimodal framework that attempts to unify visual understanding and generation within a shared discrete semantic representation. At its core is the Text-Aligned Tokenizer (TA-Tok), which converts images into discrete tokens using a text-aligned codebook projected from a large language model's (LLM) vocabulary. By integrating vision and text into a unified space with an expanded vocabulary, our multimodal LLM, Tar, enables cross-modal input and output through a shared interface, without the need for modality-specific designs. Additionally, we propose scale-adaptive encoding and decoding to balance efficiency and visual detail, along with a generative de-tokenizer to produce high-fidelity visual outputs. To address diverse decoding needs, we utilize two complementary de-tokenizers: a fast autoregressive model and a diffusion-based model. To enhance modality fusion, we investigate advanced pre-training tasks, demonstrating improvements in both visual understanding and generation. Experiments across benchmarks show that Tar matches or surpasses existing multimodal LLM methods, achieving faster convergence and greater training efficiency. Code, models, and data are available at https://tar.csuhan.com

VINCIE: Unlocking In-context Image Editing from Video

Jun 12, 2025

Abstract: In-context image editing aims to modify images based on a contextual sequence comprising text and previously generated images. Existing methods typically depend on task-specific pipelines and expert models (e.g., segmentation and inpainting) to curate training data. In this work, we explore whether an in-context image editing model can be learned directly from videos. We introduce a scalable approach to annotate videos as interleaved multimodal sequences. To effectively learn from this data, we design a block-causal diffusion transformer trained on three proxy tasks: next-image prediction, current segmentation prediction, and next-segmentation prediction. Additionally, we propose a novel multi-turn image editing benchmark to advance research in this area. Extensive experiments demonstrate that our model exhibits strong in-context image editing capabilities and achieves state-of-the-art results on two multi-turn image editing benchmarks. Despite being trained exclusively on videos, our model also shows promising abilities in multi-concept composition, story generation, and chain-of-editing applications.

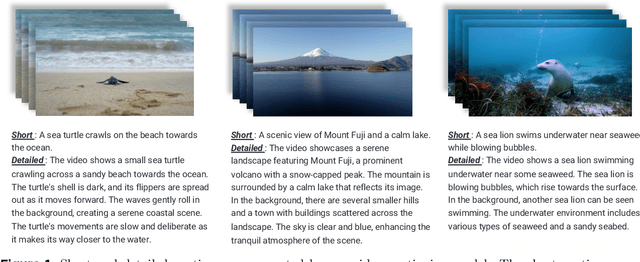

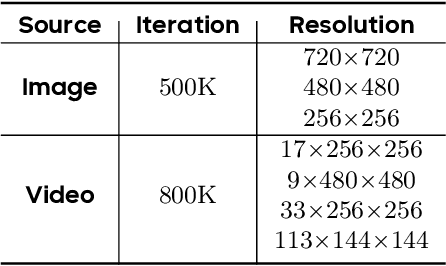

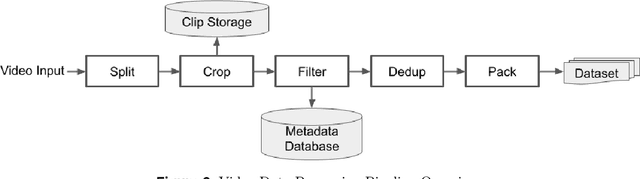

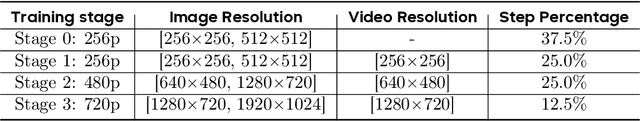

Seaweed-7B: Cost-Effective Training of Video Generation Foundation Model

Apr 11, 2025

Abstract: This technical report presents a cost-efficient strategy for training a video generation foundation model. We present a mid-sized research model with approximately 7 billion parameters (7B) called Seaweed-7B, trained from scratch using 665,000 H100 GPU hours. Despite being trained with moderate computational resources, Seaweed-7B demonstrates highly competitive performance compared to contemporary video generation models of much larger size. Design choices are especially crucial in a resource-constrained setting. This technical report highlights the key design decisions that enhance the performance of the medium-sized diffusion model. Empirically, we make two observations: (1) Seaweed-7B performs comparably to, or even surpasses, larger models trained on substantially greater GPU resources, and (2) our model, which exhibits strong generalization ability, can be effectively adapted across a wide range of downstream applications either by lightweight fine-tuning or continued training. See the project page at https://seaweed.video/
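
To put the 665,000 H100 GPU-hour budget in perspective, the short calculation below converts GPU hours into wall-clock days. The cluster size of 1,000 GPUs is a hypothetical figure chosen only for illustration (the abstract does not state it), and the conversion assumes perfect utilization with no downtime.

```python
GPU_HOURS = 665_000  # training budget stated in the abstract


def wall_clock_days(gpu_hours, num_gpus):
    """Idealized wall-clock training time in days, assuming every GPU is
    busy for the entire run (no restarts, no idle time)."""
    return gpu_hours / num_gpus / 24


# Hypothetical cluster of 1,000 GPUs: a little under 28 days.
days_on_1000_gpus = wall_clock_days(GPU_HOURS, num_gpus=1_000)
```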

Evaluating Text-to-Image Synthesis with a Conditional Fréchet Distance

Mar 27, 2025

Abstract: Evaluating text-to-image synthesis is challenging due to misalignment between established metrics and human preferences. We propose cFreD, a metric based on the notion of Conditional Fréchet Distance that explicitly accounts for both visual fidelity and text-prompt alignment. Existing metrics such as Inception Score (IS), Fréchet Inception Distance (FID) and CLIPScore assess either image quality or image-text alignment but not both, which limits their correlation with human preferences. Scoring models explicitly trained to replicate human preferences require constant updates and may not generalize to novel generation techniques or out-of-domain inputs. Through extensive experiments across multiple recently proposed text-to-image models and diverse prompt datasets, we demonstrate that cFreD exhibits a higher correlation with human judgments compared to statistical metrics, including metrics trained with human preferences. Our findings validate cFreD as a robust, future-proof metric for the systematic evaluation of text-to-image models, standardizing benchmarking in this rapidly evolving field. We release our evaluation toolkit and benchmark in the appendix.
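
For background, the sketch below computes the standard (unconditional) squared Fréchet distance between two Gaussians, the quantity that FID reports on Inception features. It is restricted to diagonal covariances so that it needs only the standard library; that restriction and the function name `frechet_distance_diag` are simplifying assumptions. It does not reproduce the conditional formulation that cFreD introduces, which is not specified in the abstract.

```python
import math


def frechet_distance_diag(mu1, var1, mu2, var2):
    """Squared Frechet distance between N(mu1, diag(var1)) and N(mu2, diag(var2)).

    General form: ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 * (S1 @ S2)^(1/2)).
    For diagonal covariances the trace term reduces per dimension to
    (sqrt(var1) - sqrt(var2))^2.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var1, var2))
    return mean_term + cov_term


# Identical distributions are at distance zero.
same = frechet_distance_diag([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0])
# Shifting the mean by (3, 4) with equal variances contributes 3^2 + 4^2.
shifted = frechet_distance_diag([0.0, 0.0], [1.0, 1.0], [3.0, 4.0], [1.0, 1.0])
```

A lower value means the two feature distributions are closer. An unconditional distance of this kind compares image statistics only and ignores the prompt, which is the gap the abstract says cFreD is designed to address.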

Synthetic Video Enhances Physical Fidelity in Video Synthesis

Mar 26, 2025

Abstract: We investigate how to enhance the physical fidelity of video generation models by leveraging synthetic videos derived from computer graphics pipelines. These rendered videos respect real-world physics, such as maintaining 3D consistency, and serve as a valuable resource that can potentially improve video generation models. To harness this potential, we propose a solution that curates and integrates synthetic data while introducing a method to transfer its physical realism to the model, significantly reducing unwanted artifacts. Through experiments on three representative tasks emphasizing physical consistency, we demonstrate its efficacy in enhancing physical fidelity. While our model still lacks a deep understanding of physics, our work offers one of the first empirical demonstrations that synthetic video enhances physical fidelity in video synthesis. Website: https://kevinz8866.github.io/simulation/

Long Context Tuning for Video Generation

Mar 13, 2025

Abstract: Recent advances in video generation can produce realistic, minute-long single-shot videos with scalable diffusion transformers. However, real-world narrative videos require multi-shot scenes with visual and dynamic consistency across shots. In this work, we introduce Long Context Tuning (LCT), a training paradigm that expands the context window of pre-trained single-shot video diffusion models to learn scene-level consistency directly from data. Our method expands full attention mechanisms from individual shots to encompass all shots within a scene, incorporating interleaved 3D position embedding and an asynchronous noise strategy, enabling both joint and auto-regressive shot generation without additional parameters. Models with bidirectional attention after LCT can further be fine-tuned with context-causal attention, facilitating auto-regressive generation with efficient KV-cache. Experiments demonstrate single-shot models after LCT can produce coherent multi-shot scenes and exhibit emerging capabilities, including compositional generation and interactive shot extension, paving the way for more practical visual content creation. See https://guoyww.github.io/projects/long-context-video/ for more details.
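
One plausible reading of the context-causal attention mentioned above is a block-wise mask: tokens attend bidirectionally within their own shot and to every earlier shot, but never to later shots. The sketch below builds such a mask. It is an assumption-based illustration rather than the paper's implementation, and the function name `context_causal_mask` and the per-shot token counts are hypothetical.

```python
def context_causal_mask(shot_lengths):
    """Build a boolean attention mask over the tokens of a multi-shot scene.

    shot_lengths: number of tokens in each shot, in scene order.
    mask[q][k] is True when query token q may attend to key token k,
    i.e. when k belongs to the same shot as q or to an earlier shot.
    """
    # Map every token position to the index of the shot it belongs to.
    shot_of_token = [s for s, n in enumerate(shot_lengths) for _ in range(n)]
    return [
        [key_shot <= query_shot for key_shot in shot_of_token]
        for query_shot in shot_of_token
    ]


# Two shots: the first has 2 tokens, the second has 1.
mask = context_causal_mask([2, 1])
```

Under a mask of this shape, the keys and values of finished shots never depend on later shots, so they can be computed once and reused while subsequent shots are generated. This is the property that makes a KV-cache effective for auto-regressive shot generation.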
