Wenpei Bai

ASP-VMUNet: Atrous Shifted Parallel Vision Mamba U-Net for Skin Lesion Segmentation

Mar 25, 2025

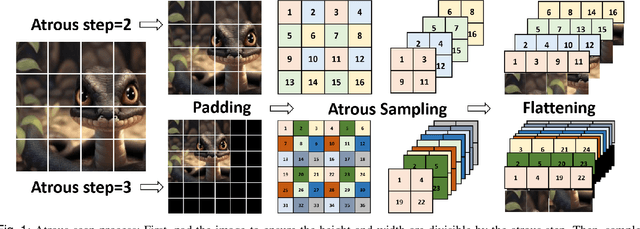

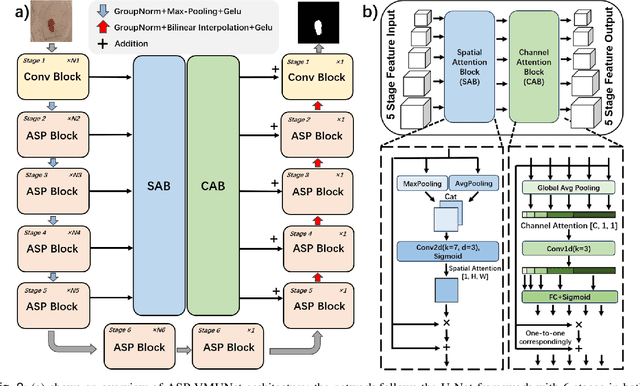

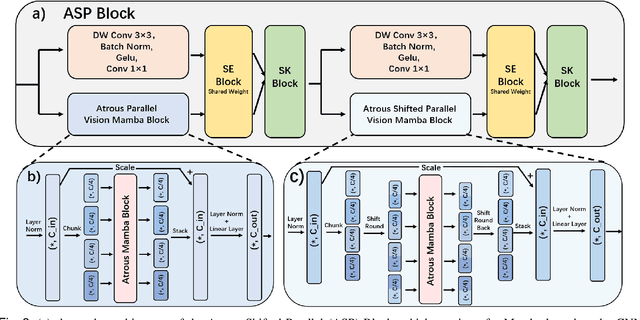

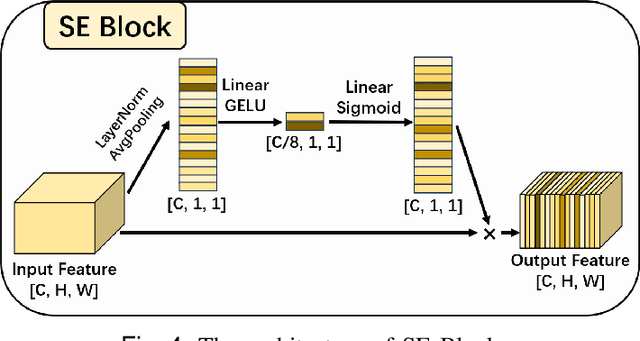

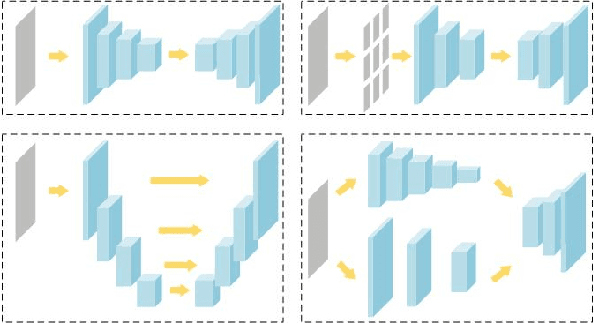

Abstract:Skin lesion segmentation is a critical challenge in computer vision, and it is essential to separate pathological features from healthy skin for diagnostics accurately. Traditional Convolutional Neural Networks (CNNs) are limited by narrow receptive fields, and Transformers face significant computational burdens. This paper presents a novel skin lesion segmentation framework, the Atrous Shifted Parallel Vision Mamba UNet (ASP-VMUNet), which integrates the efficient and scalable Mamba architecture to overcome limitations in traditional CNNs and computationally demanding Transformers. The framework introduces an atrous scan technique that minimizes background interference and expands the receptive field, enhancing Mamba's scanning capabilities. Additionally, the inclusion of a Parallel Vision Mamba (PVM) layer and a shift round operation optimizes feature segmentation and fosters rich inter-segment information exchange. A supplementary CNN branch with a Selective-Kernel (SK) Block further refines the segmentation by blending local and global contextual information. Tested on four benchmark datasets (ISIC16/17/18 and PH2), ASP-VMUNet demonstrates superior performance in skin lesion segmentation, validated by comprehensive ablation studies. This approach not only advances medical image segmentation but also highlights the benefits of hybrid architectures in medical imaging technology. Our code is available at https://github.com/BaoBao0926/ASP-VMUNet/tree/main.

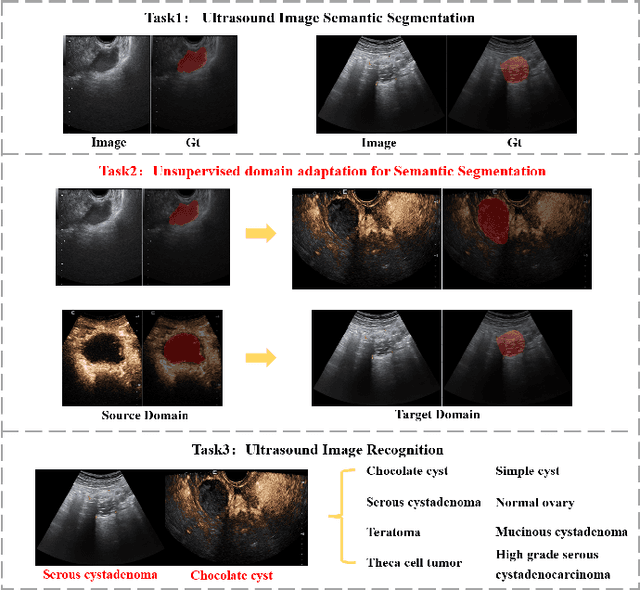

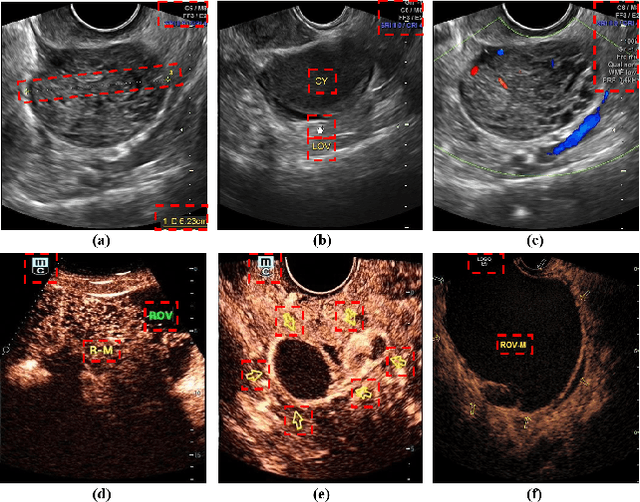

A Multi-Modality Ovarian Tumor Ultrasound Image Dataset for Unsupervised Cross-Domain Semantic Segmentation

Jul 14, 2022

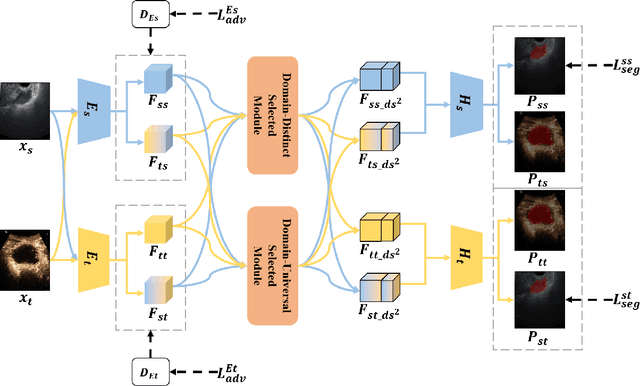

Abstract:Ovarian cancer is one of the most harmful gynecological diseases. Detecting ovarian tumors in early stage with computer-aided techniques can efficiently decrease the mortality rate. With the improvement of medical treatment standard, ultrasound images are widely applied in clinical treatment. However, recent notable methods mainly focus on single-modality ultrasound ovarian tumor segmentation or recognition, which means there still lacks of researches on exploring the representation capability of multi-modality ultrasound ovarian tumor images. To solve this problem, we propose a Multi-Modality Ovarian Tumor Ultrasound (MMOTU) image dataset containing 1469 2d ultrasound images and 170 contrast enhanced ultrasonography (CEUS) images with pixel-wise and global-wise annotations. Based on MMOTU, we mainly focus on unsupervised cross-domain semantic segmentation task. To solve the domain shift problem, we propose a feature alignment based architecture named Dual-Scheme Domain-Selected Network (DS$^2$Net). Specifically, we first design source-encoder and target-encoder to extract two-style features of source and target images. Then, we propose Domain-Distinct Selected Module (DDSM) and Domain-Universal Selected Module (DUSM) to extract the distinct and universal features in two styles (source-style or target-style). Finally, we fuse these two kinds of features and feed them into the source-decoder and target-decoder to generate final predictions. Extensive comparison experiments and analysis on MMOTU image dataset show that DS$^2$Net can boost the segmentation performance for bidirectional cross-domain adaptation of 2d ultrasound images and CEUS images.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge